Cloudera Community / Support / Support Questions

NiFi JVM settings for large files.

Labels: Apache NiFi

Created 02-28-2018 07:48 PM

Hi,

Our current NiFi JVM settings are:

java.arg.2=-Xmx16g

java.arg.3=-Xms16g

I need to read a huge (22 GB) JSON file, mainly to remove white space from it.

I am planning to use the List --> Fetch --> SplitText --> ReplaceText --> MergeContent approach, which I have used before for similar use cases. But since the file is now bigger than the JVM heap, I think I will get OutOfMemoryError because NiFi needs to read the file before it splits it. Am I correct?

I can change the JVM settings to use 32 or 48 GB, but I just want to get an expert opinion on this.

Regards,

Sai

Created 03-01-2018 02:28 PM

Hi,

Can anyone share their experience with files bigger than the JVM heap?

Created 03-01-2018 02:53 PM

You don't necessarily need a heap larger than the file unless you are using a processor that reads the entire file into memory. Most processors generally should not do that unless absolutely necessary, and those that do should document it.

In your approach of "list-->fetch-->splittext-->replacetext-->mergecontent", the issue is that you are splitting a single flow file into millions of flow files, and even though the content of all these flow files won't be in memory, it's still millions of Java objects on the heap.
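To put "millions of flow files" in rough numbers, assume (hypothetically; the thread does not give the real line sizes) that SplitText emits one flow file per line and that lines average about 1 KiB:

$$
N \approx \frac{22\,\text{GiB}}{1\,\text{KiB per flow file}} = \frac{22 \times 2^{30}}{2^{10}} = 22 \times 2^{20} = 23{,}068{,}672 \approx 2.3 \times 10^{7}
$$

Each of those flow files is a Java object with its own attributes on the heap even though its content stays in the content repository, so heap use grows with N rather than with the size of the content.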

Whenever possible you should avoid this splitting approach. You should be using the "record" processors to manipulate the data in place and keep your 22GB as a single flow file. I don't know what you actually need to do to each record so I can't say exactly, but most likely after your fetch processor you just need an UpdateRecord processor that would stream 1 record in, update a field, and stream the record out, so it would never load the entire content into memory, and would never create millions of flow files.
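UpdateRecord is configured rather than coded, but the "stream one record in, update it, stream it out" idea can be illustrated outside NiFi. The Python below is a minimal sketch under stated assumptions, not how NiFi implements it: the input is taken to be a top-level JSON array of objects (as in the sample later in the thread), the standard library's `json.JSONDecoder.raw_decode` peels off one record at a time, and the `max_record_chars` cap and the update function in the demo are hypothetical.

```python
import io
import json
from typing import Callable, Iterator, TextIO


def iter_array_records(src: TextIO, chunk_size: int = 65536,
                       max_record_chars: int = 1 << 24) -> Iterator[dict]:
    """Yield the objects of a top-level JSON array one at a time.

    Assumes every element is a JSON object, so a record cut off at a chunk
    boundary can never be mistaken for a complete one. Memory is bounded by
    roughly one record plus one chunk, never by the whole file.
    """
    decoder = json.JSONDecoder()
    buf, eof, opened = "", False, False
    while True:
        buf = buf.lstrip()
        if not opened and buf:
            if buf[0] != "[":
                raise ValueError("expected a top-level JSON array")
            buf, opened = buf[1:], True
            continue
        if opened and buf[:1] == ",":
            buf = buf[1:]  # separator between records
            continue
        if opened and buf[:1] == "]":
            return  # end of the array
        if opened and buf:
            try:
                record, end = decoder.raw_decode(buf)
            except json.JSONDecodeError:
                # Either the record is still incomplete (read more) or it is
                # malformed / oversized (give up instead of buffering forever).
                if eof or len(buf) > max_record_chars:
                    raise
            else:
                yield record
                buf = buf[end:]  # keep only the unparsed tail
                continue
        if eof:
            raise ValueError("unexpected end of input")
        chunk = src.read(chunk_size)
        eof = chunk == ""
        buf += chunk


def rewrite_records(src: TextIO, dst: TextIO, update: Callable[[dict], dict],
                    chunk_size: int = 65536) -> int:
    """Stream records from src to dst as a JSON array, applying update to each."""
    count = 0
    dst.write("[")
    for record in iter_array_records(src, chunk_size):
        if count:
            dst.write(",")
        json.dump(update(record), dst, separators=(",", ":"))
        count += 1
    dst.write("]")
    return count


if __name__ == "__main__":
    src = io.StringIO('[{"Customer_Id": 1}, {"Customer_Id": 2}]')
    out = io.StringIO()
    # Hypothetical update: add a field to every record.
    n = rewrite_records(src, out, lambda r: {**r, "Checked": True})
    print(n, out.getvalue())
```

A record such as the `436 796` one shown later in the thread makes this parser raise, since it is not valid JSON.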

Created on 03-01-2018 04:27 PM - edited 08-17-2019 05:22 PM

Thanks for your inputs Bryan.

The huge JSON file (22 GB) we received needs to be cleaned up. We have notified the source about the issue and asked them to clean it up before they send it to us, but in the meantime I was trying to see if I can use NiFi to fix it.

As it is, this file does not open in any JSON viewer or reporting tool, because it has white space inside integers.

For example, look at the second Customer_Id record, where the ID appears as 436 796:

[{"Customer_Id":236768,"Brand":[{"Brand_Nm":"abcc","Question":[{"Question_Txt":"The type of food prefers is ...","Answer":[{"Answer_Txt":"Combination of Both","Response":[{"Response_Dt":"2017-04-10T18:33:40"}]}]},{"Question_Txt":"My favorite is ...","Answer":[{"Answer_Txt":"Over 17 years old","Response":[{"Response_Dt":"2016-12-07T03:43:57"}]}]}]}]},{"Customer_Id":436 796,"Brand":[{"Brand_Nm":"edfr","Question":[{"Question_Txt":"At the end of the day I am greeted with ...","Answer":[{"Answer_Txt":"kisses","Response":[{"Response_Dt":"2017-04-12T01:54:12"}]}]}]}]}

I am trying to read this as a text file and replace those. I used a simple GetFile --> ReplaceText --> PutFile flow on a 150 MB file, which worked fine and took a couple of minutes. I am just being cautious with the big file.
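The replacement itself comes down to a regular expression. The pattern below is a hypothetical one (the thread does not show the actual Search Value used): it matches a run of spaces with a digit on each side. It uses only fixed-width look-around, so it should also be valid Java regex syntax for ReplaceText's "Regex Replace" strategy, but that is an assumption to verify in NiFi. Python is used here only to try it out.

```python
import re

# Hypothetical pattern: a run of spaces with a digit on both sides,
# e.g. the gap in 436 796. Fixed-width look-behind and look-ahead only.
SPACE_BETWEEN_DIGITS = re.compile(r"(?<=[0-9]) +(?=[0-9])")


def fix_split_integers(text: str) -> str:
    """Delete spaces wedged between digits: '436 796' -> '436796'.

    Caveat: the pattern cannot tell JSON numbers from quoted strings, so a
    string value such as "Suite 12 34" would also be joined.
    """
    return SPACE_BETWEEN_DIGITS.sub("", text)


sample = '{"Customer_Id":436 796,"Brand":[{"Brand_Nm":"edfr"}]}'
print(fix_split_integers(sample))  # {"Customer_Id":436796,"Brand":[{"Brand_Nm":"edfr"}]}
```

Text like "Over 17 years old" or "2017-04-10T18:33:40" in the sample is left alone, because no space there has a digit on both sides.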

Created 03-01-2018 04:33 PM

OK, GetFile -> ReplaceText -> PutFile should be fine; it will just take a long time 🙂

- GetFile will stream the file from the source location to NiFi's internal repository

- ReplaceText will read line-by-line from the content repo, and write line-by-line back to the content repo

- PutFile will stream from the content repo to local disk
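The three steps above can be mimicked with ordinary Python file I/O to show why this flow's memory use is bounded by line length rather than file size. This is an analogy, not NiFi's code: file objects stand in for the content repository, and the pattern in the demo is hypothetical.

```python
import io
import re
from typing import TextIO


def replace_line_by_line(src: TextIO, dst: TextIO, pattern: re.Pattern, repl: str) -> int:
    """Apply pattern.sub(repl, ...) to one line at a time and write each result
    out before reading the next line. Returns the number of lines processed.

    Peak memory is about one line, which is only small if the input actually
    contains newline characters; a file that is one giant line is read in one
    piece. Matches that would span a line break are never seen.
    """
    lines = 0
    for line in src:  # text files iterate lazily, one line per step
        dst.write(pattern.sub(repl, line))
        lines += 1
    return lines


if __name__ == "__main__":
    digits_gap = re.compile(r"(?<=[0-9]) +(?=[0-9])")  # hypothetical pattern
    out = io.StringIO()
    n = replace_line_by_line(io.StringIO('{"id":436 796}\n{"id":12}\n'), out, digits_gap, "")
    print(n, repr(out.getvalue()))
```

With real files, `src` and `dst` would be objects from `open(...)`; only the current line is held in memory at any moment.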

Created 03-01-2018 05:13 PM

I will try it and see how it goes.

But is there a better approach? If I use the JSON record processors, I think they will fail to read the records that have problems like the one mentioned above.
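One option outside NiFi, offered as an assumption-laden sketch rather than a recommendation from this thread, is to repair the split integers in fixed-size chunks before the file reaches any record processor. Because the hypothetical pattern only involves digits and spaces, it is enough to hold back the trailing run of digits and spaces at the end of each chunk, so a gap like `436 796` that straddles a chunk boundary is still caught. The same caveat about digits inside quoted strings applies.

```python
import io
import re
from typing import TextIO

# Hypothetical pattern: a run of spaces with a digit on both sides (436 796).
SPACE_BETWEEN_DIGITS = re.compile(r"(?<=[0-9]) +(?=[0-9])")
DIGITS_AND_SPACES = "0123456789 "


def strip_digit_spaces_chunked(src: TextIO, dst: TextIO, chunk_size: int = 1 << 20) -> None:
    """Remove spaces between digits while holding only about one chunk in memory.

    Each round writes everything up to the trailing run of digits/spaces and
    carries that run into the next round. The written part ends with some other
    character, so no match in it depends on text beyond the cut. Assumes no
    single run of digits and spaces is huge, since the carry would grow with it.
    """
    carry = ""
    while True:
        chunk = src.read(chunk_size)
        data = carry + chunk
        if not chunk:  # end of input: the carry is final, so flush it
            dst.write(SPACE_BETWEEN_DIGITS.sub("", data))
            return
        cut = len(data.rstrip(DIGITS_AND_SPACES))
        dst.write(SPACE_BETWEEN_DIGITS.sub("", data[:cut]))
        carry = data[cut:]


if __name__ == "__main__":
    out = io.StringIO()
    strip_digit_spaces_chunked(io.StringIO('[{"Customer_Id":436 796}]'), out, chunk_size=4)
    print(out.getvalue())
```

Unlike a line-by-line pass, this keeps memory bounded even when the file has no newline characters at all.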

Created on 03-01-2018 05:38 PM - edited 08-17-2019 05:22 PM

It looks like ReplaceText is not streaming; I am getting out-of-memory errors.

I am trying this with a 7.5 GB file.

11:33:19 CST ERROR ddf8b120-0161-1000-a2c4-c410a98382c1

ReplaceText[id=ddf8b120-0161-1000-a2c4-c410a98382c1] ReplaceText[id=ddf8b120-0161-1000-a2c4-c410a98382c1] failed to process due to java.lang.OutOfMemoryError; rolling back session: java.lang.OutOfMemoryError

11:33:19 CST ERROR ddf8b120-0161-1000-a2c4-c410a98382c1

ReplaceText[id=ddf8b120-0161-1000-a2c4-c410a98382c1] ReplaceText[id=ddf8b120-0161-1000-a2c4-c410a98382c1] failed to process session due to java.lang.OutOfMemoryError: java.lang.OutOfMemoryError

Created 03-01-2018 05:48 PM

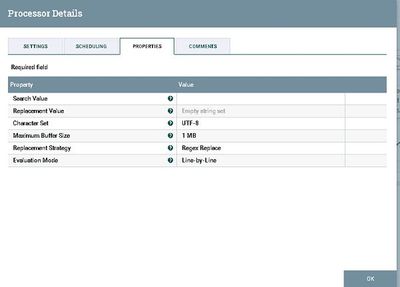

Is ReplaceText configured as shown above with Line-By-Line and a 1MB buffer?

Created 03-01-2018 06:35 PM

@Bryan Bende Yes, exactly the same.

Created 03-01-2018 07:37 PM

Is it possible that your content does not contain any new line characters like \n or \r?

I'm wondering whether ReplaceText relies on newlines to find the end of each line, so that if it doesn't encounter any, it ends up loading the whole content into memory, which is not what we want.
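A quick way to check that hypothesis without loading the file is to scan it in fixed-size binary chunks and report how many newline bytes it has and how long its longest line is. This is a hypothetical helper, not part of NiFi. If it reports zero newlines and a longest line equal to the file size, a reader that buffers one full line at a time would have to hold the whole file.

```python
import io
from typing import BinaryIO, Tuple


def scan_line_lengths(src: BinaryIO, chunk_size: int = 1 << 20) -> Tuple[int, int]:
    """Return (newline_count, longest_line_in_bytes), reading fixed-size chunks.

    Only the LF byte (0x0A) counts as a line terminator: a CRLF line's length
    includes its CR byte, and a file that uses bare CR reports one long line.
    """
    newlines = 0
    longest = 0
    current = 0  # bytes in the line that is still open across chunk boundaries
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        start = 0
        while True:
            idx = chunk.find(b"\n", start)
            if idx == -1:
                current += len(chunk) - start  # line continues into next chunk
                break
            current += idx - start
            longest = max(longest, current)
            newlines += 1
            current = 0
            start = idx + 1
    return newlines, max(longest, current)


if __name__ == "__main__":
    # Stand-in for open("/path/to/file.json", "rb"); the path is hypothetical.
    single_line = io.BytesIO(b'[{"Customer_Id":236768},{"Customer_Id":436 796}]')
    print(scan_line_lengths(single_line))
```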