NiFi Node showing 2 nodes and not respecting node down fault tolerance.

Labels: Apache NiFi

Created on 01-19-2022 08:15 AM - edited 01-19-2022 08:22 AM

Hello all,

We have a 3-node NiFi cluster running NiFi 1.12.1:

nifi-hatest-01

nifi-hatest-02

nifi-hatest-03

All the 3 nodes are in cluster, but not in UI.

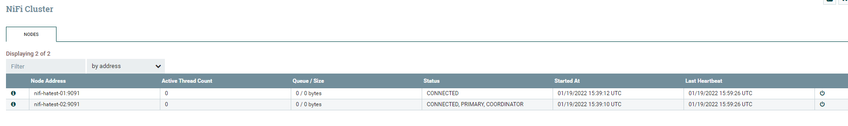

In all the 3 NiFi nodes, we see this 2 nodes in cluster mode:

Now, as we see nifi-hatest-02 is the primary and cooridantor. And nifi-hatest-03 is not being shown here.

On nifi-hatest-01, the log shows heartbeats being sent to nifi-hatest-02:

2022-01-19 16:02:09,840 INFO [Clustering Tasks Thread-1] o.a.n.c.c.ClusterProtocolHeartbeater Heartbeat created at 2022-01-19 16:02:09,820 and sent to nifi-hatest-02:9088 at 2022-01-19 16:02:09,840; send took 20 millis

On nifi-hatest-03, the log shows heartbeats being sent to nifi-hatest-02 as well:

2022-01-19 16:02:09,840 INFO [Clustering Tasks Thread-1] o.a.n.c.c.ClusterProtocolHeartbeater Heartbeat created at 2022-01-19 16:02:09,820 and sent to nifi-hatest-02:9088 at 2022-01-19 16:02:09,840; send took 20 millis

On nifi-hatest-02, the coordinator, the log shows heartbeats being received from all 3 nodes:

2022-01-19 16:04:52,573 INFO [Process Cluster Protocol Request-30] o.a.n.c.p.impl.SocketProtocolListener Finished processing request 35f2ed1a-ca6f-4cc6-ab4a-6c0774fc9c6d (type=HEARTBEAT, length=2837 bytes) from nifi-hatest-03:9091 in 19 millis

2022-01-19 16:04:52,782 INFO [Process Cluster Protocol Request-31] o.a.n.c.p.impl.SocketProtocolListener Finished processing request 73d477cd-a936-4541-a854-03712cbc5fe9 (type=HEARTBEAT, length=2836 bytes) from nifi-hatest-02:9091 in 18 millis

2022-01-19 16:04:52,782 INFO [Clustering Tasks Thread-3] o.a.n.c.c.ClusterProtocolHeartbeater Heartbeat created at 2022-01-19 16:04:52,762 and sent to nifi-hatest-02:9088 at 2022-01-19 16:04:52,782; send took 19 millis

2022-01-19 16:04:53,916 INFO [Process Cluster Protocol Request-32] o.a.n.c.p.impl.SocketProtocolListener Finished processing request 4fbc39d9-5154-4841-b3be-ddde58b22cd2 (type=HEARTBEAT, length=2836 bytes) from nifi-hatest-01:9091 in 19 millis

There are no cluster-related errors on any of the nodes, but the UI still shows only 2 nodes.

The cluster uses ZooKeeper (zk) as the cluster state provider.

One more observation: when we create a processor in the UI of any node, it is propagated to the other nodes too, so functionally the cluster is more or less working.
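For anyone who wants to check the same thing on a larger log, here is a minimal Python sketch that lists which hosts the coordinator has processed heartbeats from. It assumes the coordinator log lines look like the SocketProtocolListener lines quoted above; the function name `heartbeat_senders` is made up for this example.

```python
import re

# Matches coordinator-side lines of the form quoted in this thread:
# "... (type=HEARTBEAT, length=2837 bytes) from nifi-hatest-03:9091 in 19 millis"
HEARTBEAT_RE = re.compile(
    r"\(type=HEARTBEAT, length=\d+ bytes\) from (\S+?):(\d+) in \d+ millis"
)


def heartbeat_senders(log_lines):
    """Return the sorted host names that sent at least one processed heartbeat."""
    hosts = set()
    for line in log_lines:
        match = HEARTBEAT_RE.search(line)
        if match:
            hosts.add(match.group(1))
    return sorted(hosts)
```

If all 3 host names come back but the UI lists only 2, the nodes are reaching the coordinator and the problem is more likely in what the coordinator knows about node identities than in the network.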

Created 01-19-2022 09:35 AM

This to me sounds like a hostname issue.

Could you confirm the values in nifi.properties for:

nifi.cluster.node.address=

nifi.web.https.host=

Both values should match the hostname of the node they are set on.
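A quick way to run that check on each node is something like the Python sketch below. It is only a sketch: the helper names `read_properties` and `host_mismatches` are made up for this example, and the parser handles plain key=value lines only, not every Java properties feature.

```python
def read_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def host_mismatches(props, expected_host):
    """Return the two host properties whose value differs from expected_host."""
    keys = ("nifi.cluster.node.address", "nifi.web.https.host")
    return {k: props.get(k, "") for k in keys if props.get(k, "") != expected_host}
```

On a node you would pass the contents of its nifi.properties and the hostname you expect, for example the output of `socket.getfqdn()`. An empty result means both properties match; a missing or blank property shows up with an empty value.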

If nothing stands out there, check the logs for the latest messages containing the strings below:

"org.apache.nifi.cluster.coordination.node.NodeClusterCoordinator: Status"

"org.apache.nifi.controller.StandardFlowService: Setting Flow Controller's Node ID:"

"org.apache.nifi.web.server.HostHeaderHandler"
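To pull the latest matching entry for each of those strings, a small Python sketch like this may help. One assumption to flag: the log lines quoted earlier in this thread use abbreviated logger names (for example o.a.n.c.c.ClusterProtocolHeartbeater), so the sketch matches on the short class name or message text instead of the full package name. The `MARKERS` tuple and the function name are made up for this example; adjust the markers to whatever your logback pattern actually prints.

```python
# Short markers derived from the three strings above (assumption: the logger
# name may be abbreviated in the log, so the package prefix is left out).
MARKERS = (
    "NodeClusterCoordinator",
    "Setting Flow Controller's Node ID:",
    "HostHeaderHandler",
)


def latest_matches(log_lines, markers=MARKERS):
    """Map each marker to the last log line containing it, or None if absent."""
    latest = {marker: None for marker in markers}
    for line in log_lines:
        for marker in markers:
            if marker in line:
                latest[marker] = line.rstrip("\n")
    return latest
```

Passing an open log file works as well as a list, since the function only iterates over lines.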

Created 01-19-2022 10:03 AM

Reading your sample log messages more closely, I can see that the coordinator did receive a heartbeat from node 3:

"2022-01-19 16:04:52,573 INFO [Process Cluster Protocol Request-30] o.a.n.c.p.impl.SocketProtocolListener Finished processing request 35f2ed1a-ca6f-4cc6-ab4a-6c0774fc9c6d (type=HEARTBEAT, length=2837 bytes) from nifi-hatest-03:9091 in 19 millis"

So I also wonder whether this is a browser caching or rendering issue. Can you check what the UI shows in incognito mode?

Finally, if this is a DEV environment, you can also try deleting your local state directory. Its location is set in the file "state-management.xml".

Deleting state will clear out any local state your processors might depend on, if they are configured to use it, so remove with caution.

It will also clear out the cluster node IDs that the node knows of locally.
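To find out which directory that is before deleting anything, here is a minimal Python sketch that reads it from the file. It assumes the stock state-management.xml layout, where the local provider is a `<local-provider>` element containing a `<property name="Directory">` entry; the function name is made up for this example.

```python
import xml.etree.ElementTree as ET


def local_state_directory(xml_text):
    """Return the local provider's Directory property, or None if not found."""
    root = ET.fromstring(xml_text)
    for prop in root.findall("./local-provider/property"):
        if prop.get("name") == "Directory":
            return (prop.text or "").strip()
    return None
```

A relative value is presumably resolved against the NiFi home directory, so check where it actually points on disk, and stop NiFi on the node before removing it.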

Created on 01-19-2022 07:32 PM - edited 01-19-2022 07:38 PM

We removed the following files from all 3 nodes, since we don't have any flows yet:

sudo rm -f /hadoop/nifi/conf/flow.xml.gz

sudo rm -f /hadoop/nifi/conf/users.xml

sudo rm -f /hadoop/nifi/conf/authorizations.xml

sudo rm -f /etc/nifi/3.5.2.0-99/0/state-management.xml

sudo rm -f /etc/nifi/3.5.2.0-99/0/authorizers.xml

Since ZooKeeper is external, we also configured a new root node to start from, but the issue still persists.

The new root node is "nifi3", and this is what I see in zk. Is this the expected output?

[zk: nifi-hatest-03:2181(CONNECTED) 2] ls /nifi3/leaders

[Primary Node, Cluster Coordinator]

[zk: nifi-hatest-03:2181(CONNECTED) 3] ls /nifi3/leaders/Primary Node

Node does not exist: /nifi3/leaders/Primary

[zk: nifi-hatest-03:2181(CONNECTED) 4] ls /nifi3/leaders/Cluster Coordinator

Node does not exist: /nifi3/leaders/Cluster

[zk: nifi-hatest-03:2181(CONNECTED) 5] ls /nifi3/components

We have also verified that these 2 properties match the respective hostnames:

nifi.cluster.node.address=

nifi.web.https.host=

Created 01-19-2022 09:39 PM

@DigitalPlumber After the local state files were deleted, the cluster shows all 3 nodes. Thanks for the help.

However, we are now testing NiFi's fault tolerance, and when the primary node goes down we see this message on the other nodes:

Cannot replicate request to Node nifi-hatest-03:9091 because the node is not connected

The article below describes the same error, but we cannot clean the state files every time. If one node goes down, shouldn't our NiFi cluster stay available without manual intervention?

https://community.cloudera.com/t5/Support-Questions/NiFi-Cannot-replicate-request-to-Node-nifi-domai...

Created 01-20-2022 09:43 AM

Deleting that state directory should not be a normal maintenance task.

What you initially described is a very odd case.

If node 003 went down while it was the primary node or the coordinator node, an election would have taken place internally to nominate another cluster member to perform those functions.

In your case node 003 is a member of the cluster, but it is not connected.

It could be disconnected for any number of reasons; typically the node is down or it was manually disconnected. When you see that message, how many member nodes do you have?

I expect the UI to show 2/3, because node 3 is not connected.

The solution is to connect it, either by fixing whatever caused the node to go down or by connecting it through the UI.
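If you want to check that count without opening the UI, the sketch below summarises a cluster listing in the same "2/3" form. It is a rough sketch under assumptions: it expects JSON shaped like the response of the REST endpoint GET /nifi-api/controller/cluster (a "cluster" object with a "nodes" list, each node carrying "address" and "status"), and it leaves fetching that JSON and authentication up to you. The function name is made up for this example.

```python
def connected_summary(cluster_entity):
    """Return ("connected/total", sorted addresses of nodes that are not CONNECTED)."""
    nodes = cluster_entity.get("cluster", {}).get("nodes", [])
    connected = [n for n in nodes if n.get("status") == "CONNECTED"]
    not_connected = sorted(
        n.get("address", "?") for n in nodes if n.get("status") != "CONNECTED"
    )
    return f"{len(connected)}/{len(nodes)}", not_connected
```

A result of 2/3 with nifi-hatest-03 in the second element would match what is described above: the node is still a cluster member but is not connected.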