NiFi: How does the ListHDFS processor work?

Labels: Apache NiFi

Created 09-15-2017 06:47 PM

I want to read some files (which are put in an HDFS directory) using the ListHDFS processor. There are several things I would like to know:

- When I start the ListHDFS processor, it captures all files in the directory. If I then stop the processor and start it again, will it pick up only the files that were recently put in the directory, or all files in the directory?

Created on 09-15-2017 08:46 PM - edited 08-17-2019 11:37 PM

Hi @sally sally, the ListHDFS processor is designed to store the last state.

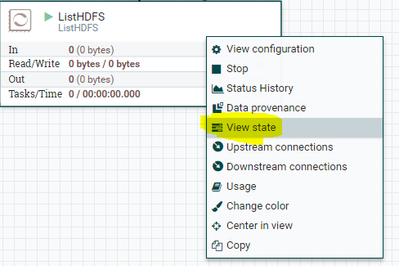

That is, when you configure the ListHDFS processor, you specify the directory name in its properties. Once the processor has listed all the files that exist in that directory at that moment, it stores, as its state, the latest modification time among those files (i.e., when the newest file was written to HDFS). You can view this state by clicking the View State button.

If you want to clear the state, open View State and click Clear state.

So once the ListHDFS processor has saved its state, each time it runs on its schedule (CRON driven or timer driven), it only checks for files newer than the state timestamp.

Note: we run ListHDFS on the primary node only, but its state is stored across all nodes of the NiFi cluster, so if the primary node changes, there won't be any issues with duplicates.

Example:

`hadoop fs -ls /user/yashu/test/`

Found 1 items
-rw-r--r-- 3 yash hdfs 3 2017-09-15 16:16 /user/yashu/test/part1.txt

When I configure the ListHDFS processor to list all the files in the above directory, the processor's state should match the time part1.txt was stored in HDFS, which in our case is:

2017-09-15 16:16

The state itself is stored as Unix time in milliseconds; converting it to a date-time format gives:

Unix time in milliseconds: 1505506613479

Timestamp: 2017-09-15 16:16:53
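The conversion above can be checked with a short Python sketch. The millisecond value is the one from this thread; the UTC-4 offset is an assumption (for example, US Eastern Daylight Time) inferred from the fact that the value works out to 20:16:53 UTC while the `hadoop fs -ls` listing shows 16:16.

```python
from datetime import datetime, timedelta, timezone

# State value from the "View State" dialog: Unix epoch time in milliseconds.
state_ms = 1505506613479

# Add the milliseconds to the epoch exactly (no float rounding), in UTC.
epoch = datetime(1970, 1, 1, tzinfo=timezone.utc)
utc_time = epoch + timedelta(milliseconds=state_ms)

# Assumption: the cluster's local time zone was UTC-4 at that date.
local_time = utc_time.astimezone(timezone(timedelta(hours=-4)))

print(utc_time.strftime("%Y-%m-%d %H:%M:%S"))    # 2017-09-15 20:16:53
print(local_time.strftime("%Y-%m-%d %H:%M:%S"))  # 2017-09-15 16:16:53
```

The trailing 479 ms is kept in `local_time.microsecond` (479000); it is simply not shown by the format string.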

So the processor has stored its state. When it runs again, it lists only the new files written to the directory after the state timestamp, and then updates the state with the new timestamp (i.e., the latest modification time among the files in the HDFS directory).
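The incremental behaviour described above can be modelled as a simplified Python sketch. `HdfsFile`, `list_new_files`, and the `part2.txt` file with its timestamp are hypothetical, for illustration only. This is not NiFi's code: the real processor also has extra handling for files that share the latest timestamp (so none are missed), and this sketch omits that.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class HdfsFile:
    path: str
    modification_time_ms: int  # HDFS modification time, epoch millis


def list_new_files(files: List[HdfsFile],
                   state_ms: Optional[int]) -> Tuple[List[HdfsFile], Optional[int]]:
    """One scheduled run: return (files to emit, new state).

    Simplified model: no state means everything is new; otherwise emit only
    files newer than the stored timestamp, then advance the state to the
    newest modification time that was emitted.
    """
    if state_ms is None:
        new = list(files)
    else:
        new = [f for f in files if f.modification_time_ms > state_ms]
    if not new:
        return [], state_ms  # nothing new: state is left unchanged
    return new, max(f.modification_time_ms for f in new)


part1 = HdfsFile("/user/yashu/test/part1.txt", 1505506613479)
emitted, state = list_new_files([part1], None)
print([f.path for f in emitted], state)
# ['/user/yashu/test/part1.txt'] 1505506613479

# Hypothetical later file, written after the first run.
part2 = HdfsFile("/user/yashu/test/part2.txt", 1505510000000)
emitted, state = list_new_files([part1, part2], state)
print([f.path for f in emitted], state)
# ['/user/yashu/test/part2.txt'] 1505510000000

# Stopping and restarting does not reset the state, so nothing is re-listed.
emitted, state = list_new_files([part1, part2], state)
print([f.path for f in emitted], state)
# [] 1505510000000

# Clearing the state (state = None) makes the next run list everything again.
emitted, state = list_new_files([part1, part2], None)
print([f.path for f in emitted], state)
# ['/user/yashu/test/part1.txt', '/user/yashu/test/part2.txt'] 1505510000000
```

The key design point is that the only thing remembered between runs is one timestamp, not a list of file names. That is why stopping and starting the processor does not cause files to be listed twice.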

Created 09-15-2017 08:19 PM

The processor will only list the files which were not included in the first listing it created.

Created 09-15-2017 08:39 PM

In order to have listing start over again, you would need to perform the following:

1. Open the "Component State" UI by right-clicking the ListHDFS processor and selecting "View state".

2. Within that UI you will see a blue "Clear state" link, which clears the currently retained state.
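The same "Clear state" action can also be scripted. Below is a hedged Python sketch that only builds the request. It assumes NiFi's REST endpoint `POST /nifi-api/processors/{id}/state/clear-requests` and an unsecured instance. The host, port, and processor id are hypothetical placeholders. As in the UI, the processor is expected to be stopped before its state is cleared.

```python
import urllib.request


def clear_state_request(nifi_base_url: str, processor_id: str) -> urllib.request.Request:
    """Build (but do not send) a request to clear a processor's state.

    Assumption: POST /nifi-api/processors/{id}/state/clear-requests on an
    unsecured NiFi; a secured cluster would also need authentication.
    """
    base = nifi_base_url.rstrip("/")
    url = f"{base}/nifi-api/processors/{processor_id}/state/clear-requests"
    return urllib.request.Request(url, method="POST")


# Hypothetical host and processor id, for illustration only.
req = clear_state_request("http://localhost:8080/", "0123-abcd")
print(req.get_method(), req.full_url)
# POST http://localhost:8080/nifi-api/processors/0123-abcd/state/clear-requests

# To actually send it (with the processor stopped): urllib.request.urlopen(req)
```

Building the request separately from sending it keeps the sketch safe to run without a NiFi instance.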