Nifi - How to use getHDFSFileInfo for the next step?

Labels: Apache NiFi

Created 07-07-2020 08:57 PM


Hi.

I'm trying to use GetHDFSFileInfo (not GetHDFS) so that it starts after a previous step in the flow.

This is exactly what I want to do: https://community.cloudera.com/t5/Support-Questions/NiFi-fetchHDFS-without-ListHDFS/td-p/211708

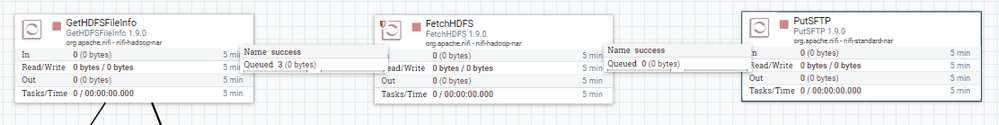

flow

streamcommand -> GetHDFSFileInfo -> FetchHDFS -> PutSFTP

I set up GetHDFSFileInfo first and then tried to use the FlowFile information from it in FetchHDFS.

However, the result of GetHDFSFileInfo was only a single set of attributes, and I don't know how to fetch all of the files in FetchHDFS.

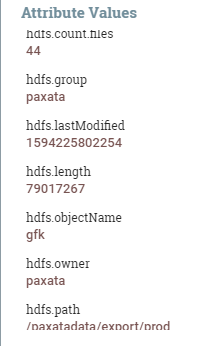

These are the attributes from GetHDFSFileInfo. I got the attributes below and want to fetch all of the files listed in them.

filename : faaa~~~~~

hdfs.count.dirs : 1

hdfs.count.files : 44

hdfs.full.tree : {"objectName":"gfk",""...., "content":[{"objectName":"Weekly_GfK_02_Merge_F_HP_AT.txt",".....]}

hdfs.objectName : gfk

....

Could you please tell me how to use it, or how to solve this problem?

Created 07-08-2020 05:32 AM


The GetHDFSFileInfo processor will produce one output FlowFile containing a complete listing of all files/directories found based upon the configured "Full path" and "Recurse Subdirectories" properties settings when the property "Group Results" is set to "All".

Since you want a single FlowFile for each object listed from HDFS, you will want to set the "Group Results" property to "None". You should then see a separate FlowFile produced for each object found.

Then in your FetchHDFS processor you would need to set the property "HDFS Filename" to "${hdfs.path}/${hdfs.objectName}".

You may also find that you need to insert a RouteOnAttribute processor between your GetHDFSFileInfo and FetchHDFS processors to route out any FlowFiles produced by the GetHDFSFileInfo processor that are for directory objects only (not a file). You simply add a dynamic property that routes any FlowFile from the GetHDFSFileInfo processor with the attribute "hdfs.type" set to "file" on to the FetchHDFS processor, and send all other FlowFiles to the unmatched relationship, which you can just auto-terminate.
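As a rough illustration of the routing and path building described above, here is a minimal Python sketch. It is not NiFi code: plain dictionaries stand in for FlowFile attributes, the helper names `route_files_only` and `hdfs_filename` are hypothetical, and the sample attribute values (including the file's `hdfs.path`) are assumptions modelled on values quoted in this thread. In NiFi itself, the RouteOnAttribute dynamic property would likely hold an Expression Language check along the lines of `${hdfs.type:equals('file')}`.

```python
# Illustrative model only: plain dicts stand in for NiFi FlowFile attributes.
from typing import Dict, List

FlowFileAttrs = Dict[str, str]


def route_files_only(flowfiles: List[FlowFileAttrs]) -> List[FlowFileAttrs]:
    """Mimic RouteOnAttribute: keep only FlowFiles whose hdfs.type is "file"."""
    return [ff for ff in flowfiles if ff.get("hdfs.type") == "file"]


def hdfs_filename(ff: FlowFileAttrs) -> str:
    """Mimic the FetchHDFS "HDFS Filename" value ${hdfs.path}/${hdfs.objectName}."""
    return f"{ff['hdfs.path']}/{ff['hdfs.objectName']}"


# Hypothetical sample: one FlowFile per object, as with "Group Results" = "None".
listing = [
    {
        "hdfs.path": "/paxatadata/export/prod",
        "hdfs.objectName": "gfk",
        "hdfs.type": "directory",
    },
    {
        "hdfs.path": "/paxatadata/export/prod/gfk",  # assumed parent path
        "hdfs.objectName": "Weekly_GfK_02_Merge_F_HP_AT.txt",
        "hdfs.type": "file",
    },
]

# Directory entries are dropped; only file entries yield a path to fetch.
fetch_targets = [hdfs_filename(ff) for ff in route_files_only(listing)]
print(fetch_targets)
```

The directory entry falls through to what would be the unmatched relationship, so only the file entry produces a path for FetchHDFS.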

Other things to consider:

1. Keep in mind that the GetHDFSFileInfo processor does not maintain any state, so every time it executes it will list all files/directories from the target regardless of whether they were listed before or not. The ListHDFS processor uses state.

2. If you are running your dataflow in a NiFi multi-node cluster, every node in your cluster will be performing the same listing (which may not be what you want). If you only want the target files/directories listed by one node, you should configure the GetHDFSFileInfo processor to "execute" on "Primary node" only (configured from the processor's "Scheduling" tab). You can use load balancing configuration on the connection out of the GetHDFSFileInfo processor to redistribute the produced FlowFiles across all nodes in your cluster before they are processed by the FetchHDFS processor.
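The difference between a stateless and a stateful listing (point 1 above) can be sketched in a few lines of Python. This is only a conceptual model: the `Snapshot` type, `stateless_listing`, and `StatefulLister` are hypothetical names, and the timestamp-based bookkeeping is an assumption chosen for illustration, not a description of how ListHDFS is actually implemented.

```python
from typing import Dict, List

# Hypothetical directory snapshot: file name -> last-modified timestamp.
Snapshot = Dict[str, int]


def stateless_listing(snapshot: Snapshot) -> List[str]:
    """Every run returns every entry, like the stateless behaviour described above."""
    return sorted(snapshot)


class StatefulLister:
    """Simplified stand-in for a lister that remembers what it has already seen."""

    def __init__(self) -> None:
        self.latest_seen = -1  # newest timestamp emitted so far

    def list_new(self, snapshot: Snapshot) -> List[str]:
        # Emit only entries newer than anything seen on earlier runs.
        new = sorted(name for name, ts in snapshot.items() if ts > self.latest_seen)
        if snapshot:
            self.latest_seen = max(self.latest_seen, max(snapshot.values()))
        return new


snapshot = {"a.txt": 100, "b.txt": 200}
lister = StatefulLister()

first_run = lister.list_new(snapshot)    # both files are new
second_run = lister.list_new(snapshot)   # nothing new since the first run
repeat_run = stateless_listing(snapshot)  # stateless: everything, every time
```

With a stateless listing, anything downstream (such as FetchHDFS followed by PutSFTP) would see the same files again on every run unless the flow is scheduled or triggered so that repeats are acceptable.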

Hope this helps,

Matt

Created on 07-08-2020 04:36 PM - edited 07-09-2020 01:19 AM


Hi

First, thank you for the detailed guide.

I changed the Group Results option as you suggested, but I still saw only one set of attributes, and it was for the directory.

These are my properties on GetHDFSFileInfo.

And this is what I got as the result of GetHDFSFileInfo.

hdfs.objectName : gfk <-- directory

hdfs.path : /paxatadata/export/prod <-- parent directory

hdfs.type : directory

Could you advise me further?

Created 07-09-2020 05:42 AM


Did the GetHDFSFileInfo processor still produce only a single output FlowFile after you made the change?

I'd expect to see a separate FlowFile for each sub-directory found as well as for each file found within those directories. This is where the RouteOnAttribute processor I mentioned would be used to drop any FlowFiles that describe only a "directory" rather than a specific "file". Then only the FlowFiles for "files" would be sent on to your FetchHDFS for content ingestion.

Matt

Created 07-15-2020 01:38 AM


Your guide was really helpful and it worked well.

However, I think there are some problems when "Full Path" points deeper into the hierarchy.

First, I set the full path as below.

aa/bb/cc/dd/

It created only one FlowFile, for "dd", and of course its type was directory.

I removed "dd" and set a directory filter and a file filter to get only the files I wanted.

After I changed the full path from "aa/bb/cc/dd" to "aa/bb/cc", it produced FlowFiles for everything under the cc directory.

Thanks for your advice.

Cheers.