Nifi MergeContent Not Merging

Labels: Apache NiFi

Created on 08-15-2017 05:34 PM - edited 08-17-2019 07:16 PM

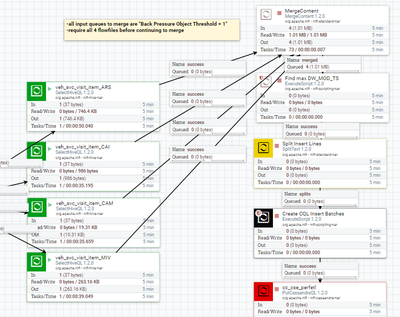

I have 4 Hive queries returning 4 separate FlowFiles that go into MergeContent. I'd like the 4 files to be merged into one, but nothing I've tried has worked.

- All input queues to MergeContent are set to "Back Pressure Object Threshold = 1"

- Require all 4 FlowFiles before continuing to merge

Instead, 4 files go in and 4 files come out.

Created 08-15-2017 05:49 PM

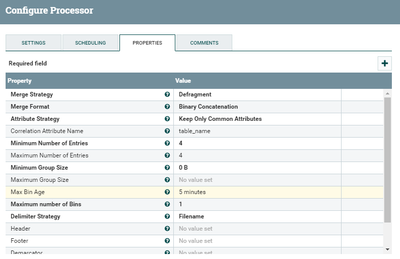

It's hard to tell from your flow whether the 4 FlowFiles you want to merge have their "fragment.*" attributes set correctly. If you use Defragment as the Merge Strategy, the FlowFiles must share the same values for the fragment.identifier and fragment.count attributes. If those are not set and you just want to merge the first 4 FlowFiles that arrive, set Merge Strategy to Bin-Packing Algorithm.
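To make the Defragment requirement concrete, here is a rough Python sketch of the idea. It is only a toy model and not NiFi's actual implementation: FlowFiles are plain dicts, the `content` key and the `make` helper are made up for illustration, and the attribute names are the ones MergeContent documents (fragment.identifier, fragment.count, fragment.index).

```python
from collections import defaultdict


def defragment(flowfiles):
    """Toy model: bin by fragment.identifier, merge a bin only when it is complete."""
    bins = defaultdict(list)
    for ff in flowfiles:
        bins[ff["fragment.identifier"]].append(ff)

    merged, incomplete = [], []
    for identifier, members in bins.items():
        # NiFi attribute values are strings, so convert before comparing.
        expected = int(members[0]["fragment.count"])
        if len(members) == expected:
            ordered = sorted(members, key=lambda ff: int(ff["fragment.index"]))
            merged.append("".join(ff["content"] for ff in ordered))
        else:
            incomplete.append(identifier)
    return merged, incomplete


def make(identifier, index, content):
    # Hypothetical helper that builds one fake FlowFile with fragment.count = 4.
    return {"fragment.identifier": identifier, "fragment.count": "4",
            "fragment.index": str(index), "content": content}


# Four FlowFiles sharing one identifier form a single complete bin.
same_id = [make("q1", i, f"rows{i};") for i in range(4)]
# Four FlowFiles with four identifiers form four bins that never complete.
different_ids = [make(f"q{i}", 0, f"rows{i};") for i in range(4)]

print(defragment(same_id))
print(defragment(different_ids))
```

In this model the second case never merges, which is roughly the "4 in, nothing combined" behaviour you would expect if each query's output carries its own fragment.identifier.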

Created 08-15-2017 05:57 PM

Created 08-15-2017 06:01 PM

The issue you are most likely running into is caused by having only 1 bin.

https://issues.apache.org/jira/browse/NIFI-4299

Change the number of bins to at least 2 and see if that resolves your issue.

Thanks,

Matt

Created 08-15-2017 08:03 PM

Created 08-16-2017 01:46 PM

Is this a NiFi standalone or a NiFi cluster?

If it is a cluster, are the FlowFiles from each of your SelectHiveQL processors being produced on the same node? The MergeContent processor will not merge FlowFiles from different cluster nodes.

Assuming that all FlowFiles are on same NiFi instance, the only way I could reproduce your scenario was:

- Each FlowFile had a different value assigned to the "table_name" FlowFile Attribute and Merge Strategy was set to "Bin-Packing Algorithm". This caused each FlowFile to be placed in its own bin. At the end of the 5 minute max bin age, each bin of 1 was merged. If the intent is always to merge one FlowFile from each incoming connection, what is the purpose of setting a "Correlation Attribute Name"?

- Setting Maximum number of bins to 1 and having 4 source FlowFiles become queued at different times.

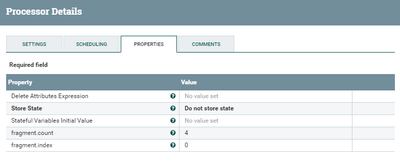

- The "Defragment" Merge Strategy bins FlowFiles by matching values in the "fragment.identifier" FlowFile Attribute, then merges them using the "fragment.index" and "fragment.count" attributes. Since you have also specified a correlation attribute, the MergeContent processor will use the value of that attribute instead of "fragment.identifier" to bin your files. If each FlowFile has a unique value for "table_name", then each FlowFile ends up in a different bin and is routed to failure, either right away (if bins is set to 1) or after the 5 minute max bin age, since not all fragments were present.

- The other possibility is that "fragment.count" and "fragment.index" are set to 1 on every FlowFile.
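The effect of a correlation attribute on binning can be sketched in a few lines of Python. This is a simplified illustration, not NiFi code: FlowFiles are plain dicts, and the table names are made-up sample values.

```python
from collections import defaultdict


def bin_by_correlation(flowfiles, correlation_attribute):
    """Toy model: every distinct value of the correlation attribute gets its own bin."""
    bins = defaultdict(list)
    for ff in flowfiles:
        bins[ff.get(correlation_attribute)].append(ff)
    return dict(bins)


# Hypothetical table names, one per source query.
distinct = bin_by_correlation(
    [{"table_name": name} for name in ("orders", "customers", "items", "stores")],
    "table_name",
)
# The same value on all four FlowFiles.
shared = bin_by_correlation([{"table_name": "report"} for _ in range(4)], "table_name")

print({value: len(members) for value, members in distinct.items()})
# {'orders': 1, 'customers': 1, 'items': 1, 'stores': 1}
print({value: len(members) for value, members in shared.items()})
# {'report': 4}
```

With four distinct values there are four bins of one, so nothing can ever be combined; only the shared value produces a single bin holding all four FlowFiles.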

I would stop your MergeContent processor and allow 1 FlowFile to queue in each connection feeding it. Then use the "list queue" capability to inspect the attributes on each queued FlowFile.

What values are associated to each FlowFile for the following attributes:

- fragment.identifier

- fragment.count

- fragment.index

- table_name
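Once you have copied those values out of the "list queue" view, a small script can flag the usual mismatches. The sketch below is only an illustration: `check_fragments` is a hypothetical helper, it assumes you paste the attributes in by hand as dicts of strings, and the sample values are invented.

```python
def check_fragments(flowfiles, bin_attribute="fragment.identifier"):
    """Return a list of likely reasons a Defragment merge would not complete."""
    problems = []

    # All FlowFiles must carry the same value in the attribute used for binning.
    bin_values = {ff.get(bin_attribute) for ff in flowfiles}
    if len(bin_values) != 1:
        problems.append(f"{bin_attribute} is not the same on every FlowFile")

    # fragment.count should equal the number of FlowFiles to be merged.
    counts = {ff.get("fragment.count") for ff in flowfiles}
    if counts != {str(len(flowfiles))}:
        problems.append("fragment.count does not match the number of FlowFiles")

    # Each FlowFile needs its own fragment.index.
    indexes = [ff.get("fragment.index") for ff in flowfiles]
    if len(set(indexes)) != len(indexes):
        problems.append("fragment.index values are not unique")

    return problems


# Invented sample values: a consistent set of four fragments.
good = [
    {"fragment.identifier": "abc", "fragment.count": "4",
     "fragment.index": str(i), "table_name": "report"}
    for i in range(4)
]
# Invented sample values: count and index set to 1 on every FlowFile.
bad = [
    {"fragment.identifier": "abc", "fragment.count": "1",
     "fragment.index": "1", "table_name": "report"}
    for _ in range(4)
]

print(check_fragments(good))                 # []
print(check_fragments(good, "table_name"))   # []
print(check_fragments(bad))
```

Pass "table_name" as `bin_attribute` when a Correlation Attribute Name is configured, since that attribute then takes the place of fragment.identifier for binning.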

Thank you,

Matt

Created on 08-16-2017 06:17 PM - edited 08-17-2019 07:16 PM

Created 08-16-2017 10:14 PM

Ugh... I was incorrect. It does NOT wait for all 4 when all processors are running... Back to unresolved. I must've had the merge turned off on the previous run, then turned it on.

Created on 08-29-2017 05:32 PM - edited 08-17-2019 07:16 PM

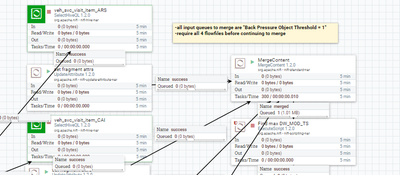

I set up a similar dataflow that is working as expected. The only difference is that you made your fragment.index values 0-3 and I made mine 1-4. Is the FlowFile Attribute "table_name" set on all four FlowFiles? Is the value of "table_name" exactly the same on all 4 FlowFiles?

Below is my test flow that worked:

As you can see, one merge of 4 FlowFiles was successful and a second bin is waiting for its 4th FlowFile before being merged.

Thanks,

Matt

Created 08-29-2017 05:45 PM

@Matt Clarke For some reason, I was unable to reply directly to your comment above... Yes, table_name was the same across all inputs. I'm not sure why it wasn't working, but I moved to a different approach to resolve it. I basically unioned all the independent Hive queries to make them one input.
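For anyone wanting to try the same workaround, here is a rough sketch of combining several queries into one statement before handing it to a single SelectHiveQL processor. The table and column names are placeholders, not the ones from my flow, and it assumes every query returns the same columns in the same order, which UNION ALL requires.

```python
def union_all(queries):
    """Join independent SELECT statements into one UNION ALL query."""
    # Drop trailing semicolons so the pieces can be chained together.
    cleaned = [q.strip().rstrip(";") for q in queries]
    return "\nUNION ALL\n".join(cleaned)


# Placeholder queries; each must return the same column list.
queries = [
    "SELECT id, name FROM table_a;",
    "SELECT id, name FROM table_b;",
    "SELECT id, name FROM table_c;",
    "SELECT id, name FROM table_d;",
]

print(union_all(queries))
```

With a single query there is only one FlowFile coming out, so MergeContent is no longer needed for this step.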