Support Questions

- Cloudera Community

- Support

- Support Questions

- Nifi Processor : Insert data into CLOB column

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Nifi Processor : Insert data into CLOB column

- Labels:

-

Apache NiFi

Created 09-25-2018 06:19 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

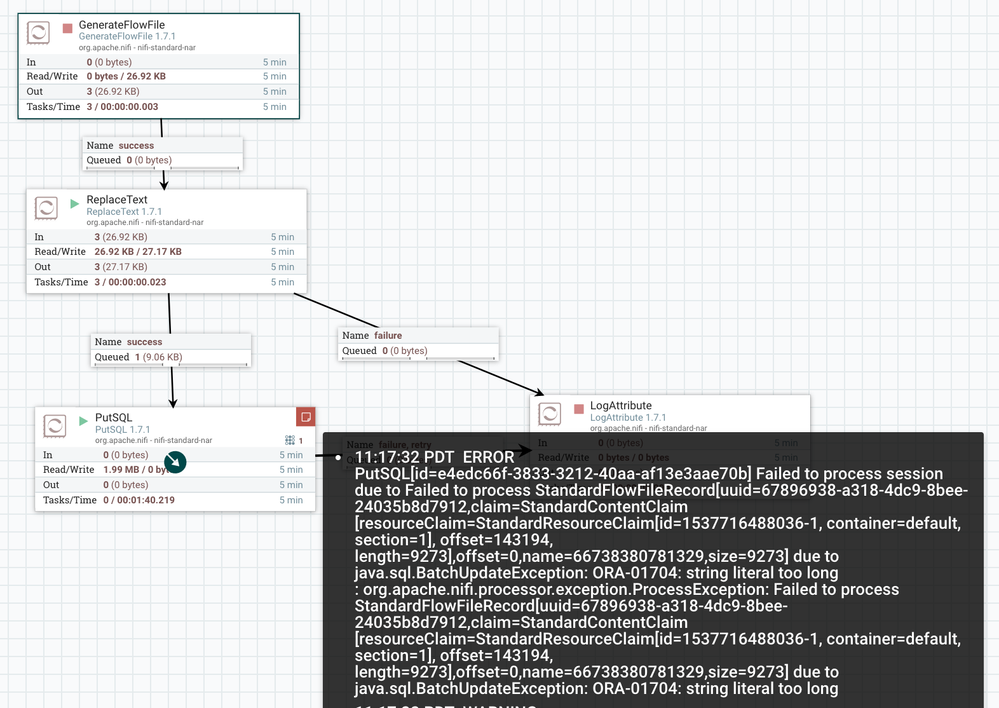

I have data pipeline to consume message from Kafka and insert into oracle database. The message from Kafka is in JSON format. If any error occurs during the processing then insert whole message (flowfile content) to invalid payload table. The trimmed version of the pipeline is as follows GenerateFlowFile => ReplaceText => PutSQL.

The table structure is as follows

CREATE TABLE CLOB_TEST

("TRAN_ID" VARCHAR2(36 BYTE) NOT NULL ENABLE,

"PAYLOAD" CLOB NOT NULL ENABLE,

CONSTRAINT "CLOB_TEST" PRIMARY KEY ("TRAN_ID")

)

Payload is more than 4000 characters. Creating insert SQL using replacetext failed and error is as follows

Error report -

SQL Error: ORA-01704: string literal too long

01704. 00000 -"string literal too long"

*Cause:The string literal is longer than 4000 characters.

*Action: Use a string literal of at most 4000 characters.

Longer values may only be entered using bind variables.

is there any other option to bind CLOB column and insert to the table. Any help will be greatly appreciated. Thanks.

screen-shot-2018-09-24-at-111751-am.png

Created 10-05-2018 05:32 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The ReplaceText puts the CLOB data directly into the content, which is why the error message occurs. You could use a PreparedStatement to get around this, but you very likely don't want to use an attribute as the CLOB is large.

The alternative is PutDatabaseRecord, you can provide a JsonTreeRecordReader (with schema) and it will generate the prepared statement but use the content to get the CLOB data rather than an attribute. If they won't know the schema (but it only contains primitive types) then they could use InferAvroSchema if necessary. However it is preferable to provide the schema directly via the Record Reader and/or a Schema Registry.

Created 05-02-2019 08:28 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Created 10-06-2018 04:50 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks Matt for your reply. The whole flow file content have to be inserted into database. is there way to configure JsonTreeRecordReader to indicate whole flow file content as a value in prepared statement?

Regards

Subbu

Created 10-12-2018 03:51 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The entire flow file content is one "row" to be inserted into the database? You should still be able to use this, as of NiFi 1.7.0 the JsonTreeReader should recognize "one JSON per line" rather than expecting an array.

Created 10-08-2018 06:52 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Can you upload or share the sample workflow for PutDatabaseRecord to insert the flow file content into database.

Thanks Subbu