NiFi PutSFTP fails to rename dot-file when "Outbound Policy = Batch Output" is set on a child process group

Labels: Apache NiFi, HDFS

Created on 04-05-2024 06:49 AM - edited 04-05-2024 11:48 AM

My data flow starts from a single FlowFile produced by a Sqoop job, which expands into multiple FlowFiles (one per HDFS file) after the GetHDFSFileInfo processor runs. To capture all failure scenarios, I have created a child process group with the following processors:

Input Port --> GetHDFSFileInfo --> RouteOnAttribute --> UpdateAttribute --> FetchHDFS --> PutSFTP --> ModifyBytes --> Output Port

Main Process Group

--------------------

RouteOnAttribute --> child process group described above --> MergeContent --> downstream processors

The child process group is configured with "FlowFile Concurrency = Single FlowFile Per Node" and "Outbound Policy = Batch Output" to ensure that all fetched FlowFiles are successfully processed (written to the SFTP server).

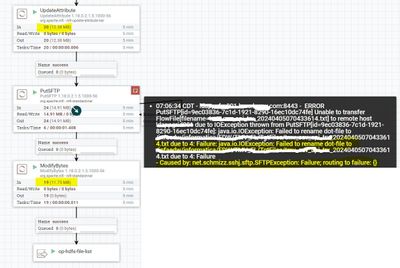

My GetHDFSFileInfo processor returns 20 HDFS files, and each execution successfully transfers 18 or 19 of them to my SFTP server. However, in each execution one or two transfers fail in the PutSFTP processor with the error message 'Failed to rename dot-file.' An error screenshot is attached below.

I face this issue only when the child process group is configured with "Outbound Policy = Batch Output".

Without the child process group, the flow also works.

Could you please help me fix the issue with the PutSFTP processor?

This is a continuation of the solution provided in the thread https://community.cloudera.com/t5/Support-Questions/How-to-convert-merge-Many-flow-files-to-single-f...

Created 04-23-2024 05:56 AM

@s198

The two most common scenarios for this type of failure are:

1. A file with the same name already exists when PutSFTP tries to rename the dot-file. This is typically resolved by routing the failure to an UpdateAttribute processor that modifies the filename. For example, use the nextInt() NiFi Expression Language function to append an incrementing number to the filename or, in your case, modify the timestamp by adding a few milliseconds to it.

2. Some process is consuming the dot (.) file before the PutSFTP processor has renamed it. This requires modifying the downstream process to ignore dot-files.
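To make scenario 2 concrete, here is a minimal Python sketch of a downstream consumer that ignores in-progress dot-files. It assumes PutSFTP's Dot Rename behavior (the file is first written as `.<filename>` and renamed to its final name once the transfer completes); `ready_files` is a hypothetical name standing in for whatever listing logic the downstream process actually uses.

```python
from typing import Iterable, List


def ready_files(names: Iterable[str]) -> List[str]:
    """Return only the files a downstream consumer should pick up.

    Names starting with "." are treated as in-progress uploads (the
    temporary dot-file written before the final rename) and are skipped.
    """
    return sorted(n for n in names if not n.startswith("."))


# Example: a directory listing taken while one upload is still in progress.
listing = ["file1.csv", ".file2.csv", "file3.csv"]
print(ready_files(listing))  # ['file1.csv', 'file3.csv']
```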

While it is great that increasing the run duration and run schedule appears to resolve this issue, I think you are dealing with a millisecond race condition, and these two options will not always guarantee success here. The best option is to handle the failures programmatically with a filename attribute modification or, if possible, to change how you uniquely name your files.
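Scenario 1 and the suggested fix (modify the filename and retry on failure) can be sketched as a self-contained Python simulation. This is not NiFi code: `FakeSftpServer`, `put_with_dot_rename`, `numbered_name`, and `put_with_retry` are hypothetical, and the sketch assumes the server rejects a rename onto an existing name. In NiFi, the retry path would be PutSFTP's failure relationship routed through an UpdateAttribute that changes `filename` (for example using `nextInt()`), then back into PutSFTP.

```python
class FakeSftpServer:
    """In-memory stand-in for an SFTP directory (hypothetical, for illustration).

    rename() refuses to overwrite an existing target, mimicking the
    "file already exists" cause of a failed dot-file rename.
    """

    def __init__(self):
        self.files = {}

    def write(self, name, data):
        self.files[name] = data

    def rename(self, src, dst):
        if dst in self.files:
            raise FileExistsError(dst)
        self.files[dst] = self.files.pop(src)


def put_with_dot_rename(server, filename, data):
    """Write to '.<filename>' first, then rename it to the final name."""
    temp = "." + filename
    server.write(temp, data)
    try:
        server.rename(temp, filename)
    except FileExistsError:
        del server.files[temp]  # clean up the orphaned dot-file
        raise


def numbered_name(filename, n):
    """Insert '_<n>' before the extension: 'data.csv' -> 'data_1.csv'."""
    stem, dot, ext = filename.rpartition(".")
    if not dot:  # no extension
        return f"{filename}_{n}"
    return f"{stem}_{n}.{ext}"


def put_with_retry(server, filename, data, max_attempts=5):
    """On a rename failure, retry under a modified name (like UpdateAttribute on failure)."""
    name = filename
    for attempt in range(max_attempts):
        try:
            put_with_dot_rename(server, name, data)
            return name
        except FileExistsError:
            name = numbered_name(filename, attempt + 1)
    raise RuntimeError(f"could not store {filename} after {max_attempts} attempts")


server = FakeSftpServer()
print(put_with_retry(server, "data.csv", b"first"))   # data.csv
print(put_with_retry(server, "data.csv", b"second"))  # data_1.csv
print(sorted(server.files))                           # ['data.csv', 'data_1.csv']
```

The per-attempt counter stands in for `nextInt()` or a timestamp tweak; the point is only that the retry targets a name that is not already taken.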

Please help our community thrive. If you found any of the suggestions/solutions provided helped you with solving your issue or answering your question, please take a moment to login and click "Accept as Solution" on one or more of them that helped.

Thank you,

Matt

Created on 04-09-2024 04:33 AM - edited 04-09-2024 04:49 AM

Created 04-13-2024 08:55 AM

It looks like a timing issue, or multiple components accessing the same SFTP server. SFTP is very slow and non-threaded, and the timing of access can be tricky.

I recommend running only one PutSFTP instance (don't run it across the cluster, and don't have more than one transfer running at once).

We need to see the logs and know the operating system, Java version, NiFi version, and some error details, as well as the file system type, SFTP server version, and the type and size of the HDFS files.

Created 04-11-2024 09:44 PM

@TimothySpann @steven-matison @SAMSAL , would you be able to help @s198 please?

Regards,

Vidya Sargur, Community Manager

Created 04-15-2024 04:40 AM

The first PutSFTP processor transfers the actual file, and the second one transfers the stats file for that file (file name, size, row count, etc.).

I can limit the second PutSFTP processor to a single transfer containing the details of all 20 files, i.e., one stats file covering all 20 files. Can we store this information line by line in a variable and then send it at the end?

FileName~RowCount~FileSize

file1~100~1250

file2~200~3000

The above will also satisfy my requirement instead of multiple stats files for the second PutSFTP processor.

Could you please provide some input on this issue?

Thank you

Created 04-15-2024 10:55 AM

How much RAM does the machine have, and how much is allocated to NiFi in its configuration (or in Cloudera Manager)? It should be at least 8 GB.

Either the network is slow or you don't have enough RAM.

You can build files from the stats very easily. That is a good strategy.
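As a rough illustration of building one stats file instead of one per data file, here is a minimal Python sketch that renders the `FileName~RowCount~FileSize` layout from the question. It assumes the per-file stats are already available as `(name, row_count, size)` records, and `build_stats_file` is a hypothetical helper. In NiFi itself this would more likely be done by producing one stat line per FlowFile and merging them into a single FlowFile (for example with MergeContent) before the second PutSFTP; that part is not modeled here.

```python
from typing import Iterable, Tuple

HEADER = "FileName~RowCount~FileSize"


def build_stats_file(records: Iterable[Tuple[str, int, int]]) -> str:
    """Render one stats file: a header line plus one '~'-delimited line per file."""
    lines = [HEADER]
    for name, rows, size in records:
        lines.append(f"{name}~{rows}~{size}")
    return "\n".join(lines) + "\n"


# Sample values taken from the question.
stats = [("file1", 100, 1250), ("file2", 200, 3000)]
print(build_stats_file(stats), end="")
# FileName~RowCount~FileSize
# file1~100~1250
# file2~200~3000
```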

Created on 04-18-2024 02:25 AM - edited 04-18-2024 02:27 AM

Would introducing a "Run Schedule" delay on the PutSFTP processor help fix this issue? I mean changing the "Run Schedule" from 0 seconds to 30 seconds or so.

There is no network delay. Regarding the RAM size, we have yet to hear back from the platform team.

Created 04-18-2024 04:14 AM

A delay could help.

Created 04-18-2024 06:10 AM

@s198

Question for you:

- If you leave the PutSFTP processor stopped, run your dataflow so all FlowFiles queue in front of the PutSFTP processor, and then start the PutSFTP processor, does the issue still happen?

- Does the issue happen only when the whole flow is in a started/running state?

Answers to above can help in determining if changing the run schedule will help here.

Run Schedule details:

- The run schedule works in conjunction with the Timer Driven scheduling strategy. This setting controls how often a component is scheduled to execute, which is different from when it actually executes: execution depends on the available threads in the NiFi Timer Driven thread pool shared by all components. By default it is set to 0 secs, which means NiFi should schedule this processor as often as possible, basically scheduling it again as soon as a concurrent task is available to it (the default is 1 concurrent task). To avoid CPU saturation, NiFi applies a yield duration if there is no work to be done when the processor is scheduled (its inbound connection(s) are empty).

Depending on the load on your system and dataflow and the speed of your network, this can happen very quickly: the processor is scheduled, sees only one FlowFile in the inbound connection at that moment, and processes only that one FlowFile instead of a batch. It then closes that thread and starts a new one for the next FlowFile, instead of processing multiple FlowFiles over one SFTP connection. By changing the run schedule, you allow more time between schedulings for FlowFiles to queue on the inbound connection, so they get batch processed over a single SFTP connection.

Run Duration details:

Another processor option is the Run Duration setting. With this setting, once a processor is scheduled, its execution does not end until the configured run duration has elapsed. Let's say that at the time of scheduling (run schedule) there is one FlowFile in the inbound connection queue (remember we are dealing with microseconds here, so this is not something you can observe yourself in the UI). The execution thread processes that FlowFile, but rather than closing out the session immediately and committing the FlowFile to an outbound relationship, it checks the inbound connection for another FlowFile and processes it in the same session. It continues to do this until the run duration is satisfied, at which point all FlowFiles processed during that execution are committed to the downstream relationship(s). So Run Duration is another setting you could try to see if it helps with your issue. If you try run duration, I'd set the run schedule back to the default.
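Run Duration can be modeled the same way. In this toy Python sketch (again a simplification, with the hypothetical name `batches_with_run_duration` and made-up arrival times), the run schedule is 0, and once an execution starts it keeps pulling FlowFiles that arrive until `run_duration_ms` has elapsed, then commits them together in one session.

```python
from typing import List


def batches_with_run_duration(arrivals_ms: List[float], run_duration_ms: float) -> List[int]:
    """Return the number of FlowFiles committed by each execution.

    Toy model: an execution starts at the first queued arrival and keeps
    taking FlowFiles that arrive until run_duration_ms has elapsed; they
    are then committed together and the next execution starts.
    """
    arrivals = sorted(arrivals_ms)
    batches = []
    i = 0
    while i < len(arrivals):
        session_end = arrivals[i] + run_duration_ms
        start = i
        while i < len(arrivals) and arrivals[i] <= session_end:
            i += 1
        batches.append(i - start)
    return batches


arrivals = [float(ms) for ms in range(1, 21)]
print(len(batches_with_run_duration(arrivals, 0)))  # 20  (one FlowFile per session)
print(batches_with_run_duration(arrivals, 500))     # [20] (all in one 500 ms session)
```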

You may also want to look at your SFTP server logs to see what is happening when the file rename attempts are failing.

Thank you,

Matt

Created 04-18-2024 12:01 PM

Hi @MattWho

Thank you for the great suggestions and super helpful information.

Here are the results of what I tried:

1. I set the Run Schedule to 0 seconds and stopped the PutSFTP processor. After all 20 FlowFiles were queued up, I started it again.
   Result: Out of 20 FlowFiles, 1 failed.

2. I set the Run Schedule to 0 seconds and let the flow run with all processors started (here too, all 20 FlowFiles arrived at almost the same time).
   Result: Out of 20 FlowFiles, 2 failed.

3. I changed the Run Schedule of the PutSFTP processor from 0 seconds to 30 seconds.
   Result: No failures; all 20 FlowFiles passed.

4. I set the "Run Duration" to 500ms.
   Result: No failures; all 20 FlowFiles passed.

Could you please suggest the best approach for this scenario: option 3 or option 4?