Nifi queues are full and create backlogs

Labels: Apache NiFi

Created 11-25-2022 12:15 AM

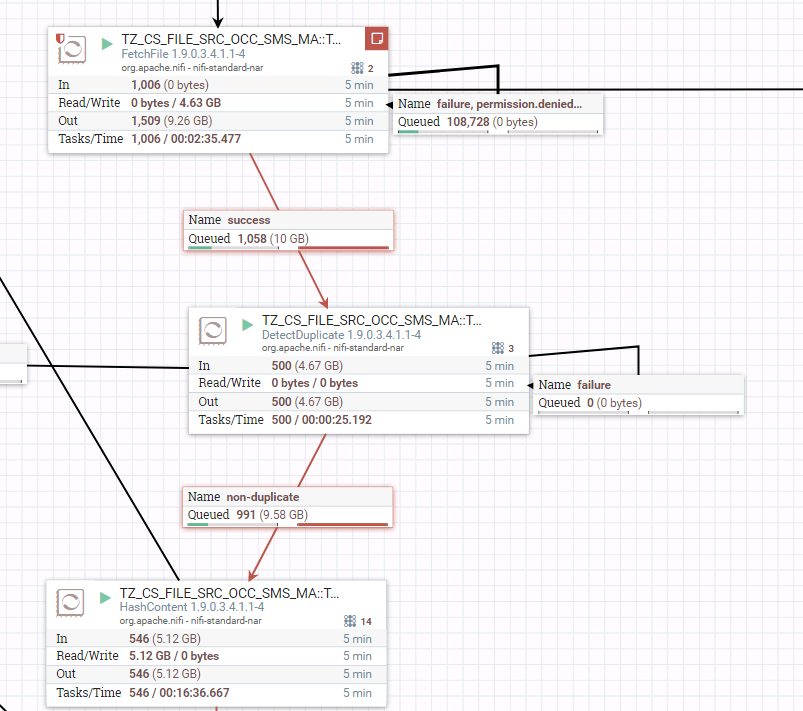

Hi guys, I am facing a problem with NiFi: I have about 80 GB of data sitting in queues, which is creating a backlog. I need the data to be ingested faster, but I can't clear the queues because that would lose files. Please help me solve this issue. Thanks a lot.

Created 11-25-2022 07:25 AM

Hey @F_Amini,

If the data has already been loaded into NiFi, I don't believe you can do much other than increase your resources. I see you're already familiar with increasing concurrent tasks (it appears your HashContent processor has an increased number). If your entire flow is being bottlenecked by a single processor, you could perhaps temporarily increase its concurrent tasks even further (at the cost of other processors getting less).

Created 11-25-2022 10:12 AM

Hi dear @Green_, thanks for your answer.

Yes, I raised the concurrent tasks, but it doesn't change anything. So the ultimate solution you suggest is increasing the CPU? Is there any tuning we can do to speed up ingestion in NiFi?

It's crucial to my job, so I appreciate any suggestions you have. Thanks a lot.

Created 11-25-2022 10:32 AM

As long as backpressure is applied on a connection, the processor feeding that connection will not be scheduled. This cascades back through your flow until it reaches the processor that fetches the data and stops it. As such, all it takes is one weak-link processor to limit your entire flow's throughput. I see the relationship coming out of your HashContent is also red, which implies that a different processor further downstream is causing the backpressure. Can you tell which processor that is? Fixing its slow performance may improve your entire flow.
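To illustrate the weak-link point, here is a toy simulation. It is only a sketch, not how NiFi is implemented: the `simulate` function, the per-tick rates, and the hard queue limit are all assumptions made for the example (NiFi's real thresholds are configured per connection and behave as soft limits).

```python
def simulate(rates, queue_limit, ticks):
    """Toy model of a linear flow: source -> stage 0 -> stage 1 -> ...

    rates[i] is the most items stage i can process per tick (assumed numbers).
    queues[i] holds the items waiting in front of stage i. A stage may only
    move as many items as fit into the queue in front of the next stage,
    which is a simplified form of backpressure.
    Returns how many items left the last stage.
    """
    n = len(rates)
    queues = [0] * n
    done = 0
    for _ in range(ticks):
        # Process downstream stages first so freed space is visible upstream.
        for i in reversed(range(n)):
            movable = min(rates[i], queues[i])
            if i + 1 < n:
                # Backpressure: never exceed the next queue's limit.
                movable = min(movable, queue_limit - queues[i + 1])
                queues[i + 1] += movable
            else:
                done += movable
            queues[i] -= movable
        # The source only fetches while the first queue has room.
        queues[0] = queue_limit
    return done


slow = simulate([10, 2, 10], queue_limit=20, ticks=100)
faster_neighbours = simulate([100, 2, 100], queue_limit=20, ticks=100)
fixed_bottleneck = simulate([10, 10, 10], queue_limit=20, ticks=100)
print(slow, faster_neighbours, fixed_bottleneck)
```

In this model, speeding up the stages around the slow one leaves the total unchanged, while speeding up the slow stage itself raises it. That is the behaviour described above, under the stated simplifications.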

Created 12-09-2022 02:00 PM

@F_Amini

@Green_ is absolutely correct here. You should be careful when increasing concurrent tasks, as blindly increasing them everywhere can have the opposite effect on throughput. I recommend setting the concurrent tasks back to 1, or maybe 2, on all the processors where you have adjusted away from the default of 1 concurrent task.

Then take a look at the processor further downstream in your dataflow that has a red input connection but black (no backpressure) outbound connections. This processor, as @Green_ mentioned, is the one causing all your upstream backlog. You'll want to monitor your CPU usage as you make small incremental adjustments to this processor's concurrent tasks until you see the upstream backlog start to come down.
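The "red input, black output" rule can be written down as a small sketch. The dictionary keys (`source`, `destination`, `percent_full`) and the processor names other than HashContent are made up for illustration. In practice you would fill this list from whatever you read off the canvas or your monitoring.

```python
def find_bottlenecks(connections, threshold=100):
    """Return processors with a backpressured input but no backpressured output.

    connections: list of dicts with hypothetical keys
      'source', 'destination' (processor names) and
      'percent_full' (queue fill level relative to its backpressure threshold).
    """
    def backpressured(conn):
        return conn["percent_full"] >= threshold

    # Processors that have at least one "red" incoming connection.
    full_input = {c["destination"] for c in connections if backpressured(c)}
    # Processors that have at least one "red" outgoing connection.
    full_output = {c["source"] for c in connections if backpressured(c)}
    return sorted(full_input - full_output)


# Hypothetical flow: only HashContent is a name taken from this thread.
flow = [
    {"source": "Fetch", "destination": "HashContent", "percent_full": 100},
    {"source": "HashContent", "destination": "SlowStep", "percent_full": 100},
    {"source": "SlowStep", "destination": "Done", "percent_full": 5},
]
print(find_bottlenecks(flow))  # ['SlowStep']
```

HashContent is excluded because its outbound connection is also full, so it is a victim of backpressure rather than the cause.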

If, while monitoring CPU, you see it sitting pretty consistently at 100% usage across all your cores, then your dataflow has pretty much reached the maximum throughput it can handle for your specific dataflow design. At this point you need to look at other options, such as setting up a NiFi cluster where this workload can be spread across multiple servers, or designing your dataflow differently, with different processors that accomplish the same use case with a lesser impact on CPU (not always a possibility).
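The tuning loop described above could be sketched as a small decision helper. This is only an illustration: `next_step`, its parameters, and the 95% saturation cut-off are assumptions, and the CPU and backlog figures would come from whatever monitoring you already use.

```python
def next_step(current_tasks, core_usage, backlog_before, backlog_after,
              saturation=95.0):
    """Suggest the next adjustment for the bottleneck processor.

    current_tasks:  concurrent tasks currently set on that processor
    core_usage:     recent per-core CPU utilisation in percent (assumed input)
    backlog_before: upstream queued count before the last change
    backlog_after:  upstream queued count after observing for a while
    Returns (suggested_tasks, reason).
    """
    # All cores pegged: more threads will not help on this host.
    if core_usage and all(u >= saturation for u in core_usage):
        return current_tasks, "cpu-saturated: consider a cluster or a redesign"
    # Backlog is already draining: leave the setting alone.
    if backlog_after < backlog_before:
        return current_tasks, "backlog shrinking: keep current setting"
    # Otherwise make one small increment and observe again.
    return current_tasks + 1, "backlog not shrinking: add one concurrent task"


print(next_step(2, [40.0, 55.0, 38.0, 61.0], 1000, 1000))
```

The helper only ever adds one task at a time, mirroring the advice to make small incremental adjustments and re-check CPU after each one.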

Thanks,

Matt

Created 12-09-2022 05:17 AM
