Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Nifi throw error: java.lang.NoSuchMethodError:...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Nifi throw error: java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.reloadExistingConfigurations()V

- Labels:

-

Apache NiFi

Created on 03-02-2018 02:41 PM - edited 08-17-2019 05:14 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Bryan,

I am following this link to set up Nifi putHDFS to write to Azure Data Lake.Connecting to Azure Data Lake from a NiFi dataflow

The Nifi is within HDF 3.1 VM and the Nifi version is 1.5.

We got the jar files mentioned in the above link, from a HD Insight(v 3.6, which supports hadoop 2.7) head node, these jars are:

adls2-oauth2-token-provider-1.0.jar

azure-data-lake-store-sdk-2.1.4.jar

hadoop-azure-datalake.jar

jackson-core-2.2.3.jar

okhttp-2.4.0.jar

okio-1.4.0.jar

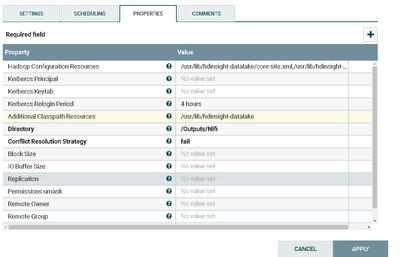

And they are copied to the folder /usr/lib/hdinsight-datalake of the Nifi host. And the putHDFS config(picture) is as attached(exactly as the link above).

But in the nifi log we are getting this:

Caused by: java.lang.NoSuchMethodError: org.apache.hadoop.conf.Configuration.reloadExistingConfigurations()V at org.apache.hadoop.fs.adl.AdlConfKeys.addDeprecatedKeys(AdlConfKeys.java:112) at org.apache.hadoop.fs.adl.AdlFileSystem.<clinit>(AdlFileSystem.java:92) at java.lang.Class.forName0(Native Method) at java.lang.Class.forName(Class.java:348) at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor$ExtendedConfiguration.getClassByNameOrNull(AbstractHadoopProcessor.java:490) at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2099) at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2193) at org.apache.hadoop.fs.FileSystem.getFileSystemClass(FileSystem.java:2654) at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2667) at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:370) at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:172) at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor$1.run(AbstractHadoopProcessor.java:322) at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor$1.run(AbstractHadoopProcessor.java:319) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:422) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1698) at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor.getFileSystemAsUser(AbstractHadoopProcessor.java:319) at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor.resetHDFSResources(AbstractHadoopProcessor.java:281) at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor.abstractOnScheduled(AbstractHadoopProcessor.java:205) ... 16 common frames omitted

The AdlConfKeys class is from the hadoop-azure-datalake.jar file above. From the above exception, it seems to me this AdlConfKeys is loading an older version of the org.apache.hadoop.conf.Configuration class, which does not have the reloadExistingConfigurations method. However we cannot find out from where this older class gets loaded. This HDF 3.1 has the hadoop-common-XXXX.jar in multiple locations, all those on version 2.7 something has the org.apache.hadoop.conf.Configuration containing the method reloadExistingConfigurations, only those on version 2.3 don't have this method.(I decompiled both 2.7 and 2.3 jars to find out)

[root@NifiHost /]# find . -name *hadoop-common*

(the output is a lot more than below, however I removed some display purpose, most of them are on 2.7, only 2 of them are on version 2.3):

./var/lib/nifi/work/nar/extensions/nifi-hadoop-libraries-nar-1.5.0.3.1.0.0-564.nar-unpacked/META-INF/bundled-dependencies/hadoop-common-2.7.3.jar

./var/lib/ambari-agent/cred/lib/hadoop-common-2.7.3.jar

./var/lib/ambari-server/resources.backup/views/work/WORKFLOW_MANAGER{1.0.0}/WEB-INF/lib/hadoop-common-2.7.3.2.6.2.0-205.jar

./var/lib/ambari-server/resources.backup/views/work/HUETOAMBARI_MIGRATION{1.0.0}/WEB-INF/lib/hadoop-common-2.3.0.jar

./var/lib/ambari-server/resources/views/work/HUETOAMBARI_MIGRATION{1.0.0}/WEB-INF/lib/hadoop-common-2.3.0.jar

./var/lib/ambari-server/resources/views/work/HIVE{1.5.0}/WEB-INF/lib/hadoop-common-2.7.3.2.6.4.0-91.jar

./var/lib/ambari-server/resources/views/work/CAPACITY-SCHEDULER{1.0.0}/WEB-INF/lib/hadoop-common-2.7.3.2.6.4.0-91.jar

./var/lib/ambari-server/resources/views/work/TEZ{0.7.0.2.6.2.0-205}/WEB-INF/lib/hadoop-common-2.7.3.2.6.2.0-205.jar

./usr/lib/ambari-server/hadoop-common-2.7.2.jar

./usr/hdf/3.1.0.0-564/nifi/ext/ranger/install/lib/hadoop-common-2.7.3.jar

./usr/hdf/3.0.2.0-76/nifi/ext/ranger/install/lib/hadoop-common-2.7.3.jar

So I really don't know how Nifi managed to find a hadoop-common jar file or something else containing the Configuration class does not have the method reloadExistingConfigurations(). (Probably the Nifi get the older version of the class from its parent classloader) . We do not have any customized Nar files deployed to Nifi either, everything is pretty much default from whatever HDF 3.1 has on Nifi. The link above works for HDF 2.1, so not sure if this is a bug introduced from a version later than that.

Please advise. I've been spending a whole day on this but can't fix the issue. Appreciate your help.

Created 03-02-2018 02:53 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I've answered this on stackoverflow...

Created 03-02-2018 04:56 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very much Bryan I'll follow that stackoverflow and further updates there.