No errors in error logs - services start green but then turn RED after 60 seconds

Labels: Apache Hive

Created on 07-26-2016 12:23 PM - edited 09-16-2022 03:31 AM

I am using the Hortonworks Sandbox, which previously ran just fine.

I had not used the system for some time, and now it seems to be broken, even after a reboot.

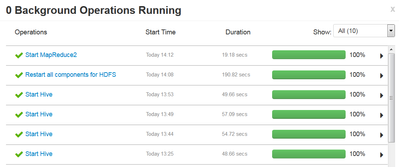

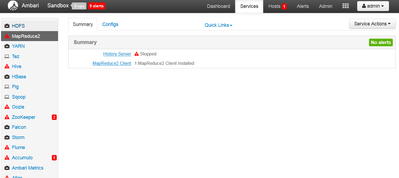

Whenever I start a service (e.g. HIVE), all process steps appear green. For about 60 seconds the service shows green, BUT then it switches back to RED. It does not matter which service I start; the behaviour is the same for all of them: they start up fine and then switch to RED.

The 9 alerts shown are all OLD errors from 5 months ago. Any hint on where to look for the error is appreciated.

Created 07-26-2016 04:43 PM

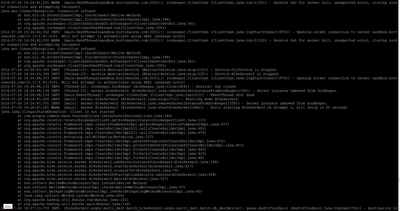

For the Hive errors, it looks like Hive is having trouble communicating with ZooKeeper. If ZooKeeper won't start up, that's not surprising.
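One quick way to tell whether ZooKeeper is actually answering, independently of what Ambari displays, is ZooKeeper's `ruok` four-letter command, which a healthy server answers with `imok`. The sketch below assumes the default client port 2181 on localhost; on newer ZooKeeper releases `ruok` must also be allowed via `4lw.commands.whitelist`. The function name `zookeeper_is_ok` is hypothetical.

```python
import socket


def zookeeper_is_ok(host="localhost", port=2181, timeout=3.0):
    """Send ZooKeeper's 'ruok' command; return True only if it replies 'imok'.

    Assumes the default client port 2181 and that 'ruok' is permitted
    (newer ZooKeeper releases require it in 4lw.commands.whitelist).
    """
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            sock.sendall(b"ruok")
            # A healthy server answers 'imok' and then closes the connection.
            reply = sock.recv(16)
    except OSError:
        # Connection refused, timeout, unreachable host, ...
        return False
    return reply.strip() == b"imok"
```

If `zookeeper_is_ok()` returns False while the service was just started, that would suggest ZooKeeper itself is not healthy rather than Ambari merely misreporting it.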

For the Ambari Agent errors, it looks like you have a corrupted JSON data file in your ambari-agent (/var/lib/ambari-agent/data/structured-out-status.json). Try renaming the file (for example, to ".backup") and then restarting the services: first do a "stop all" of the services, if it is available. Then start only ZooKeeper to see whether it comes up cleanly. After that, start the HDFS service, and then you can do a "start all".
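The rename can be made conditional on the file actually failing to parse as JSON. A minimal Python sketch, assuming only the path given above; the function name `backup_if_corrupt` is hypothetical, and stopping the Ambari agent before touching the file is a precaution of this sketch, not something stated in the thread.

```python
import json
import os
import shutil

# Path named in the answer; the ".backup" suffix follows the suggestion.
STATUS_FILE = "/var/lib/ambari-agent/data/structured-out-status.json"


def backup_if_corrupt(path=STATUS_FILE):
    """Move `path` to `path + '.backup'` if it is not valid JSON.

    Returns True if the file was moved aside, False if it parsed
    cleanly or does not exist.
    """
    if not os.path.exists(path):
        return False
    try:
        with open(path, encoding="utf-8") as fh:
            json.load(fh)
        return False  # valid JSON, leave it in place
    except ValueError:
        # Covers json.JSONDecodeError and UnicodeDecodeError; the file is
        # already closed here because the with-block has exited.
        shutil.move(path, path + ".backup")
        return True
```

Run it with permissions to write in `/var/lib/ambari-agent/data`; a True result means the file now sits at `structured-out-status.json.backup`.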

Created 07-26-2016 12:34 PM

Can you paste the logs for any of the services that are failing?

Created 07-26-2016 12:34 PM

NONE of them "FAIL". They simply turn RED after 60 seconds without producing any errors in the log files.

Created 07-26-2016 12:57 PM

Where can I find the error log files if no obvious error occurs?

Created 07-26-2016 02:06 PM

When I've seen this behavior before, it was a memory issue. The services would start up correctly, so Ambari did not report any errors. However, because of out-of-memory issues the services would then die and ultimately fail the Ambari service check. If a service dies outside of Ambari's control (start/stop), you won't necessarily get logging information for it.
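To confirm how much memory the guest OS actually sees, one option on Linux is to read `MemTotal` from `/proc/meminfo`. A sketch under these assumptions: the 8 GB threshold is the minimum recommended in this answer, and the helper names are hypothetical. A point-in-time reading will not catch a service that runs out of heap later, so this complements the log check rather than replacing it.

```python
import re

# Minimum suggested in this answer (8 GB), in kB because /proc/meminfo reports kB.
MIN_VM_MEMORY_KB = 8 * 1024 * 1024


def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a {field: value_in_kB} dict."""
    values = {}
    for line in text.splitlines():
        # Lines look like "MemTotal:       16333856 kB"; fields containing
        # parentheses, such as "Active(anon)", do not match and are skipped.
        match = re.match(r"^(\w+):\s+(\d+)", line)
        if match:
            values[match.group(1)] = int(match.group(2))
    return values


def check_memory(meminfo_text, min_kb=MIN_VM_MEMORY_KB):
    """Return (MemTotal in kB, whether it meets min_kb)."""
    total_kb = parse_meminfo(meminfo_text).get("MemTotal", 0)
    return total_kb, total_kb >= min_kb


def read_meminfo(path="/proc/meminfo"):
    with open(path) as fh:
        return fh.read()
```

Usage on the sandbox: `total_kb, ok = check_memory(read_meminfo())`.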

For the given services, look in the appropriate /var/log/<service name> directory and see whether you get more helpful logging information. Make sure you have at least 8 GB of memory allocated to the VM. If you can allocate more (10-12 GB), try that to see whether the problem goes away.

Some common log locations:

- /var/log/hadoop/hdfs
- /var/log/hadoop-yarn/yarn
- /var/log/hadoop-mapreduce/mapred
- /var/log/webhcat
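Going through these directories by hand is tedious, so here is a minimal Python sketch that lists suspicious lines from `*.log` and `*.out` files. The search pattern (log4j-style `ERROR`/`FATAL` markers plus Java `OutOfMemoryError`) and the function name `scan_logs` are assumptions; add directories such as /var/log/hive or /var/log/zookeeper for the services you are debugging.

```python
import os
import re

# Directories listed in the answer; extend for other services.
LOG_DIRS = (
    "/var/log/hadoop/hdfs",
    "/var/log/hadoop-yarn/yarn",
    "/var/log/hadoop-mapreduce/mapred",
    "/var/log/webhcat",
)

# Assumed pattern: log4j level markers and Java out-of-memory errors.
PATTERN = re.compile(r"\b(ERROR|FATAL)\b|OutOfMemoryError")


def scan_logs(dirs=LOG_DIRS, max_hits_per_file=20):
    """Yield (path, line_number, line) for matching lines in *.log/*.out files."""
    for base in dirs:
        if not os.path.isdir(base):
            continue  # service not installed or logs elsewhere
        for root, _subdirs, files in os.walk(base):
            for name in sorted(files):
                if not name.endswith((".log", ".out")):
                    continue
                path = os.path.join(root, name)
                hits = 0
                try:
                    with open(path, errors="replace") as fh:
                        for lineno, line in enumerate(fh, start=1):
                            if PATTERN.search(line):
                                yield path, lineno, line.rstrip()
                                hits += 1
                                if hits >= max_hits_per_file:
                                    break
                except OSError:
                    continue  # unreadable file (permissions, rotated away)
```

For example, `for path, n, line in scan_logs(): print(f"{path}:{n}: {line}")` prints each hit with its location; capping hits per file keeps a noisy log from drowning out the others.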

Created on 07-26-2016 02:28 PM - edited 08-18-2019 06:11 AM

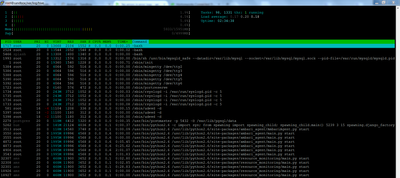

My VM has 16 GB of memory, so I should be fine. Also, htop does not show more than 6 GB of memory in use (after a reboot).

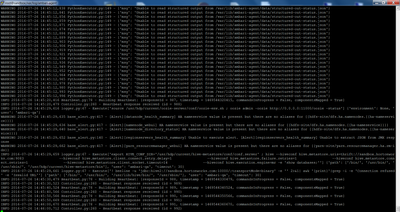

I found some errors in /var/log/hive. I'm not sure what to make of them.

Created on 07-26-2016 02:36 PM - edited 08-18-2019 06:11 AM

htop shows little memory usage.

Thank you for your support!

Created on 07-26-2016 02:43 PM - edited 08-18-2019 06:11 AM

My AMBARI-AGENT.log shows weird errors as well.

Created 07-27-2016 01:00 PM

WOW, I think backing up and deleting the file actually helped a lot. It's not perfect, but it works.

/var/lib/ambari-agent/data/structured-out-status.json

A lot of the services simply went green after I was able to restart ZooKeeper. So all services were running; Ambari was just unable to monitor them correctly. Unfortunately, ZooKeeper itself still returns to a red state, so I need to dig into the logs to find out what the next problem is.