NodeManager Health Summary

Created on 01-03-2019 01:29 PM - edited 08-17-2019 03:35 PM

Hi all,

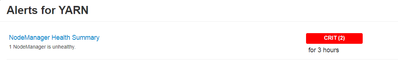

In YARN Alerts we saw the following critical alert:

1 NodeManager is unhealthy.

We have 36 data node machines, each running DataNode, Metrics Monitor, and NodeManager.

Since the problem is on one of these nodes, we need to find the problematic machine.

Can anyone advise how to find the node that triggers this alert?

Created on 01-03-2019 02:05 PM - edited 08-17-2019 03:35 PM

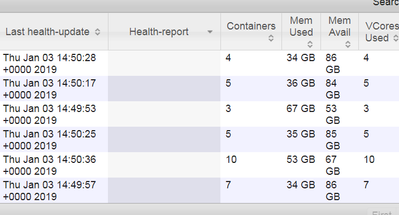

NodeManager is a slave process of YARN, so you should drill down into YARN. In my case I intentionally brought down my NodeManager so that the problematic NodeManager would show up.

Go to the ResourceManager UI and click the Nodes link on the left side of the screen. All your NodeManagers should be listed there, and the reason a node is listed as unhealthy may be shown on that page. It is most likely due to the YARN local dirs or log dirs: you may be hitting the disk utilization threshold.
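With 36 nodes, it can be quicker to ask the ResourceManager REST API (Cluster Nodes API, /ws/v1/cluster/nodes) for nodes in the UNHEALTHY state; from a cluster host, `yarn node -list -states UNHEALTHY` should give a similar list. Below is a minimal Python sketch, assuming an unsecured (non-Kerberos) ResourceManager reachable over plain HTTP on the default web port 8088. It prints each unhealthy node's host name with its healthReport field, which is where the reason usually appears.

```python
import json
import urllib.request


def fetch_nodes(rm_url, states="UNHEALTHY", timeout=10):
    """Query the ResourceManager Cluster Nodes API and return the parsed JSON.

    Assumes an unsecured RM; Kerberos/SPNEGO-protected clusters need extra auth.
    """
    url = f"{rm_url.rstrip('/')}/ws/v1/cluster/nodes?states={states}"
    req = urllib.request.Request(url, headers={"Accept": "application/json"})
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def unhealthy_nodes(payload):
    """Return (host, healthReport) pairs for every node in state UNHEALTHY."""
    # The API wraps the list as {"nodes": {"node": [...]}}; "nodes" may be null.
    node_list = (payload.get("nodes") or {}).get("node") or []
    return [
        (n.get("nodeHostName") or n.get("id"), n.get("healthReport", ""))
        for n in node_list
        if n.get("state") == "UNHEALTHY"
    ]
```

Usage (the host name is hypothetical): `for host, report in unhealthy_nodes(fetch_nodes("http://rm-host.example.com:8088")): print(host, report)`. The client-side state filter is redundant with `?states=UNHEALTHY` but keeps the parser safe if it is fed an unfiltered node list.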

Finally, check the logs in /var/log/hadoop-yarn/yarn, NOT in /var/log/hadoop/yarn.
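To narrow down a large log directory, a small filter like the one below may help. It is only a sketch: the `*nodemanager*.log` file-name pattern and the keyword regex are hypothetical choices, not names defined by Hadoop, so adjust them to the files and messages you actually find in /var/log/hadoop-yarn/yarn.

```python
import re
from pathlib import Path

LOG_DIR = Path("/var/log/hadoop-yarn/yarn")
# Hypothetical keywords; tune them to the messages in your own logs.
PATTERN = re.compile(r"unhealthy|local-dirs|log-dirs|disk", re.IGNORECASE)


def matching_lines(lines, pattern=PATTERN):
    """Return (line_number, text) for each line that matches the pattern."""
    return [
        (lineno, line.rstrip("\n"))
        for lineno, line in enumerate(lines, start=1)
        if pattern.search(line)
    ]


def scan_logs(log_dir=LOG_DIR, name_glob="*nodemanager*.log", pattern=PATTERN):
    """Map each matching log file name to its matching lines."""
    results = {}
    for path in sorted(Path(log_dir).glob(name_glob)):
        # errors="replace" keeps the scan going past any non-UTF-8 bytes.
        with path.open(errors="replace") as fh:
            hits = matching_lines(fh, pattern)
        if hits:
            results[path.name] = hits
    return results
```

Run `scan_logs()` on each suspect node; reading files line by line keeps memory use flat even for large logs.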

Created on 01-03-2019 02:56 PM - edited 08-17-2019 03:35 PM

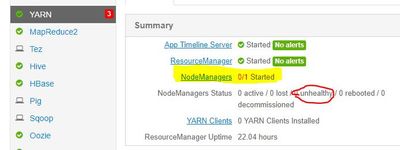

You said "All your NodeManagers should be listed there and the reason for it being listed as unhealthy may be shown here", but I don't see anything about NodeManager health.

Please see the following:

Created 01-03-2019 03:06 PM

@Geoffrey Shelton Okot, regarding my last comment, do you have any suggestion on how to find the problematic NodeManager?

Created 01-04-2019 07:08 AM

@Geoffrey Shelton Okot, any suggestion?

Created 01-29-2019 02:49 PM

Go to the ResourceManager UI from Ambari. Click the Nodes link on the left side of the window. It should show all NodeManagers and the reason any of them is listed as unhealthy.

The most common reason is that a disk space threshold has been reached. In that case, consider the following parameters:

| Parameter | Default value | Description |
|---|---|---|
| yarn.nodemanager.disk-health-checker.min-healthy-disks | 0.25 | The minimum fraction of disks that must be healthy for the NodeManager to launch new containers. This applies to both yarn.nodemanager.local-dirs and yarn.nodemanager.log-dirs; i.e., if the fraction of healthy local-dirs (or log-dirs) falls below this value, new containers will not be launched on this node. |
| yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage | 90.0 | The maximum percentage of disk space utilization allowed, after which a disk is marked as bad. Values can range from 0.0 to 100.0. If the value is greater than or equal to 100, the NodeManager checks for a full disk. This applies to yarn.nodemanager.local-dirs and yarn.nodemanager.log-dirs. |
| yarn.nodemanager.disk-health-checker.min-free-space-per-disk-mb | 0 | The minimum space, in MB, that must be available on a disk for it to be used. This applies to yarn.nodemanager.local-dirs and yarn.nodemanager.log-dirs. |
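To see how these three parameters interact, here is a rough Python approximation of the check as the table describes it: a disk is bad if it is above the utilization limit or below the free-space minimum, and the node stops launching containers when the fraction of good disks drops below min-healthy-disks. This is a sketch under assumptions, not Hadoop's implementation; the exact comparison operators (for example `>` versus `>=`) in the NodeManager may differ. Hadoop evaluates local-dirs and log-dirs as separate sets, so call `node_is_healthy` once per set.

```python
import shutil
from dataclasses import dataclass

# Defaults from the table above; replace with the values in your yarn-site.xml.
MAX_UTIL_PCT = 90.0          # ...max-disk-utilization-per-disk-percentage
MIN_FREE_MB = 0              # ...min-free-space-per-disk-mb
MIN_HEALTHY_FRACTION = 0.25  # ...min-healthy-disks


@dataclass
class Disk:
    path: str
    total_mb: float
    free_mb: float

    @classmethod
    def from_path(cls, path):
        """Measure a real directory's file system with shutil.disk_usage."""
        usage = shutil.disk_usage(path)
        return cls(path, usage.total / 2**20, usage.free / 2**20)


def is_disk_good(disk, max_util_pct=MAX_UTIL_PCT, min_free_mb=MIN_FREE_MB):
    """Approximate the per-disk check: too full or too little free space is bad."""
    used_pct = 100.0 * (disk.total_mb - disk.free_mb) / disk.total_mb
    if max_util_pct >= 100.0:
        too_full = disk.free_mb <= 0  # only a completely full disk is bad
    else:
        too_full = used_pct > max_util_pct
    return not too_full and disk.free_mb >= min_free_mb


def node_is_healthy(disks, min_fraction=MIN_HEALTHY_FRACTION, **limits):
    """Healthy if the fraction of good disks is at least min_fraction."""
    if not disks:
        return False
    good = sum(is_disk_good(d, **limits) for d in disks)
    return good / len(disks) >= min_fraction
```

For example, with four local-dirs where three are 95% full and one is 50% full, one disk in four is good, which meets the 0.25 default, so the node would still count as healthy; if the fourth disk also fills past 90%, it would not. On a real node, `node_is_healthy([Disk.from_path(p) for p in dirs])` measures the directories listed in `dirs`, which you would fill in from your own yarn.nodemanager.local-dirs setting.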

Finally, if the above steps do not reveal the actual problem, check the logs at /var/log/hadoop-yarn/yarn.