NodeManager fails to start

Labels: Apache Hadoop, Apache YARN, Cloudera Manager

Created 05-26-2022 09:39 AM

Hello folks!

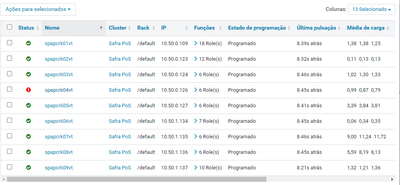

I have a 9-machine on-premises cluster running CDH 6.2: 3 masters, 1 edge node and 5 workers.

I am not able to bring up 2 of the 5 NodeManagers on the workers. 3 of them are OK, and the other 2 give me the following log (attached), with no error but a warning with "NullPointerException".

When I start the NodeManager from Cloudera Manager it doesn't fail, but I get two alerts, as follows:

- NodeManager can not connect to ResourceManager

- ResourceManager could not connect to Web Server of NodeManager

Also, I can't access /jmx on the server. And when I start the NodeManager from Cloudera Manager, CPU usage goes to 100%.

On those 2 workers, RegionServer and DataNode are working fine; the problem is only with NodeManager.

Any suggestions, please?

Created 06-02-2022 08:47 AM

Hi guys!

Finally we solved the problem. To fix it, we moved all content from the "yarn.nodemanager.recovery.dir" config path to another one (i.e. mv yarn-rm-recovery yarn-rm-recovery-backup), created yarn-rm-recovery again, and granted permission on the folder to yarn:hadoop.

After that, we could start the NodeManager with no errors.
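For anyone who wants to script the same steps, here is a minimal Python sketch of the move-aside-and-recreate procedure described above. The function name and the "-backup" suffix handling are only illustrative, and it assumes the NodeManager on that host is stopped and that the script runs with enough privileges to rename the directory and change its owner.

```python
import os
import shutil
import time


def reset_recovery_dir(recovery_dir, owner=None, group=None):
    """Move recovery_dir aside and recreate it empty.

    Returns the path of the backup directory.
    """
    recovery_dir = os.path.abspath(recovery_dir)
    backup_dir = recovery_dir + "-backup"
    if os.path.exists(backup_dir):
        # Do not clobber an earlier backup: add a timestamp suffix instead.
        backup_dir = "%s-%d" % (backup_dir, int(time.time()))
    # Equivalent of: mv yarn-rm-recovery yarn-rm-recovery-backup
    os.rename(recovery_dir, backup_dir)
    # Recreate the now-missing directory, empty.
    os.makedirs(recovery_dir)
    if owner is not None or group is not None:
        # Equivalent of: chown yarn:hadoop <dir> (needs sufficient privileges).
        shutil.chown(recovery_dir, user=owner, group=group)
    return backup_dir
```

In our case the call would look something like `reset_recovery_dir("<recovery dir>/yarn-rm-recovery", owner="yarn", group="hadoop")`, where the parent path is whatever "yarn.nodemanager.recovery.dir" points to on your hosts.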

Thanks all!

Created 05-26-2022 10:45 AM

Are all the hosts healthy?

To check, go to: CM -> Hosts -> All Hosts

Created 05-26-2022 11:20 AM

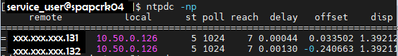

Kind of, Elias! Most of the time they are healthy, but I've now seen that there is an NTP clock integrity problem on 1 of the 9 machines, as follows:

The NodeManager problem affects hosts SPAPCRK03 and SPAPCRK04.

SPAPCRK03 is healthy, and SPAPCRK04 has an NTP clock alert.

I ran "ntpdc -np" and got this output:

But I don't know if that NTP problem is the reason the NodeManager doesn't start.

Created 05-26-2022 11:24 AM

On those 2 nodes (03 and 04), I've stopped the NodeManager because it was consuming 100% of CPU, and I didn't want it to cause more incidents on the cluster.

Created 05-26-2022 11:41 AM

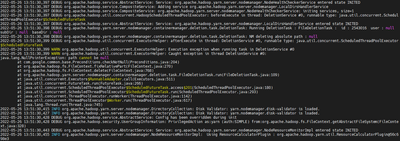

I've tried starting the NodeManager with DEBUG logging and got this:

That is the only WARN we have in the log.

Created 05-27-2022 02:17 AM

Hi @marcosrodrigues ,

The message says:

2022-05-26 13:15:58,296 WARN org.apache.hadoop.util.concurrent.ExecutorHelper: Caught exception in thread DeletionService #0:

java.lang.NullPointerException: path cannot be null

...

at org.apache.hadoop.fs.FileContext.delete(FileContext.java:768)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.deletion.task.FileDeletionTask.run(FileDeletionTask.java:109)

...which means that the NM on those nodes tried to delete some "empty"/null paths. It is not clear where these null paths come from, and I haven't found any known YARN bug related to this.

Are these NodeManagers configured the same way as all the others?

Do the YARN NodeManager local disks ("NodeManager Local Directories" - "yarn.nodemanager.local-dirs") exist, and are they readable/writable by the "yarn" user? Are those directories completely empty?
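If it helps, the checks above can be gathered in one pass with a small Python sketch like the one below. The function names are hypothetical; it only reports owner, mode and emptiness for each entry of the comma-separated "yarn.nodemanager.local-dirs" value, and it assumes it is run as a user that can list those directories (for example root or "yarn").

```python
import os
import pwd
import stat


def describe_dir(path):
    """Return existence, owner, mode and emptiness for one directory."""
    if not os.path.isdir(path):
        return {"path": path, "exists": False}
    st = os.stat(path)
    try:
        owner = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:
        # The uid has no passwd entry on this host; report the number.
        owner = str(st.st_uid)
    return {
        "path": path,
        "exists": True,
        "owner": owner,
        "mode": stat.filemode(st.st_mode),  # e.g. "drwxr-xr-x"
        "empty": not os.listdir(path),
    }


def check_local_dirs(local_dirs_value):
    """Describe each entry of a comma-separated local-dirs value."""
    return [
        describe_dir(p.strip())
        for p in local_dirs_value.split(",")
        if p.strip()
    ]
```

Comparing the output from a failing node with the output from a healthy one should show quickly whether ownership or permissions differ.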

Thanks

Miklos Szurap

Customer Operations Engineer

Created 05-27-2022 07:43 AM

Hi @mszurap ,

Yes, all NodeManagers are configured the same way. I checked in Cloudera Manager, setting by setting.

Also, NodeManager Local Directories is set:

And it is readable/writable by the "yarn" user:

I checked the same path on the other nodes, and the permissions were right. The only difference is that on the machines working fine, the "nmPrivate" dir was updated minutes ago, while on 03 (with issues) it was last updated on May 9 at 17:31 (around the same time we noticed the node had shut down). The folders aren't empty.

As for the NullPointerException, it's curious: we started it with DEBUG and TRACE logging enabled, and in DEBUG we found the following before the WARN about the NPE:

2022-05-26 19:51:17,963 DEBUG org.apache.hadoop.yarn.server.nodemanager.containermanager.deletion.task.DeletionTask: Running DeletionTask : FileDeletionTask : id : 2543016 user : null subDir : null baseDir : null

2022-05-26 19:51:17,963 DEBUG org.apache.hadoop.yarn.server.nodemanager.containermanager.deletion.task.DeletionTask: NM deleting absolute path : null

2022-05-26 19:51:17,964 DEBUG org.apache.hadoop.util.concurrent.ExecutorHelper: afterExecute in thread: DeletionService #0, runnable type: java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask

2022-05-26 19:51:17,964 WARN org.apache.hadoop.util.concurrent.ExecutorHelper: Execution exception when running task in DeletionService #0

2022-05-26 19:51:17,965 WARN org.apache.hadoop.util.concurrent.ExecutorHelper: Caught exception in thread DeletionService #0:

java.lang.NullPointerException: path cannot be null

at com.google.common.base.Preconditions.checkNotNull(Preconditions.java:204)

at org.apache.hadoop.fs.FileContext.fixRelativePart(FileContext.java:270)

at org.apache.hadoop.fs.FileContext.delete(FileContext.java:768)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.deletion.task.FileDeletionTask.run(FileDeletionTask.java:109)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$201(ScheduledThreadPoolExecutor.java:180)

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:293)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

It says the user is "null", subDir is "null" and baseDir is "null". But I don't know where YARN looks up that user, subDir and baseDir.
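To see how many of these tasks show up, a rough Python sketch like this can pull the ids of the FileDeletionTask entries with a null baseDir out of a DEBUG log. The regular expression is written against the single DEBUG line format quoted above, so treat it as an assumption that other lines (for example with a real baseDir) follow the same layout; the function name is only illustrative.

```python
import re

# Matches the "FileDeletionTask : id : ... baseDir : ..." DEBUG line format
# shown in the log excerpt; other Hadoop versions may log it differently.
TASK_RE = re.compile(
    r"FileDeletionTask : id : (?P<id>\d+) user : (?P<user>\S+) "
    r"subDir : (?P<subdir>\S+) baseDir : (?P<basedir>\S+)"
)


def null_deletion_tasks(lines):
    """Return ids of FileDeletionTask entries whose baseDir is logged as null."""
    ids = []
    for line in lines:
        match = TASK_RE.search(line)
        if match and match.group("basedir") == "null":
            ids.append(int(match.group("id")))
    return ids
```

It takes any iterable of lines, so an open NodeManager log file can be passed in directly.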

Any idea?

Thanks!

Created 05-27-2022 09:44 AM

I would try to clean up everything from the /appN/yarn/nm directory (at least try, as the root user, to move "filecache", "nmPrivate" and "usercache" out to an external directory); maybe there are some files which the NM cannot clean up for some reason.

If that still does not help, then I can imagine that the ResourceManager state store (in ZooKeeper) keeps track of some old job details and the NM tries to clean up after those old containers.

Is this cluster a prod cluster? If not, then you could stop all the YARN applications, stop the YARN service, and then format the RM state store, which should give a clean state.

https://hadoop.apache.org/docs/stable/hadoop-yarn/hadoop-yarn-site/YarnCommands.html

In CM there is an action for it under the YARN service.

Created 05-27-2022 01:21 PM

@mszurap ,

I did what you said: I copied all content out of /appN/yarn/nm, including the directories "filecache", "nmPrivate" and "usercache", so that "/appN/yarn/nm" was left with 0 directories and 0 files. Then I started the NodeManager from Cloudera Manager and got the same error; all other services on that machine start successfully, except NodeManager. Also, the directory "/appN/yarn/nm" stays empty, even after trying to start it from CM.

I noticed that when I run "yarn nodemanager" as the root user, the NodeManager runs with no error, with some parameters different from the CM start command, but Cloudera Manager doesn't recognize the node. When I start it from CM, the command has some parameters (the same as on the NodeManagers that are OK). Maybe it is something to do with the yarn user?

About the RM state store, I didn't find any information about it. The link you sent says to run "-format-state-store" only if the ResourceManager is not running, and in this cluster it is running fine and recognizing 3 of the 5 nodes.

Unfortunately, it is a production cluster, so I don't think I can stop YARN entirely.

Do you have any suggestions?

Created 05-30-2022 11:02 AM

Be careful with starting processes as the root user, as that may leave some files and directories around owned by root - and then the ordinary "yarn" user (the process started by CM) won't be able to write to them. For example, log files under /var/log/hadoop-yarn/... Please verify that.
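One way to verify it is to walk the directory and list everything not owned by the expected user. Below is a minimal Python sketch under that assumption; the function name is hypothetical and it takes a numeric uid so that it does not depend on how the "yarn" user is defined on a given host.

```python
import os


def not_owned_by_uid(root, uid):
    """List directories and files under root (root included) not owned by uid."""
    offenders = []
    # os.walk does not follow symlinked directories by default.
    for dirpath, _dirnames, filenames in os.walk(root):
        candidates = [dirpath] + [os.path.join(dirpath, n) for n in filenames]
        for path in candidates:
            # lstat: report the owner of a symlink itself, not of its target.
            if os.lstat(path).st_uid != uid:
                offenders.append(path)
    return sorted(offenders)
```

For the log directory mentioned above, the call could be `not_owned_by_uid("/var/log/hadoop-yarn", pwd.getpwnam("yarn").pw_uid)` after `import pwd`; an empty list means nothing there is owned by another user such as root.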