Not able to setup spark.driver.cores

Labels: Apache Spark, Apache YARN

Created 10-13-2017 07:40 PM

Hi All,

I am struggling to change the number of cores allocated to the Spark driver process. As I am quite new to Spark, I have run out of ideas about what could be wrong. I hope somebody can advise me on this.

It seems that the number of driver cores stays at the default value (1, I assume) regardless of the value defined in spark.driver.cores. I am trying to run PySpark in yarn-cluster mode. It is a small, isolated dev cluster, so there are no other concurrent jobs.

At the same time, I can successfully configure the driver's memory size, as well as the number of executors and their size (cores and memory).

My environment is:

- cluster containing 4 nodes (each 4 cores and 16 GB of memory)

- HDP 2.6

- Spark 2.1

Spark submit command is:

    spark-submit --master yarn --deploy-mode cluster \
    --driver-cores 4 --driver-memory 4G \
    --num-executors 3 --executor-memory 14G --executor-cores 4 \
    --conf spark.yarn.maxAppAttempts=1 --files /usr/hdp/current/spark-client/conf/hive-site.xml {my python code}

Thanks
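For orientation, the request in the command above can be tallied against the cluster described in the question. This is only a rough sketch: it adds up the figures given in the post and nothing else. It ignores YARN's per-container memory overhead and whatever share of each node YARN is actually configured to manage, neither of which is stated here, and the function name `tally_request` is made up for this illustration.

```python
# Figures copied from the question; nothing here queries a real cluster.
NODES = 4
CORES_PER_NODE = 4
MEMORY_GB_PER_NODE = 16

# Values from the spark-submit command in the question.
DRIVER_CORES, DRIVER_MEMORY_GB = 4, 4
NUM_EXECUTORS, EXECUTOR_CORES, EXECUTOR_MEMORY_GB = 3, 4, 14


def tally_request():
    """Return (requested, available) totals as plain dicts.

    Assumption: overheads and YARN's own limits are ignored, so the real
    container sizes will be somewhat larger than the requested figures.
    """
    requested = {
        "cores": DRIVER_CORES + NUM_EXECUTORS * EXECUTOR_CORES,
        "memory_gb": DRIVER_MEMORY_GB + NUM_EXECUTORS * EXECUTOR_MEMORY_GB,
    }
    available = {
        "cores": NODES * CORES_PER_NODE,
        "memory_gb": NODES * MEMORY_GB_PER_NODE,
    }
    return requested, available


if __name__ == "__main__":
    requested, available = tally_request()
    for key in ("cores", "memory_gb"):
        print(f"{key}: requested {requested[key]} of {available[key]}")
```

On these numbers the command asks for as many cores as the four nodes physically have, so it may be worth comparing the request with what YARN is configured to offer per node.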

Created 10-14-2017 12:05 AM

Can you please check the value of the following parameter, to see whether dynamic allocation is overriding the explicit resource arguments?

spark.dynamicAllocation.enabled=true

Also, can you please try --conf instead of --driver-cores? That option is not well documented in the Spark docs, though it does show up in the command-line help.

    spark-submit --master yarn --deploy-mode cluster \
    --conf "spark.driver.cores=4" --driver-memory 4G \
    --num-executors 3 --executor-memory 14G --executor-cores 4 \
    --conf spark.yarn.maxAppAttempts=1 --files /usr/hdp/current/spark-client/conf/hive-site.xml {my python code}
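To compare the two spellings side by side, a small helper can assemble either form of the command. This is a minimal sketch, assuming that `--driver-cores N` and `--conf spark.driver.cores=N` end up setting the same property; `build_submit_args` and the script name `my_app.py` are hypothetical stand-ins (the latter for `{my python code}`), and the remaining flags are copied from the command above.

```python
import shlex


def build_submit_args(app, driver_cores, use_conf=True):
    """Build a spark-submit argument list for the job in this thread.

    `app` is a placeholder for the Python script being submitted.
    `use_conf` picks between `--conf spark.driver.cores=N` (True)
    and the `--driver-cores N` shortcut (False).
    """
    args = ["spark-submit", "--master", "yarn", "--deploy-mode", "cluster"]
    if use_conf:
        args += ["--conf", f"spark.driver.cores={driver_cores}"]
    else:
        args += ["--driver-cores", str(driver_cores)]
    args += [
        "--driver-memory", "4G",
        "--num-executors", "3",
        "--executor-memory", "14G",
        "--executor-cores", "4",
        "--conf", "spark.yarn.maxAppAttempts=1",
        "--files", "/usr/hdp/current/spark-client/conf/hive-site.xml",
        app,
    ]
    return args


if __name__ == "__main__":
    # Print both variants as shell-quoted command lines for comparison.
    for use_conf in (True, False):
        argv = build_submit_args("my_app.py", 4, use_conf=use_conf)
        print(" ".join(shlex.quote(a) for a in argv))
```

The returned list can be passed to `subprocess.run` as-is, which avoids shell quoting problems when trying each variant in turn.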

Created 10-14-2017 09:50 AM

Hi @bkosaraju,

Thanks a lot for help.

I have checked points

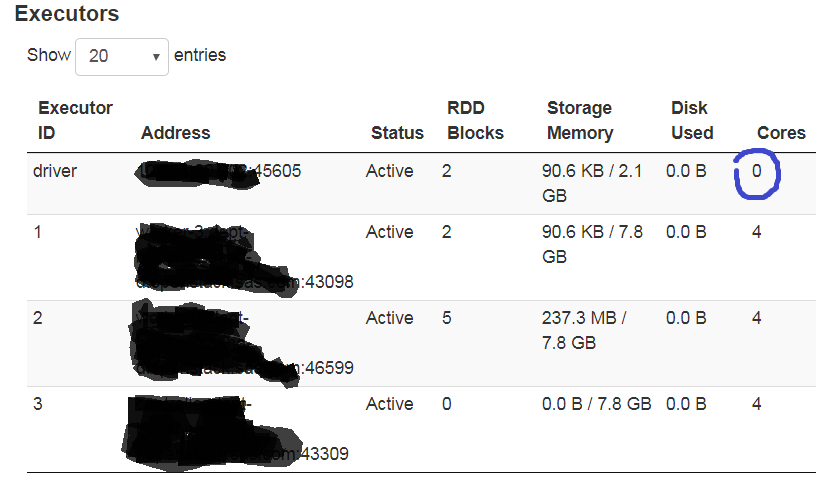

you suggested. Unfortunately it didn't help as number of driver cores is still reported to be 0. Is my expectation right that there should be exact number of

cores as provided in driver.cores property?

I am sure that dynamic allocation is on, as I can see executors removed during application runtime.

I attached a list of spark properties for ma application taken from Spark UI.
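The same properties can also be read from inside the application, which gives a second view alongside the Spark UI. The following is a sketch under stated assumptions: `effective_settings` is a hypothetical helper, the key list is my own selection of the settings discussed in this thread, and the sample values in the demo are invented, not taken from the attached list.

```python
# Settings discussed in this thread; the selection is an assumption.
KEYS = (
    "spark.driver.cores",
    "spark.driver.memory",
    "spark.executor.cores",
    "spark.executor.instances",
    "spark.dynamicAllocation.enabled",
)


def effective_settings(get):
    """Look up each key through a `get(key, default)` callable.

    Inside a PySpark job the callable could be
    `spark.sparkContext.getConf().get`; a plain `dict.get` also works,
    which keeps this sketch runnable without a cluster.
    """
    return {key: get(key, "<not set>") for key in KEYS}


if __name__ == "__main__":
    # Invented sample values, standing in for a real SparkConf.
    sample = {
        "spark.driver.cores": "4",
        "spark.dynamicAllocation.enabled": "true",
    }
    for key, value in effective_settings(sample.get).items():
        print(f"{key} = {value}")
```

If `spark.driver.cores` shows the requested value here while a different number is reported elsewhere, the property is reaching Spark and the discrepancy lies in how the cores are reported or granted.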