Null Pointer Exception in ambari-server logs after ambari-server restart - What could I do?

Created on 05-16-2017 03:30 PM - edited 08-17-2019 05:46 PM

Hi! I have an HDP-2.4 cluster installed on Google Cloud that had been working well until now, a month after the first installation. The installation was done following the steps given here. After installation, Ambari allowed me to add new services and monitor the cluster.

Shortly after the initial installation, I added Zeppelin without problems according to the instructions given here. There was no problem restarting Ambari, and Zeppelin worked just fine.

The issues started this past Friday, right after adding Hue following the instructions given here. After restarting the Ambari server and agents, the Ambari GUI reported "lost heartbeat" everywhere. Several restarts of both the Ambari server and the agents did not help, so I went to the log files.

The ambari-server.log is full of this repeating pattern:

15 May 2017 07:16:10,841 INFO [qtp-ambari-client-25] MetricsReportPropertyProvider:153 - METRICS_COLLECTOR is not live. Skip populating resources with metrics, next message will be logged after 1000 attempts.
15 May 2017 07:16:14,000 INFO [qtp-ambari-agent-3887] HeartBeatHandler:927 - agentOsType = centos6
15 May 2017 07:16:14,015 ERROR [qtp-ambari-client-26] ReadHandler:91 - Caught a runtime exception executing a query java.lang.NullPointerException
15 May 2017 07:16:14,016 WARN [qtp-ambari-client-26] ServletHandler:563 - /api/v1/clusters/hadoop/requests java.lang.NullPointerException
15 May 2017 07:16:14,067 INFO [qtp-ambari-agent-3887] HostImpl:285 - Received host registration, host=[hostname=hadoop-w-1,fqdn=hadoop-w-1.c.hdp-1-163209.internal,domain=c.hdp-1-163209.internal,architecture=x86_64,processorcount=2,physicalprocessorcount=2,osname=centos,osversion=6.8,osfamily=redhat,memory=7543344,uptime_hours=0,mounts=(available=37036076,mountpoint=/,used=11816500,percent=25%,size=51473368,device=/dev/sda1,type=ext4)(available=3771672,mountpoint=/dev/shm,used=0,percent=0%,size=3771672,device=tmpfs,type=tmpfs)(available=498928440,mountpoint=/mnt/pd1,used=2544104,percent=1%,size=528316088,device=/dev/sdb,type=ext4)] , registrationTime=1494832574000, agentVersion=2.2.1.0
15 May 2017 07:16:14,076 WARN [qtp-ambari-agent-3887] ServletHandler:563 - /agent/v1/register/hadoop-w-1.c.hdp-1-163209.internal java.lang.NullPointerException
15 May 2017 07:16:20,054 ERROR [qtp-ambari-client-22] ReadHandler:91 - Caught a runtime exception executing a query java.lang.NullPointerException
15 May 2017 07:16:20,055 WARN [qtp-ambari-client-22] ServletHandler:563 - /api/v1/clusters/hadoop/requests java.lang.NullPointerException
15 May 2017 07:16:22,139 INFO [qtp-ambari-agent-3863] HeartBeatHandler:927 - agentOsType = centos6
15 May 2017 07:16:22,156 INFO [qtp-ambari-agent-3863] HostImpl:285 - Received host registration, host=[hostname=hadoop-w-1,fqdn=hadoop-w-1.c.hdp-1-163209.internal,domain=c.hdp-1-163209.internal,architecture=x86_64,processorcount=2,physicalprocessorcount=2,osname=centos,osversion=6.8,osfamily=redhat,memory=7543344,uptime_hours=0,mounts=(available=37036076,mountpoint=/,used=11816500,percent=25%,size=51473368,device=/dev/sda1,type=ext4)(available=3771672,mountpoint=/dev/shm,used=0,percent=0%,size=3771672,device=tmpfs,type=tmpfs)(available=498928440,mountpoint=/mnt/pd1,used=2544104,percent=1%,size=528316088,device=/dev/sdb,type=ext4)] , registrationTime=1494832582139, agentVersion=2.2.1.0
15 May 2017 07:16:22,159 WARN [qtp-ambari-agent-3863] ServletHandler:563 - /agent/v1/register/hadoop-w-1.c.hdp-1-163209.internal java.lang.NullPointerException
15 May 2017 07:16:25,161 INFO [qtp-ambari-client-22] MetricsReportPropertyProvider:153 - METRICS_COLLECTOR is not live. Skip populating resources with metrics, next message will be logged after 1000 attempts.
15 May 2017 07:16:25,174 INFO [qtp-ambari-client-21] MetricsReportPropertyProvider:153 - METRICS_COLLECTOR is not live. Skip populating resources with metrics, next message will be logged after 1000 attempts.
15 May 2017 07:16:26,093 ERROR [qtp-ambari-client-22] ReadHandler:91 - Caught a runtime exception executing a query java.lang.NullPointerException
15 May 2017 07:16:26,094 WARN [qtp-ambari-client-22] ServletHandler:563 - /api/v1/clusters/hadoop/requests java.lang.NullPointerException

The ambari-server.out is full of:

May 15, 2017 7:16:59 AM com.sun.jersey.spi.container.ContainerResponse mapMappableContainerException
SEVERE: The RuntimeException could not be mapped to a response, re-throwing to the HTTP container
java.lang.NullPointerException

The ambari-agent.log is full of:

INFO 2017-05-15 07:17:57,014 security.py:99 - SSL Connect being called.. connecting to the server
ERROR 2017-05-15 07:17:57,015 Controller.py:197 - Unable to connect to: https://hadoop-m:8441/agent/v1/register/hadoop-m.c.hdp-1-163209.internal
Traceback (most recent call last):
  File "/usr/lib/python2.6/site-packages/ambari_agent/Controller.py", line 150, in registerWithServer
    ret = self.sendRequest(self.registerUrl, data)
  File "/usr/lib/python2.6/site-packages/ambari_agent/Controller.py", line 425, in sendRequest
    raise IOError('Request to {0} failed due to {1}'.format(url, str(exception)))
IOError: Request to https://hadoop-m:8441/agent/v1/register/hadoop-m.c.hdp-1-163209.internal failed due to [Errno 111] Connection refused
ERROR 2017-05-15 07:17:57,015 Controller.py:198 - Error:Request to https://hadoop-m:8441/agent/v1/register/hadoop-m.c.hdp-1-163209.internal failed due to [Errno 111] Connection refused
WARNING 2017-05-15 07:17:57,015 Controller.py:199 - Sleeping for 18 seconds and then trying again

The ambari-agent.out is full of:

ERROR 2017-05-15 06:57:24,691 Controller.py:197 - Unable to connect to: https://hadoop-m:8441/agent/v1/register/hadoop-m.c.hdp-1-163209.internal Traceback (most recent call last): File "/usr/lib/python2.6/site-packages/ambari_agent/Controller.py", line 150, in registerWithServer ret = self.sendRequest(self.registerUrl, data) File "/usr/lib/python2.6/site-packages/ambari_agent/Controller.py", line 428, in sendRequest + '; Response: ' + str(response)) IOError: Response parsing failed! Request data: {"hardwareProfile": {"kernel": "Linux", "domain": "c.hdp-1-163209.internal", "physicalprocessorcount": 4, "kernelrelease": "2.6.32-642.15.1.el6.x86_64", "uptime_days": "0", "memorytotal": 15300920, "swapfree": "0.00 GB", "memorysize": 15300920, "osfamily": "redhat", "swapsize": "0.00 GB", "processorcount": 4, "netmask": "255.255.255.255", "timezone": "UTC", "hardwareisa": "x86_64", "memoryfree": 14113788, "operatingsystem": "centos", "kernelmajversion": "2.6", "kernelversion": "2.6.32", "macaddress": "42:01:0A:84:00:04", "operatingsystemrelease": "6.8", "ipaddress": "10.132.0.4", "hostname": "hadoop-m", "uptime_hours": "0", "fqdn": "hadoop-m.c.hdp-1-163209.internal", "id": "root", "architecture": "x86_64", "selinux": false, "mounts": [{"available": "37748016", "used": "11104560", "percent": "23%", "device": "/dev/sda1", "mountpoint": "/", "type": "ext4", "size": "51473368"}, {"available": "7650460", "used": "0", "percent": "0%", "device": "tmpfs", "mountpoint": "/dev/shm", "type": "tmpfs", "size": "7650460"}, {"available": "499130312", "used": "2342232", "percent": "1%", "device": "/dev/sdb", "mountpoint": "/mnt/pd1", "type": "ext4", "size": "528316088"}], "hardwaremodel": "x86_64", "uptime_seconds": "109", "interfaces": "eth0,lo"}, "currentPingPort": 8670, "prefix": "/var/lib/ambari-agent/data", "agentVersion": "2.2.1.0", "agentEnv": {"transparentHugePage": "never", "hostHealth": {"agentTimeStampAtReporting": 1494831444579, "activeJavaProcs": [], "liveServices": 
[{"status": "Healthy", "name": "ntpd", "desc": ""}]}, "reverseLookup": true, "alternatives": [], "umask": "18", "firewallName": "iptables", "stackFoldersAndFiles": [{"type": "directory", "name": "/etc/hadoop"}, {"type": "directory", "name": "/etc/hbase"}, {"type": "directory", "name": "/etc/hive"}, {"type": "directory", "name": "/etc/ganglia"}, {"type": "directory", "name": "/etc/oozie"}, {"type": "directory", "name": "/etc/sqoop"}, {"type": "directory", "name": "/etc/zookeeper"}, {"type": "directory", "name": "/etc/flume"}, {"type": "directory", "name": "/etc/storm"}, {"type": "directory", "name": "/etc/hive-hcatalog"}, {"type": "directory", "name": "/etc/tez"}, {"type": "directory", "name": "/etc/falcon"}, {"type": "directory", "name": "/etc/hive-webhcat"}, {"type": "directory", "name": "/etc/kafka"}, {"type": "directory", "name": "/etc/slider"}, {"type": "directory", "name": "/etc/storm-slider-client"}, {"type": "directory", "name": "/etc/mahout"}, {"type": "directory", "name": "/etc/spark"}, {"type": "directory", "name": "/etc/pig"}, {"type": "directory", "name": "/etc/accumulo"}, {"type": "directory", "name": "/etc/ambari-metrics-monitor"}, {"type": "directory", "name": "/etc/atlas"}, {"type": "directory", "name": "/var/run/hadoop"}, {"type": "directory", "name": "/var/run/hbase"}, {"type": "directory", "name": "/var/run/hive"}, {"type": "directory", "name": "/var/run/ganglia"}, {"type": "directory", "name": "/var/run/oozie"}, {"type": "directory", "name": "/var/run/sqoop"}, {"type": "directory", "name": "/var/run/zookeeper"}, {"type": "directory", "name": "/var/run/flume"}, {"type": "directory", "name": "/var/run/storm"}, {"type": "directory", "name": "/var/run/hive-hcatalog"}, {"type": "directory", "name": "/var/run/falcon"}, {"type": "directory", "name": "/var/run/webhcat"}, {"type": "directory", "name": "/var/run/hadoop-yarn"}, {"type": "directory", "name": "/var/run/hadoop-mapreduce"}, {"type": "directory", "name": "/var/run/kafka"}, {"type": "directory", 
"name": "/var/run/spark"}, {"type": "directory", "name": "/var/run/accumulo"}, {"type": "directory", "name": "/var/run/ambari-metrics-monitor"}, {"type": "directory", "name": "/var/run/atlas"}, {"type": "directory", "name": "/var/log/hadoop"}, {"type": "directory", "name": "/var/log/hbase"}, {"type": "directory", "name": "/var/log/hive"}, {"type": "directory", "name": "/var/log/oozie"}, {"type": "directory", "name": "/var/log/sqoop"}, {"type": "directory", "name": "/var/log/zookeeper"}, {"type": "directory", "name": "/var/log/flume"}, {"type": "directory", "name": "/var/log/storm"}, {"type": "directory", "name": "/var/log/hive-hcatalog"}, {"type": "directory", "name": "/var/log/falcon"}, {"type": "directory", "name": "/var/log/hadoop-yarn"}, {"type": "directory", "name": "/var/log/hadoop-mapreduce"}, {"type": "directory", "name": "/var/log/kafka"}, {"type": "directory", "name": "/var/log/spark"}, {"type": "directory", "name": "/var/log/accumulo"}, {"type": "directory", "name": "/var/log/ambari-metrics-monitor"}, {"type": "directory", "name": "/var/log/atlas"}, {"type": "directory", "name": "/usr/lib/flume"}, {"type": "directory", "name": "/usr/lib/storm"}, {"type": "directory", "name": "/var/lib/hive"}, {"type": "directory", "name": "/var/lib/ganglia"}, {"type": "directory", "name": "/var/lib/oozie"}, {"type": "sym_link", "name": "/var/lib/hdfs"}, {"type": "directory", "name": "/var/lib/flume"}, {"type": "directory", "name": "/var/lib/hadoop-hdfs"}, {"type": "directory", "name": "/var/lib/hadoop-yarn"}, {"type": "directory", "name": "/var/lib/hadoop-mapreduce"}, {"type": "directory", "name": "/var/lib/slider"}, {"type": "directory", "name": "/var/lib/ganglia-web"}, {"type": "directory", "name": "/var/lib/spark"}, {"type": "directory", "name": "/var/lib/atlas"}, {"type": "directory", "name": "/hadoop/zookeeper"}, {"type": "directory", "name": "/hadoop/hdfs"}, {"type": "directory", "name": "/hadoop/storm"}, {"type": "directory", "name": "/hadoop/falcon"}, {"type": 
"directory", "name": "/hadoop/yarn"}, {"type": "directory", "name": "/kafka-logs"}], "existingUsers": [{"status": "Available", "name": "hadoop", "homeDir": "/home/hadoop"}, {"status": "Available", "name": "oozie", "homeDir": "/home/oozie"}, {"status": "Available", "name": "hive", "homeDir": "/home/hive"}, {"status": "Available", "name": "ambari-qa", "homeDir": "/home/ambari-qa"}, {"status": "Available", "name": "flume", "homeDir": "/home/flume"}, {"status": "Available", "name": "hdfs", "homeDir": "/home/hdfs"}, {"status": "Available", "name": "storm", "homeDir": "/home/storm"}, {"status": "Available", "name": "spark", "homeDir": "/home/spark"}, {"status": "Available", "name": "mapred", "homeDir": "/home/mapred"}, {"status": "Available", "name": "accumulo", "homeDir": "/home/accumulo"}, {"status": "Available", "name": "hbase", "homeDir": "/home/hbase"}, {"status": "Available", "name": "tez", "homeDir": "/home/tez"}, {"status": "Available", "name": "zookeeper", "homeDir": "/home/zookeeper"}, {"status": "Available", "name": "mahout", "homeDir": "/home/mahout"}, {"status": "Available", "name": "kafka", "homeDir": "/home/kafka"}, {"status": "Available", "name": "falcon", "homeDir": "/home/falcon"}, {"status": "Available", "name": "sqoop", "homeDir": "/home/sqoop"}, {"status": "Available", "name": "yarn", "homeDir": "/home/yarn"}, {"status": "Available", "name": "hcat", "homeDir": "/home/hcat"}, {"status": "Available", "name": "ams", "homeDir": "/home/ams"}, {"status": "Available", "name": "atlas", "homeDir": "/home/atlas"}], "firewallRunning": false}, "timestamp": 1494831444521, "hostname": "hadoop-m.c.hdp-1-163209.internal", "responseId": -1, "publicHostname": "hadoop-m.c.hdp-1-163209.internal"}; Response: <html> <head> <meta http-equiv="Content-Type" content="text/html;charset=ISO-8859-1"/> <title>Error 500 Server Error</title> </head> <body> <h2>HTTP ERROR: 500</h2> <p>Problem accessing /agent/v1/register/hadoop-m.c.hdp-1-163209.internal. 
Reason: <pre> Server Error</pre></p> <hr /><i><small>Powered by Jetty://</small></i> p.p1 {margin: 0.0px 0.0px 0.0px 0.0px; font: 11.0px Menlo; color: #000000; background-color: #ffffff} p.p2 {margin: 0.0px 0.0px 0.0px 0.0px; font: 11.0px Menlo; color: #000000; background-color: #ffffff; min-height: 13.0px} span.s1 {font-variant-ligatures: no-common-ligatures} </body> </html> ERROR 2017-05-15 06:57:24,694 Controller.py:198 - Error:Response parsing failed! Request data: {"hardwareProfile": {"kernel": "Linux", "domain": "c.hdp-1-163209.internal", "physicalprocessorcount": 4, "kernelrelease": "2.6.32-642.15.1.el6.x86_64", "uptime_days": "0", "memorytotal": 15300920, "swapfree": "0.00 GB", "memorysize": 15300920, "osfamily": "redhat", "swapsize": "0.00 GB", "processorcount": 4, "netmask": "255.255.255.255", "timezone": "UTC", "hardwareisa": "x86_64", "memoryfree": 14113788, "operatingsystem": "centos", "kernelmajversion": "2.6", "kernelversion": "2.6.32", "macaddress": "42:01:0A:84:00:04", "operatingsystemrelease": "6.8", "ipaddress": "10.132.0.4", "hostname": "hadoop-m", "uptime_hours": "0", "fqdn": "hadoop-m.c.hdp-1-163209.internal", "id": "root", "architecture": "x86_64", "selinux": false, "mounts": [{"available": "37748016", "used": "11104560", "percent": "23%", "device": "/dev/sda1", "mountpoint": "/", "type": "ext4", "size": "51473368"}, {"available": "7650460", "used": "0", "percent": "0%", "device": "tmpfs", "mountpoint": "/dev/shm", "type": "tmpfs", "size": "7650460"}, {"available": "499130312", "used": "2342232", "percent": "1%", "device": "/dev/sdb", "mountpoint": "/mnt/pd1", "type": "ext4", "size": "528316088"}], "hardwaremodel": "x86_64", "uptime_seconds": "109", "interfaces": "eth0,lo"}, "currentPingPort": 8670, "prefix": "/var/lib/ambari-agent/data", "agentVersion": "2.2.1.0", "agentEnv": {"transparentHugePage": "never", "hostHealth": {"agentTimeStampAtReporting": 1494831444579, "activeJavaProcs": [], "liveServices": [{"status": "Healthy", "name": 
"ntpd", "desc": ""}]}, "reverseLookup": true, "alternatives": [], "umask": "18", "firewallName": "iptables", "stackFoldersAndFiles": [{"type": "directory", "name": "/etc/hadoop"}, {"type": "directory", "name": "/etc/hbase"}, {"type": "directory", "name": "/etc/hive"}, {"type": "directory", "name": "/etc/ganglia"}, {"type": "directory", "name": "/etc/oozie"}, {"type": "directory", "name": "/etc/sqoop"}, {"type": "directory", "name": "/etc/zookeeper"}, {"type": "directory", "name": "/etc/flume"}, {"type": "directory", "name": "/etc/storm"}, {"type": "directory", "name": "/etc/hive-hcatalog"}, {"type": "directory", "name": "/etc/tez"}, {"type": "directory", "name": "/etc/falcon"}, {"type": "directory", "name": "/etc/hive-webhcat"}, {"type": "directory", "name": "/etc/kafka"}, {"type": "directory", "name": "/etc/slider"}, {"type": "directory", "name": "/etc/storm-slider-client"}, {"type": "directory", "name": "/etc/mahout"}, {"type": "directory", "name": "/etc/spark"}, {"type": "directory", "name": "/etc/pig"}, {"type": "directory", "name": "/etc/accumulo"}, {"type": "directory", "name": "/etc/ambari-metrics-monitor"}, {"type": "directory", "name": "/etc/atlas"}, {"type": "directory", "name": "/var/run/hadoop"}, {"type": "directory", "name": "/var/run/hbase"}, {"type": "directory", "name": "/var/run/hive"}, {"type": "directory", "name": "/var/run/ganglia"}, {"type": "directory", "name": "/var/run/oozie"}, {"type": "directory", "name": "/var/run/sqoop"}, {"type": "directory", "name": "/var/run/zookeeper"}, {"type": "directory", "name": "/var/run/flume"}, {"type": "directory", "name": "/var/run/storm"}, {"type": "directory", "name": "/var/run/hive-hcatalog"}, {"type": "directory", "name": "/var/run/falcon"}, {"type": "directory", "name": "/var/run/webhcat"}, {"type": "directory", "name": "/var/run/hadoop-yarn"}, {"type": "directory", "name": "/var/run/hadoop-mapreduce"}, {"type": "directory", "name": "/var/run/kafka"}, {"type": "directory", "name": "/var/run/spark"}, 
{"type": "directory", "name": "/var/run/accumulo"}, {"type": "directory", "name": "/var/run/ambari-metrics-monitor"}, {"type": "directory", "name": "/var/run/atlas"}, {"type": "directory", "name": "/var/log/hadoop"}, {"type": "directory", "name": "/var/log/hbase"}, {"type": "directory", "name": "/var/log/hive"}, {"type": "directory", "name": "/var/log/oozie"}, {"type": "directory", "name": "/var/log/sqoop"}, {"type": "directory", "name": "/var/log/zookeeper"}, {"type": "directory", "name": "/var/log/flume"}, {"type": "directory", "name": "/var/log/storm"}, {"type": "directory", "name": "/var/log/hive-hcatalog"}, {"type": "directory", "name": "/var/log/falcon"}, {"type": "directory", "name": "/var/log/hadoop-yarn"}, {"type": "directory", "name": "/var/log/hadoop-mapreduce"}, {"type": "directory", "name": "/var/log/kafka"}, {"type": "directory", "name": "/var/log/spark"}, {"type": "directory", "name": "/var/log/accumulo"}, {"type": "directory", "name": "/var/log/ambari-metrics-monitor"}, {"type": "directory", "name": "/var/log/atlas"}, {"type": "directory", "name": "/usr/lib/flume"}, {"type": "directory", "name": "/usr/lib/storm"}, {"type": "directory", "name": "/var/lib/hive"}, {"type": "directory", "name": "/var/lib/ganglia"}, {"type": "directory", "name": "/var/lib/oozie"}, {"type": "sym_link", "name": "/var/lib/hdfs"}, {"type": "directory", "name": "/var/lib/flume"}, {"type": "directory", "name": "/var/lib/hadoop-hdfs"}, {"type": "directory", "name": "/var/lib/hadoop-yarn"}, {"type": "directory", "name": "/var/lib/hadoop-mapreduce"}, {"type": "directory", "name": "/var/lib/slider"}, {"type": "directory", "name": "/var/lib/ganglia-web"}, {"type": "directory", "name": "/var/lib/spark"}, {"type": "directory", "name": "/var/lib/atlas"}, {"type": "directory", "name": "/hadoop/zookeeper"}, {"type": "directory", "name": "/hadoop/hdfs"}, {"type": "directory", "name": "/hadoop/storm"}, {"type": "directory", "name": "/hadoop/falcon"}, {"type": "directory", "name": 
"/hadoop/yarn"}, {"type": "directory", "name": "/kafka-logs"}], "existingUsers": [{"status": "Available", "name": "hadoop", "homeDir": "/home/hadoop"}, {"status": "Available", "name": "oozie", "homeDir": "/home/oozie"}, {"status": "Available", "name": "hive", "homeDir": "/home/hive"}, {"status": "Available", "name": "ambari-qa", "homeDir": "/home/ambari-qa"}, {"status": "Available", "name": "flume", "homeDir": "/home/flume"}, {"status": "Available", "name": "hdfs", "homeDir": "/home/hdfs"}, {"status": "Available", "name": "storm", "homeDir": "/home/storm"}, {"status": "Available", "name": "spark", "homeDir": "/home/spark"}, {"status": "Available", "name": "mapred", "homeDir": "/home/mapred"}, {"status": "Available", "name": "accumulo", "homeDir": "/home/accumulo"}, {"status": "Available", "name": "hbase", "homeDir": "/home/hbase"}, {"status": "Available", "name": "tez", "homeDir": "/home/tez"}, {"status": "Available", "name": "zookeeper", "homeDir": "/home/zookeeper"}, {"status": "Available", "name": "mahout", "homeDir": "/home/mahout"}, {"status": "Available", "name": "kafka", "homeDir": "/home/kafka"}, {"status": "Available", "name": "falcon", "homeDir": "/home/falcon"}, {"status": "Available", "name": "sqoop", "homeDir": "/home/sqoop"}, {"status": "Available", "name": "yarn", "homeDir": "/home/yarn"}, {"status": "Available", "name": "hcat", "homeDir": "/home/hcat"}, {"status": "Available", "name": "ams", "homeDir": "/home/ams"}, {"status": "Available", "name": "atlas", "homeDir": "/home/atlas"}], "firewallRunning": false}, "timestamp": 1494831444521, "hostname": "hadoop-m.c.hdp-1-163209.internal", "responseId": -1, "publicHostname": "hadoop-m.c.hdp-1-163209.internal"}; Response: <html> <head> <meta http-equiv="Content-Type" content="text/html;charset=ISO-8859-1"/> <title>Error 500 Server Error</title> </head> <body> <h2>HTTP ERROR: 500</h2> <p>Problem accessing /agent/v1/register/hadoop-m.c.hdp-1-163209.internal. 
Reason: <pre> Server Error</pre></p> <hr /><i><small>Powered by Jetty://</small></i> p.p1 {margin: 0.0px 0.0px 0.0px 0.0px; font: 11.0px Menlo; color: #000000; background-color: #ffffff} p.p2 {margin: 0.0px 0.0px 0.0px 0.0px; font: 11.0px Menlo; color: #000000; background-color: #ffffff; min-height: 13.0px} span.s1 {font-variant-ligatures: no-common-ligatures} </body> </html> WARNING 2017-05-15 06:57:24,694 Controller.py:199 - Sleeping for 24 seconds and then trying again WARNING 2017-05-15 06:57:37,965 base_alert.py:417 - [Alert][namenode_rpc_latency] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:37,966 base_alert.py:417 - [Alert][namenode_hdfs_blocks_health] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:37,970 base_alert.py:417 - [Alert][namenode_hdfs_pending_deletion_blocks] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:37,972 base_alert.py:417 - [Alert][namenode_webui] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:37,973 base_alert.py:417 - [Alert][datanode_health_summary] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:37,977 base_alert.py:140 - [Alert][namenode_rpc_latency] Unable to execute alert. [Alert][namenode_rpc_latency] Unable to extract JSON from JMX response WARNING 2017-05-15 06:57:37,980 base_alert.py:140 - [Alert][namenode_hdfs_blocks_health] Unable to execute alert. [Alert][namenode_hdfs_blocks_health] Unable to extract JSON from JMX response WARNING 2017-05-15 06:57:37,982 base_alert.py:140 - [Alert][namenode_hdfs_pending_deletion_blocks] Unable to execute alert. 
[Alert][namenode_hdfs_pending_deletion_blocks] Unable to extract JSON from JMX response WARNING 2017-05-15 06:57:37,984 base_alert.py:417 - [Alert][namenode_hdfs_capacity_utilization] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:37,986 base_alert.py:140 - [Alert][datanode_health_summary] Unable to execute alert. [Alert][datanode_health_summary] Unable to extract JSON from JMX response INFO 2017-05-15 06:57:37,986 logger.py:67 - Mount point for directory /hadoop/hdfs/data is / WARNING 2017-05-15 06:57:37,990 base_alert.py:417 - [Alert][namenode_directory_status] HA nameservice value is present but there are no aliases for {{hdfs-site/dfs.ha.namenodes.{{ha-nameservice}}}} WARNING 2017-05-15 06:57:38,004 base_alert.py:140 - [Alert][regionservers_health_summary] Unable to execute alert. [Alert][regionservers_health_summary] Unable to extract JSON from JMX response WARNING 2017-05-15 06:57:38,005 base_alert.py:140 - [Alert][datanode_storage] Unable to execute alert. [Alert][datanode_storage] Unable to extract JSON from JMX response WARNING 2017-05-15 06:57:38,007 base_alert.py:140 - [Alert][namenode_hdfs_capacity_utilization] Unable to execute alert. [Alert][namenode_hdfs_capacity_utilization] Unable to extract JSON from JMX response WARNING 2017-05-15 06:57:38,012 base_alert.py:140 - [Alert][namenode_directory_status] Unable to execute alert. 
[Alert][namenode_directory_status] Unable to extract JSON from JMX response INFO 2017-05-15 06:57:38,016 logger.py:67 - Pid file /var/run/ambari-metrics-monitor/ambari-metrics-monitor.pid is empty or does not exist ERROR 2017-05-15 06:57:38,017 script_alert.py:112 - [Alert][yarn_nodemanager_health] Failed with result CRITICAL: ['Connection failed to http://hadoop-m.c.hdp-1-163209.internal:8042/ws/v1/node/info (Traceback (most recent call last):\n File "/var/lib/ambari-agent/cache/common-services/YARN/2.1.0.2.0/package/alerts/alert_nodemanager_health.py", line 165, in execute\n url_response = urllib2.urlopen(query, timeout=connection_timeout)\n File "/usr/lib64/python2.6/urllib2.py", line 126, in urlopen\n return _opener.open(url, data, timeout)\n File "/usr/lib64/python2.6/urllib2.py", line 391, in open\n response = self._open(req, data)\n File "/usr/lib64/python2.6/urllib2.py", line 409, in _open\n \'_open\', req)\n File "/usr/lib64/python2.6/urllib2.py", line 369, in _call_chain\n result = func(*args)\n File "/usr/lib64/python2.6/urllib2.py", line 1190, in http_open\n return self.do_open(httplib.HTTPConnection, req)\n File "/usr/lib64/python2.6/urllib2.py", line 1165, in do_open\n raise URLError(err)\nURLError: <urlopen error [Errno 111] Connection refused>\n)'] ERROR 2017-05-15 06:57:38,017 script_alert.py:112 - [Alert][ams_metrics_monitor_process] Failed with result CRITICAL: ['Ambari Monitor is NOT running on hadoop-m.c.hdp-1-163209.internal']

The log files are getting pretty big, especially the ambari-server.log:

@hadoop-m ~]$ ls -l /var/log/ambari-server/
total 53912
-rw-r--r--. 1 root root   132186 May 14 04:00 ambari-alerts.log
-rw-r--r--. 1 root root    26258 May  3 13:35 ambari-config-changes.log
-rw-r--r--. 1 root root    38989 May 12 15:46 ambari-eclipselink.log
-rw-r--r--. 1 root root 45011535 May 15 07:17 ambari-server.log
-rw-r--r--. 1 root root  9967341 May 15 07:17 ambari-server.out

The cluster is still in the preparation phase and is therefore small: just a master node and two worker nodes. Restarting the virtual machines corresponding to each node did not help either.

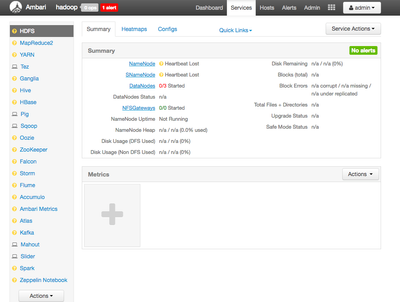

The ambari GUI with "lost heartbeat" everywhere looks like this e.g. for HDFS:

I've tried every single trick that I've found in community posts, although I haven't seen any report (yet) with a problem like mine, i.e., with NPE and heartbeat loss after ambari-server (and agents) restart.

Thanks in advance for comments and possible guidance.

Created 05-16-2017 03:47 PM

The issue that you are facing indicates Ambari DB corruption. As we can see, the /api/v1/clusters/hadoop/requests URL itself is throwing a NullPointerException, so the server will not be able to process any further requests.

15 May 2017 07:16:14,016 WARN [qtp-ambari-client-26] ServletHandler:563 - /api/v1/clusters/hadoop/requests java.lang.NullPointerException
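To see which endpoints are affected and how often, the NullPointerException warnings can be tallied per request path. The following is only a rough sketch, assuming Python 3 is available on the machine where you inspect the log; the function names are made up for illustration, and the pattern is modelled on the ServletHandler lines quoted in the question.

```python
import re
from collections import Counter

# Matches ServletHandler warnings of the form quoted in the question:
#   "... ServletHandler:563 - <path> java.lang.NullPointerException"
# \s+ also covers the case where the exception is on the following line.
NPE_PATTERN = re.compile(
    r"ServletHandler:\d+ - (\S+)\s+java\.lang\.NullPointerException"
)


def count_npe_endpoints(log_text):
    """Return a Counter mapping request path -> number of NPE warnings."""
    return Counter(NPE_PATTERN.findall(log_text))


def summarize(log_path):
    """Print the NPE count per request path, most frequent first."""
    with open(log_path, errors="replace") as handle:
        counts = count_npe_endpoints(handle.read())
    for path, hits in counts.most_common():
        print(f"{hits:6d}  {path}")


# Example call (path taken from the directory listing in the question):
# summarize("/var/log/ambari-server/ambari-server.log")
```

If both the /requests path and the agent /register paths show up, that would be consistent with the server failing on every request rather than on one particular host.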

If you started noticing this issue after installing the HUE service, then please delete the HUE service from the Ambari database completely by running the following queries on the Ambari DB, and then restart the Ambari server to see if that fixes your issue.

delete from hostcomponentstate where service_name = 'HUE';

delete from hostcomponentdesiredstate where service_name = 'HUE';

delete from servicecomponentdesiredstate where service_name = 'HUE';

delete from servicedesiredstate where service_name = 'HUE';

delete from serviceconfighosts where service_config_id in (select service_config_id from serviceconfig where service_name = 'HUE');

delete from serviceconfigmapping where service_config_id in (select service_config_id from serviceconfig where service_name = 'HUE');

delete from serviceconfig where service_name = 'HUE';

delete from requestresourcefilter where service_name = 'HUE';

delete from requestoperationlevel where service_name = 'HUE';

delete from clusterservices where service_name = 'HUE';

delete from clusterconfig where type_name like 'hue%';

delete from clusterconfigmapping where type_name like 'hue%';

NOTE: Please take an Ambari database dump before manipulating the Ambari DB.
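A dump can be scripted along these lines. This is a hedged sketch, not a definitive procedure: it assumes the Ambari DB is PostgreSQL (the ambari=> prompt elsewhere in this thread suggests a database named ambari), that the database user is also called ambari, and that pg_dump is on the PATH. The helper names and the output directory are hypothetical.

```python
import datetime
import subprocess


def build_dump_command(db_name="ambari", db_user="ambari",
                       out_dir="/tmp", now=None):
    """Build a pg_dump command that writes a timestamped plain-SQL dump.

    db_name, db_user and out_dir are assumptions; adjust them to your setup.
    """
    now = now or datetime.datetime.now()
    stamp = now.strftime("%Y%m%d-%H%M%S")
    out_file = f"{out_dir}/{db_name}-backup-{stamp}.sql"
    # -U selects the database user, -f the output file; the database
    # name is passed as the positional argument.
    return ["pg_dump", "-U", db_user, "-f", out_file, db_name]


def dump_database(**kwargs):
    """Run pg_dump and return the path of the dump file."""
    command = build_dump_command(**kwargs)
    # check=True raises CalledProcessError if pg_dump exits non-zero,
    # so a failed backup is not silently ignored.
    subprocess.run(command, check=True)
    return command[4]


# Example call (not executed here):
# print(dump_database())
```

pg_dump may prompt for the database password unless it is supplied through the usual PostgreSQL mechanisms. Only run the delete statements once the dump file exists and is non-empty.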

Created 05-16-2017 04:32 PM

Thanks for the suggestions. The problem is that my HUE installation did not get that far.

I only had time to run:

sudo git clone https://github.com/EsharEditor/ambari-hue-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE

And then:

service ambari-server restart

And the problems started. I could log in to Ambari Web, but there were no heartbeats, it was obviously not possible to add the HUE service, and it was basically not possible to do almost anything.

As I mentioned in my initial question, before attempting to install HUE via Ambari, I did install Zeppelin, also via Ambari. That installation seemed to go well: ambari-server started fine that time and the Zeppelin UI works well. Before Zeppelin, I don't recall having added any services other than the ones I chose during the installation of HDP-2.4 on Google Cloud.

Hmm.

Created 05-16-2017 07:06 PM

Btw, for the record, related to my previous comment and your suggestions:

As guessed, no HUE entries are found in the Ambari DB, e.g.:

ambari=> select * from hostcomponentstate where service_name = 'HUE';
 id | cluster_id | component_name | version | current_stack_id | current_state | host_id | service_name | upgrade_state | security_state
----+------------+----------------+---------+------------------+---------------+---------+--------------+---------------+----------------
(0 rows)

whereas there are entries for Zeppelin, e.g.:

ambari=> select * from hostcomponentstate where service_name = 'ZEPPELIN';
 id  | cluster_id | component_name  | version | current_stack_id | current_state | host_id | service_name | upgrade_state | security_state
-----+------------+-----------------+---------+------------------+---------------+---------+--------------+---------------+----------------
 101 |          2 | ZEPPELIN_MASTER | UNKNOWN |                1 | UNKNOWN       |       2 | ZEPPELIN     | NONE          | UNKNOWN
(1 row)

Created 05-16-2017 03:53 PM

Additionally, we see that you are getting the following error:

Unable to connect to: https://hadoop-m:8441/agent/v1/register/hadoop-m.c.hdp-1-163209.internal

This indicates that either the hostnames of your Ambari agent hosts are not set up properly, or the agents cannot communicate with the Ambari server on the ports mentioned. Please check whether you can access the following Ambari server ports from the agent machines:

# telnet hadoop-m 8441
# telnet hadoop-m 8440
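If telnet is not installed on the agent machines, the same reachability test can be sketched with bash's built-in `/dev/tcp` redirection. The function name `port_open` and the 3-second timeout are assumptions chosen for illustration; `timeout` comes from GNU coreutils.

```shell
# Hypothetical helper: succeed if a TCP connection to host:port opens
# within 3 seconds. Relies on bash's /dev/tcp and coreutils' timeout.
port_open() {
    # Inside the inner bash, $0 is the host and $1 is the port.
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Check both Ambari agent ports on the server host from this thread.
for port in 8440 8441; do
    if port_open hadoop-m "$port"; then
        echo "hadoop-m:$port reachable"
    else
        echo "hadoop-m:$port NOT reachable"
    fi
done
```

This only shows that the port accepts TCP connections; it says nothing about whether the registration request itself succeeds.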

Also, every host in the cluster should be configured with a proper FQDN/hostname, so please check that the /etc/hosts file on every Ambari agent machine has the proper entry. The following command should also return the proper FQDN when run on the ambari-agent hosts:

# hostname -f

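A rough way to script that check is to test whether the reported name contains a domain part at all. The helper name `is_fqdn` is hypothetical, and the test is only a heuristic: it does not verify that the name resolves or matches /etc/hosts.

```shell
# Hypothetical helper: treat a name as fully qualified if it contains at
# least one dot, with no leading dot, trailing dot or empty label.
is_fqdn() {
    case "$1" in
        .*|*.|*..*) return 1 ;;
        *.*) return 0 ;;
        *) return 1 ;;
    esac
}

# Ask the local machine for its name; fall back to empty if that fails.
name="$(hostname -f 2>/dev/null || true)"
if is_fqdn "$name"; then
    echo "'$name' looks fully qualified"
else
    echo "'$name' does not look fully qualified; check /etc/hosts"
fi
```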

Also, please check from the Ambari agent machine whether you can connect to the Ambari server using the openssl command and see the server's certificate:

# openssl s_client -connect hadoop-m:8440 -tls1_2
OR
# openssl s_client -connect hadoop-m:8440
AND
# openssl s_client -connect hadoop-m:8441 -tls1_2
OR
# openssl s_client -connect hadoop-m:8441
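The openssl checks above can be made non-interactive by closing stdin and looking for a PEM certificate header in the output. This is a sketch under assumptions: the helper name `has_certificate` and the 5-second timeout are illustrative, and a certificate being present does not prove the agent will accept it.

```shell
# Hypothetical helper: read `openssl s_client` output on stdin and succeed
# if it contains a PEM certificate header.
has_certificate() {
    grep -q -F -e '-----BEGIN CERTIFICATE-----'
}

# Try both Ambari ports; </dev/null makes s_client exit after the handshake.
for port in 8440 8441; do
    if timeout 5 openssl s_client -connect "hadoop-m:$port" </dev/null 2>/dev/null | has_certificate; then
        echo "hadoop-m:$port presented a certificate"
    else
        echo "hadoop-m:$port presented no certificate"
    fi
done
```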

Created 05-16-2017 04:36 PM

Thanks for the pointers. In that case, I'd say it is a communication issue. I'll definitely have a look.

Created 05-16-2017 07:07 PM

Now that I think more about this, I guess that

Unable to connect to: https://hadoop-m:8441/agent/v1/register/hadoop-m.c.hdp-1-163209.internal

started to occur when ambari server started to throw NPEs, which I detected right after (re)starting it, which I did right after I copied HUE to ambari's stack with:

sudo git clone https://github.com/EsharEditor/ambari-hue-service.git /var/lib/ambari-server/resources/stacks/HDP/$VERSION/services/HUE

(which is the very first step of the guide for installing HUE via Ambari).
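To back up that timeline, the timestamp of the first NPE in the server log can be compared with the time of the restart. A minimal sketch follows; the helper name `first_npe` is made up, and the log path is Ambari's usual default, which may differ on a given installation.

```shell
# Hypothetical helper: print the first line mentioning a NullPointerException
# together with the line before it, which normally carries the timestamp.
first_npe() {
    grep -F -m 1 -B 1 'java.lang.NullPointerException' "$1"
}

# Assumed default log location; adjust if the server logs elsewhere.
log=/var/log/ambari-server/ambari-server.log
if [ -r "$log" ]; then
    first_npe "$log" || echo "no NullPointerException found in $log"
fi
```

If the first NPE appears only after the restart that followed the clone, that supports the idea that the new HUE directory triggered it.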

Regarding FQDNs, connectivity between the Ambari server and the Ambari agents, and the handshake/registration ports (8440/8441):

@hadoop-m

$ more /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.132.0.4 hadoop-m.c.hdp-1-163209.internal hadoop-m # Added by Google
169.254.169.254 metadata.google.internal # Added by Google

$ hostname -f
hadoop-m.c.hdp-1-163209.internal

@hadoop-w-0

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.132.0.2 hadoop-w-0.c.hdp-1-163209.internal hadoop-w-0 # Added by Google
169.254.169.254 metadata.google.internal # Added by Google

$ hostname -f
hadoop-w-0.c.hdp-1-163209.internal

$ telnet hadoop-m 8440
Trying 10.132.0.4...
Connected to hadoop-m.
Escape character is '^]'.

$ telnet hadoop-m 8441
Trying 10.132.0.4...
Connected to hadoop-m.
Escape character is '^]'.

$ openssl s_client -connect hadoop-m:8440
CONNECTED(00000003)
(... I removed lines ...)
---
Server certificate
-----BEGIN CERTIFICATE-----
MIIFnDCCA4SgAwIBAgIBATAN (...I removed rest...)

$ openssl s_client -connect hadoop-m:8441
CONNECTED(00000003)
(... I removed lines ...)
-----BEGIN CERTIFICATE-----
MIIFnDCCA4SgAwIBAgIBAT (...I removed rest...)

@hadoop-w-1

$ more /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.132.0.3 hadoop-w-1.c.hdp-1-163209.internal hadoop-w-1 # Added by Google
169.254.169.254 metadata.google.internal # Added by Google

$ hostname -f
hadoop-w-1.c.hdp-1-163209.internal

$ telnet hadoop-m 8440
Trying 10.132.0.4...
Connected to hadoop-m.
Escape character is '^]'.

$ telnet hadoop-m 8441
Trying 10.132.0.4...
Connected to hadoop-m.
Escape character is '^]'.

$ openssl s_client -connect hadoop-m:8440
CONNECTED(00000003)
(... I removed lines ...)
---
Server certificate
-----BEGIN CERTIFICATE-----
MIIFnDCCA4SgAwIBAgIBATAN (...I removed rest...)

$ openssl s_client -connect hadoop-m:8441
CONNECTED(00000003)
(... I removed lines ...)
-----BEGIN CERTIFICATE-----
MIIFnDCCA4SgAwIBAgIBAT (...I removed rest...)

It looks as it should, doesn't it?