Optimization nifi delete

- Labels: Apache NiFi

Created on 02-08-2022 02:08 AM - edited 02-08-2022 02:49 AM

Hello,

I have one big table in one database that I have to sync to a destination.

First, I have a flow with an incremental insert, and it works fine. Then, in another flow, I have an incremental delete by the ID column.

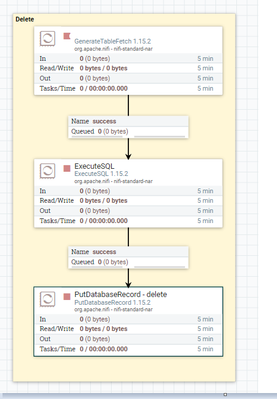

I am trying to delete 3179958 records from the table. I have one table on the source, and I have to do an incremental delete on the destination. This is my flow.

Here is the configuration of each processor separately:

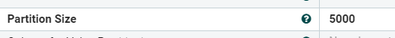

- generatetablefetch:

- execute sql:

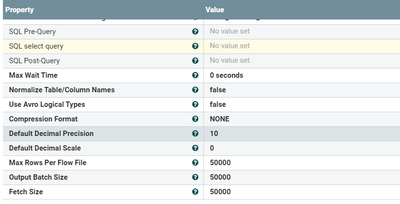

- putdatabaserecord - delete

When I have far fewer records, it all works fine. But now the flow can't finish. Is there any other solution?

Thanks in advance

Created 02-09-2022 02:46 AM

Hi, @LejlaKM ,

Here are some guidelines that should help with performance:

- On the database side, ensure that both tables (source and target) have a primary key enforced by an index, to guarantee uniqueness and good delete performance.

- GenerateTableFetch:

  - Columns to Return: specify only the primary key column (if the primary key is a composite key, specify the list of key columns separated by commas).

  - Maximum-value Columns: if the primary key increases monotonically, list the primary key column in this property too. If not, look for an alternative column that increases monotonically and specify it here. Without this, performance can be terrible.

  - Column for Value Partitioning: same as for "Maximum-value Columns".
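To illustrate why the partitioning column matters, here is a minimal Python sketch that contrasts row-number (offset) paging with paging by key ranges. The SQL strings are illustrative only and are not the statements NiFi actually generates; the table name `SRC_TABLE`, the column `ID`, and both helper functions are hypothetical.

```python
from typing import List


def offset_pages(table: str, key: str, total_rows: int, page_size: int) -> List[str]:
    """Row-number paging: the database typically has to order the rows
    and skip all preceding ones before it can return each page."""
    queries = []
    for offset in range(0, total_rows, page_size):
        queries.append(
            f"SELECT {key} FROM {table} ORDER BY {key} "
            f"OFFSET {offset} ROWS FETCH NEXT {page_size} ROWS ONLY"
        )
    return queries


def value_range_pages(
    table: str, key: str, min_value: int, max_value: int, page_size: int
) -> List[str]:
    """Value partitioning: each page is a key range, so an index on the
    key column can be used to seek straight to the start of the page."""
    queries = []
    for start in range(min_value, max_value + 1, page_size):
        end = start + page_size  # exclusive upper bound of this page
        queries.append(
            f"SELECT {key} FROM {table} WHERE {key} >= {start} AND {key} < {end}"
        )
    return queries


if __name__ == "__main__":
    # Hypothetical table and column names, small numbers for readability.
    for query in value_range_pages("SRC_TABLE", "ID", 1, 25, 10):
        print(query)
```

With offset paging, later pages tend to get more expensive because more rows have to be skipped each time. Key-range pages cost roughly the same regardless of position, provided the key column is indexed, which is also why the primary key index on both tables matters.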

Please let me know if this helps.

Regards,

André

Was your question answered? Please take some time to click on "Accept as Solution" below this post.

If you find a reply useful, say thanks by clicking on the thumbs up button.

Created 02-09-2022 08:06 AM

Hi,

Thank you very much.

Deleting 3179958 records now takes 6 minutes.

Created 02-08-2022 01:21 PM

What's the underlying database? Could you please share the full configuration of the PutDatabaseRecord processor?

Created 02-08-2022 11:10 PM

Hello,

It is an Oracle database.
