Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Oracle nifi big data

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Oracle nifi big data

- Labels:

-

Apache NiFi

Created 01-14-2022 04:09 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hello,

I need to move one big table (16 GB) from one oracle database to another. When I try to move table with more then 1 GB data, my nifi GUI crashes. Can you suggest me how to do it?

Thanks' in advance

Lejla

Created 01-14-2022 05:00 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What I understand : As soon as you start the processor ExecuteSQL to load the data from oracle to NiFI , after few min NiFi UI became unviable but when you check on host , NiFi process id shows running. if this the case then

This is a behaviour of resource constraints in NiFi with respect to memory and not the right way to read/load data from database.

Two things needs to be considered here .

1. Need to make sure NiFi os configured with right heap settings

2. Load the data in incremental manner.

NiFi can run various Sql loads with 8-16 GB of heap, so this much heap is required

Use GenerateTableFetch before ExecuteSQL and limit number of records in each flow file with settings "Max Rows Per Flow File" & "Output Batch Size" & Fetch Size.

With some test run iterations you need to determine what would be right value for above setting which does not create high heap usage.

Thank You.

Created 01-14-2022 07:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sharing your dataflow design and processor component configurations may help get you more/better responses to your query.

Things you will want to look at before and when you run this dataflow:

1. NiFi heap usage and general memory usage on the host

2. Disk I/O and Network I/O

3. NiFi Host CPU Utilization (If your flow consumes 100% of the CPU(s) during execution, this can lead to what you are observing. Does UI functionality return once copy is complete?)

4. Your dataflow design implementation including components used, configurations, concurrent tasks etc.

While most use cases can be accomplished through dataflow implementations within NiFi, not all use cases are a good fit for NiFi. IN this case your description points at copying a large Table from One Oracle DB to another. You made not mention of any filtering, modifying, enhancing, etc being done to the Table data between this move which is where NiFi would fit in. If your use case is a straight forward copying from A to B, then NiFi may not be the best fit for this specific us case as it will introduce unnecessary overhead to the process. NiFi ingest content and writes it a content_repository and creates FlowFiles with attributes/metadata about the ingested data stored in a FlowFile_repository. Then it has to read that content as it writes ti back out to a destination. For simple copy operations where not intermediate manipulation or routing of the DB contents needs to be done, a tool that directly streams from DB A to DB B would likely be much faster.

If you found this response assisted with your query, please take a moment to login and click on "Accept as Solution" below this post.

Thank you,

Matt

Created 01-14-2022 05:00 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What I understand : As soon as you start the processor ExecuteSQL to load the data from oracle to NiFI , after few min NiFi UI became unviable but when you check on host , NiFi process id shows running. if this the case then

This is a behaviour of resource constraints in NiFi with respect to memory and not the right way to read/load data from database.

Two things needs to be considered here .

1. Need to make sure NiFi os configured with right heap settings

2. Load the data in incremental manner.

NiFi can run various Sql loads with 8-16 GB of heap, so this much heap is required

Use GenerateTableFetch before ExecuteSQL and limit number of records in each flow file with settings "Max Rows Per Flow File" & "Output Batch Size" & Fetch Size.

With some test run iterations you need to determine what would be right value for above setting which does not create high heap usage.

Thank You.

Created 01-14-2022 05:06 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

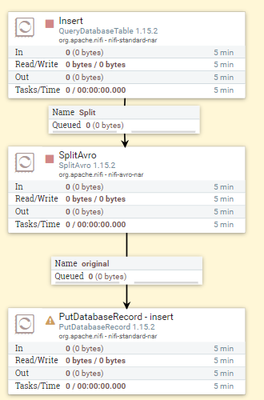

Than you I will try this. I'm new in nifi, so I make flow like this

So, I need GenerateTableFetch processor before QueryDatabaseTable? Am I right?

Created 01-14-2022 05:26 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for additional details , You can use QueryDatabaseTable or GenerateTableFetch with ExecuteSQL both will be fine, as said you need to limit number of records per flow file .

When you say "When I try to move table with more then 1 GB data, my nifi GUI crashes"does that mean 1 flow file size is 1GB ? in NiFi generate by QueryDatabaseTable ? Is this happening when loading into NiFi by QueryDatabaseTable or after SplitAvro ? or At PutDabaseRecord ?

Ideal way is to have small flow file size at the loading from DB by limiting setting in QueryDatabaseTable or GenerateTableFetch which ever you use so memory footprint will be less and records will be streamed into multiple flow files.

Thank You.

Created 01-14-2022 05:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you very very much. My nifi crashes on insert (processor PutDatabaseRecord). Now I will try set these values Max Rows Per Flow File" & "Output Batch Size" & Fetch Size and try again. If it works I let You know. Thank's again

Created 01-14-2022 05:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank You, If you found this response assisted with your query, please take a moment to login and click on "Accept as Solution" below this post.

Thank You.

Created 01-14-2022 07:06 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

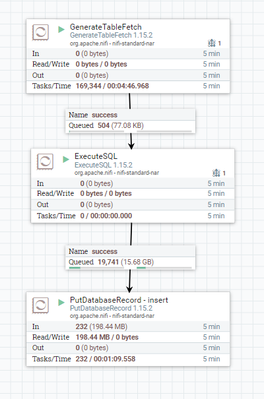

Thank you for everything. I try this and nifi doesn't crashes. Processor GenerateTableFetch finish work very quick, but insert into database (processor PutDatabaseRecod) is very slow. Can the process be somehow speeded up? I'm sending picture of my flow

Thank's in advance

Created 01-14-2022 07:24 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sharing your dataflow design and processor component configurations may help get you more/better responses to your query.

Things you will want to look at before and when you run this dataflow:

1. NiFi heap usage and general memory usage on the host

2. Disk I/O and Network I/O

3. NiFi Host CPU Utilization (If your flow consumes 100% of the CPU(s) during execution, this can lead to what you are observing. Does UI functionality return once copy is complete?)

4. Your dataflow design implementation including components used, configurations, concurrent tasks etc.

While most use cases can be accomplished through dataflow implementations within NiFi, not all use cases are a good fit for NiFi. IN this case your description points at copying a large Table from One Oracle DB to another. You made not mention of any filtering, modifying, enhancing, etc being done to the Table data between this move which is where NiFi would fit in. If your use case is a straight forward copying from A to B, then NiFi may not be the best fit for this specific us case as it will introduce unnecessary overhead to the process. NiFi ingest content and writes it a content_repository and creates FlowFiles with attributes/metadata about the ingested data stored in a FlowFile_repository. Then it has to read that content as it writes ti back out to a destination. For simple copy operations where not intermediate manipulation or routing of the DB contents needs to be done, a tool that directly streams from DB A to DB B would likely be much faster.

If you found this response assisted with your query, please take a moment to login and click on "Accept as Solution" below this post.

Thank you,

Matt