Cloudera Community: Support Questions

Oozie Spark action AM failure OOM (Application application_XX failed 2 times due to AM Container for appattempt_XX exited with exitCode: -104)

Labels: Apache Oozie, Apache Spark

Created on 06-15-2021 08:39 AM - edited 06-15-2021 10:53 AM

Hi,

I have been struggling to get my Oozie jobs, which run a single Spark action, to complete without an error. I am using client mode. When I run the Spark job from the command line with spark-submit there is no issue. From what I understand, this is because there I can set the driver memory, whereas via Oozie the launcher hosts the driver, so the launcher container needs to match the memory settings of my driver or it will fail.

I have read many blogs and articles, but none of them have helped:

- https://stackoverflow.com/questions/24262896/oozie-shell-action-memory-limit

- https://community.cloudera.com/t5/Support-Questions/Oozie-Spark-Action-getting-out-java-lang-OutOfMe...

- http://www.openkb.info/2016/07/memory-allocation-for-oozie-launcher-job.html

- https://stackoverflow.com/questions/42785649/oozie-workflow-with-spark-application-reports-out-of-me...

Part of the issue is that I don't know where in the XML these settings should go, and, because we create the XML via the UI, where in the UI I should enter them.

Possible places are:

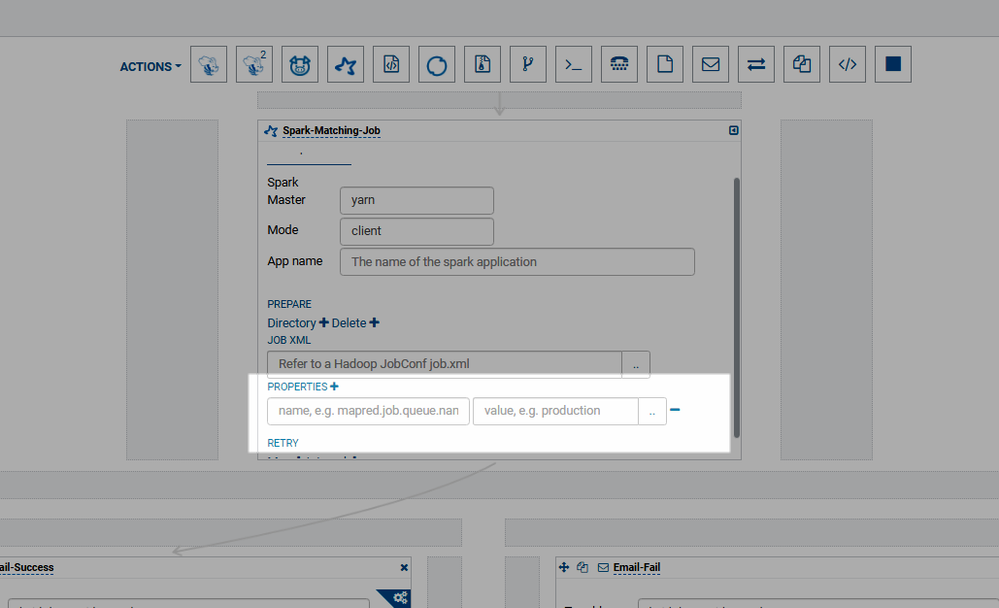

Here, in the Spark settings?

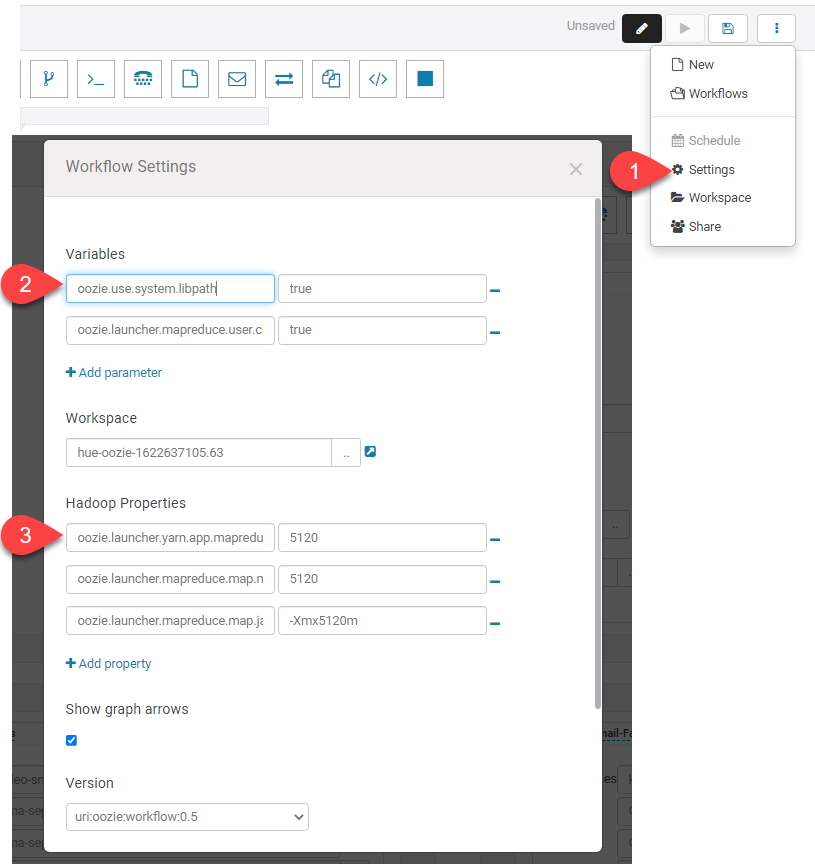

Or perhaps here, under the workflow settings?

Or maybe the settings themselves are wrong? The Oozie docs are not clear on this.

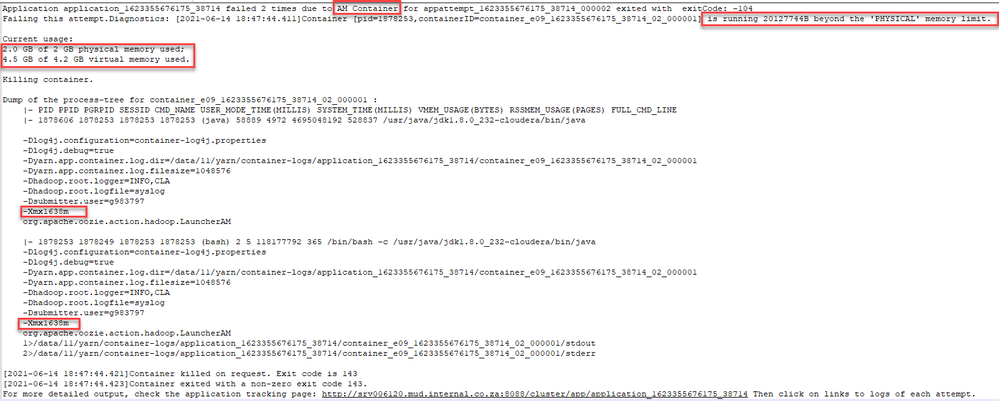

When the Oozie workflow fails, the error looks like this:

Application application_1623355676175_49420 failed 2 times due to AM Container for appattempt_1623355676175_49420_000002 exited with exitCode: -104

Failing this attempt.Diagnostics: [2021-06-15 16:38:17.747]Container [pid=1475386,containerID=container_e09_1623355676175_49420_02_000001] is running 5484544B beyond the 'PHYSICAL' memory limit. Current usage: 2.0 GB of 2 GB physical memory used; 4.4 GB of 4.2 GB virtual memory used. Killing container. ....
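For reference, exit code -104 is YARN's `KILLED_EXCEEDED_PMEM` status: the NodeManager killed the container for exceeding its physical memory limit. The two limits in the diagnostic are consistent with a launcher container of 2 GiB (2048 MiB) rather than the 5120 MB requested in the workflow's `<global>` settings. Assuming the NodeManager uses the default `yarn.nodemanager.vmem-pmem-ratio` of 2.1, the numbers work out as:

$$
\begin{aligned}
\text{physical limit} &= 2\,\text{GiB} = 2048\,\text{MiB} \\
\text{virtual limit} &= 2.1 \times 2\,\text{GiB} = 4.2\,\text{GiB} \\
\text{overshoot} &= 5\,484\,544\,\text{B} = \tfrac{5\,484\,544}{1\,048\,576}\,\text{MiB} \approx 5.23\,\text{MiB}
\end{aligned}
$$

In other words, the JVM went over a 2 GiB container by only about 5 MiB, which suggests the memory properties in the workflow were not being applied to the launcher container at all.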

I tried to make sense of the error.

My Oozie XML file looks like this:

```xml
<workflow-app name="WF - Spark Matching Job" xmlns="uri:oozie:workflow:0.5">
    <global>
        <configuration>
            <property>
                <name>oozie.launcher.yarn.app.mapreduce.am.resource.mb</name>
                <value>5120</value>
            </property>
            <property>
                <name>oozie.launcher.mapreduce.map.memory.mb</name>
                <value>5120</value>
            </property>
            <property>
                <name>oozie.launcher.mapreduce.map.java.opts</name>
                <value>-Xmx5120m</value>
            </property>
        </configuration>
    </global>
    <credentials>
        <credential name="hcat" type="hcat">
            <property>
                <name>hcat.metastore.uri</name>
                <value>thrift://XXXXXX:9083</value>
            </property>
            <property>
                <name>hcat.metastore.principal</name>
                <value>hive/srv006121.mud.internal.co.za@ANDROMEDA.CLOUDERA</value>
            </property>
        </credential>
        <credential name="hive2" type="hive2">
            <property>
                <name>hive2.jdbc.url</name>
                <value>jdbc:hive2://XXXXXXXX:10000/default</value>
            </property>
            <property>
                <name>hive2.server.principal</name>
                <value>XXXXX</value>
            </property>
        </credential>
    </credentials>
    <start to="spark-9223"/>
    <kill name="Kill">
        <message>Action failed, error message[${wf:errorMessage(wf:lastErrorNode())}]</message>
    </kill>
    <action name="spark-9223" cred="hive2,hcat">
        <spark xmlns="uri:oozie:spark-action:0.2">
            <job-tracker>${jobTracker}</job-tracker>
            <name-node>${nameNode}</name-node>
            <master>yarn</master>
            <mode>client</mode>
            <name></name>
            <class>za.co.sanlam.custhub.spark.SparkApp</class>
            <jar>party-matching-rules-1.0.0-SNAPSHOT-jar-with-dependencies.jar</jar>
            <spark-opts>--driver-memory 6g --driver-cores 2 --executor-memory 16g --executor-cores 5 --conf "spark.driver.extraJavaOptions=-Dlog4j.configuration=file:custom-spark-log4j.properties" --py-files hive-warehouse-connector-assembly-1.0.0.7.1.4.20-2.jar</spark-opts>
            <arg>-c</arg>
            <arg>hdfs:///user/g983797/spark-tests/andromeda/runs/sprint_12_1/metadata.json</arg>
            <arg>-e</arg>
            <arg>dev</arg>
            <arg>-v</arg>
            <arg>version-1.0.0</arg>
            <arg>-r</arg>
            <arg>ruleset1</arg>
            <arg>-dt</arg>
            <arg>1900-01-01</arg>
            <file>/user/g983797/spark-tests/andromeda/runs/sprint_12_1/party-matching-rules-1.0.0-SNAPSHOT-jar-with-dependencies.jar#party-matching-rules-1.0.0-SNAPSHOT-jar-with-dependencies.jar</file>
            <file>/user/g983797/spark-tests/hive-warehouse-connector-assembly-1.0.0.7.1.4.20-2.jar#hive-warehouse-connector-assembly-1.0.0.7.1.4.20-2.jar</file>
            <file>/user/g983797/spark-tests/andromeda/runs/sprint_12_1/spark-defaults.conf#spark-defaults.conf</file>
            <file>/user/g983797/spark-tests/andromeda/runs/sprint_12_1/custom-spark-log4j.properties#custom-spark-log4j.properties</file>
        </spark>
        <ok to="email-3828"/>
        <error to="email-879a"/>
    </action>
    <action name="email-3828">
        <email xmlns="uri:oozie:email-action:0.2">
            <to>XXXXXX</to>
            <subject>Matching Job Finished Successfully</subject>
            <body>The matching job finished</body>
            <content_type>text/plain</content_type>
        </email>
        <ok to="End"/>
        <error to="Kill"/>
    </action>
    <action name="email-879a">
        <email xmlns="uri:oozie:email-action:0.2">
            <to>XXXXXXX</to>
            <subject>Matching Job Failed</subject>
            <body>Matching Job Failed</body>
            <content_type>text/plain</content_type>
        </email>
        <ok to="End"/>
        <error to="Kill"/>
    </action>
    <end name="End"/>
</workflow-app>
```

I have tried a number of different configuration settings and values in different places, but it seems that no matter what I do, the Oozie launcher is started with a 2 GB memory limit.

Could somebody who has managed to get around this please assist?

Created 06-15-2021 10:15 PM

Hi,

In Oozie 5.x (in CDH 6+), the oozie.launcher. prefix no longer works, because the Oozie launcher job has been rearchitected into a proper YARN application.

Could you please update the Oozie workflow to remove the oozie.launcher. prefix from all the configuration property names and try re-running the job?
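Applied to the `<global>` block from the question, that suggestion would look roughly like the sketch below: the same three properties with the `oozie.launcher.` prefix dropped. The values are copied from the question and are not recommendations. One thing worth flagging: `-Xmx5120m` makes the heap as large as the 5120 MB container, leaving no room for the JVM's non-heap memory (metaspace, thread stacks, direct buffers), so a somewhat smaller heap is probably safer.

```xml
<global>
    <configuration>
        <!-- Container size for the launcher (the YARN AM); prefix removed per the answer -->
        <property>
            <name>yarn.app.mapreduce.am.resource.mb</name>
            <value>5120</value>
        </property>
        <property>
            <name>mapreduce.map.memory.mb</name>
            <value>5120</value>
        </property>
        <!-- Heap equal to the container size leaves no headroom for non-heap memory;
             consider a smaller -Xmx than the container (value here copied from the question) -->
        <property>
            <name>mapreduce.map.java.opts</name>
            <value>-Xmx5120m</value>
        </property>
    </configuration>
</global>
```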

Created 06-16-2021 11:00 PM

Thank you. This worked.

I have another question. This particular job has now failed again with an OOM error, but what's odd is that if I run it with the same driver memory settings via spark-submit, it uses considerably less memory. Using Oozie, I keep getting failures even though I keep increasing the AM container size.

Is there any particular reason why the AM container uses so much more memory under Oozie?
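One plausible factor, offered as a sketch rather than a diagnosis: with spark-submit in client mode, the driver JVM runs on the submitting host outside any YARN container, so YARN never enforces a memory limit on it. Under Oozie, the driver runs inside the launcher container, which must then hold the driver's heap plus its non-heap JVM memory. Borrowing Spark's default driver overhead rule (`spark.driver.memoryOverhead` = max(10% of driver memory, 384 MiB), which Spark applies to cluster-mode driver containers) as a rough guide, and assuming YARN rounds requests up to a multiple of `yarn.scheduler.minimum-allocation-mb` (default 1024 MiB), a 6g driver would need roughly:

$$
\begin{aligned}
\text{overhead} &= \max(0.10 \times 6144,\ 384) \approx 614\,\text{MiB} \\
\text{request} &= 6144 + 614 = 6758\,\text{MiB} \\
\text{container} &= \left\lceil 6758 / 1024 \right\rceil \times 1024 = 7 \times 1024 = 7168\,\text{MiB}
\end{aligned}
$$

A related assumption worth checking against the Oozie documentation for your version: because the launcher JVM has already started by the time Spark is invoked, `--driver-memory` in `<spark-opts>` likely does not resize its heap, so the launcher's own Java options would need to set the heap instead.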