Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: PUTHDFS processor not working - NoClassDefFoun...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

PUTHDFS processor not working - NoClassDefFoundError

- Labels:

-

Apache Hadoop

-

Apache NiFi

Created on 05-17-2016 03:52 PM - edited 08-18-2019 05:41 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

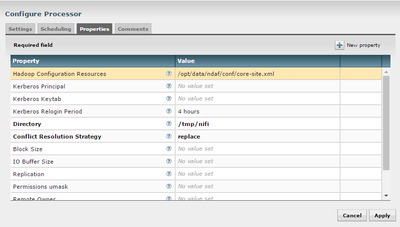

Hi, I configured the PUTHDFS processor to write on Hadoop as shown in the image, but it doesn't work.

In the log I see the following error:

java.lang.NoClassDefFoundError: org/apache/hadoop/conf/Configurable at java.lang.ClassLoader.defineClass1(Native Method) ~[na:1.8.0_45] at java.lang.ClassLoader.defineClass(ClassLoader.java:760) ~[na:1.8.0_45] at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142) ~[na:1.8.0_45] at java.net.URLClassLoader.defineClass(URLClassLoader.java:467) ~[na:1.8.0_45] at java.net.URLClassLoader.access$100(URLClassLoader.java:73) ~[na:1.8.0_45] at java.net.URLClassLoader$1.run(URLClassLoader.java:368) ~[na:1.8.0_45] at java.net.URLClassLoader$1.run(URLClassLoader.java:362) ~[na:1.8.0_45] at java.security.AccessController.doPrivileged(Native Method) ~[na:1.8.0_45] at java.net.URLClassLoader.findClass(URLClassLoader.java:361) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:424) ~[na:1.8.0_45] at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:411) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ~[na:1.8.0_45] at java.lang.Class.forName0(Native Method) ~[na:1.8.0_45] at java.lang.Class.forName(Class.java:348) ~[na:1.8.0_45] at org.apache.hadoop.conf.Configuration.getClassByNameOrNull(Configuration.java:2093) ~[na:na] at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2058) ~[na:na] at org.apache.hadoop.io.compress.CompressionCodecFactory.getCodecClasses(CompressionCodecFactory.java:128) ~[na:na] at org.apache.hadoop.io.compress.CompressionCodecFactory.<init>(CompressionCodecFactory.java:175) ~[na:na] at org.apache.nifi.processors.hadoop.AbstractHadoopProcessor.getCompressionCodec(AbstractHadoopProcessor.java:375) ~[na:na] at org.apache.nifi.processors.hadoop.PutHDFS.onTrigger(PutHDFS.java:220) ~[na:na] at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-0.6.1.jar:0.6.1] at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1059) ~[nifi-framework-core-0.6.1.jar:0.6.1] at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-0.6.1.jar:0.6.1] at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-0.6.1.jar:0.6.1] at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:123) [nifi-framework-core-0.6.1.jar:0.6.1] at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_45] at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_45] at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_45] at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_45] at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_45] at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_45] at java.lang.Thread.run(Thread.java:745) [na:1.8.0_45] Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.conf.Configurable at java.net.URLClassLoader.findClass(URLClassLoader.java:381) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:424) ~[na:1.8.0_45] at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331) ~[na:1.8.0_45] at java.lang.ClassLoader.loadClass(ClassLoader.java:357) ~[na:1.8.0_45] ... 36 common frames omitted

Can anyone help me to make the processor working?

Thank you

Created 05-17-2016 04:06 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is that the entire log message? Can you share the preceding lines to this stack trace?

Marco,

The NoClassDefFoundError you have encountered is most likely caused by the contents of your core-sites.xml file.

Check to see if the following line exists and if it does remove it from the file:

“com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec” from “io.compression.codecs” property in “core-site.xml” file.

Thanks,

Matt

Created 05-18-2016 08:09 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Pierre Villard I posted a wrong picture, I tried with both core-site and hdfs-site, but it still doesn't work. Thank you for your reply.

Created 05-19-2016 11:46 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm not 100% sure how LZO works, but in a lot of cases the codec ends up needing a native library. On a unix system you would set LD_LIBRARY_PATH to include the location of the .so files for the LZO codec, or put them in JAVA_HOME/jre/lib native directory.

You could do something like:

export LD_LIBRARY_PATH=/usr/hdp/2.2.0.0-1084/hadoop/lib/native bin/nifi.sh start That should let PutHDFS know about the appropriate libraries.

Created 07-12-2016 11:30 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

this same thing happened on HDP 2.5 plus the newest HDF

Created 07-19-2016 02:17 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

In HDP2.5 Sandbox, with the latest NiFi

When I use PutHDFS/GetHDFS processor, it show below errors:

java.lang.IllegalArgumentException: Compression codec com.hadoop.compression.lzo.LzoCodec not found.

In core-site.xml add

<property> <name>io.compression.codecs</name> <value>org.apache.hadoop.io.compress.GzipCodec,org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,com.hadoop.compression.lzo.LzoCodec,com.hadoop.compression.lzo.LzopCodec

</value>

</property> <property> <name>io.compression.codec.lzo.class</name> <value>com.hadoop.compression.lzo.LzoCodec</value> </property>

In mapred-site.xml add

<property> <name>mapreduce.map.output.compress</name> <value>true</value> </property> <property> <name>mapreduce.map.output.compress.codec</name> <value>com.hadoop.compression.lzo.LzoCodec</value> </property>

The issue is still there. Does anybody know which version of HDP + NiFi works without above issue?

Thanks.

,Which HDP and NiFi can work without the Lzo not found issue?

Created 07-19-2016 01:36 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Did you try setting the LD_LIBRARY_PATH as described above?

Created 07-19-2016 05:03 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have below in my mapred-site.xml file.

<property>

<name>mapreduce.admin.useer.env</name> <value>LD_LIBRARY_PATH=/usr/hdp/${hdp.version}/hadoop/lib/native:/usr/hdp/${hdp.version}/hadoop/lib/native/Linux-amd64-64 </value>

</property>

BTW, when I remove the "io.compression.codecs" from core-site.xml, it works.

But it isn't an optimal solution.

Thanks.

Created 07-19-2016 05:42 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I don't think mapreduce-site.xml is being used by PutHDFS... I mean of course you can specify it as one of the config resources and NiFi will read it into a Hadoop Configuration object, but I don't think any mapreduce properties are involved in reading/writing to HDFS.

Can you try exporting LD_LIBRARY_PATH in the shell where you will start NiFi from?

Created 11-10-2016 08:52 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This is something that happens only if we install it using Ambari? I had the same here with the latest HDF/HDP versions.

Deleting the classe name from the property worked for me, maybe this should be included on the installation process ?

Created 11-10-2016 01:21 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

This has nothing to do with being installed via Ambari. If the core-site.xml file that is being used by the HDFS processor in NiFi reference a Class which NiFi does not include, you will get a NoClassDef found error. Adding new Class to NiFi's HDFS NAR bundle may be a possibility, but as I am not a developer i can't speak to that. You can always file an Apache Jira against NiFi for this change.

https://issues.apache.org/jira/secure/Dashboard.jspa

Thanks,

Matt

- « Previous

-

- 1

- 2

- Next »