Support Questions - Cloudera Community

Put error message in PutEmail message body

Labels: Apache NiFi, NiFi Registry

Created 08-05-2022 02:44 AM

Hi All,

I want to put the error message in the body of the email, or attach a log file from a local location, in my NiFi flow.

My flow is as below:

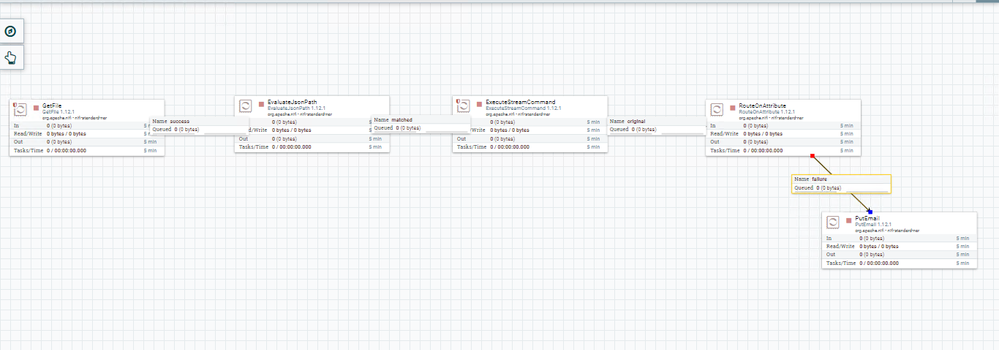

GetFile -> EvaluateJsonPath -> ExecuteStreamCommand -> RouteOnAttribute -> PutEmail

Please help me with this.

Thank you in advance!!

Created 08-05-2022 08:12 AM

@VJ_0082

Your use case is not very clear. What exactly are you trying to accomplish via your existing dataflow and what issues are you having?

Thanks,

Matt

Created 08-05-2022 09:32 AM

Created 08-08-2022 05:16 AM

@VJ_0082

When a NiFi processor encounters an error, it produces a bulletin. NiFi provides a SiteToSiteBulletinReportingTask, which can send these bulletins to a remote input port on either the same NiFi instance or a completely different one. From that remote input port you can build a dataflow that routes these FlowFiles to your PutEmail processor. A dataflow like this avoids having to deal with non-error log output, and avoids ingesting the same log lines over and over by re-reading nifi-app.log.

By default, processors have their bulletin level set to ERROR, but you can change this on each processor if desired.
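For illustration only, the sketch below shows in Python what the downstream flow would do with the bulletin FlowFiles: keep the ERROR-level entries and turn them into a plain-text email body. It assumes the reporting task's default JSON output (an array of bulletin records) and assumes the field names bulletinLevel, bulletinSourceName, bulletinMessage and bulletinTimestamp; check these against what your NiFi version actually sends. The sample records are made up. Inside NiFi you would do this filtering with processors rather than a script.

```python
import json

# Assumed field names: bulletinLevel, bulletinSourceName, bulletinMessage,
# bulletinTimestamp. Verify them against the bulletins your NiFi actually sends.


def build_email_body(bulletins_json, level="ERROR"):
    """Format the bulletins of one level into a plain-text email body."""
    bulletins = json.loads(bulletins_json)
    lines = []
    for b in bulletins:
        if b.get("bulletinLevel") != level:
            continue  # skip bulletins of other levels
        lines.append(
            f"{b.get('bulletinTimestamp', '?')} "
            f"[{b.get('bulletinSourceName', 'unknown')}] "
            f"{b.get('bulletinMessage', '')}"
        )
    return "\n".join(lines)


# Hypothetical sample data, not real NiFi output.
sample = json.dumps([
    {"bulletinLevel": "ERROR", "bulletinSourceName": "ExecuteStreamCommand",
     "bulletinMessage": "Script failed", "bulletinTimestamp": "2022-07-27T21:18:48Z"},
    {"bulletinLevel": "WARNING", "bulletinSourceName": "PutEmail",
     "bulletinMessage": "Retrying", "bulletinTimestamp": "2022-07-27T21:19:00Z"},
])
print(build_email_body(sample))
```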

If you found this response helped with your query, please take a moment to log in and click "Accept as Solution" below this post.

Thank you,

Matt

Created 08-09-2022 01:50 AM

Hi @MattWho,

I'm executing a Python script with ExecuteStreamCommand, using the flow shown above, every 5 minutes. When the Python script fails, it creates a log file at some location on another server. I want to attach that log file to the email that we send with the PutEmail processor.

Created 08-10-2022 06:03 AM

@VJ_0082

So your custom Python script executes, and when it errors, a log is written on some host other than the host where NiFi is running? The GetFile processor can only get files from the local filesystem. It cannot get files from a remote filesystem (unless that remote filesystem is locally mounted).

What error and stack trace are you seeing in nifi-app.log when your PutEmail processor executes against your source FlowFile? Do you have a sample of the FlowFile content being passed to the PutEmail processor? How has your PutEmail processor been configured?

Matt

Created 08-10-2022 10:47 PM

Kindly find the flow below. When the script run by ExecuteStreamCommand fails, a log file is created with the content shown below. I need to either attach that log file to the email or paste its content into the email body.

NiFi flow:

Log File Content:

    2022-07-27 21:18:48,574
    Traceback (most recent call last):
      File "File_upload.py", line 121, in <module>
        shell_exec(query)
      File "File_upload.py", line 28, in shell_exec
        raise Exception(stderr)
    Exception: mv: `/abc/xyz/abc_xyz_stg/configs_enc/COMMON/archive/ENV/env_uat_v_3.json': No such file or directory: `hdfs://cluster/abc/xyz/abc_xyz_stg/configs_enc/COMMON/archive/ENV/env_uat_v_3.json'

Created 08-15-2022 07:50 AM

@VJ_0082

Since your log is being generated on a remote server, you will need to use a processor that can connect to that external server to retrieve the log.

Possible designs:

1. You could incorporate a FetchSFTP processor into your existing flow. I assume your existing RouteOnAttribute processor is checking for when your script hits an error? If so, add the FetchSFTP processor between that processor and your PutEmail processor, and configure FetchSFTP (with a "Completion Strategy" of DELETE) to fetch the specific log file that was created. This dataflow assumes the log's filename is always the same.

2. A second, separate flow could be built: ListSFTP (configured with a filename filter) --> FetchSFTP --> any processors you want to use to manipulate the log --> PutEmail.

The ListSFTP processor would be configured to execute on the primary node only, with a "File Filter Regex". When your 5-minute flow runs and the script hits an exception that creates the log file, ListSFTP will see that file and list it (as a 0-byte FlowFile). That FlowFile carries all the attributes the FetchSFTP processor (configured with a "Completion Strategy" of DELETE) needs to fetch the log, which then becomes the content of that FlowFile. If you do not need to extract from or modify that content, your next processor could simply be PutEmail.

Thank you,

Matt

Created 08-11-2022 11:49 AM

@VJ_0082

I apologize if I am still unclear about the script being executed by your ExecuteStreamCommand processor.

When the script fails, where does it write its log file: locally on the NiFi node's host, or on some external server where NiFi is not running?

I assume your flow is passing the "original" FlowFile with a new FlowFile attribute, "execution.error", containing your exception/error? Does this attribute contain the details you want to send in your email?

Which attribute of the original FlowFile are you then routing on (execution.status)?

How is your PutEmail processor configured? It can be configured to send either a FlowFile attribute or the FlowFile content.

If the content you want to send via PutEmail is neither in the FlowFile's content nor in one of its attributes, but is instead written to some location on disk, you would need a separate dataflow that watches that log file for new entries and ingests them into FlowFiles that feed a PutEmail processor. This could be accomplished using the TailFile processor. If that content is being written to a log file on a remote system, that becomes a different challenge.
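If the script can be changed, here is a minimal sketch of one way to get the failure details onto the FlowFile, assuming ExecuteStreamCommand's behavior of storing the command's stderr in the execution.error attribute and its exit code in execution.status: make the script print its traceback to stderr and exit non-zero. PutEmail can then send that attribute, with no remote log file to fetch. `shell_exec` here is a hypothetical reconstruction based only on the names in the traceback posted earlier in this thread; its real body is unknown, and `main` is likewise an illustrative name.

```python
import subprocess
import sys
import traceback


def shell_exec(cmd):
    """Hypothetical shell_exec: run cmd (a list), raise with its stderr on failure."""
    result = subprocess.run(cmd, capture_output=True, text=True)
    if result.returncode != 0:
        raise Exception(result.stderr.strip())
    return result.stdout


def main(cmd):
    """Return 0 on success; on failure print the traceback to stderr and return 1."""
    try:
        sys.stdout.write(shell_exec(cmd))
        return 0
    except Exception:
        # stderr is what ExecuteStreamCommand is assumed to capture into
        # execution.error; the non-zero return code becomes execution.status.
        traceback.print_exc(file=sys.stderr)
        return 1
```

The script's entry point would then call `sys.exit(main(sys.argv[1:]))` so the exit code reaches ExecuteStreamCommand, and RouteOnAttribute can route on execution.status as before.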
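To show the idea behind tailing a log, here is a minimal Python sketch of the concept, not TailFile's implementation: remember the byte offset already read and ingest only what was appended since. It only works on a file the process can read locally, and it only detects a restart when the file becomes smaller than the saved offset, whereas TailFile keeps its own state and has its own rollover handling. `read_new_entries` is a hypothetical name.

```python
import os


def read_new_entries(path, offset):
    """Return (new_text, new_offset) for everything appended to path after offset."""
    if os.path.getsize(path) < offset:
        # The file shrank (truncated or replaced), so start over from the beginning.
        offset = 0
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    return data.decode("utf-8", errors="replace"), offset + len(data)
```

Call it on a schedule and persist the returned offset between calls, e.g. `text, offset = read_new_entries(log_path, offset)`, where `log_path` is a placeholder for the script's log file.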

Thank you,

Matt