PutHiveStreaming Nifi processor; various errors

- Labels: Apache NiFi

Created on 10-04-2016 06:32 AM - edited 08-19-2019 04:21 AM


I'm trying to stream data to Hive with the PutHiveStreaming NiFi processor.

I saw this post: https://community.hortonworks.com/questions/59411/how-to-use-puthivestreaming.html and can confirm that:

- org.apache.hadoop.hive.ql.lockmgr.DbTxnManager is the transaction manager

- "Run Compactor" is enabled under the ACID transactions settings

- There are threads available for the compactor
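The three settings listed above can be spot-checked against the metastore's `hive-site.xml`. The following is a minimal sketch, assuming they correspond to the `hive.txn.manager`, `hive.compactor.initiator.on`, and `hive.compactor.worker.threads` properties; that mapping is an assumption, not something stated in the post, and the helper name `check_acid_settings` and the sample XML are hypothetical.

```python
import xml.etree.ElementTree as ET

EXPECTED_TXN_MANAGER = "org.apache.hadoop.hive.ql.lockmgr.DbTxnManager"


def check_acid_settings(hive_site_xml: str) -> dict:
    """Return a pass/fail flag for each streaming-related setting."""
    root = ET.fromstring(hive_site_xml)
    # Collect every <property><name>/<value> pair into a plain dict.
    props = {
        (p.findtext("name", "") or "").strip(): (p.findtext("value", "") or "").strip()
        for p in root.iter("property")
    }
    threads = props.get("hive.compactor.worker.threads", "0")
    return {
        "txn_manager": props.get("hive.txn.manager") == EXPECTED_TXN_MANAGER,
        "compactor_enabled": props.get("hive.compactor.initiator.on", "").lower() == "true",
        "compactor_threads": threads.isdigit() and int(threads) > 0,
    }


# Hypothetical sample configuration (assumed property names, illustrative values).
SAMPLE = """<configuration>
  <property><name>hive.txn.manager</name><value>org.apache.hadoop.hive.ql.lockmgr.DbTxnManager</value></property>
  <property><name>hive.compactor.initiator.on</name><value>true</value></property>
  <property><name>hive.compactor.worker.threads</name><value>1</value></property>
</configuration>"""

if __name__ == "__main__":
    for name, ok in check_acid_settings(SAMPLE).items():
        print(f"{name}: {'OK' if ok else 'CHECK'}")
```

In practice the XML would be read from the cluster's actual `hive-site.xml` rather than from an inline sample.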

I created an ORC-backed Hive table:

CREATE TABLE `streaming_messages`(

`message` string,

etc...)

CLUSTERED BY (message) INTO 5 BUCKETS

STORED AS ORC

LOCATION

'hdfs://hadoop01.woolford.io:8020/apps/hive/warehouse/mydb.db/streaming_messages'

TBLPROPERTIES('transactional'='true');

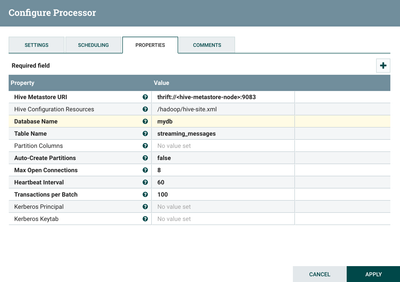

I then created a `PutHiveStreaming` processor that takes Avro messages and writes them to a Hive table:

I notice that the NiFi processor uses Thrift to send data to Hive. There are errors in both nifi-app.log and hivemetastore.log.

Stack trace from nifi-app.log:

2016-10-03 23:40:25,348 ERROR [Timer-Driven Process Thread-8] o.a.n.processors.hive.PutHiveStreaming

org.apache.nifi.util.hive.HiveWriter$ConnectFailure: Failed connecting to EndPoint {metaStoreUri='thrift://10.0.1.12:9083', database='mydb', table='streaming_messages', partitionVals=[] }

at org.apache.nifi.util.hive.HiveWriter.<init>(HiveWriter.java:80) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.util.hive.HiveUtils.makeHiveWriter(HiveUtils.java:45) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.processors.hive.PutHiveStreaming.makeHiveWriter(PutHiveStreaming.java:827) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.processors.hive.PutHiveStreaming.getOrCreateWriter(PutHiveStreaming.java:738) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.processors.hive.PutHiveStreaming.lambda$onTrigger$4(PutHiveStreaming.java:462) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.controller.repository.StandardProcessSession.read(StandardProcessSession.java:1880) ~[na:na]

at org.apache.nifi.controller.repository.StandardProcessSession.read(StandardProcessSession.java:1851) ~[na:na]

at org.apache.nifi.processors.hive.PutHiveStreaming.onTrigger(PutHiveStreaming.java:389) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1064) ~[na:na]

at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) ~[na:na]

at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) ~[na:na]

at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) ~[na:na]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[na:1.8.0_77]

at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) ~[na:1.8.0_77]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) ~[na:1.8.0_77]

at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) ~[na:1.8.0_77]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) ~[na:1.8.0_77]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) ~[na:1.8.0_77]

at java.lang.Thread.run(Thread.java:745) ~[na:1.8.0_77]

Caused by: org.apache.nifi.util.hive.HiveWriter$TxnBatchFailure: Failed acquiring Transaction Batch from EndPoint: {metaStoreUri='thrift://10.0.1.12:9083', database='mydb', table='streaming_messages', partitionVals=[] }

at org.apache.nifi.util.hive.HiveWriter.nextTxnBatch(HiveWriter.java:255) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

at org.apache.nifi.util.hive.HiveWriter.<init>(HiveWriter.java:74) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

... 19 common frames omitted

Caused by: org.apache.hive.hcatalog.streaming.TransactionError: Unable to acquire lock on {metaStoreUri='thrift://10.0.1.12:9083', database='mydb', table='streaming_messages', partitionVals=[] }

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:578) ~[hive-hcatalog-streaming-1.2.1.jar:1.2.1]

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransaction(HiveEndPoint.java:547) ~[hive-hcatalog-streaming-1.2.1.jar:1.2.1]

at org.apache.nifi.util.hive.HiveWriter.nextTxnBatch(HiveWriter.java:252) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]

... 20 common frames omitted

Caused by: org.apache.thrift.transport.TTransportException: null

at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:132) ~[hive-exec-1.2.1.jar:1.2.1]

at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) ~[hive-exec-1.2.1.jar:1.2.1]

at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:429) ~[hive-exec-1.2.1.jar:1.2.1]

at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:318) ~[hive-exec-1.2.1.jar:1.2.1]

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:219) ~[hive-exec-1.2.1.jar:1.2.1]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:69) ~[hive-exec-1.2.1.jar:1.2.1]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_lock(ThriftHiveMetastore.java:3906) ~[hive-metastore-1.2.1.jar:1.2.1]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.lock(ThriftHiveMetastore.java:3893) ~[hive-metastore-1.2.1.jar:1.2.1]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.lock(HiveMetaStoreClient.java:1863) ~[hive-metastore-1.2.1.jar:1.2.1]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_77]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_77]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_77]

at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_77]

at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:152) ~[hive-metastore-1.2.1.jar:1.2.1]

at com.sun.proxy.$Proxy148.lock(Unknown Source) ~[na:na]

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:573) ~[hive-hcatalog-streaming-1.2.1.jar:1.2.1]

... 22 common frames omitted

Stack trace from hivemetastore.log:

2016-10-03 23:40:24,322 ERROR [pool-5-thread-114]: metastore.RetryingHMSHandler (RetryingHMSHandler.java:invokeInternal(195)) - java.lang.IllegalStateException: Unexpected DataOperationType: UNSET agentInfo=Unknown txnid:98201

at org.apache.hadoop.hive.metastore.txn.TxnHandler.enqueueLockWithRetry(TxnHandler.java:938)

at org.apache.hadoop.hive.metastore.txn.TxnHandler.lock(TxnHandler.java:814)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.lock(HiveMetaStore.java:5751)

at sun.reflect.GeneratedMethodAccessor28.invoke(Unknown Source)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:139)

at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:97)

at com.sun.proxy.$Proxy12.lock(Unknown Source)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$lock.getResult(ThriftHiveMetastore.java:11860)

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$lock.getResult(ThriftHiveMetastore.java:11844)

at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118)

at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Do you have any suggestions to resolve this?
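The NiFi trace above nests several exceptions, and listing the `Caused by:` chain makes it easier to see that the client side bottoms out in a Thrift transport error, while the server-side reason sits in the metastore log. The following is a minimal sketch of such a listing; the function name `exception_chain` is hypothetical, and the sample is an abbreviated excerpt of the trace with the messages shortened.

```python
import re

# Matches an exception header line such as
# "Caused by: some.pkg.Outer$Inner: message" or "some.pkg.SomeError".
HEADER = re.compile(
    r"^(?:Caused by: )?((?:[A-Za-z_][\w$]*\.)+[A-Za-z_$][\w$]*)(?:: (.*))?$"
)


def exception_chain(trace: str) -> list:
    """Return (class name, message) pairs, outermost exception first."""
    chain = []
    for raw in trace.splitlines():
        match = HEADER.match(raw.strip())
        if match:  # stack frames ("at ...") and "... N more" lines do not match
            chain.append((match.group(1), match.group(2) or ""))
    return chain


# Abbreviated excerpt of the nifi-app.log trace (messages shortened).
SAMPLE = """\
org.apache.nifi.util.hive.HiveWriter$ConnectFailure: Failed connecting to EndPoint
    at org.apache.nifi.util.hive.HiveWriter.<init>(HiveWriter.java:80)
Caused by: org.apache.nifi.util.hive.HiveWriter$TxnBatchFailure: Failed acquiring Transaction Batch from EndPoint
    at org.apache.nifi.util.hive.HiveWriter.nextTxnBatch(HiveWriter.java:255)
    ... 19 common frames omitted
Caused by: org.apache.hive.hcatalog.streaming.TransactionError: Unable to acquire lock
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:578)
Caused by: org.apache.thrift.transport.TTransportException: null
    at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:132)
"""

if __name__ == "__main__":
    for depth, (cls, msg) in enumerate(exception_chain(SAMPLE)):
        print("  " * depth + f"{cls}: {msg}")
```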

Created 10-04-2016 12:36 PM


The issue for Hive Streaming between HDF 2.0 and HDP 2.5 is captured as NIFI-2828 (albeit under a different title, it is the same cause and fix). In the meantime, as a possible workaround, I have built a Hive NAR that you can try if you wish: just save off your existing one (from the lib/ folder, with a version like 1.0.0.2.0.0-159 or something) and replace it with this one.
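The swap described above could be scripted roughly as follows. This is a minimal sketch rather than an official procedure: the function name `swap_nar`, the backup directory, and all file names are hypothetical, and it assumes NiFi is stopped before the swap and restarted afterwards. The original NAR is moved out of lib/ so that only one Hive NAR remains there.

```python
import shutil
import tempfile
from pathlib import Path


def swap_nar(lib_dir: Path, old_nar_name: str, new_nar: Path, backup_dir: Path) -> Path:
    """Move the existing NAR out of lib_dir, then copy the replacement in."""
    old_nar = lib_dir / old_nar_name
    if not old_nar.is_file():
        raise FileNotFoundError(f"no such NAR: {old_nar}")
    backup_dir.mkdir(parents=True, exist_ok=True)
    backup_path = backup_dir / old_nar_name
    shutil.move(str(old_nar), str(backup_path))  # save off the original
    shutil.copy2(str(new_nar), str(lib_dir / new_nar.name))  # drop in the replacement
    return backup_path


if __name__ == "__main__":
    # Demonstration in a throwaway directory with placeholder files.
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp)
        lib = base / "lib"
        lib.mkdir()
        (lib / "old-hive.nar").write_text("old")
        replacement = base / "patched-hive.nar"
        replacement.write_text("new")
        saved = swap_nar(lib, "old-hive.nar", replacement, base / "nar-backup")
        print(sorted(p.name for p in lib.iterdir()), saved.name)
```

Keeping the backup makes it straightforward to restore the original NAR if the replacement does not help.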

Created 10-04-2016 06:45 AM


Hi

Which version of Hive are you using? Is it HDP 2.4 or 2.5?

Created 10-04-2016 11:59 AM


Hello,

We have the same error. Have you solved this? Our HDP is version 2.5.

Created 10-04-2016 12:14 PM


Currently there is a problem between the versions of the Hive JARs in HDF 2.0 and HDP 2.5, and it is being looked into.

You should not face any problems when using HDP 2.4.


Created 10-04-2016 12:58 PM


Thanks @Matt Burgess. That worked perfectly. Very much appreciated. @mkalyanpur: I'm running HDP 2.5.0.0 and HDF 2.0.0.0.

Created 03-27-2017 07:11 AM


Hi Matt,

I have the same error with the Storm Hive bolt and Hive (HDP 2.5).

It works fine with HDP 2.4; I only have the problem with HDP 2.5. I don't want to roll back to the older HDP version.

Can you help me solve it?

Is there any workaround or fix?

Storm logs below:

org.apache.storm.hive.common.HiveWriter$ConnectFailure: Failed connecting to EndPoint {metaStoreUri='thrift://ambari.local:9083', database='default', table='stock_prices', partitionVals=[Marcin] }

at org.apache.storm.hive.common.HiveWriter.<init>(HiveWriter.java:80) ~[stormjar.jar:?]

at org.apache.storm.hive.common.HiveUtils.makeHiveWriter(HiveUtils.java:50) ~[stormjar.jar:?]

at org.apache.storm.hive.bolt.HiveBolt.getOrCreateWriter(HiveBolt.java:259) ~[stormjar.jar:?]

at org.apache.storm.hive.bolt.HiveBolt.execute(HiveBolt.java:112) [stormjar.jar:?]

at org.apache.storm.daemon.executor$fn__9362$tuple_action_fn__9364.invoke(executor.clj:734) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.daemon.executor$mk_task_receiver$fn__9283.invoke(executor.clj:466) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.disruptor$clojure_handler$reify__8796.onEvent(disruptor.clj:40) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.utils.DisruptorQueue.consumeBatchToCursor(DisruptorQueue.java:451) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.utils.DisruptorQueue.consumeBatchWhenAvailable(DisruptorQueue.java:430) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.disruptor$consume_batch_when_available.invoke(disruptor.clj:73) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.daemon.executor$fn__9362$fn__9375$fn__9428.invoke(executor.clj:853) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at org.apache.storm.util$async_loop$fn__656.invoke(util.clj:484) [storm-core-1.0.1.2.5.0.0-1245.jar:1.0.1.2.5.0.0-1245]

at clojure.lang.AFn.run(AFn.java:22) [clojure-1.7.0.jar:?]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_77]

Caused by: org.apache.storm.hive.common.HiveWriter$TxnBatchFailure: Failed acquiring Transaction Batch from EndPoint: {metaStoreUri='thrift://ambari.local:9083', database='default', table='stock_prices', partitionVals=[Marcin] }

at org.apache.storm.hive.common.HiveWriter.nextTxnBatch(HiveWriter.java:264) ~[stormjar.jar:?]

at org.apache.storm.hive.common.HiveWriter.<init>(HiveWriter.java:72) ~[stormjar.jar:?]

... 13 more

Caused by: org.apache.hive.hcatalog.streaming.TransactionError: Unable to acquire lock on {metaStoreUri='thrift://ambari.local:9083', database='default', table='stock_prices', partitionVals=[Marcin] }

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:575) ~[stormjar.jar:?]

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransaction(HiveEndPoint.java:544) ~[stormjar.jar:?]

at org.apache.storm.hive.common.HiveWriter.nextTxnBatch(HiveWriter.java:259) ~[stormjar.jar:?]

at org.apache.storm.hive.common.HiveWriter.<init>(HiveWriter.java:72) ~[stormjar.jar:?]

... 13 more

Caused by: org.apache.thrift.transport.TTransportException

at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:132) ~[stormjar.jar:?]

at org.apache.thrift.transport.TTransport.readAll(TTransport.java:84) ~[stormjar.jar:?]

at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:378) ~[stormjar.jar:?]

at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:297) ~[stormjar.jar:?]

at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:204) ~[stormjar.jar:?]

at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:69) ~[stormjar.jar:?]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_lock(ThriftHiveMetastore.java:3781) ~[stormjar.jar:?]

at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.lock(ThriftHiveMetastore.java:3768) ~[stormjar.jar:?]

at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.lock(HiveMetaStoreClient.java:1736) ~[stormjar.jar:?]

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:570) ~[stormjar.jar:?]

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransaction(HiveEndPoint.java:544) ~[stormjar.jar:?]

at org.apache.storm.hive.common.HiveWriter.nextTxnBatch(HiveWriter.java:259) ~[stormjar.jar:?]

at org.apache.storm.hive.common.HiveWriter.<init>(HiveWriter.java:72) ~[stormjar.jar:?]

... 13 more

2017-03-26 21:43:05.112 h.metastore [INFO] Trying to connect to metastore with URI thrift://ambari.local:9083

Created 03-27-2017 07:38 PM


The aforementioned issue has been fixed as of HDF 2.1. Rather than downgrade your HDP, can you upgrade your HDF?

Created 03-28-2017 06:46 AM


Hi Matt,

Thank you very much for your reply. Now I know that I can fix it, and I'm very happy with that.

I'm a beginner.

I've installed Ambari on my 4 virtual machines just to learn about Big Data. I'm using these repositories (I can see them in my Ambari web console):

http://public-repo-1.hortonworks.com/HDP/ubuntu14/2.x/updates/2.5.0.0

http://public-repo-1.hortonworks.com/HDP-UTILS-1.1.0.21/repos/ubuntu14

During the Ambari installation I used this ambari.list:

http://public-repo-1.hortonworks.com/ambari/ubuntu14/2.x/updates/2.4.0.1/ambari.list

I know that this ambari.list points to HDP 2.5.

Now my question is:

How can I upgrade HDF? Should I use other repositories?

Sorry if my question is stupid.

Created 03-29-2017 11:58 AM


Hi Matt,

I've upgraded my environment.

I have Hive on HDP 2.5 (environment 1) and Storm on HDF 2.1 (environment 2).

I have the same error.

On Storm:

Caused by: org.apache.hive.hcatalog.streaming.TransactionError: Unable to acquire lock on {metaStoreUri='thrift://hdp1.local:9083', database='default', table='stock_prices', partitionVals=[Marcin] }

at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:575) ~[stormjar.jar:?]

On the Hive metastore:

2017-03-29 11:56:29,926 ERROR [pool-5-thread-17]: server.TThreadPoolServer (TThreadPoolServer.java:run(297)) - Error occurred during processing of message.

java.lang.IllegalStateException: Unexpected DataOperationType: UNSET agentInfo=Unknown txnid:54

at org.apache.hadoop.hive.metastore.txn.TxnHandler.enqueueLockWithRetry(TxnHandler.java:938)

at org.apache.hadoop.hive.metastore.txn.TxnHandler.lock(TxnHandler.java:814)

at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.lock(HiveMetaSt