PutHiveStreaming NiFi processor; various errors

Labels: Apache NiFi

Created on 10-04-2016 06:32 AM - edited 08-19-2019 04:21 AM

I'm trying to stream data to Hive with the PutHiveStreaming NiFi processor.

I saw this post: https://community.hortonworks.com/questions/59411/how-to-use-puthivestreaming.html and can confirm that:

- org.apache.hadoop.hive.ql.lockmgr.DbTxnManager is the transaction manager

- the ACID transactions "Run Compactor" setting is enabled

- There are threads available for the compactor
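To double-check the settings above without clicking through Ambari, here is a minimal sketch that reads a copy of hive-site.xml and flags anything that looks off. It assumes the standard Hadoop `<configuration><property><name>/<value>` layout. The helper names `read_hive_site` and `acid_problems` are hypothetical, and the `hive.support.concurrency` check is an extra setting that DbTxnManager also relies on, not one listed in the linked post.

```python
import sys
import xml.etree.ElementTree as ET

DB_TXN_MANAGER = "org.apache.hadoop.hive.ql.lockmgr.DbTxnManager"


def read_hive_site(xml_text):
    """Parse Hadoop-style <configuration><property><name/><value/></property> XML
    (str or bytes) into a dict of name -> value."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def acid_problems(props):
    """Return human-readable problems with the Hive ACID / compactor settings (empty list = OK)."""
    problems = []
    if props.get("hive.txn.manager") != DB_TXN_MANAGER:
        problems.append("hive.txn.manager is not DbTxnManager")
    if props.get("hive.support.concurrency", "").lower() != "true":
        problems.append("hive.support.concurrency is not true")
    if props.get("hive.compactor.initiator.on", "").lower() != "true":
        problems.append("hive.compactor.initiator.on is not true")
    try:
        threads = int(props.get("hive.compactor.worker.threads", "0"))
    except ValueError:
        threads = 0  # treat an unparsable value as "no worker threads"
    if threads <= 0:
        problems.append("hive.compactor.worker.threads must be > 0")
    return problems


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_acid.py /path/to/hive-site.xml
    with open(sys.argv[1], "rb") as f:
        issues = acid_problems(read_hive_site(f.read()))
    print("OK" if not issues else "\n".join(issues))
```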

I created an ORC-backed Hive table:

```sql
CREATE TABLE `streaming_messages`(
  `message` string,
  etc...)
CLUSTERED BY (message) INTO 5 BUCKETS
STORED AS ORC
LOCATION
  'hdfs://hadoop01.woolford.io:8020/apps/hive/warehouse/mydb.db/streaming_messages'
TBLPROPERTIES('transactional'='true');
```
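Hive Streaming only accepts tables that are bucketed, stored as ORC, and marked `'transactional'='true'`, all of which the DDL above satisfies. As a rough sketch (a whitespace- and case-insensitive text match, not a real HiveQL parser; the function name `streaming_ddl_issues` is hypothetical), those three requirements can be checked like this:

```python
import re


def streaming_ddl_issues(ddl):
    """Rough check of a CREATE TABLE statement against the Hive Streaming table
    requirements: bucketed, stored as ORC, and 'transactional'='true'.
    This is a text match on normalized DDL, not a HiveQL parser."""
    text = " ".join(ddl.split()).upper()  # collapse whitespace, ignore case
    issues = []
    if not re.search(r"CLUSTERED BY\s*\(.+?\)\s*INTO\s+\d+\s+BUCKETS", text):
        issues.append("not bucketed (CLUSTERED BY ... INTO n BUCKETS)")
    if "STORED AS ORC" not in text:
        issues.append("not STORED AS ORC")
    if not re.search(r"'TRANSACTIONAL'\s*=\s*'TRUE'", text):
        issues.append("missing TBLPROPERTIES('transactional'='true')")
    return issues
```

Run against the DDL from this post it returns an empty list; a plain `STORED AS TEXTFILE` table without buckets or TBLPROPERTIES would report all three issues. Note that it only recognizes single-quoted property values.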

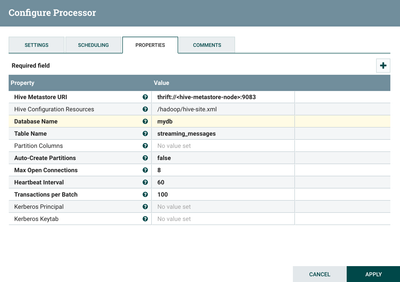

I then created a `PutStreamingHive` processor that takes messages Avro messages and writes them to a Hive table:

I notice that the NiFi processor uses Thrift to talk to the Hive metastore. There are errors in both nifi-app.log and hivemetastore.log.
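A first sanity check is whether the metastore port is reachable from the NiFi host at all. The sketch below (the helper name `metastore_reachable` is hypothetical, and it takes a single URI rather than a comma-separated `hive.metastore.uris` list) opens a plain TCP connection to the host and port of a `thrift://` URI such as `thrift://10.0.1.12:9083`. It only proves the port accepts connections; it does not speak Thrift, so it cannot reproduce the failing `lock` call, and a `TTransportException` like the one in these logs can still occur when this check passes.

```python
import socket
from urllib.parse import urlparse


def metastore_reachable(uri, timeout=3.0):
    """Return True if a TCP connection to the host:port of a thrift:// URI succeeds.

    This only shows the port is open; it does not exercise the Thrift protocol."""
    parsed = urlparse(uri)
    if parsed.scheme != "thrift" or not parsed.hostname:
        raise ValueError("expected a URI like thrift://host:9083, got %r" % uri)
    port = parsed.port or 9083  # 9083 is the conventional metastore port
    try:
        with socket.create_connection((parsed.hostname, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unresolvable host, ...
        return False
```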

Stacktrace from nifi-app.log:

```
2016-10-03 23:40:25,348 ERROR [Timer-Driven Process Thread-8] o.a.n.processors.hive.PutHiveStreaming
org.apache.nifi.util.hive.HiveWriter$ConnectFailure: Failed connecting to EndPoint {metaStoreUri='thrift://10.0.1.12:9083', database='mydb', table='streaming_messages', partitionVals=[] }
    at org.apache.nifi.util.hive.HiveWriter.<init>(HiveWriter.java:80) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.util.hive.HiveUtils.makeHiveWriter(HiveUtils.java:45) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.processors.hive.PutHiveStreaming.makeHiveWriter(PutHiveStreaming.java:827) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.processors.hive.PutHiveStreaming.getOrCreateWriter(PutHiveStreaming.java:738) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.processors.hive.PutHiveStreaming.lambda$onTrigger$4(PutHiveStreaming.java:462) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.controller.repository.StandardProcessSession.read(StandardProcessSession.java:1880) ~[na:na]
    at org.apache.nifi.controller.repository.StandardProcessSession.read(StandardProcessSession.java:1851) ~[na:na]
    at org.apache.nifi.processors.hive.PutHiveStreaming.onTrigger(PutHiveStreaming.java:389) [nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) ~[nifi-api-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1064) ~[na:na]
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) ~[na:na]
    at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) ~[na:na]
    at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) ~[na:na]
    at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) ~[na:1.8.0_77]
    at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) ~[na:1.8.0_77]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) ~[na:1.8.0_77]
    at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) ~[na:1.8.0_77]
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) ~[na:1.8.0_77]
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) ~[na:1.8.0_77]
    at java.lang.Thread.run(Thread.java:745) ~[na:1.8.0_77]
Caused by: org.apache.nifi.util.hive.HiveWriter$TxnBatchFailure: Failed acquiring Transaction Batch from EndPoint: {metaStoreUri='thrift://10.0.1.12:9083', database='mydb', table='streaming_messages', partitionVals=[] }
    at org.apache.nifi.util.hive.HiveWriter.nextTxnBatch(HiveWriter.java:255) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    at org.apache.nifi.util.hive.HiveWriter.<init>(HiveWriter.java:74) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    ... 19 common frames omitted
Caused by: org.apache.hive.hcatalog.streaming.TransactionError: Unable to acquire lock on {metaStoreUri='thrift://10.0.1.12:9083', database='mydb', table='streaming_messages', partitionVals=[] }
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:578) ~[hive-hcatalog-streaming-1.2.1.jar:1.2.1]
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransaction(HiveEndPoint.java:547) ~[hive-hcatalog-streaming-1.2.1.jar:1.2.1]
    at org.apache.nifi.util.hive.HiveWriter.nextTxnBatch(HiveWriter.java:252) ~[nifi-hive-processors-1.0.0.2.0.0.0-579.jar:1.0.0.2.0.0.0-579]
    ... 20 common frames omitted
Caused by: org.apache.thrift.transport.TTransportException: null
    at org.apache.thrift.transport.TIOStreamTransport.read(TIOStreamTransport.java:132) ~[hive-exec-1.2.1.jar:1.2.1]
    at org.apache.thrift.transport.TTransport.readAll(TTransport.java:86) ~[hive-exec-1.2.1.jar:1.2.1]
    at org.apache.thrift.protocol.TBinaryProtocol.readAll(TBinaryProtocol.java:429) ~[hive-exec-1.2.1.jar:1.2.1]
    at org.apache.thrift.protocol.TBinaryProtocol.readI32(TBinaryProtocol.java:318) ~[hive-exec-1.2.1.jar:1.2.1]
    at org.apache.thrift.protocol.TBinaryProtocol.readMessageBegin(TBinaryProtocol.java:219) ~[hive-exec-1.2.1.jar:1.2.1]
    at org.apache.thrift.TServiceClient.receiveBase(TServiceClient.java:69) ~[hive-exec-1.2.1.jar:1.2.1]
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.recv_lock(ThriftHiveMetastore.java:3906) ~[hive-metastore-1.2.1.jar:1.2.1]
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Client.lock(ThriftHiveMetastore.java:3893) ~[hive-metastore-1.2.1.jar:1.2.1]
    at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.lock(HiveMetaStoreClient.java:1863) ~[hive-metastore-1.2.1.jar:1.2.1]
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_77]
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_77]
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_77]
    at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_77]
    at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.invoke(RetryingMetaStoreClient.java:152) ~[hive-metastore-1.2.1.jar:1.2.1]
    at com.sun.proxy.$Proxy148.lock(Unknown Source) ~[na:na]
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$TransactionBatchImpl.beginNextTransactionImpl(HiveEndPoint.java:573) ~[hive-hcatalog-streaming-1.2.1.jar:1.2.1]
    ... 22 common frames omitted
```

Stacktrace from hivemetastore.log:

```
2016-10-03 23:40:24,322 ERROR [pool-5-thread-114]: metastore.RetryingHMSHandler (RetryingHMSHandler.java:invokeInternal(195)) - java.lang.IllegalStateException: Unexpected DataOperationType: UNSET agentInfo=Unknown txnid:98201
    at org.apache.hadoop.hive.metastore.txn.TxnHandler.enqueueLockWithRetry(TxnHandler.java:938)
    at org.apache.hadoop.hive.metastore.txn.TxnHandler.lock(TxnHandler.java:814)
    at org.apache.hadoop.hive.metastore.HiveMetaStore$HMSHandler.lock(HiveMetaStore.java:5751)
    at sun.reflect.GeneratedMethodAccessor28.invoke(Unknown Source)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invokeInternal(RetryingHMSHandler.java:139)
    at org.apache.hadoop.hive.metastore.RetryingHMSHandler.invoke(RetryingHMSHandler.java:97)
    at com.sun.proxy.$Proxy12.lock(Unknown Source)
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$lock.getResult(ThriftHiveMetastore.java:11860)
    at org.apache.hadoop.hive.metastore.api.ThriftHiveMetastore$Processor$lock.getResult(ThriftHiveMetastore.java:11844)
    at org.apache.thrift.ProcessFunction.process(ProcessFunction.java:39)
    at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:110)
    at org.apache.hadoop.hive.metastore.TUGIBasedProcessor$1.run(TUGIBasedProcessor.java:106)
    at java.security.AccessController.doPrivileged(Native Method)
    at javax.security.auth.Subject.doAs(Subject.java:422)
    at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)
    at org.apache.hadoop.hive.metastore.TUGIBasedProcessor.process(TUGIBasedProcessor.java:118)
    at org.apache.thrift.server.TThreadPoolServer$WorkerProcess.run(TThreadPoolServer.java:286)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)
    at java.lang.Thread.run(Thread.java:745)
```

Do you have any suggestions to resolve this?

Created 10-04-2016 12:36 PM

The Hive Streaming issue between HDF 2.0 and HDP 2.5 is captured as NIFI-2828 (under a different title, but with the same cause and fix). In the meantime, as a possible workaround, I have built a Hive NAR that you can try if you wish: just save off your current one (from the lib/ folder, with a version like 1.0.0.2.0.0-159 or similar) and replace it with this one.

Created 06-08-2017 02:18 PM

Hi Matt,

I faced the Thrift server connectivity issue with NiFi 1.2.0 (installed on HDP 2.6.0). I tried replacing the .nar file with the one you provided, but it did not help.

I then installed NiFi 1.1.0 and ran it without replacing the .nar file; that did not help either. But when I replaced the .nar with yours on NiFi 1.1.0, it worked!

Could you please help me generate the 'nifi-hive.nar' file for NiFi 1.2.0? It would also be great if you could provide the steps to build the .nar file and explain which specific changes made it work with NiFi 1.1.0.

Thanks in advance.

Created 10-04-2016 12:45 PM

Hello, thanks for the quick answer! Is there any way to solve this issue between HDP 2.5 and HDF 2.0?

Or is our only option to downgrade HDP to version 2.4?

Is there a documented way to do this downgrade?

Created 10-04-2016 12:49 PM

Hey,

You can follow the steps mentioned by Matthew. If you want the exact steps, do the following:

1. Download the NAR file.

2. Go to /usr/hdf/current/nifi/lib/, the usual lib directory of an HDF NiFi installation.

3. Back up the file nifi-hive-nar-1.0.0.2.0.0.0-579.nar.

4. Replace it with the one provided by Matthew.

Then you should be able to run the streaming processor.
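The manual steps above can also be scripted. This is a minimal sketch under assumptions: the paths in the example call come from this thread, the patched NAR's file name is hypothetical, and the backup goes to a separate directory so the old NAR is not picked up from lib/ alongside the new one. NiFi reads the NARs in lib/ when it starts, so restart NiFi after the swap.

```python
import shutil
from pathlib import Path


def swap_nar(lib_dir, old_name, new_nar, backup_dir):
    """Move lib_dir/old_name into backup_dir, then copy new_nar into lib_dir.

    Returns (backup_path, installed_path). Restart NiFi afterwards so it loads the new NAR.
    """
    lib = Path(lib_dir)
    old = lib / old_name
    if not old.is_file():
        raise FileNotFoundError(str(old))
    backup_root = Path(backup_dir)
    backup_root.mkdir(parents=True, exist_ok=True)
    backup = backup_root / old.name
    shutil.move(str(old), str(backup))  # keep the original out of lib/ entirely
    installed = lib / Path(new_nar).name
    shutil.copy2(str(new_nar), str(installed))  # copy2 also preserves timestamps
    return backup, installed


# Example (paths from this thread; the patched NAR name is hypothetical):
# swap_nar("/usr/hdf/current/nifi/lib",
#          "nifi-hive-nar-1.0.0.2.0.0.0-579.nar",
#          "/tmp/nifi-hive-nar-patched.nar",
#          "/root/nar-backup")
```

Moving the original out of lib/ (rather than renaming it in place) avoids relying on which file names NiFi does or does not scan.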

Created 10-05-2016 07:05 AM

Thank you very much, the suggestion you provided solved my issue.

Created 06-08-2017 02:18 PM

Hi Matt,

I faced the same Thrift server connectivity issue. Replacing the .nar file with the one you provided resolved it.

But could you please help me get the .nar file generated for the latest version, NiFi 1.2.0?

I tried replacing the .nar in NiFi 1.2.0, but the issue remained. I then installed NiFi 1.1.0, replaced the .nar file, and it worked!

Thanks in advance.

Created 11-10-2017 08:09 PM

Hi Matt, I am using NiFi 1.4.0 with HDP 2.6. I was facing the same issue with the PutHiveStreaming processor, so I replaced the Hive NAR with the one you provided. It works fine when I write to a non-partitioned table, but when I try to write to a partitioned Hive table, it throws the following error:

```
PutHiveStreaming[id=9b7b6af6-015f-1000-8772-6b70eb0a4841] failed to process due to java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------; rolling back session: {}
java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
```

I have granted all the necessary permissions on the /tmp/hive folder, but I'm still facing the same issue.

Please let me know if I'm missing something.

Thanks,

Mohit Jain

@Matt Burgess I am using NiFi 1.4.0 with HDP 2.6. I was facing the same error, so I replaced the Hive NAR bundle with the one you provided. It now works fine for the unpartitioned table, but when I try to ingest into the partitioned table, it throws the following error:

```
PutHiveStreaming[id=9b7b6af6-015f-1000-8772-6b70eb0a4841] failed to process due to java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------; rolling back session: {}
java.lang.RuntimeException: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:523)
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.createPartitionIfNotExists(HiveEndPoint.java:454)
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.<init>(HiveEndPoint.java:318)
    at org.apache.hive.hcatalog.streaming.HiveEndPoint$ConnectionImpl.<init>(HiveEndPoint.java:278)
    at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnectionImpl(HiveEndPoint.java:215)
    at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnection(HiveEndPoint.java:192)
    at org.apache.hive.hcatalog.streaming.HiveEndPoint.newConnection(HiveEndPoint.java:122)
    at org.apache.nifi.util.hive.HiveWriter.lambda$newConnection$6(HiveWriter.java:237)
    at java.util.concurrent.FutureTask.run(FutureTask.java:266)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.RuntimeException: The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rwx------
    at org.apache.hadoop.hive.ql.session.SessionState.createRootHDFSDir(SessionState.java:613)
    at org.apache.hadoop.hive.ql.session.SessionState.createSessionDirs(SessionState.java:555)
    at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:509)
    ... 11 common frames omitted
```

I have granted all permissions on the /tmp/hive folder, but it still throws the same error. Please let me know if I'm missing something.

Thanks,

Mohit
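Two observations that may help here. In the fuller trace, `SessionState.start` is reached from `HiveEndPoint$ConnectionImpl.createPartitionIfNotExists`, which would explain why only the partitioned table hits this check. And `rwx------` is octal 700, so only the owner can write. As far as I can tell, Hive's `SessionState.createRootHDFSDir` requires every bit of 733 (owner rwx, group and other write+execute) to be set, so 700 fails even when the owner is correct. The sketch below converts a symbolic mode to octal and applies that mask; the 0o733 value is my reading of Hive's check, so treat it as an assumption, and the function names are hypothetical.

```python
# Assumption: mirrors Hive's scratch-dir test, which (as far as I can tell) requires
# every bit of 0o733 (owner rwx, group/other -wx) to be set on the directory.
HIVE_SCRATCH_MASK = 0o733


def symbolic_to_octal(perm):
    """Convert a 9-character mode such as 'rwx------' to an int (here 0o700).

    Special bits (s, S, t, T) are not handled and raise ValueError."""
    if len(perm) != 9:
        raise ValueError("expected 9 characters like 'rwxr-x---', got %r" % perm)
    value = 0
    for i, ch in enumerate(perm):
        if ch == "rwx"[i % 3]:
            value |= 1 << (8 - i)  # position 0 is the owner-read bit (0o400)
        elif ch != "-":
            raise ValueError("unexpected character %r at position %d" % (ch, i))
    return value


def scratch_dir_ok(perm):
    """True if the mode would pass the assumed 0o733 writability check."""
    return (symbolic_to_octal(perm) & HIVE_SCRATCH_MASK) == HIVE_SCRATCH_MASK


for mode in ("rwx------", "rwx-wx-wx", "rwxrwxrwx"):
    print(mode, oct(symbolic_to_octal(mode)), scratch_dir_ok(mode))
# rwx------ 0o700 False
# rwx-wx-wx 0o733 True
# rwxrwxrwx 0o777 True
```

If the directory fails this check, the usual remedy is something like `hdfs dfs -chmod 733 /tmp/hive`, run as the directory owner or the HDFS superuser. Since the permissions were reportedly already opened up, it may also be worth confirming that the change was made on the same filesystem the NiFi Hive client resolves /tmp/hive against.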

Created 08-21-2018 04:34 PM

Issue Resolved.

In HDP 3.0, please use PutHive3Streaming, PutHive3QL and SelectHive3QL.

Cheers.

Created 12-14-2018 03:53 PM

I am getting the same error in HDP 3.
