PutKudu processor on NiFi - KUDU generic error

- Labels: Apache Kudu, Apache NiFi

Created 09-06-2023 02:55 AM

Good morning everyone!

I am having trouble with the PutKudu processor.

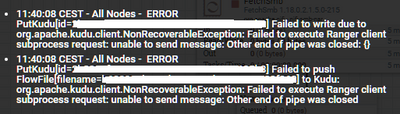

The PutKudu processor should write to a Kudu table, previously created using Impala in Hue, inside HDFS. Everything is configured, but the PutKudu processor keeps showing this error message. Kudu is configured with just one master (the leader). I suspect the error could be caused by my one-node configuration, since the documentation seems to suggest using at least three nodes. Is this the case?

The error message is too generic to be useful for any kind of debugging activity.

The pipeline is composed of two processors: GenerateFlowFile and PutKudu.

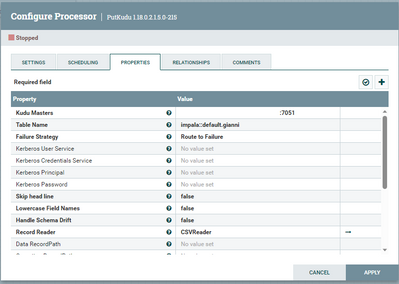

Below you can see the error messages and the PutKudu properties.

If you need more information please let me know.

Thank you and have a nice day.

Created 09-08-2023 12:33 AM

Up! Can someone take a look at this issue?

Created 09-11-2023 03:30 AM

Up

Created 09-11-2023 07:35 AM

@JohnSilver, first of all, I recommend setting your processor's log level to DEBUG, as it might provide much more information than what you are seeing right now. In addition, have a look in nifi-app.log, as you might find something there as well.

Next, I do not know how Kudu is configured on your side, but in every project I was involved in, it required authentication: the Kerberos properties, which seem to be blank on your side. Even though you might have a basic install of Impala/Kudu, as far as I know it still requires some sort of authentication.

Created on 09-11-2023 01:20 PM - edited 09-11-2023 02:08 PM

Hi everyone,

I would like to add some information about the issue posted by my colleague.

Our on-premises Cloudera cluster was installed with these versions:

- Cloudera Manager 7.7.1

- Cloudera Runtime 7.1.8.0 (CDP Private Cloud Base)

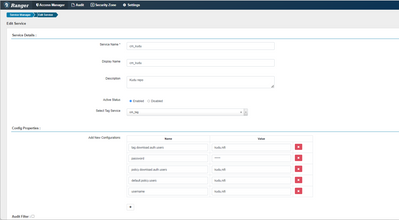

We are using the default settings, so Kerberos is disabled. We are using Ranger with the default service names and policies created by the Cloudera Manager installation.

As described in this post (https://community.cloudera.com/t5/Community-Articles/Using-the-PutKudu-processor-to-ingest-MySQL-dat...), we think it isn't necessary to enable Kerberos to try out this feature. If Kudu requires authentication to work properly, we are willing to use Ranger, but for the moment we don't want to enable Kerberos.

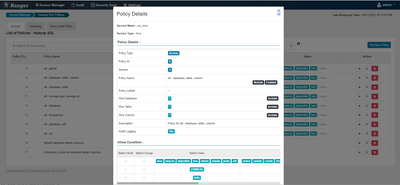

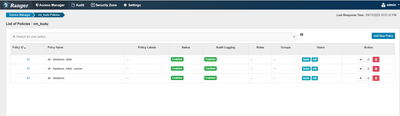

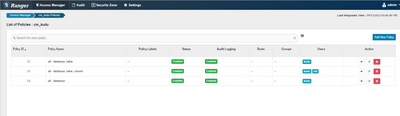

Using Impala in Hue, we created a Kudu table. Then, in the Ranger web UI, under the Hadoop SQL service created by Cloudera Manager during installation, I modified the "all - database, table, column" policy, which allows access to all databases, tables, and columns, extending the permissions to the users "nifi" and "kudu".

And this is the cm_kudu policy in Ranger:

To verify that the nifi user can write to the Kudu table, we used the impala-shell CLI as shown below, and it worked properly. So we think the nifi user can write to the Kudu table.

[machine-bigdata-h02 ~]> sudo -u nifi impala-shell

[Not connected] > connect machine-bigdata-h02;

[machine-bigdata-h02:21000] default> INSERT INTO default.gianni (id, name) VALUES (2, 'roberto');

[machine-bigdata-h02:21000] default> select * from default.gianni;

As you suggested, we set the NiFi log verbosity to DEBUG. Keeping the same PutKudu processor configuration, we obtained this error trace:

2023-09-11 17:23:36,115 INFO org.apache.nifi.controller.StandardProcessorNode: Starting PutKudu[id=2b2950fe-0ea5-1659-0000-0000647695b8]

2023-09-11 17:23:36,178 DEBUG org.apache.kudu.shaded.io.netty.util.internal.logging.InternalLoggerFactory: Using SLF4J as the default logging framework

2023-09-11 17:23:36,205 DEBUG org.apache.kudu.shaded.io.netty.util.ResourceLeakDetector: -Dorg.apache.kudu.shaded.io.netty.leakDetection.level: simple

2023-09-11 17:23:36,205 DEBUG org.apache.kudu.shaded.io.netty.util.ResourceLeakDetector: -Dorg.apache.kudu.shaded.io.netty.leakDetection.targetRecords: 4

2023-09-11 17:23:36,236 DEBUG org.apache.kudu.shaded.io.netty.util.ResourceLeakDetectorFactory: Loaded default ResourceLeakDetector: org.apache.kudu.shaded.io.netty.util.ResourceLeakDetector@602cf04e

2023-09-11 17:23:36,353 INFO org.apache.nifi.controller.scheduling.StandardProcessScheduler: Starting GenerateFlowFile[id=2b29597f-0ea5-1659-0000-00006439c64f]

2023-09-11 17:23:36,354 INFO org.apache.nifi.controller.StandardProcessorNode: Starting GenerateFlowFile[id=2b29597f-0ea5-1659-0000-00006439c64f]

2023-09-11 17:23:36,358 INFO org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent: Scheduled GenerateFlowFile[id=2b29597f-0ea5-1659-0000-00006439c64f] to run with 1 threads

2023-09-11 17:23:36,374 DEBUG StandardProcessSession.claims: Creating ContentClaim StandardContentClaim [resourceClaim=StandardResourceClaim[id=1694445816369-1, container=default, section=1], offset=0, length=-1] for 'write' for StandardFlowFileRecord[uuid=be4462ec-77ab-4782-abc3-1cf4733c8666,claim=,offset=0,name=be4462ec-77ab-4782-abc3-1cf4733c8666,size=0]

2023-09-11 17:23:36,410 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: -Dio.netty.noUnsafe: false

2023-09-11 17:23:36,410 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: Java version: 8

2023-09-11 17:23:36,413 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: sun.misc.Unsafe.theUnsafe: available

2023-09-11 17:23:36,415 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: sun.misc.Unsafe.copyMemory: available

2023-09-11 17:23:36,416 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: sun.misc.Unsafe.storeFence: available

2023-09-11 17:23:36,417 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: java.nio.Buffer.address: available

2023-09-11 17:23:36,426 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: direct buffer constructor: available

2023-09-11 17:23:36,428 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: java.nio.Bits.unaligned: available, true

2023-09-11 17:23:36,428 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: jdk.internal.misc.Unsafe.allocateUninitializedArray(int): unavailable prior to Java9

2023-09-11 17:23:36,428 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent0: java.nio.DirectByteBuffer.<init>(long, int): available

2023-09-11 17:23:36,428 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: sun.misc.Unsafe: available

2023-09-11 17:23:36,429 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: -Dio.netty.tmpdir: /tmp (java.io.tmpdir)

2023-09-11 17:23:36,429 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: -Dio.netty.bitMode: 64 (sun.arch.data.model)

2023-09-11 17:23:36,431 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: -Dio.netty.maxDirectMemory: 514850816 bytes

2023-09-11 17:23:36,431 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: -Dio.netty.uninitializedArrayAllocationThreshold: -1

2023-09-11 17:23:36,434 DEBUG org.apache.kudu.shaded.io.netty.util.internal.CleanerJava6: java.nio.ByteBuffer.cleaner(): available

2023-09-11 17:23:36,434 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: -Dio.netty.noPreferDirect: false

2023-09-11 17:23:36,487 DEBUG org.apache.kudu.shaded.io.netty.util.internal.PlatformDependent: org.jctools-core.MpscChunkedArrayQueue: available

2023-09-11 17:23:36,782 INFO org.apache.kudu.client.KuduClient: defaultSocketReadTimeoutMs is deprecated

2023-09-11 17:23:36,910 DEBUG org.apache.kudu.shaded.io.netty.channel.MultithreadEventLoopGroup: -Dio.netty.eventLoopThreads: 8

2023-09-11 17:23:36,950 INFO org.apache.nifi.cluster.protocol.impl.SocketProtocolListener: Finished processing request ab1236a3-357f-4e91-b00b-6af91c8c9fa0 (type=HEARTBEAT, length=2522 bytes) from machine-bigdata-h01:8080 in 8 millis

2023-09-11 17:23:36,952 INFO org.apache.nifi.controller.cluster.ClusterProtocolHeartbeater: Heartbeat created at 2023-09-11 17:23:36,876 and sent to machine-bigdata-h01:9088 at 2023-09-11 17:23:36,952; determining Cluster Coordinator took 35 millis; DNS lookup for coordinator took 0 millis; connecting to coordinator took 1 millis; sending heartbeat took 0 millis; receiving first byte from response took 37 millis; receiving full response took 38 millis; total time was 75 millis

2023-09-11 17:23:36,989 INFO org.apache.nifi.controller.StandardFlowService: Saved flow controller org.apache.nifi.controller.FlowController@7963cc61 // Another save pending = false

2023-09-11 17:23:37,003 DEBUG org.apache.kudu.shaded.io.netty.util.internal.InternalThreadLocalMap: -Dio.netty.threadLocalMap.stringBuilder.initialSize: 1024

2023-09-11 17:23:37,003 DEBUG org.apache.kudu.shaded.io.netty.util.internal.InternalThreadLocalMap: -Dio.netty.threadLocalMap.stringBuilder.maxSize: 4096

2023-09-11 17:23:37,029 DEBUG org.apache.kudu.shaded.io.netty.channel.nio.NioEventLoop: -Dio.netty.noKeySetOptimization: false

2023-09-11 17:23:37,029 DEBUG org.apache.kudu.shaded.io.netty.channel.nio.NioEventLoop: -Dio.netty.selectorAutoRebuildThreshold: 512

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.numHeapArenas: 8

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.numDirectArenas: 8

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.pageSize: 8192

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.maxOrder: 9

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.chunkSize: 4194304

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.smallCacheSize: 256

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.normalCacheSize: 64

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.maxCachedBufferCapacity: 32768

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.cacheTrimInterval: 8192

2023-09-11 17:23:37,177 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.cacheTrimIntervalMillis: 0

2023-09-11 17:23:37,178 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.useCacheForAllThreads: false

2023-09-11 17:23:37,178 DEBUG org.apache.kudu.shaded.io.netty.buffer.PooledByteBufAllocator: -Dio.netty.allocator.maxCachedByteBuffersPerChunk: 1023

2023-09-11 17:23:37,310 INFO org.apache.nifi.cluster.coordination.heartbeat.AbstractHeartbeatMonitor: Finished processing 1 heartbeats in 48942 nanos

2023-09-11 17:23:37,312 DEBUG org.apache.kudu.util.SecurityUtil: Using ticketCache: krb5cc_cldr

2023-09-11 17:23:37,353 DEBUG org.apache.kudu.util.SecurityUtil: Could not login via JAAS. Using no credentials: Unable to obtain Principal Name for authentication

2023-09-11 17:23:37,532 DEBUG org.apache.kudu.util.NetUtil: Resolved IP of `machine-bigdata-h02' to [machine-bigdata-h02/10.172.33.42] in 596277ns

2023-09-11 17:23:37,758 DEBUG org.apache.kudu.client.Connection: [peer master-10.172.33.42:7051(10.172.33.42:7051)] connecting to peer

2023-09-11 17:23:37,775 DEBUG org.apache.kudu.shaded.io.netty.channel.DefaultChannelId: -Dio.netty.processId: 14466 (auto-detected)

2023-09-11 17:23:37,780 DEBUG org.apache.kudu.shaded.io.netty.util.NetUtil: -Djava.net.preferIPv4Stack: true

2023-09-11 17:23:37,780 DEBUG org.apache.kudu.shaded.io.netty.util.NetUtil: -Djava.net.preferIPv6Addresses: false

2023-09-11 17:23:37,784 DEBUG org.apache.kudu.shaded.io.netty.util.NetUtilInitializations: Loopback interface: lo (lo, 127.0.0.1)

2023-09-11 17:23:37,786 DEBUG org.apache.kudu.shaded.io.netty.util.NetUtil: /proc/sys/net/core/somaxconn: 128

2023-09-11 17:23:37,788 DEBUG org.apache.kudu.shaded.io.netty.channel.DefaultChannelId: -Dio.netty.machineId: 00:50:56:ff:fe:8c:be:7b (auto-detected)

2023-09-11 17:23:37,846 DEBUG org.apache.kudu.shaded.io.netty.buffer.ByteBufUtil: -Dio.netty.allocator.type: pooled

2023-09-11 17:23:37,846 DEBUG org.apache.kudu.shaded.io.netty.buffer.ByteBufUtil: -Dio.netty.threadLocalDirectBufferSize: 0

2023-09-11 17:23:37,846 DEBUG org.apache.kudu.shaded.io.netty.buffer.ByteBufUtil: -Dio.netty.maxThreadLocalCharBufferSize: 16384

2023-09-11 17:23:37,931 DEBUG org.apache.kudu.client.Connection: [peer master-10.172.33.42:7051(10.172.33.42:7051)] Successfully connected to peer

2023-09-11 17:23:37,980 DEBUG org.apache.kudu.shaded.io.netty.buffer.AbstractByteBuf: -Dorg.apache.kudu.shaded.io.netty.buffer.checkAccessible: true

2023-09-11 17:23:37,981 DEBUG org.apache.kudu.shaded.io.netty.buffer.AbstractByteBuf: -Dorg.apache.kudu.shaded.io.netty.buffer.checkBounds: true

2023-09-11 17:23:37,981 DEBUG org.apache.kudu.shaded.io.netty.util.ResourceLeakDetectorFactory: Loaded default ResourceLeakDetector: org.apache.kudu.shaded.io.netty.util.ResourceLeakDetector@2b3f4016

2023-09-11 17:23:38,004 DEBUG org.apache.kudu.shaded.io.netty.util.Recycler: -Dio.netty.recycler.maxCapacityPerThread: 4096

2023-09-11 17:23:38,004 DEBUG org.apache.kudu.shaded.io.netty.util.Recycler: -Dio.netty.recycler.ratio: 8

2023-09-11 17:23:38,004 DEBUG org.apache.kudu.shaded.io.netty.util.Recycler: -Dio.netty.recycler.chunkSize: 32

2023-09-11 17:23:38,004 DEBUG org.apache.kudu.shaded.io.netty.util.Recycler: -Dio.netty.recycler.blocking: false

2023-09-11 17:23:38,277 DEBUG org.apache.kudu.client.Negotiator: SASL mechanism PLAIN chosen for peer machine-bigdata-h02

2023-09-11 17:23:38,532 DEBUG org.apache.kudu.shaded.io.netty.handler.ssl.SslHandler: [id: 0xembedded, L:embedded - R:embedded] HANDSHAKEN: protocol:TLSv1.2 cipher suite:TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384

2023-09-11 17:23:38,569 DEBUG org.apache.kudu.client.Negotiator: Authenticated connection [id: 0x21e716de, L:/10.172.33.41:44978 - R:machine-bigdata-h02/10.172.33.42:7051] using SASL/PLAIN

2023-09-11 17:23:38,744 DEBUG org.apache.kudu.client.AsyncKuduClient: Learned about tablet Kudu Master for table 'Kudu Master' with partition [<start>, <end>)

2023-09-11 17:23:38,748 DEBUG org.apache.kudu.client.AsyncKuduClient: Retrying sending RPC KuduRpc(method=Ping, tablet=null, attempt=1, TimeoutTracker(timeout=30000, elapsed=1267), Traces: [0ms] refreshing cache from master, [177ms] Sub RPC ConnectToMaster: sending RPC to server master-10.172.33.42:7051, [1140ms] Sub RPC ConnectToMaster: received response from server master-10.172.33.42:7051: OK, deferred=null) after lookup

2023-09-11 17:23:38,766 DEBUG com.stumbleupon.async.Deferred: callback=retry RPC@1686341592 returned Deferred@1076179283(state=PENDING, result=null, callback=(continuation of Deferred@1644245891 after retry RPC@1686341592), errback=(continuation of Deferred@1644245891 after retry RPC@1686341592)), so the following Deferred is getting paused: Deferred@1644245891(state=PAUSED, result=Deferred@1076179283, callback=ping supports ignore operations -> wakeup thread Timer-Driven Process Thread-1, errback=ping supports ignore operations -> wakeup thread Timer-Driven Process Thread-1)

2023-09-11 17:23:38,779 INFO org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent: Scheduled PutKudu[id=2b2950fe-0ea5-1659-0000-0000647695b8] to run with 1 threads

2023-09-11 17:23:39,283 ERROR org.apache.nifi.processors.kudu.PutKudu: [PutKudu[id=2b2950fe-0ea5-1659-0000-0000647695b8], StandardFlowFileRecord[uuid=be4462ec-77ab-4782-abc3-1cf4733c8666,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1694445816369-1, container=default, section=1], offset=0, length=17],offset=0,name=be4462ec-77ab-4782-abc3-1cf4733c8666,size=17]] Failed to push {} to Kudu

org.apache.kudu.client.NonRecoverableException: Failed to execute Ranger client subprocess request: unable to send message: Other end of pipe was closed

at org.apache.kudu.client.KuduException.transformException(KuduException.java:110)

at org.apache.kudu.client.KuduClient.joinAndHandleException(KuduClient.java:470)

at org.apache.kudu.client.KuduClient.openTable(KuduClient.java:288)

With Kerberos disabled in the cluster and in each service, we don't understand why this debug trace is present in the log: "DEBUG org.apache.kudu.util.SecurityUtil: Could not login via JAAS. Using no credentials: Unable to obtain Principal Name for authentication"

I also checked the Kudu cluster health with the ksck command, as follows:
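Most of the trace above is Netty start-up noise at DEBUG level, so it can help to reduce the log to the ERROR entries and the exception that follows each one. The sketch below assumes the line layout seen in the quoted log (timestamp, level, logger, message); the function name `summarize_errors` is hypothetical. Note that the same log later reports the connection as authenticated using SASL/PLAIN, so the JAAS DEBUG line appears to be informational rather than the cause of the failure.

```python
import re

# Assumed layout, taken from the log lines quoted above:
#   "<date> <time>,<millis> <LEVEL> <logger>: <message>"
LOG_LINE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"(?P<level>[A-Z]+) (?P<logger>\S+): (?P<msg>.*)$"
)
# A stack trace header looks like "fully.qualified.SomeException: message".
EXCEPTION_LINE = re.compile(
    r"^(?P<cls>[\w.$]+(?:Exception|Error)): (?P<msg>.*)$"
)


def summarize_errors(log_text):
    """Return (timestamp, logger, exception class, exception message)
    for the first exception line that follows each ERROR entry."""
    results = []
    current = None  # the ERROR entry we are currently inside, if any
    for raw in log_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        entry = LOG_LINE.match(line)
        if entry:
            # A new log entry starts; remember it only if it is an ERROR.
            current = entry if entry.group("level") == "ERROR" else None
            continue
        exc = EXCEPTION_LINE.match(line)
        if current and exc:
            results.append(
                (current.group("ts"), current.group("logger"),
                 exc.group("cls"), exc.group("msg"))
            )
            current = None  # keep only the first exception per ERROR entry
    return results
```

Passing the contents of nifi-app.log to `summarize_errors` should leave only the PutKudu failure and its `NonRecoverableException` message about the Ranger client subprocess, which is the line worth searching for.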

sudo -u kudu kudu cluster ksck machine-bigdata-h02

Master Summary

UUID | Address | Status

----------------------------------+----------------------+---------

779ae7e08c9e4a3fa82d007bd50b036e | machine-bigdata-h02 | HEALTHY

Flags of checked categories for Master:

Flag | Value | Master

---------------------+-------------------------------------------------------------+-------------------------

builtin_ntp_servers | 0.pool.ntp.org,1.pool.ntp.org,2.pool.ntp.org,3.pool.ntp.org | all 1 server(s) checked

time_source | system | all 1 server(s) checked

Tablet Server Summary

UUID | Address | Status | Location | Tablet Leaders | Active Scanners

----------------------------------+--------------------------------------------+---------+----------+----------------+-----------------

f8af62e750d8455795884889b9551d4e | machine-bigdata-h02:7050 | HEALTHY | /default | 1 | 0

Tablet Server Location Summary

Location | Count

----------+---------

/default | 1

Flags of checked categories for Tablet Server:

Flag | Value | Tablet Server

---------------------+-------------------------------------------------------------+-------------------------

builtin_ntp_servers | 0.pool.ntp.org,1.pool.ntp.org,2.pool.ntp.org,3.pool.ntp.org | all 1 server(s) checked

time_source | system | all 1 server(s) checked

Version Summary

Version | Servers

--------------------+-------------------------

1.15.0.7.1.8.0-801 | all 2 server(s) checked

Tablet Summary

The cluster doesn't have any matching system tables

Summary by table

Name | RF | Status | Total Tablets | Healthy | Recovering | Under-replicated | Unavailable

----------------+----+---------+---------------+---------+------------+------------------+-------------

default.gianni | 1 | HEALTHY | 1 | 1 | 0 | 0 | 0

Tablet Replica Count Summary

Statistic | Replica Count

----------------+---------------

Minimum | 1

First Quartile | 1

Median | 1

Third Quartile | 1

Maximum | 1

Total Count Summary

| Total Count

----------------+-------------

Masters | 1

Tablet Servers | 1

Tables | 1

Tablets | 1

Replicas | 1

OK

Can someone help us?

Thank you

Created on 09-12-2023 09:04 AM - edited 09-12-2023 09:06 AM

I would like to add some additional information.

We are using Impala 4.0.0.7.1.8.0-801 and Kudu 1.15.0.7.1.8.0-801.

Running the following command, I obtain:

kudu hms list machine-bigdata-h02

database | table | type | kudu.table_name

----------+--------+----------------+-----------------

default | gianni | EXTERNAL_TABLE | default.gianni

while I get the following error when running this command

kudu hms table list machine-bigdata-h02

or

kudu hms check machine-bigdata-h02

End of file: Failed to execute Ranger client subprocess request: unable to send message: Other end of pipe was closed

The log file /var/log/kudu/kudu-master.INFO doesn't show any other information.

I hope this helps to understand and solve our issue.

Thanks

Created on 09-13-2023 08:56 AM - edited 09-13-2023 08:56 AM

Hello to all again. I think that my issue is related to a missing authentication by Ranger.

So I followed the instructions in the Cloudera guide (https://docs.cloudera.com/cdp-private-cloud-base/7.1.8/kudu-security/topics/kudu-ranger-policies.htm...) about

Ranger policies for Kudu

and configured the Kudu policy as follows.

I restarted both the Kudu and Ranger services.

But when I run the command, it gives me an error:

sudo -u nifi kudu table describe machine-bigdata-h02 default.gianni

End of file: Failed to execute Ranger client subprocess request: unable to send message: Other end of pipe was closed

while if I run the same command as a trusted user such as kudu, hive or impala, it works

sudo -u kudu kudu table describe machine-bigdata-h02 default.gianni

or

sudo -u impala kudu table describe machine-bigdata-h02 default.gianni

TABLE default.gianni (

id INT64 NOT NULL,

name STRING NULLABLE,

PRIMARY KEY (id)

)

OWNER hueadmin

REPLICAS 1

COMMENT

Now I don't understand why the Ranger policy added in the cm_kudu service doesn't work. Could someone explain what I'm missing in my Ranger policy for Kudu?
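The per-user comparison above (nifi fails, kudu and impala succeed) can be repeated after every policy change with a small script. This is only a sketch: it wraps the exact `sudo -u <user> kudu table describe <master> <table>` command from the post, and it assumes the kudu CLI exits non-zero and writes its message to stderr on failure. The helper names `describe_cmd` and `check_users` are hypothetical.

```python
import subprocess


def describe_cmd(user, master, table):
    """Build the command used in the post:
    sudo -u <user> kudu table describe <master> <table>"""
    return ["sudo", "-u", user, "kudu", "table", "describe", master, table]


def check_users(users, master, table, run=subprocess.run):
    """Run the describe command as each user.

    Returns a dict mapping user -> (succeeded, first line of output).
    Assumption: a failed call has a non-zero exit code and reports
    the error on stderr. `run` is injectable so it can be faked.
    """
    report = {}
    for user in users:
        proc = run(describe_cmd(user, master, table),
                   capture_output=True, text=True)
        ok = proc.returncode == 0
        output = (proc.stdout if ok else proc.stderr).strip()
        report[user] = (ok, output.splitlines()[0] if output else "")
    return report
```

For example, `check_users(["kudu", "impala", "nifi"], "machine-bigdata-h02", "default.gianni")` would reproduce the comparison in this post and show at a glance which users still hit the Ranger subprocess error.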

Created on 09-13-2023 09:07 AM - edited 09-13-2023 09:23 AM

I'm glad to announce that I have finally solved my issue. Thanks again for the community support. I would like to explain the workaround I applied, hoping it will be useful for others affected by the same issue.

The issue was related to a missing authentication by Ranger. I don't understand why the solution "Ranger policies for Kudu" described here https://docs.cloudera.com/cdp-private-cloud-base/7.1.8/kudu-security/topics/kudu-ranger-policies.htm... doesn't work. Anyway...

So I decided to follow this guide, "Specifying trusted users" ( https://docs.cloudera.com/cdp-private-cloud-base/7.1.8/kudu-security/topics/kudu-security-trusted-us... )

In Cloudera Manager (Kudu service), I set the property "Master Advanced Configuration Snippet (Safety Valve) for gflagfile" to this value:

--trusted_user_acl=impala,hive,kudu,rangeradmin,nifi

Then I restarted Kudu. After this setting, the command works fine:

sudo -u nifi kudu table describe nautilus-bigdata-h02 default.gianni

TABLE default.gianni (

id INT64 NOT NULL,

name STRING NULLABLE,

PRIMARY KEY (id)

)

OWNER hueadmin

REPLICAS 1

COMMENT

Even the NiFi PutKudu processor works fine, and I can write my row to the table.

Thanks to all !!
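For anyone applying the same workaround, here is a minimal sketch of how the `--trusted_user_acl` line could be extended without dropping users that are already listed. It operates on gflagfile text only; the function name `add_trusted_users` is hypothetical, and the snippet still has to be pasted into the safety valve and Kudu restarted as described above. As I understand the Kudu documentation, users on this list skip the fine-grained (Ranger) authorization checks, which is why this works as a workaround rather than as a fix for the Ranger policy itself.

```python
FLAG = "--trusted_user_acl="


def add_trusted_users(gflag_text, new_users):
    """Return gflagfile text whose --trusted_user_acl line also lists new_users.

    Existing users keep their order; users already present are not repeated.
    """
    lines = gflag_text.splitlines()
    for i, line in enumerate(lines):
        if line.strip().startswith(FLAG):
            users = [u for u in line.strip()[len(FLAG):].split(",") if u]
            for user in new_users:
                if user not in users:
                    users.append(user)
            lines[i] = FLAG + ",".join(users)
            return "\n".join(lines)
    # Flag not present yet: append a new line with only the requested users.
    # Setting the flag replaces whatever default list the cluster uses,
    # so pass the full list in that case.
    lines.append(FLAG + ",".join(dict.fromkeys(new_users)))
    return "\n".join(lines)
```

Calling `add_trusted_users("--trusted_user_acl=impala,hive,kudu,rangeradmin", ["nifi"])` yields the value used in this post.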