Support Questions

- Cloudera Community

PutHBaseJSON performance optimization

- Labels: Apache HBase, Apache NiFi

Created 06-25-2018 06:49 AM

The slowest part of our data flow is the PutHBaseJSON processor, and I am trying to find a way to optimize it. The processor has a Batch Size property that controls how many flow files it processes in a single execution. It is set to 25 by default, and I have tried increasing it up to 1000 with little performance gain. Increasing the concurrent tasks also hasn't sped up the put commands that the processor runs. Has anyone else worked with this processor and optimized it?

The description of the batch property says that the processor does the put by first grouping the flow files by table. Is there anything I can do here? The table name already arrives with the flow file as an attribute and is extracted from it using Expression Language. I am not sure how to 'group' the flow files before they reach PutHBaseJSON. Kindly let me know of any ideas.

Created on 06-25-2018 12:25 PM - edited 08-17-2019 05:22 PM

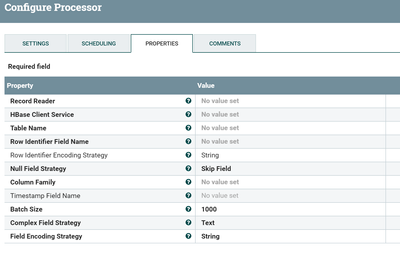

Use the record-oriented processor PutHBaseRecord instead of PutHBaseJSON.

The PutHBaseRecord processor works with chunks of data based on the configured Record Reader (for example, JsonTreeReader), so you can send an array of JSON messages/records to it. Using the Record Reader controller service, the processor reads the JSON messages/records and puts them into HBase.

Adjust the batch size until you get good performance:

| Property | Value | Description |
|---|---|---|
| Batch Size | 1000 | The maximum number of records to be sent to HBase at any one time from the record set. |

Refer to this link to configure Record Reader controller service.
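
To make the Batch Size description above concrete, here is a minimal Python sketch of the idea: one flow file carries an array of JSON records, and the records are sent in slices of at most Batch Size. This is only an illustration, not NiFi's implementation; the `batches` helper and the sample data are hypothetical.

```python
import json
from typing import Iterator


def batches(records: list[dict], batch_size: int = 1000) -> Iterator[list[dict]]:
    """Yield consecutive slices of at most batch_size records."""
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]


# One flow file whose content is a JSON array of records (made-up data).
flowfile_content = json.dumps([{"id": str(i), "value": i * 2} for i in range(2500)])

# A record reader would parse the array into individual records.
records = json.loads(flowfile_content)

# Each slice stands in for one group of puts sent to HBase together.
batch_sizes = [len(batch) for batch in batches(records, batch_size=1000)]
print(batch_sizes)
```

With 2500 records and a batch size of 1000, the single flow file results in three groups of puts rather than 2500 individually handled flow files.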

Created 06-26-2018 01:39 AM

Hi Shu, thank you for your reply. I'll have to study the Record Reader in detail, because by the time our flow file reaches the PutHBaseJSON processor it already contains the reduced JSON in its payload and simply needs to be put into the target HBase table. So I don't need any manipulation or parsing of it, which is apparently what Record Readers do. I also see that the Record Reader is a required property, so there is no way around it. Is there a way to create a dummy reader that does nothing? :P I'll explore this on my own as well.

Created 06-26-2018 03:00 AM

Even though you already have reduced JSON, you can still use the MergeRecord processor, with JsonTreeReader/JsonRecordSetWriter controller services, to merge single JSON messages into an array of JSON messages. Configure the Min/Max number of records per flow file, and use the Max Bin Age property as a fallback that makes a bin eligible to merge once it reaches that age.

Then feed the merged relationship to the PutHBaseRecord processor (give it the row identifier field name from your JSON message). The purpose of record-oriented processors is to work with chunks of data to get good performance, instead of working with one record at a time.
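
As a rough illustration of what the merge step does to the payloads, here is a small Python sketch. It is not how MergeRecord is implemented; `merge_records`, `max_records`, and the sample messages are hypothetical, and the final flush only stands in for Max Bin Age releasing a partially filled bin.

```python
import json


def merge_records(messages: list[str], max_records: int) -> list[str]:
    """Combine single-object JSON payloads into JSON-array payloads."""
    merged: list[str] = []
    current_bin: list[dict] = []
    for message in messages:
        current_bin.append(json.loads(message))
        if len(current_bin) == max_records:
            # Bin is full: emit it as one merged payload.
            merged.append(json.dumps(current_bin))
            current_bin = []
    if current_bin:
        # Stand-in for Max Bin Age: flush whatever is left in the bin.
        merged.append(json.dumps(current_bin))
    return merged


# Five single-record payloads, as they would arrive one per flow file.
single_messages = [json.dumps({"id": i, "name": f"row-{i}"}) for i in range(5)]

merged_flowfiles = merge_records(single_messages, max_records=2)
for content in merged_flowfiles:
    print(content)
```

Five single-record payloads become three merged payloads (two full bins and one partial bin), which is the shape of input a record-oriented processor is designed to consume.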

Created 06-26-2018 02:32 AM

I think I misunderstood the purpose of the record reader. It looks clear to me now. Thank you for the suggestion. I'll work on this idea.

Created 07-11-2018 02:13 AM

Hi @Shu, I was able to implement your idea of using MergeRecord -> PutHBaseRecord with the Record Reader controller services. However, I think there is a limitation in PutHBaseRecord. We are syncing Oracle tables to HBase using GoldenGate, and some of the Oracle tables have multiple PK columns. We create the corresponding row key in HBase by concatenating those PKs together. PutHBaseJSON allows this, so we concatenate the PKs and pass the result to the processor as an attribute. In PutHBaseRecord, however, the corresponding property is "Row Identifier Field Name", so it expects the row key to be a field in the JSON that is read by the record reader. I've tried passing the same attribute I was sending to PutHBaseJSON, but it doesn't work. Do you agree?

I can think of a workaround where I transform the JSON to add the attribute (the concatenated PKs) to it, although at this point I don't know how to do that. Even if I manage it, I will also need to change the schema. Kindly let me know if there is a better way to skin this cat.

Created on 07-11-2018 09:01 AM - edited 08-17-2019 05:22 PM

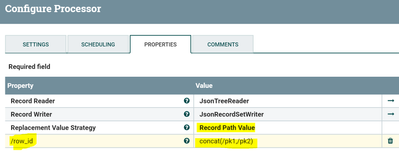

Use an UpdateRecord processor before the PutHBaseRecord processor to create a new field that concatenates the PKs. Then, in the Record Reader used by PutHBaseRecord, add the newly created field to the Avro schema so that you can use the concatenated field as the row identifier.

In UpdateRecord, set Replacement Value Strategy to Record Path Value and add this property:

/row_id //property name: record path of the newly created field

concat(/pk1, /pk2) //property value: the processor reads the pk1 and pk2 field values from each record, concatenates them, and stores the result as row_id

Because UpdateRecord works on chunks of data, it is a very efficient way of updating the contents of a flow file.

For more reference regarding the UpdateRecord processor, follow this link.
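
To show the effect of this step on the data, here is a minimal Python sketch of adding a concatenated key to every record, together with what the extended Avro schema could look like. The `add_row_id` helper, the field names `pk1`, `pk2`, and `amount`, the schema name, and the sample values are all assumptions for illustration; use your own field names and types.

```python
import json


def add_row_id(records: list[dict], pk_fields: list[str], target: str = "row_id") -> list[dict]:
    """Return copies of the records with the PK values joined into one new field."""
    return [
        {**record, target: "".join(str(record[field]) for field in pk_fields)}
        for record in records
    ]


# Made-up records standing in for the merged JSON array.
records = [
    {"pk1": "A1", "pk2": "007", "amount": 12.5},
    {"pk1": "B2", "pk2": "008", "amount": 3.0},
]
updated = add_row_id(records, ["pk1", "pk2"])

# Hypothetical Avro schema for the reader: the original fields plus row_id.
schema = {
    "type": "record",
    "name": "example_row",
    "fields": [
        {"name": "pk1", "type": "string"},
        {"name": "pk2", "type": "string"},
        {"name": "amount", "type": "double"},
        {"name": "row_id", "type": "string"},
    ],
}

print(json.dumps(updated[0]))
print(json.dumps(schema, indent=2))
```

With the new field present in both the records and the schema, PutHBaseRecord can be pointed at it through Row Identifier Field Name (`row_id` in this sketch).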