Cloudera Community > Support > Support Questions

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Questions on NiFi PutHDFS append option

Labels: Apache NiFi

Created on 04-28-2017 07:40 PM - edited 08-17-2019 08:20 PM


Hello,

I have a couple of questions about how NiFi's PutHDFS "append" option works.

But let me start with some background before I ask the questions:

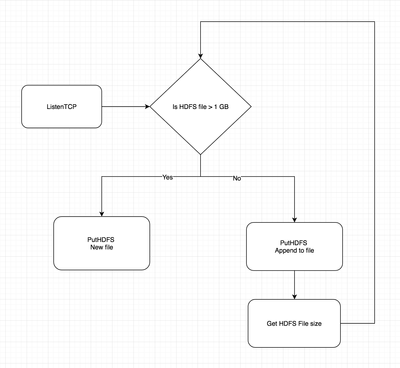

Below is a schematic of how I envision PutHDFS append being used in a scenario where small files are ingested and the requirement is to write them to HDFS as soon as the data comes in (for "near" real-time analysis of that data). As we know, that creates too many small files in HDFS, along with the overhead and performance issues that result.

So one option, I think, is to write the small files to HDFS as they arrive, but keep appending to an existing HDFS file until it has grown to a decent size for HDFS, and then start appending to a new HDFS file. With this approach, my understanding is that we need to keep checking the HDFS file size to decide when it's time to start writing/appending to a new HDFS file. Please see the schematic below.
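
The size-check-and-roll decision described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not NiFi functionality: `RolloverTracker`, the 128 MB threshold, and the `/data/landing/part-NNNNN.log` naming scheme are all hypothetical. It keeps a running byte count in the flow instead of asking HDFS for the file size before every append, which is one way to keep the cost raised in question 1 low, assuming nothing else writes to the same files.

```python
from dataclasses import dataclass

# Hypothetical rollover threshold (128 MB); pick whatever "decent size" means for your cluster.
ROLLOVER_BYTES = 128 * 1024 * 1024


@dataclass
class RolloverTracker:
    """Tracks the size of the file currently being appended to and decides
    when to roll over to a new one. Names and paths are illustrative only."""
    threshold: int = ROLLOVER_BYTES
    index: int = 0
    current_size: int = 0

    def target_for(self, incoming_bytes: int) -> str:
        # Roll to a new file if this append would push the current one past the
        # threshold. An empty file always accepts the data, so an oversized
        # input still lands somewhere instead of rolling forever.
        if self.current_size > 0 and self.current_size + incoming_bytes > self.threshold:
            self.index += 1
            self.current_size = 0
        self.current_size += incoming_bytes
        return f"/data/landing/part-{self.index:05d}.log"


if __name__ == "__main__":
    tracker = RolloverTracker(threshold=100)
    for n in (40, 40, 40, 10):
        # First two writes go to part-00000.log, the last two to part-00001.log.
        print(n, tracker.target_for(n))
```

The design choice here is to trade an HDFS metadata lookup per append for local bookkeeping; it only stays accurate if this flow is the sole writer of the target files.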

1) With this approach, does constantly checking the HDFS file size create enough overhead to affect the performance of the dataflow, or is it not a significant factor?

2) Once the HDFS file reaches the desired size, how is the file "closed"? Does the PutHDFS processor (with "append" selected for "Conflict Resolution Strategy") go back and close the file after some idle time, or does PutHDFS close the file after each append? If the latter (opening and closing for each append), does that create overhead and degrade performance?

If I have it all wrong about how to use the PutHDFS append option, please let me know that as well.

Thanks for your time.

Created on 04-28-2017 08:04 PM - edited 08-17-2019 08:20 PM


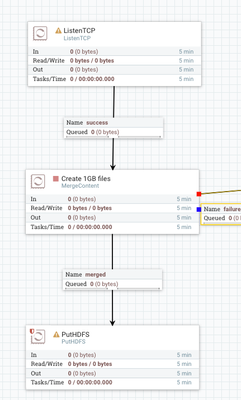

It might be more efficient to just merge the incoming files to the desired size and then write the file to HDFS.

The MergeContent processor can be configured to merge files to a desired size and then create one flow file from all of the merged files.

For example:

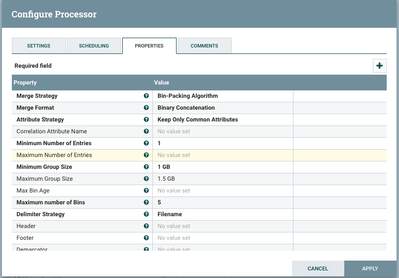

MergeContent would be configured in the following way:

This would create a file between 1 GB and 1.5 GB. The processor can also be configured with a time limit on merged-file generation. Overall, though, I think this approach would put less load on HDFS.
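
The exact settings are not reproduced in the text, so here is a simplified model of the size and time limits just described, assuming Minimum Group Size = 1 GB, Maximum Group Size = 1.5 GB, and a Max Bin Age of 60 seconds (the one-minute wait mentioned later in this thread). It is not NiFi's actual MergeContent implementation; the `merge` function and `Bin` class are hypothetical and only show how a minimum size, a maximum size, and an age limit interact to decide when a merged file is emitted.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Optional, Tuple

GB = 1024 ** 3


@dataclass
class Bin:
    created_at: float
    sizes: List[int] = field(default_factory=list)

    @property
    def total(self) -> int:
        return sum(self.sizes)


def merge(arrivals: Iterable[Tuple[float, int]],
          min_size: int = 1 * GB,
          max_size: int = 3 * GB // 2,
          max_age_s: float = 60.0) -> List[int]:
    """Simplified size/age bin-packing (hypothetical, not NiFi's code).

    arrivals: (timestamp_seconds, size_bytes) pairs in time order.
    Returns the total size of each merged output, in emission order.
    """
    outputs: List[int] = []
    current: Optional[Bin] = None

    def emit() -> None:
        nonlocal current
        if current is not None and current.sizes:
            outputs.append(current.total)
        current = None

    for ts, size in arrivals:
        # Age limit: in this model it is only checked when a new item arrives.
        if current is not None and ts - current.created_at >= max_age_s:
            emit()
        # Never let a bin grow past the maximum size.
        if current is not None and current.total + size > max_size:
            emit()
        if current is None:
            current = Bin(created_at=ts)
        current.sizes.append(size)
        # Minimum size reached: the bin is ready to become one merged file.
        if current.total >= min_size:
            emit()
    emit()  # flush whatever is left at the end of the input
    return outputs


if __name__ == "__main__":
    # Small units for readability: min 10, max 15, 60-second age limit.
    print(merge([(0, 4), (1, 4), (2, 4), (70, 1)], min_size=10, max_size=15))
```

With these small units the example produces one merged output of 12 once the minimum is reached, and a final partial output of 1 that, in this model, is only flushed at the end of the input.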

Created 05-01-2017 08:21 PM


Thank you for the MergeContent suggestion. We do use it right now, but since our requirement is to store data in HDFS as close to real time as possible, we wait no more than a minute in MergeContent and write whatever has accumulated in that time frame to HDFS.

But would you please answer the question below about the PutHDFS append option?

Once the HDFS file reaches the desired size and I switch to a second PutHDFS processor that writes data to a new file (as in the diagram), how is the previous file, where the appends were happening, "closed"? Does the PutHDFS processor (the "append" one) go back and close the file after some idle time, or does PutHDFS close the file after each append? If the latter (opening and closing for each append), could that degrade performance?

Created 05-01-2017 08:46 PM


PutHDFS will "close" the file after each append. Writing a file every minute will be less efficient than, say, writing a bigger file every ten minutes, but you will have to determine which matters more for your use case.

But even waiting a minute is still more efficient than writing 100 files a second.
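
To put rough numbers on the comparison in this answer, here is a short calculation of how many PutHDFS writes happen per hour at the three rates mentioned in the thread. It is plain arithmetic assuming a steady arrival rate, and it treats each write as one open/append/close cycle, per the answer above; it ignores file sizes, block placement, and NameNode load, which also matter in practice. The function name `writes_per_hour` is hypothetical.

```python
SECONDS_PER_HOUR = 3600


def writes_per_hour(writes_per_second: float) -> float:
    """PutHDFS writes per hour at a steady rate.

    Each write is assumed to be one open/append/close cycle, whether it
    creates a new file or appends to an existing one.
    """
    return writes_per_second * SECONDS_PER_HOUR


# The three rates discussed in this thread, expressed as writes per second.
scenarios = {
    "100 small files per second, unmerged": 100,
    "one merged file per minute": 1 / 60,
    "one merged file every ten minutes": 1 / 600,
}

for label, rate in scenarios.items():
    print(f"{label}: {writes_per_hour(rate):,.0f} writes/hour")

# Prints:
# 100 small files per second, unmerged: 360,000 writes/hour
# one merged file per minute: 60 writes/hour
# one merged file every ten minutes: 6 writes/hour
```

Merging for one minute cuts the write count by a factor of 6,000 compared with writing every small file, while ten-minute merging cuts it by another factor of 10, at the cost of latency.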