RPG - Cluster not processing

- Labels: Apache NiFi

Created on 11-01-2017 05:45 PM - edited 08-18-2019 02:12 AM

Hi Team,

I am trying to configure an RPG (Remote Process Group) in my 3-node cluster environment. How can I distribute the data between the 3 nodes?

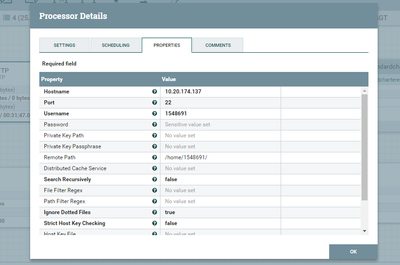

Below is the flow I am trying with the RPG, but it is not processing anything at all. I have attached the details for your reference.

Kindly help me resolve this.
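The pattern being attempted here (ListSFTP, then an RPG, then FetchSFTP) can be pictured with a small simulation. It is a sketch under stated assumptions, not NiFi code: `list_remote_files`, `distribute` and `fetch_on_node` are hypothetical helpers, ListSFTP is assumed to run on the primary node only, and the RPG is simplified to a round-robin spread (the real RPG does not necessarily spread data this evenly).

```python
from itertools import cycle


def list_remote_files(remote_dir, names):
    """Hypothetical stand-in for ListSFTP on the primary node: one record per file."""
    return [{"path": remote_dir, "filename": name} for name in names]


def distribute(records, nodes):
    """Hypothetical stand-in for the RPG: spread records round-robin over the nodes."""
    assignment = {node: [] for node in nodes}
    for record, node in zip(records, cycle(nodes)):
        assignment[node].append(record)
    return assignment


def fetch_on_node(records):
    """Hypothetical stand-in for FetchSFTP: build the full remote path of each file."""
    return [record["path"] + "/" + record["filename"] for record in records]


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]
    records = list_remote_files("/home/1548691", ["a.txt", "b.txt", "HKLPATHAS03.txt"])
    for node, assigned in distribute(records, nodes).items():
        print(node, fetch_on_node(assigned))
```

Only the small listing records travel through the RPG; each node then fetches the actual content of the files it was handed, which is what lets the fetch work spread across the cluster.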

Created 11-01-2017 05:49 PM

What do you mean by 'it's not processing'?

I see all your counters at zero. Do you have data on your FTP server? If you previously ingested the data, did you clear the state of the ListSFTP processor?
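Why clearing state matters can be shown with a simplified model. This is an assumption-laden sketch, not NiFi's implementation: `ListingState` and `list_new_files` are hypothetical, and the state is reduced to a single "latest modification time seen" value. Files already listed are skipped on later runs until that state is cleared.

```python
from dataclasses import dataclass


@dataclass
class ListingState:
    """Simplified stand-in for the state a List processor keeps between runs."""
    latest_mtime: float = float("-inf")


def list_new_files(remote_files, state):
    """Return names modified after the stored timestamp, then advance the timestamp.

    remote_files maps file name -> modification time (a made-up input format).
    """
    new = sorted(name for name, mtime in remote_files.items() if mtime > state.latest_mtime)
    if remote_files:
        state.latest_mtime = max(state.latest_mtime, max(remote_files.values()))
    return new


if __name__ == "__main__":
    remote = {"a.txt": 100.0, "b.txt": 105.0}
    state = ListingState()
    print(list_new_files(remote, state))  # first run lists both files
    print(list_new_files(remote, state))  # nothing new, so nothing is listed
    state = ListingState()                # the equivalent of clearing state
    print(list_new_files(remote, state))  # both files are listed again
```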

Created 11-01-2017 05:52 PM

Yes, I have data there.

    [root@HKLPATHAS03 1548691]# ll
    total 4
    -rw-r--r-- 1 1548691 root 0 Nov 2 00:38 HKLPATHAS03.txt
    drwxr-xr-x 9 1548691 domain users 4096 Nov 2 00:27 nifi-toolkit-1.1.2

You can see it is not moving that file to HDFS:

    [root@HKLPATHAS03 1548691]# sudo -u hdfs hadoop fs -ls /user/1548691/HKLPATHAS03.txt
    ls: `/user/1548691/HKLPATHAS03.txt': No such file or directory
    [root@HKLPATHAS03 1548691]#

Created 11-01-2017 05:54 PM

Did you get any errors? Can you add a file to your FTP server and see if NiFi picks it up? What scheduling frequency do you have on the List processor?

Created 11-01-2017 06:09 PM

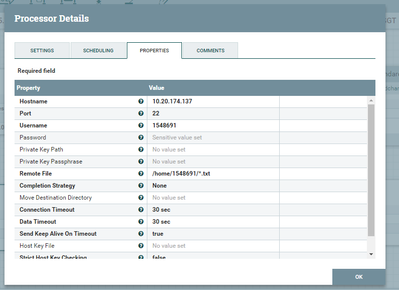

@Abdelkrim Hadjidj Thanks. When a new file arrives, it is processed and fetched into HDFS, but I am also getting the error below in FetchSFTP:

    2017-11-02 01:56:41,942 ERROR [Timer-Driven Process Thread-5] o.a.nifi.processors.standard.FetchSFTP FetchSFTP[id=788c7b35-015f-1000-0000-00000f3979fd] Failed to fetch content for StandardFlowFileRecord[uuid=af918b98-5632-4877-9876-221477204e73,claim=StandardContentClaim [resourceClaim=StandardResourceClaim[id=1509553663114-11, container=default, section=11], offset=677691, length=0],offset=0,name=a.txt,size=0] from filename /home/1548691/*.txt on remote host 10.20.174.137:22 due to java.io.IOException: Failed to obtain file content for /home/1548691/*.txt; routing to failure: java.io.IOException: Failed to obtain file content for /home/1548691/*.txt
    2017-11-02 01:56:41,943 ERROR [Timer-Driven Process Thread-5] o.a.nifi.processors.standard.FetchSFTP
    java.io.IOException: Failed to obtain file content for /home/1548691/*.txt
        at org.apache.nifi.processors.standard.util.SFTPTransfer.getInputStream(SFTPTransfer.java:300) ~[nifi-standard-processors-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at org.apache.nifi.processors.standard.FetchFileTransfer.onTrigger(FetchFileTransfer.java:236) ~[nifi-standard-processors-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at org.apache.nifi.processor.AbstractProcessor.onTrigger(AbstractProcessor.java:27) [nifi-api-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at org.apache.nifi.controller.StandardProcessorNode.onTrigger(StandardProcessorNode.java:1099) [nifi-framework-core-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:136) [nifi-framework-core-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at org.apache.nifi.controller.tasks.ContinuallyRunProcessorTask.call(ContinuallyRunProcessorTask.java:47) [nifi-framework-core-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at org.apache.nifi.controller.scheduling.TimerDrivenSchedulingAgent$1.run(TimerDrivenSchedulingAgent.java:132) [nifi-framework-core-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_60]
        at java.util.concurrent.FutureTask.runAndReset(FutureTask.java:308) [na:1.8.0_60]
        at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.access$301(ScheduledThreadPoolExecutor.java:180) [na:1.8.0_60]
        at java.util.concurrent.ScheduledThreadPoolExecutor$ScheduledFutureTask.run(ScheduledThreadPoolExecutor.java:294) [na:1.8.0_60]
        at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [na:1.8.0_60]
        at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [na:1.8.0_60]
        at java.lang.Thread.run(Thread.java:745) [na:1.8.0_60]
    Caused by: com.jcraft.jsch.SftpException: /home/1548691/*.txt is not unique: [/home/1548691/b.txt, /home/1548691/HKLPATHAS03.txt, /home/1548691/a.txt]
        at com.jcraft.jsch.ChannelSftp.isUnique(ChannelSftp.java:2965) ~[jsch-0.1.54.jar:na]
        at com.jcraft.jsch.ChannelSftp.get(ChannelSftp.java:1314) ~[jsch-0.1.54.jar:na]
        at com.jcraft.jsch.ChannelSftp.get(ChannelSftp.java:1290) ~[jsch-0.1.54.jar:na]
        at org.apache.nifi.processors.standard.util.SFTPTransfer.getInputStream(SFTPTransfer.java:292) ~[nifi-standard-processors-1.1.0.2.1.1.0-2.jar:1.1.0.2.1.1.0-2]
        ... 13 common frames omitted

@

How can I process the whole set of files instead of using *.txt? It is also picking up files from only one node.

How can I pick them up from all 3 nodes?

Created 11-01-2017 06:16 PM

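The error is that you are trying to fetch a remote file named *.txt. FetchSFTP fetches one specific file, but the wildcard matches several files, which is why the log says `/home/1548691/*.txt is not unique`. Use the filename attribute instead of *.txt; the List processor sets this attribute on each FlowFile it emits.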
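The failure in the log can be reproduced in miniature. This sketch only mirrors the behaviour visible in the stack trace (a "get one file" call that rejects a pattern with several matches); `resolve_single_file` is a hypothetical helper and is not JSch's code.

```python
import fnmatch


def resolve_single_file(remote_path, remote_names):
    """Resolve remote_path to exactly one file, failing if a wildcard matches several.

    Mirrors the behaviour seen in the log, not JSch's actual implementation.
    """
    directory, _, pattern = remote_path.rpartition("/")
    matches = [directory + "/" + name for name in remote_names
               if fnmatch.fnmatchcase(name, pattern)]
    if not matches:
        raise OSError(f"No such file: {remote_path}")
    if len(matches) > 1:
        raise OSError(f"{remote_path} is not unique: {matches}")
    return matches[0]


if __name__ == "__main__":
    names = ["b.txt", "HKLPATHAS03.txt", "a.txt"]
    try:
        resolve_single_file("/home/1548691/*.txt", names)    # wildcard: three matches
    except OSError as err:
        print("failure:", err)
    print(resolve_single_file("/home/1548691/a.txt", names))  # one listed file name
```

Passing one concrete file name per FlowFile, rather than a pattern, is what makes each fetch unambiguous.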

Created 11-01-2017 06:18 PM

Use this in the Remote File property of FetchSFTP:

`${path}/${filename}`

To test, right-click ListSFTP, choose View state and clear its state, so that the existing files are listed again.
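How `${path}/${filename}` turns into a different remote file for each FlowFile can be illustrated with a tiny substitution function. This is a hypothetical sketch, not NiFi's Expression Language engine: it handles only plain `${name}` references (a function call such as `${filename:toUpper()}` is left untouched), and it substitutes an empty string for a missing attribute.

```python
import re

# Matches a plain attribute reference such as ${path} or ${filename}.
ATTRIBUTE_REF = re.compile(r"\$\{([A-Za-z0-9_.]+)\}")


def evaluate(expression, attributes):
    """Substitute ${name} with the value of the FlowFile attribute `name`.

    Only plain references are supported; a missing attribute becomes "" here.
    """
    return ATTRIBUTE_REF.sub(lambda m: attributes.get(m.group(1), ""), expression)


if __name__ == "__main__":
    # Attributes as ListSFTP would set them on three listed files.
    listed = [
        {"path": "/home/1548691", "filename": "a.txt"},
        {"path": "/home/1548691", "filename": "b.txt"},
        {"path": "/home/1548691", "filename": "HKLPATHAS03.txt"},
    ]
    for attributes in listed:
        print(evaluate("${path}/${filename}", attributes))
```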

Created 11-01-2017 06:38 PM

Hi Abdelkrim,

Thanks, it's working now.

We can do the same in a standalone instance as well, right? With the RPG it is also taking files from only one node. How can we process from multiple nodes and balance the load?

Why do we need to put the input port outside of the process group, at the root level?

Created 11-01-2017 06:44 PM

Great. Yes, you can use the same approach. To see load balancing you need a lot of files. If there are only a few files, NiFi will not balance them because it optimizes for batching.

Read this article to understand how it works: https://community.hortonworks.com/articles/109629/how-to-achieve-better-load-balancing-using-nifis-s...
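A toy model makes the batching effect concrete. It is not the site-to-site client's actual algorithm: `send_in_batches` is hypothetical, `batch_size` is a made-up parameter, and the model simply sends each full batch to one node before moving to the next. With 3 files everything lands on one node; with 300 files the work spreads out.

```python
def send_in_batches(flowfiles, nodes, batch_size):
    """Toy model of batch-oriented delivery: each batch goes to one node,
    and the next batch goes to the next node. batch_size is a made-up parameter."""
    assignment = {node: [] for node in nodes}
    for batch_number, start in enumerate(range(0, len(flowfiles), batch_size)):
        node = nodes[batch_number % len(nodes)]
        assignment[node].extend(flowfiles[start:start + batch_size])
    return assignment


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]
    for count in (3, 300):
        files = [f"file-{i}.txt" for i in range(count)]
        spread = send_in_batches(files, nodes, batch_size=100)
        print(count, {node: len(batch) for node, batch in spread.items()})
```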

Thanks