Support Questions

Ranger policies failed to refresh after implementing Kerberos

Labels: Apache Ranger

Created 03-29-2017 10:03 AM

Hi guys,

Ranger fails to refresh policies after implementing Kerberos. I implemented Kerberos with a new local MIT KDC, using the Ambari automated setup. HDFS, Hive and HBase work fine with the new authentication method, but there are errors when refreshing policies. Every service where the Ranger plugin is enabled gives me this error:

2017-03-29 11:24:52,657 ERROR client.RangerAdminRESTClient (RangerAdminRESTClient.java:getServicePoliciesIfUpdated(124)) - Error getting policies. secureMode=true, user=nn/hadoop1.locald@EXAMPLE.COM (auth:KERBEROS), response={"httpStatusCode":401,"statusCode":0}, serviceName=CLUSTER_hadoop

2017-03-29 11:24:52,657 ERROR util.PolicyRefresher (PolicyRefresher.java:loadPolicyfromPolicyAdmin(240)) - PolicyRefresher(serviceName=CLUSTER_hadoop): failed to refresh policies. Will continue to use last known version of policies (3)

java.lang.Exception: HTTP 401

at org.apache.ranger.admin.client.RangerAdminRESTClient.getServicePoliciesIfUpdated(RangerAdminRESTClient.java:126)

at org.apache.ranger.plugin.util.PolicyRefresher.loadPolicyfromPolicyAdmin(PolicyRefresher.java:217)

at org.apache.ranger.plugin.util.PolicyRefresher.loadPolicy(PolicyRefresher.java:185)

at org.apache.ranger.plugin.util.PolicyRefresher.run(PolicyRefresher.java:158)

That's for HDFS; for other services the user is different (hive, etc.). I am using HDP 2.5 and Ambari 2.4.1.

These users exist in Kerberos (klist):

- hive/hadoop1.locald@EXAMPLE.COM
- hive/hadoop2.locald@EXAMPLE.COM
- hive/hadoop3.locald@EXAMPLE.COM
- hive/hadoop4.locald@EXAMPLE.COM
- infra-solr/hadoop1.locald@EXAMPLE.COM
- jhs/hadoop2.locald@EXAMPLE.COM
- jn/hadoop1.locald@EXAMPLE.COM
- jn/hadoop2.locald@EXAMPLE.COM
- jn/hadoop3.locald@EXAMPLE.COM
- kadmin/admin@EXAMPLE.COM
- kadmin/changepw@EXAMPLE.COM
- kadmin/hadoop1.locald@EXAMPLE.COM
- kafka/hadoop1.locald@EXAMPLE.COM
- knox/hadoop1.locald@EXAMPLE.COM
- krbtgt/EXAMPLE.COM@EXAMPLE.COM
- livy/hadoop1.locald@EXAMPLE.COM
- livy/hadoop2.locald@EXAMPLE.COM
- livy/hadoop4.locald@EXAMPLE.COM
- nm/hadoop1.locald@EXAMPLE.COM
- nm/hadoop2.locald@EXAMPLE.COM
- nm/hadoop3.locald@EXAMPLE.COM
- nm/hadoop4.locald@EXAMPLE.COM
- nn/hadoop1.locald@EXAMPLE.COM
- nn/hadoop2.locald@EXAMPLE.COM

Created 03-31-2017 01:03 AM

We were getting the same error, and after troubleshooting for some time we found that the Ranger policymgr_external_url (in Ambari under Ranger -> Configs -> Advanced -> Ranger Settings -> External URL) was improperly set to the Ranger host's IP address. We changed it to the FQDN and restarted the affected services (e.g. HS2 for Hive, NN for HDFS, etc.), and the problem was resolved.

Give that a look, and a shot if applicable.
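A quick way to check for the misconfiguration described above is to look at the host part of the configured External URL. The sketch below is a hypothetical helper (the function names and example URLs are illustrative assumptions, not part of Ambari or Ranger); it only inspects a URL string you paste in and never contacts either service. One plausible reason an IP address breaks things is that SPNEGO clients generally build the HTTP/<host> service principal from the host in the URL, while the keytabs Ambari generates are issued for FQDNs.

```python
import ipaddress
from urllib.parse import urlsplit


def host_is_ip_literal(url: str) -> bool:
    """Return True if the URL's host is an IPv4/IPv6 address instead of a name."""
    host = urlsplit(url).hostname  # drops scheme, port and IPv6 brackets
    if not host:
        raise ValueError(f"no host in URL: {url!r}")
    try:
        ipaddress.ip_address(host)
    except ValueError:
        return False  # not an IP literal, so it is a host name
    return True


def looks_like_fqdn(url: str) -> bool:
    """Rough heuristic: a host name (not an IP) that contains at least one dot."""
    host = urlsplit(url).hostname or ""
    return bool(host) and not host_is_ip_literal(url) and "." in host


if __name__ == "__main__":
    # Example values only; substitute your own policymgr_external_url.
    for url in ("http://192.168.1.10:6080", "http://hadoop1.locald:6080"):
        verdict = "FQDN" if looks_like_fqdn(url) else "not an FQDN (IP or short name)"
        print(f"{url}: {verdict}")
```

The dot check is only a heuristic; what matters for Kerberos is that the name matches the host part of the HTTP principal in the Ranger Admin keytab.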

Created on 03-29-2017 10:18 AM - edited 08-18-2019 02:13 AM

Can you please check whether Ranger is also Kerberized? If it is HDP 2.5 or above, it will be Kerberized.

If it is, can you please try the following:

1) Regenerate the keytabs from Ambari and restart the services.

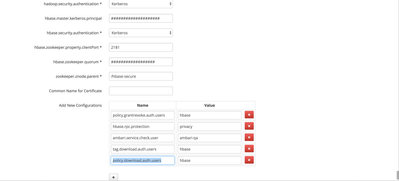

2) Add the following properties to the repos in Ranger:

policy.grantrevoke.auth.users: hbase (or the corresponding service user)

tag.download.auth.users: hbase (or the corresponding service user)

policy.download.auth.users: hbase (or the corresponding service user)

Add the same properties to the HDFS repo too; there the service user will be hdfs, or whatever you have in your cluster.
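To double-check step 2, here is a small sketch that reports which of the three properties do not list a given service user. It assumes the repo config has been copied by hand into a plain Python dict and that each value is a comma-separated user list; the function name is hypothetical and nothing here calls the Ranger REST API.

```python
# Property names as given in step 2; values assumed to be comma-separated users.
AUTH_USER_PROPERTIES = (
    "policy.download.auth.users",
    "tag.download.auth.users",
    "policy.grantrevoke.auth.users",
)


def missing_auth_users(repo_config: dict, service_user: str) -> list:
    """Return the auth-user properties whose value does not include service_user."""
    missing = []
    for prop in AUTH_USER_PROPERTIES:
        # Split "hdfs, admin" into {"hdfs", "admin"}, ignoring blanks.
        users = {u.strip() for u in repo_config.get(prop, "").split(",") if u.strip()}
        if service_user not in users:
            missing.append(prop)
    return missing


if __name__ == "__main__":
    # Hypothetical HDFS repo config with one property left out.
    hdfs_repo = {
        "policy.download.auth.users": "hdfs",
        "tag.download.auth.users": "hdfs",
    }
    print(missing_auth_users(hdfs_repo, "hdfs"))  # ['policy.grantrevoke.auth.users']
```

For the NameNode plugin, the user to check is the short name that nn/<host> maps to through hadoop.security.auth_to_local, not the raw principal.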

Created 03-29-2017 10:47 AM

@Deepak Sharma Thank you for the quick answer. Ranger is also Kerberized. I added those properties and changed the Authentication Type in the HDFS repo to Kerberos. Now Test Connection succeeds, but the same error appears. After these changes, a few new log messages appeared:

2017-03-29 12:46:23,368 ERROR client.RangerAdminRESTClient (RangerAdminRESTClient.java:getServicePoliciesIfUpdated(124)) - Error getting policies. secureMode=true, user=nn/hadoop1.locald@EXAMPLE.COM (auth:KERBEROS), response={"httpStatusCode":401,"statusCode":0}, serviceName=3SOFT_HDL_hadoop

2017-03-29 12:46:23,368 ERROR util.PolicyRefresher (PolicyRefresher.java:loadPolicyfromPolicyAdmin(240)) - PolicyRefresher(serviceName=3SOFT_HDL_hadoop): failed to refresh policies. Will continue to use last known version of policies (3)

java.lang.Exception: HTTP 401

at org.apache.ranger.admin.client.RangerAdminRESTClient.getServicePoliciesIfUpdated(RangerAdminRESTClient.java:126)

at org.apache.ranger.plugin.util.PolicyRefresher.loadPolicyfromPolicyAdmin(PolicyRefresher.java:217)

at org.apache.ranger.plugin.util.PolicyRefresher.loadPolicy(PolicyRefresher.java:185)

at org.apache.ranger.plugin.util.PolicyRefresher.run(PolicyRefresher.java:158)

2017-03-29 12:46:24,577 WARN protocol.ResponseProcessCookies (ResponseProcessCookies.java:processCookies(122)) - Cookie rejected [hadoop.auth="", version:0, domain:hadoop1.locald, path:/, expiry:Thu Jan 01 01:00:00 CET 1970] Domain attribute "hadoop1.locald" violates the Netscape cookie specification

2017-03-29 12:46:24,582 WARN protocol.ResponseProcessCookies (ResponseProcessCookies.java:processCookies(122)) - Cookie rejected [hadoop.auth=""u=nn&p=nn/hadoop1.locald@EXAMPLE.COM&t=kerberos&e=1490820384581&s=hi0THf8d5c4wUgzQbs/+W/PENPo="", version:0, domain:hadoop1.locald, path:/, expiry:Wed Mar 29 22:46:24 CEST 2017] Domain attribute "hadoop1.locald" violates the Netscape cookie specification

2017-03-29 12:46:25,229 INFO BlockStateChange (BlockManager.java:computeReplicationWorkForBlocks(1580)) - BLOCK* neededReplications = 0, pendingReplications = 0.

2017-03-29 12:46:27,578 WARN protocol.ResponseProcessCookies (ResponseProcessCookies.java:processCookies(122)) - Cookie rejected [hadoop.auth="", version:0, domain:hadoop1.locald, path:/, expiry:Thu Jan 01 01:00:00 CET 1970] Domain attribute "hadoop1.locald" violates the Netscape cookie specification

2017-03-29 12:46:27,582 WARN protocol.ResponseProcessCookies (ResponseProcessCookies.java:processCookies(122)) - Cookie rejected [hadoop.auth=""u=nn&p=nn/hadoop1.locald@EXAMPLE.COM&t=kerberos&e=1490820387581&s=S0zta5LH3SfBXFh0XoB3T5ldjsQ="", version:0, domain:hadoop1.locald, path:/, expiry:Wed Mar 29 22:46:27 CEST 2017] Domain attribute "hadoop1.locald" violates the Netscape cookie specification

2017-03-29 12:46:28,230 INFO BlockStateChange (BlockManager.java:computeReplicationWorkForBlocks(1580)) - BLOCK* neededReplications = 0, pendingReplications = 0.

2017-03-29 12:46:28,474 INFO ipc.Server (Server.java:saslProcess(1538)) - Auth successful for nn/hadoop1.locald@EXAMPLE.COM (auth:KERBEROS)

2017-03-29 12:46:28,475 INFO authorize.ServiceAuthorizationManager (ServiceAuthorizationManager.java:authorize(137)) - Authorization successful for nn/hadoop1.locald@EXAMPLE.COM (auth:KERBEROS) for protocol=interface org.apache.hadoop.hdfs.protocol.ClientProtocol

Created on 03-29-2017 11:00 AM - edited 08-18-2019 02:13 AM

Did you regenerate the keytabs and restart the services?

Created 03-29-2017 11:20 AM

Yes, I regenerated the keytabs and restarted the services. I don't get it. The log:

2017-03-29 13:26:35,429 ERROR client.RangerAdminRESTClient (RangerAdminRESTClient.java:getServicePoliciesIfUpdated(124)) - Error getting policies. secureMode=true, user=nn/hadoop1.locald@EXAMPLE.COM (auth:KERBEROS), response={"httpStatusCode":401,"statusCode":0}, serviceName=CLUSTER_hadoop

2017-03-29 13:26:35,429 ERROR util.PolicyRefresher (PolicyRefresher.java:loadPolicyfromPolicyAdmin(240)) - PolicyRefresher(serviceName=CLUSTER_hadoop): failed to refresh policies. Will continue to use last known version of policies (3)

java.lang.Exception: HTTP 401

says user nn/hadoop1.locald@EXAMPLE.COM is unauthorized (HTTP 401), but further down is:

2017-03-29 13:26:38,877 INFO ipc.Server (Server.java:saslProcess(1538)) - Auth successful for nn/hadoop1.locald@EXAMPLE.COM (auth:KERBEROS)

Created 03-29-2017 11:49 AM

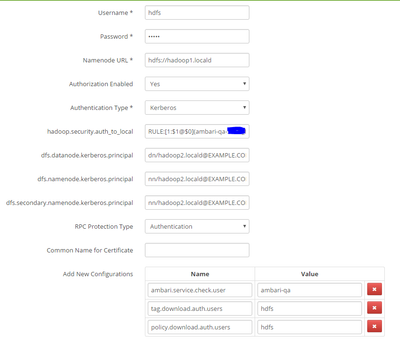

Can you check the hadoop.security.auth_to_local config in HDFS and in the HDFS repo, and whether a rule is specified for nn:

RULE:[2:$1@$0](nn@EXAMPLE.COM)s/.*/hdfs/

With that rule, the call is sent as the hdfs user, and since hdfs is in policy.download.auth.users, it will be allowed to download the policy. Make sure the same config is present in the HDFS repo config as well.

check this config:

RULE:[1:$1@$0](.*@EXAMPLE.COM)s/@.*//
RULE:[2:$1@$0](activity_analyzer@EXAMPLE.COM)s/.*/activity_analyzer/
RULE:[2:$1@$0](activity_explorer@EXAMPLE.COM)s/.*/activity_explorer/
RULE:[2:$1@$0](amshbase@EXAMPLE.COM)s/.*/ams/
RULE:[2:$1@$0](amszk@EXAMPLE.COM)s/.*/ams/
RULE:[2:$1@$0](atlas@EXAMPLE.COM)s/.*/atlas/
RULE:[2:$1@$0](dn@EXAMPLE.COM)s/.*/hdfs/
RULE:[2:$1@$0](hbase@EXAMPLE.COM)s/.*/hbase/
RULE:[2:$1@$0](hive@EXAMPLE.COM)s/.*/hive/
RULE:[2:$1@$0](jhs@EXAMPLE.COM)s/.*/mapred/
RULE:[2:$1@$0](knox@EXAMPLE.COM)s/.*/knox/
RULE:[2:$1@$0](nfs@EXAMPLE.COM)s/.*/hdfs/
RULE:[2:$1@$0](nm@EXAMPLE.COM)s/.*/yarn/
RULE:[2:$1@$0](nn@EXAMPLE.COM)s/.*/hdfs/
RULE:[2:$1@$0](rangeradmin@EXAMPLE.COM)s/.*/ranger/
RULE:[2:$1@$0](rangertagsync@EXAMPLE.COM)s/.*/rangertagsync/
RULE:[2:$1@$0](rangerusersync@EXAMPLE.COM)s/.*/rangerusersync/
RULE:[2:$1@$0](rm@EXAMPLE.COM)s/.*/yarn/
RULE:[2:$1@$0](yarn@EXAMPLE.COM)s/.*/yarn/
DEFAULT
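To reason about which short name a principal such as nn/hadoop1.locald@EXAMPLE.COM ends up as, here is a simplified, hypothetical re-implementation of the rule evaluation. It is not Hadoop's code: it supports only the RULE:[n:format](regex)s/pattern/replacement/ form used above plus DEFAULT, uses Python's `re` instead of Java regex, and returns None where Hadoop would report that no rule matched. RULES is a subset of the rules listed above.

```python
import re

# Parses "RULE:[n:format](regex)s/pattern/replacement/[g]" (regex and sed parts optional).
RULE_RE = re.compile(
    r"RULE:\[(\d+):([^\]]+)\]"
    r"(?:\(([^)]*)\))?"
    r"(?:s/([^/]*)/([^/]*)/(g)?)?"
)


def split_principal(principal):
    """'nn/host@REALM' -> ['REALM', 'nn', 'host'] (index 0 is the realm, like $0)."""
    name, _, realm = principal.partition("@")
    return [realm] + name.split("/")


def apply_rule(rule, params, default_realm):
    """Return the short name produced by one rule, or None if it does not apply."""
    if rule == "DEFAULT":
        # DEFAULT keeps the first component when the realm is the default realm.
        return params[1] if params[0] == default_realm else None
    m = RULE_RE.fullmatch(rule)
    if m is None:
        raise ValueError(f"unsupported rule: {rule}")
    n, fmt, match, pattern, repl, glob = m.groups()
    if len(params) - 1 != int(n):
        return None  # rule only covers principals with exactly n components
    # Substitute $0 (realm), $1, $2 ... into the format string.
    base = re.sub(r"\$(\d+)", lambda g: params[int(g.group(1))], fmt)
    if match is not None and not re.fullmatch(match, base):
        return None
    if pattern is None:
        return base
    # sed-style substitution: first occurrence only unless the /g flag is set.
    return re.sub(pattern, repl, base, count=0 if glob else 1)


def map_principal(principal, rules, default_realm):
    """Return the result of the first rule that applies, else None."""
    params = split_principal(principal)
    for rule in rules:
        result = apply_rule(rule, params, default_realm)
        if result is not None:
            return result
    return None


RULES = [
    "RULE:[1:$1@$0](.*@EXAMPLE.COM)s/@.*//",
    "RULE:[2:$1@$0](dn@EXAMPLE.COM)s/.*/hdfs/",
    "RULE:[2:$1@$0](hive@EXAMPLE.COM)s/.*/hive/",
    "RULE:[2:$1@$0](nm@EXAMPLE.COM)s/.*/yarn/",
    "RULE:[2:$1@$0](nn@EXAMPLE.COM)s/.*/hdfs/",
    "DEFAULT",
]

if __name__ == "__main__":
    print(map_principal("nn/hadoop1.locald@EXAMPLE.COM", RULES, "EXAMPLE.COM"))  # hdfs
```

With this subset, nn/hadoop1.locald@EXAMPLE.COM maps to hdfs, which is the user that needs to appear in policy.download.auth.users; a principal with no specific rule, such as livy/hadoop1.locald@EXAMPLE.COM, falls through to DEFAULT and keeps its first component.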

Created 03-29-2017 12:01 PM

Can you share a screenshot of your repo config? I want to see which user is the repo user.

Created on 03-29-2017 12:31 PM - edited 08-18-2019 02:13 AM

@Deepak Sharma Sorry, I missed this comment. Here is my config:

Created 03-29-2017 11:57 AM

I have exactly the same rules that you uploaded, both in HDFS and in the HDFS repo. I deleted my old repo and let Ambari create a new one; the newly created HDFS repo has the correct configs and Test Connection succeeds.

Created on 03-29-2017 12:05 PM - edited 08-18-2019 02:13 AM

And can you please change the repo user to hdfs if it is something else?