Read snappy files on HDFS (Hive)

Labels: Apache Hadoop, Apache Hive, Apache NiFi

Created on 07-13-2018 07:25 AM - edited 08-18-2019 12:30 AM


Hello guys,

I have a problem with reading snappy files from HDFS.

From the beginning:

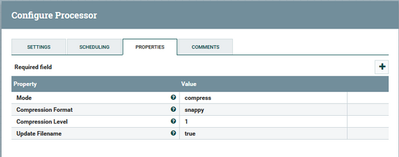

1. Files are compressed on a separate Apache NiFi cluster by a CompressContent processor.

2. Files are sent from NiFi directly to HDFS, into /test/snappy

3. An external table is created in Hive to read the data.

CREATE EXTERNAL TABLE test_snappy( txt string) LOCATION '/test/snappy' ;

4. A simple query:

Select * from test_snappy;

returns 0 rows.

5. The hdfs dfs -text command fails with an error:

$ hdfs dfs -text /test/snappy/dummy_text.txt.snappy

18/07/13 08:46:47 INFO compress.CodecPool: Got brand-new decompressor [.snappy]

Exception in thread "main" java.lang.OutOfMemoryError: Java heap space

at org.apache.hadoop.io.compress.BlockDecompressorStream.getCompressedData(BlockDecompressorStream.java:123)

at org.apache.hadoop.io.compress.BlockDecompressorStream.decompress(BlockDecompressorStream.java:98)

at org.apache.hadoop.io.compress.DecompressorStream.read(DecompressorStream.java:105)

at java.io.InputStream.read(InputStream.java:101)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:87)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:61)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:121)

at org.apache.hadoop.fs.shell.Display$Cat.printToStdout(Display.java:106)

at org.apache.hadoop.fs.shell.Display$Cat.processPath(Display.java:101)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.FsCommand.processRawArguments(FsCommand.java:118)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:315)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:84)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:372)

Here is my test file, dummy_text.txt.snappy:

https://we.tl/pPUMQU028X

Do you have any clues?

Created 07-13-2018 03:25 PM


You have to tell Hive more about the characteristics of your data.

In Hive, do this:

CREATE EXTERNAL TABLE sourcetable (col bigint)

row format delimited

fields terminated by ","

STORED as TEXTFILE

LOCATION 'hdfs:///data/sourcetable'

TBLPROPERTIES("orc.compress"="snappy");

Created 07-16-2018 09:12 AM


I have tried your code:

CREATE EXTERNAL TABLE sourcetable (json string)

row format delimited

fields terminated by ","

STORED as TEXTFILE

LOCATION '/test/snappy'

TBLPROPERTIES("orc.compress"="snappy")

without success. The result is the same as above: 0 rows.

I have found that the Snappy codec version in NiFi differs from the one in HDFS. I'll try to create a custom processor in NiFi that uses the same Snappy codec version as HDFS.

Created 07-23-2018 01:47 PM


I'm pretty sure the parameter "orc.compress" doesn't apply to tables "stored as textfile". From the error message above it's obvious Hive detected Snappy and then for some reason ran out of memory. How big is the Snappy file, and how much memory is allocated to YARN on your cluster?
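One possible explanation for the OutOfMemoryError, independent of file size: the stack trace ends in BlockDecompressorStream.getCompressedData, which, as far as I understand it, reads 4-byte big-endian length prefixes and allocates a buffer of that size. If the file was written in a different Snappy container layout, the reader would interpret header bytes as a length. The sketch below is only a diagnostic under that assumption. The two magic values are the headers I know from snappy-java's stream format and from the Snappy framing format; whether NiFi's CompressContent produced either of them for this file is not confirmed in this thread, and `describe_header` is a hypothetical helper name.

```python
import struct

# Assumed headers (not confirmed for the file in this thread):
# snappy-java's SnappyOutputStream magic and the Snappy framing-format
# stream identifier.
SNAPPY_JAVA_MAGIC = b"\x82SNAPPY\x00"
SNAPPY_FRAMED_MAGIC = b"\xff\x06\x00\x00sNaPpY"


def describe_header(header: bytes) -> dict:
    """Interpret the first bytes of a file as a length-prefixed block reader would.

    The first two big-endian signed 32-bit integers are treated as the
    uncompressed block length and the first compressed chunk length.
    """
    if len(header) < 8:
        raise ValueError("need at least the first 8 bytes of the file")
    uncompressed_len, first_chunk_len = struct.unpack(">ii", header[:8])
    if header.startswith(SNAPPY_JAVA_MAGIC):
        layout = "snappy-java stream"
    elif header.startswith(SNAPPY_FRAMED_MAGIC):
        layout = "snappy framed"
    else:
        layout = "no known magic (may be Hadoop block format)"
    return {
        "layout": layout,
        "uncompressed_len": uncompressed_len,
        "first_chunk_len": first_chunk_len,
    }
```

To try it, pass in the first 16 bytes of the file, for example the bytes shown by `hdfs dfs -cat /test/snappy/dummy_text.txt.snappy | head -c 16 | xxd`. If the file starts with the snappy-java magic, the second integer decodes to 1347442944, so a reader that trusts it would try to allocate about 1.25 GiB for the first chunk, which would run out of heap even for a tiny file.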

Created 07-18-2018 08:19 AM


I tried a custom processor, but that didn't work either.

In the end I am using a workaround: the data is compressed in Hive instead.

Created 07-25-2022 12:47 AM


Can you please share it?