Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Removing text before and after [ ] characters

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Removing text before and after [ ] characters

Created 03-17-2018 06:55 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I have a json file and would like to just keep everything within [ ] so that i can send the flowfile into SplitJson processor and subsequently into elasticsearch. A sample of the content of the file is attached below.

{

"XMLfile_2234": {

"xsi:schemaLocation": "http://xml.mscibarra.com/random.xsd",

"dataset_12232": {

"entry": [

{

"record_date": "2017-03-01",

"country": "USA",

"funds": "100",

},

{

"record_date": "2018-03-01",

"country": "Chile",

"funds": "10000",

}

]

}

}

}How do i remove all text and character before and after the [ ]? I would want to keep the square brackets too. I'm a complete noob with regards to regex and replacetext.

Thanks for the help.

Created on 03-18-2018 06:28 AM - edited 08-17-2019 11:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

First thing first! Treat JSON as JSON and not as plain text. Stop extracting text! 🙂

Now let's talk about the solution!

You have a JSON whose structure looks like this.

{

"XMLfile_2234":{

"xsi:schemaLocation":"http://xml.mscibarra.com/random.xsd",

"dataset_12232":{

"entry":[]

}

}

}You want to pick entry column, which is an array out of it and then split individual array elements into separate docs so that you can ultimately push them to elastic search.

So here is the step by step solution!

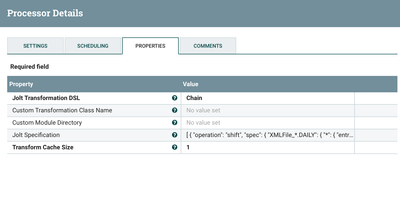

1. Assuming that values after XMLfile_ and dataset_ may differ for different documents, even if they don't this solution will work but since this may happen in a lot of cases, taking that case into consideration also. First of all, read the JSON document and cherry-pick only the entry column of it. How to do that? JoltTransformJSON is the best processor in NiFi to do any JSON operations. Follows the details on your JoltTransformJSON processor configuration.

Your complete Jolt specification

[ {

"operation": "shift",

"spec": {

"XMLFile_*.DAILY": {

"*": {

"entry": "entry"

}

}

}

} ]

This will give you only the entry column from your data.

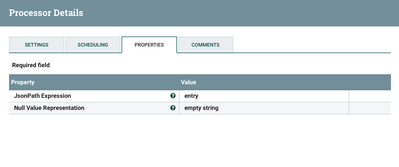

2. Now since you have just the entry column, simply use the SplitJSON processor to split the entry, an array, into individual documents. Follows the snapshot of the SplitJSON processor configuration.

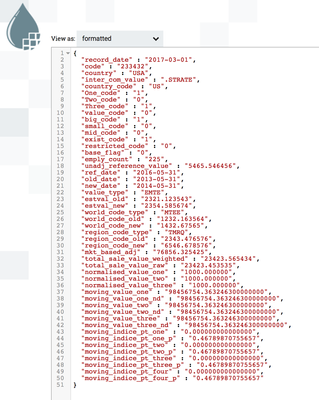

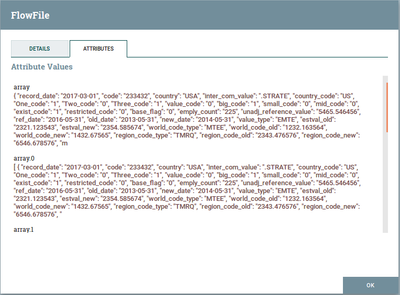

3. The split relation will have your individualized array elements as separate flow files. A sample snapshot from your data after the data you provided in your answer went through SplitJSON processor.

An individual array element in the data. Now a flow file.

Once you have these steps in your flow, the data out of the SplitJSON processor, specifically the split relation of SplitJSON processor, you can re-route it further as per your use case.

Hope that helps!

Created on 03-17-2018 09:00 AM - edited 08-17-2019 11:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

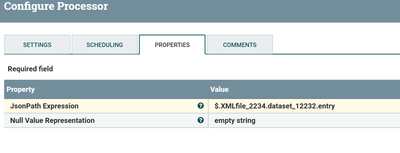

if you want to split the json content on entry array then you don't have to use regex at all.

Method1:-

Use Split Json processor with below configs:-

JsonPath Expression

$.XMLfile_2234.dataset_12232.entry

Then use split relation from splitjson processor to connect to the next processor.

Input Json content:-

input flowfile content that feeding into split json processor.

{"XMLfile_2234": {"xsi:schemaLocation": "http://xml.mscibarra.com/random.xsd","dataset_12232": {"entry": [{"record_date": "2017-03-01","country": "USA","funds": "100"},{"record_date": "2018-03-01","country": "Chile","funds": "10000"}]}}}Output from Splitjson processor:-

flowfile1:-

{"record_date":"2017-03-01","country":"USA","funds":"100"}flowfile2:-

{"record_date":"2018-03-01","country":"Chile","funds":"10000"}(or)

Method2:-

you can use Extract text processor to extract the entry array and keep that as attribute then use ReplaceText processor to overwrite the existing content of the flowfile with new array attribute value, then use splitjson processor to split the array.

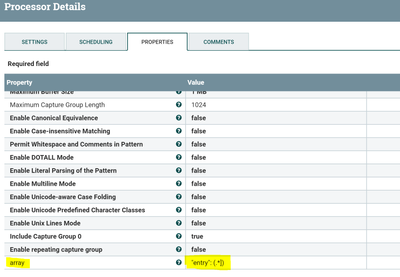

Extract text configs:-

Add new property to the extract text processor by clicking + sign at top right corner and then add the below property

array

"entry": (.*])

Now we are going to extract all the entry array message and keep that into array attribute to the flowfile.

Then use Replacetext processor to replace the contents of flowfile with array message value.

Replacetext configs:-

Replacement Value

${array}Replacement Strategy

Always Replace

Change the above property values in the replace text processor, in this processor we are writing array attribute value as contents of the flowfile.

now we are going to have entry array message as our flowfile content so we can use split json processor to split the array into individual messages.

Input flowfile content:-

{"XMLfile_2234": {"xsi:schemaLocation": "http://xml.mscibarra.com/random.xsd","dataset_12232": {"entry": [{"record_date": "2017-03-01","country": "USA","funds": "100"},{"record_date": "2018-03-01","country": "Chile","funds": "10000"}]}}}output flowfile content:-

[{"record_date": "2017-03-01","country": "USA","funds": "100"},{"record_date": "2018-03-01","country": "Chile","funds": "10000"}]as you can notice the output flowfile content has been changed in this processor.

SplitJson processor:-

JsonPath Expression

$.*

Flow:-

1.Extract text processor 2.Replace Text processor 3.SplitJson processor

By following both methods output would be the same by using method 1 would be easy to complete this task.

Created on 03-18-2018 03:12 AM - edited 08-17-2019 11:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @Shu,

Thanks for your prompt response. Your reply has given me new insights on how to handle my problems. The data which I provided initially was very simplified, as I assume that it was just a regex question. Here's a better representation of the data. There's around 50 fields for each record and around 1,500 records in each json file.

{

"XMLFile_2234.DAILY": {

"xsi:schemaLocation": "http://xml.mscibarra.com/random.xsd",

"dataset_12232": {

"entry": [

{

"record_date": "2017-03-01",

"code": "233432",

"country": "USA",

"inter_com_value": ".STRATE",

"country_code": "US",

"One_code": "1",

"Two_code": "0",

"Three_code": "1",

"value_code": "0",

"big_code": "1",

"small_code": "0",

"mid_code": "0",

"exist_code": "1",

"restricted_code": "0",

"base_flag": "0",

"emply_count": "225",

"unadj_reference_value": "5465.546456",

"ref_date": "2016-05-31",

"old_date": "2013-05-31",

"new_date": "2014-05-31",

"value_type": "EMTE",

"estval_old": "2321.123543",

"estval_new": "2354.585674",

"world_code_type": "MTEE",

"world_code_old": "1232.163564",

"world_code_new": "1432.67565",

"region_code_type": "TMRQ",

"region_code_old": "2343.476576",

"region_code_new": "6546.678576",

"mkt_based_adj": "76856.325425",

"total_sale_value_weighted": "23423.565434",

"total_sale_value_raw": "23423.453535",

"normalised_value_one": "1000.000000",

"normalised_value_two": "1000.000000",

"normalised_value_three": "1000.000000",

"moving_value_one": "98456754.363246300000000",

"moving_value_one_nd": "98456754.363246300000000",

"moving_value_two": "98456754.363246300000000",

"moving_value_two_nd": "98456754.363246300000000",

"moving_value_three": "98456754.363246300000000",

"moving_value_three_nd": "98456754.363246300000000",

"moving_indice_pt_one": "0.000000000000000",

"moving_indice_pt_one_p": "0.46789870755657",

"moving_indice_pt_two": "0.000000000000000",

"moving_indice_pt_two_p": "0.46789870755657",

"moving_indice_pt_three": "0.000000000000000",

"moving_indice_pt_three_p": "0.46789870755657",

"moving_indice_pt_four": "0.000000000000000",

"moving_indice_pt_four_p": "0.46789870755657"

},

{

"record_date": "2017-03-01",

"code": "236453",

"country": "VEN",

"inter_com_value": ".STRATE",

"country_code": "VE",

"One_code": "1",

"Two_code": "0",

"Three_code": "1",

"value_code": "0",

"big_code": "1",

"small_code": "0",

"mid_code": "0",

"exist_code": "1",

"restricted_code": "0",

"base_flag": "0",

"emply_count": "244",

"unadj_reference_value": "5465.546456",

"ref_date": "2016-05-31",

"old_date": "2013-05-31",

"new_date": "2014-05-31",

"value_type": "EMTE",

"estval_old": "2321.123543",

"estval_new": "2354.585674",

"world_code_type": "MTEE",

"world_code_old": "1232.163564",

"world_code_new": "1432.67565",

"region_code_type": "TMRQ",

"region_code_old": "2343.476576",

"region_code_new": "6546.678576",

"mkt_based_adj": "76856.325425",

"total_sale_value_weighted": "23423.565434",

"total_sale_value_raw": "23423.453535",

"normalised_value_one": "1000.000000",

"normalised_value_two": "1000.000000",

"normalised_value_three": "1000.000000",

"moving_value_one": "98456754.363246300000000",

"moving_value_one_nd": "98456754.363246300000000",

"moving_value_two": "98456754.363246300000000",

"moving_value_two_nd": "98456754.363246300000000",

"moving_value_three": "98456754.363246300000000",

"moving_value_three_nd": "98456754.363246300000000",

"moving_indice_pt_one": "0.000000000000000",

"moving_indice_pt_one_p": "0.46789870755657",

"moving_indice_pt_two": "0.000000000000000",

"moving_indice_pt_two_p": "0.46789870755657",

"moving_indice_pt_three": "0.000000000000000",

"moving_indice_pt_three_p": "0.46789870755657",

"moving_indice_pt_four": "0.000000000000000",

"moving_indice_pt_four_p": "0.46789870755657"

}

]

}

}

}I've tried both your suggested methods. For Method 1, I can't construct a Json Expression that allows me to include the entire string of "XMLFile_2234.DAILY" as part of the expression mainly due to the period in the string. Also, if the header value changes from file to file, can I assume that this method may not be suitable?

For Method 2, the ExtractText processor does not seem to be able to extract any value by using the following.

"entry":(.*])

Instead I tried the following expression.

\[([^]]+)\]

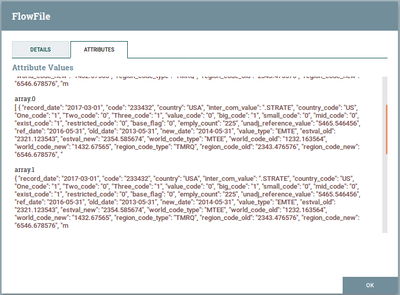

And I got the following values in the attributes.

The values seems to be truncated and the 2nd record is not picked up. Also, if I have around 1,500 records within each Json file that need to be split, will this method of using attributes have any limitations?

Thanks

Created on 03-18-2018 07:38 AM - edited 08-17-2019 11:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

For Method1:-

in split json processor Use JsonPath Expression like

$.['XMLFile_2234.DAILY'].dataset_12232.entry

now we are escaping period in XMLFile_2234.DAILY.

For method2:-

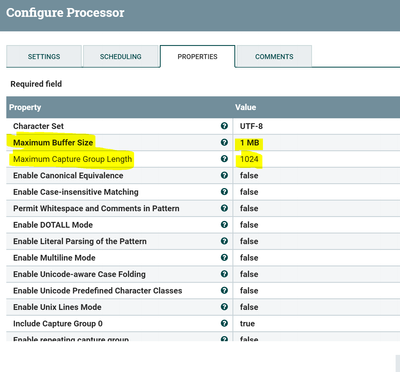

Increase the below properties values in you extract text processor as per your flowfile size and capture group length.

Maximum Buffer Size

1 MB

Maximum Capture Group Length

1024

Created on 03-19-2018 03:44 AM - edited 08-17-2019 11:50 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Even you don't need to use jolt transform processor to get only the entry array as the flow file content.

We can achieve the same result by using split json processor in more easy way.

Configure split json processor as

JsonPath Expression

$.*.*.*.*

By using above json path expression it doesn't matter even if header value changed,array entry has been changed to entry1,exit ... etc until you are having same structure of the json message(same dependency will be applicable by using jolt also), this method will work we are going to split the array it self and then use the splits relation to connect to the next processors.

If you want to do dynamically without any dependencies on the attribute names that are going to be defined in the incoming json message/object then go with this approach.

Created on 03-18-2018 06:28 AM - edited 08-17-2019 11:51 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

First thing first! Treat JSON as JSON and not as plain text. Stop extracting text! 🙂

Now let's talk about the solution!

You have a JSON whose structure looks like this.

{

"XMLfile_2234":{

"xsi:schemaLocation":"http://xml.mscibarra.com/random.xsd",

"dataset_12232":{

"entry":[]

}

}

}You want to pick entry column, which is an array out of it and then split individual array elements into separate docs so that you can ultimately push them to elastic search.

So here is the step by step solution!

1. Assuming that values after XMLfile_ and dataset_ may differ for different documents, even if they don't this solution will work but since this may happen in a lot of cases, taking that case into consideration also. First of all, read the JSON document and cherry-pick only the entry column of it. How to do that? JoltTransformJSON is the best processor in NiFi to do any JSON operations. Follows the details on your JoltTransformJSON processor configuration.

Your complete Jolt specification

[ {

"operation": "shift",

"spec": {

"XMLFile_*.DAILY": {

"*": {

"entry": "entry"

}

}

}

} ]

This will give you only the entry column from your data.

2. Now since you have just the entry column, simply use the SplitJSON processor to split the entry, an array, into individual documents. Follows the snapshot of the SplitJSON processor configuration.

3. The split relation will have your individualized array elements as separate flow files. A sample snapshot from your data after the data you provided in your answer went through SplitJSON processor.

An individual array element in the data. Now a flow file.

Once you have these steps in your flow, the data out of the SplitJSON processor, specifically the split relation of SplitJSON processor, you can re-route it further as per your use case.

Hope that helps!

Created 03-18-2018 10:36 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks @Rahul Soni, the JoltTransformJson processor works for me.

Also thanks to @Shu for explaining everything.