Resource Management in Yarn - Container pending problem ...

- Labels: Apache YARN

Created on 02-05-2016 04:01 PM - edited 08-19-2019 02:52 AM

Dear All,

I am facing some strange behaviour.

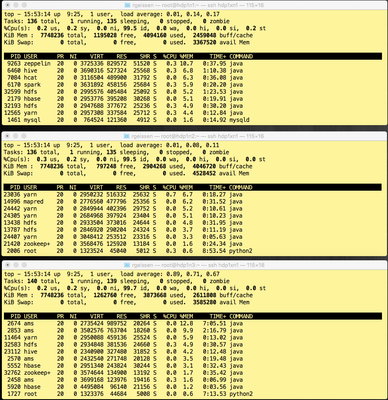

My setup: a 3-node YARN cluster (8 GB memory per node / CentOS 7 / AWS installation)

When I fire up a simple M/R job through Pig, the application starts with 2 containers but stays "pending" while waiting for the 3rd container from the NodeManager.

When I look at the 3 Nodes in Unix I see the following:

My mappers are set up to use 1.5 GB containers, my reducers 2 GB.

What I can see immediately is that there is obviously no free memory available for starting the 3rd container, but there is lots of memory in buff/cache, which I was hoping YARN would also use for starting containers.

Am I missing something?

Br,

Rainer

Created 02-05-2016 05:31 PM

After a cluster restart it worked ... will continue to trace why that happened ...

Created 02-05-2016 06:38 PM

There is a high possibility that previously running jobs did not free up their resources. You should also configure Capacity Scheduler (CS) queues to implement true multitenancy; otherwise one job will hog the whole cluster and other jobs will wait until it finishes.

Created 02-05-2016 10:46 PM

I think there is a misunderstanding about what YARN does. It doesn't care at all how much memory is available on the Linux machines, or about buffers or caches. It only cares about the settings in the YARN configuration. You can check them in Ambari. It is your responsibility to set them correctly so they fit the system.

On the YARN page of Ambari you can find:

- The total amount of RAM available to YARN on any one datanode. This is estimated by Ambari during the installation, but in the end it is your responsibility.

- The min size of a container. (This is also the common divisor of container sizes: every container size is a multiple of it.)

- The max size of a container. (Setting it to the YARN memory of a node is normally a good idea.)

So let's assume you have a 3-node cluster with 32GB of RAM on each node, and YARN memory has been set to 24GB (leaving 8GB for the OS plus HDFS).

Let's also assume your min container size is 1GB.

This gives you 24GB * 3 = 72GB in total for YARN, and at most 72 containers.
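To make the arithmetic above easy to redo for your own cluster, here is a minimal sketch. The function name `cluster_capacity` is made up for illustration; the three inputs correspond to the settings listed above (in `yarn-site.xml` terms, the per-node memory is `yarn.nodemanager.resource.memory-mb` and the min container size is `yarn.scheduler.minimum-allocation-mb`). It assumes all nodes are configured identically.

```python
def cluster_capacity(nodes, yarn_mb_per_node, min_alloc_mb):
    """Return (total YARN memory in MB, max number of min-size containers).

    Hypothetical helper: assumes every node has the same YARN memory setting.
    """
    total_mb = nodes * yarn_mb_per_node
    # Containers cannot span nodes, so count whole containers per node first.
    max_containers = nodes * (yarn_mb_per_node // min_alloc_mb)
    return total_mb, max_containers


# The example from the post: 3 nodes, 24GB for YARN on each, 1GB min container.
total_mb, max_containers = cluster_capacity(3, 24 * 1024, 1024)
print(total_mb // 1024, "GB total,", max_containers, "containers at most")
```

Note that nothing here looks at the free memory reported by Linux; only the configured values matter.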

A couple of important things:

- If you set your map memory to 1.5GB, you have at most 36 containers, since YARN only gives out containers in multiples of the minimum (i.e. 1GB, 2GB, 3GB, ...), so each 1.5GB request is rounded up to 2GB. This is a common problem. So always set your container sizes to a multiple of the min.

- If you have only 16GB on the nodes and you set the YARN memory to 32GB, YARN will happily drive your system out of memory. It is your responsibility to configure it correctly so that it uses the available RAM but not more.
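The two points above can be sketched in a few lines. This is only an illustration of the rounding described in this post (requests rounded up to the next multiple of the min container size), not YARN's actual scheduler code; the function names are made up, and the exact rounding rule can depend on which scheduler you run.

```python
def normalize_request(request_mb, min_alloc_mb):
    """Round a memory request up to the next multiple of the min container size."""
    # Integer ceiling division avoids floating-point surprises.
    return -(-request_mb // min_alloc_mb) * min_alloc_mb


def containers_in_cluster(nodes, yarn_mb_per_node, request_mb, min_alloc_mb):
    """How many containers of the (rounded) requested size fit in the cluster."""
    granted_mb = normalize_request(request_mb, min_alloc_mb)
    return nodes * (yarn_mb_per_node // granted_mb)


def is_oversubscribed(physical_mb, yarn_mb_per_node):
    """True if YARN is configured with more memory than the node physically has."""
    return yarn_mb_per_node > physical_mb


# 1.5GB mappers with a 1GB minimum are granted 2GB each.
print(normalize_request(1536, 1024))
# 3 nodes with 24GB for YARN each: 36 such containers instead of 72.
print(containers_in_cluster(3, 24 * 1024, 1536, 1024))
# 16GB nodes with YARN memory set to 32GB: misconfigured.
print(is_oversubscribed(16 * 1024, 32 * 1024))
```

With 1.5GB mappers and a 1GB minimum, a quarter of every granted container (0.5GB of 2GB) is reserved but never requested, which is why matching container sizes to a multiple of the min matters.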

What YARN does do is kill any task that uses more than its requested amount of RAM, and schedule tasks so that they run local to their data, and so on.