Cloudera Community: Support Questions


Restart App Timeline Server Error (roleParams not in config dictionary) after Druid Service Install HDP 3.0

Labels: Hortonworks Data Platform (HDP)

Created on 08-08-2018 11:59 AM - edited 09-16-2022 06:34 AM


Hi,

Any idea why I get this error when restarting the App Timeline Server after installing the Druid service via Ambari on a fresh HDP 3.0 cluster? The cluster was deployed using Cloudbreak on Azure, with Ambari version 2.7.0.0.

stderr: /var/lib/ambari-agent/data/errors-82.txt

```
Traceback (most recent call last):
  File "/var/lib/ambari-agent/cache/stacks/HDP/3.0/services/YARN/package/scripts/application_timeline_server.py", line 97, in <module>
    ApplicationTimelineServer().execute()
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 353, in execute
    method(env)
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/script.py", line 933, in restart
    if componentCategory and componentCategory.strip().lower() == 'CLIENT'.lower():
  File "/usr/lib/ambari-agent/lib/resource_management/libraries/script/config_dictionary.py", line 73, in __getattr__
    raise Fail("Configuration parameter '" + self.name + "' was not found in configurations dictionary!")
resource_management.core.exceptions.Fail: Configuration parameter 'roleParams' was not found in configurations dictionary!
```

stdout: /var/lib/ambari-agent/data/output-82.txt

```
2018-08-08 11:35:16,639 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.0.0-1334 -> 3.0.0.0-1334
2018-08-08 11:35:16,654 - Using hadoop conf dir: /usr/hdp/3.0.0.0-1334/hadoop/conf
2018-08-08 11:35:16,797 - Stack Feature Version Info: Cluster Stack=3.0, Command Stack=None, Command Version=3.0.0.0-1334 -> 3.0.0.0-1334
2018-08-08 11:35:16,801 - Using hadoop conf dir: /usr/hdp/3.0.0.0-1334/hadoop/conf
2018-08-08 11:35:16,802 - Group['hdfs'] {}
2018-08-08 11:35:16,803 - Group['hadoop'] {}
2018-08-08 11:35:16,803 - Group['users'] {}
2018-08-08 11:35:16,804 - User['hive'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,804 - User['yarn-ats'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,805 - User['druid'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,806 - User['zookeeper'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,807 - User['ams'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,808 - User['ambari-qa'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}
2018-08-08 11:35:16,808 - User['tez'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop', 'users'], 'uid': None}
2018-08-08 11:35:16,809 - User['hdfs'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop'], 'uid': None}
2018-08-08 11:35:16,810 - User['yarn'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,811 - User['mapred'] {'gid': 'hadoop', 'fetch_nonlocal_groups': True, 'groups': ['hadoop'], 'uid': None}
2018-08-08 11:35:16,811 - File['/var/lib/ambari-agent/tmp/changeUid.sh'] {'content': StaticFile('changeToSecureUid.sh'), 'mode': 0555}
2018-08-08 11:35:16,813 - Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] {'not_if': '(test $(id -u ambari-qa) -gt 1000) || (false)'}
2018-08-08 11:35:16,817 - Skipping Execute['/var/lib/ambari-agent/tmp/changeUid.sh ambari-qa /tmp/hadoop-ambari-qa,/tmp/hsperfdata_ambari-qa,/home/ambari-qa,/tmp/ambari-qa,/tmp/sqoop-ambari-qa 0'] due to not_if
2018-08-08 11:35:16,818 - Group['hdfs'] {}
2018-08-08 11:35:16,818 - User['hdfs'] {'fetch_nonlocal_groups': True, 'groups': ['hdfs', 'hadoop', u'hdfs']}
2018-08-08 11:35:16,819 - FS Type:
2018-08-08 11:35:16,819 - Directory['/etc/hadoop'] {'mode': 0755}
2018-08-08 11:35:16,832 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/hadoop-env.sh'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2018-08-08 11:35:16,833 - Directory['/var/lib/ambari-agent/tmp/hadoop_java_io_tmpdir'] {'owner': 'hdfs', 'group': 'hadoop', 'mode': 01777}
2018-08-08 11:35:16,846 - Execute[('setenforce', '0')] {'not_if': '(! which getenforce ) || (which getenforce && getenforce | grep -q Disabled)', 'sudo': True, 'only_if': 'test -f /selinux/enforce'}
2018-08-08 11:35:16,853 - Skipping Execute[('setenforce', '0')] due to not_if
2018-08-08 11:35:16,853 - Directory['/var/log/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'hadoop', 'mode': 0775, 'cd_access': 'a'}
2018-08-08 11:35:16,855 - Directory['/var/run/hadoop'] {'owner': 'root', 'create_parents': True, 'group': 'root', 'cd_access': 'a'}
2018-08-08 11:35:16,855 - Changing owner for /var/run/hadoop from 1011 to root
2018-08-08 11:35:16,855 - Changing group for /var/run/hadoop from 988 to root
2018-08-08 11:35:16,855 - Directory['/tmp/hadoop-hdfs'] {'owner': 'hdfs', 'create_parents': True, 'cd_access': 'a'}
2018-08-08 11:35:16,859 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/commons-logging.properties'] {'content': Template('commons-logging.properties.j2'), 'owner': 'hdfs'}
2018-08-08 11:35:16,860 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/health_check'] {'content': Template('health_check.j2'), 'owner': 'hdfs'}
2018-08-08 11:35:16,866 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/log4j.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop', 'mode': 0644}
2018-08-08 11:35:16,875 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/hadoop-metrics2.properties'] {'content': InlineTemplate(...), 'owner': 'hdfs', 'group': 'hadoop'}
2018-08-08 11:35:16,875 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/task-log4j.properties'] {'content': StaticFile('task-log4j.properties'), 'mode': 0755}
2018-08-08 11:35:16,876 - File['/usr/hdp/3.0.0.0-1334/hadoop/conf/configuration.xsl'] {'owner': 'hdfs', 'group': 'hadoop'}
2018-08-08 11:35:16,880 - File['/etc/hadoop/conf/topology_mappings.data'] {'owner': 'hdfs', 'content': Template('topology_mappings.data.j2'), 'only_if': 'test -d /etc/hadoop/conf', 'group': 'hadoop', 'mode': 0644}
2018-08-08 11:35:16,884 - File['/etc/hadoop/conf/topology_script.py'] {'content': StaticFile('topology_script.py'), 'only_if': 'test -d /etc/hadoop/conf', 'mode': 0755}
2018-08-08 11:35:16,887 - Skipping unlimited key JCE policy check and setup since the Java VM is not managed by Ambari
Command failed after 1 tries
```
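Where the error comes from: the traceback shows the restart path in `script.py` comparing a component category against `'CLIENT'`, and the `Fail` being raised from a `__getattr__` in `config_dictionary.py` that reports the missing key by name. The sketch below reproduces that failure mode in plain Python 3. It is an illustration under assumptions, not Ambari's code: `Fail` is a simplified stand-in, and `MissingConfig` and `component_is_client` are hypothetical names for a placeholder returned for a missing key and for the category check.

```python
class Fail(Exception):
    """Simplified stand-in for resource_management.core.exceptions.Fail."""


class MissingConfig:
    """Hypothetical placeholder for a configuration key that is not present.

    Mirrors the __getattr__ frame in the traceback: any attribute lookup
    that is not found normally (such as .strip) raises Fail naming the key.
    """

    def __init__(self, name):
        self.name = name  # stored normally, so __getattr__ is not consulted for it

    def __getattr__(self, attr):
        raise Fail("Configuration parameter '" + self.name +
                   "' was not found in configurations dictionary!")


def component_is_client(command):
    """Mimic the restart-time check: is the component category CLIENT?

    `command` is a parsed command dict such as {"roleParams": {...}}.
    """
    role_params = command.get("roleParams")
    if role_params is None:
        # Assumption: a missing key comes back as a placeholder, not None.
        category = MissingConfig("roleParams")
    else:
        category = role_params.get("component_category", "")
    # The placeholder is truthy, so .strip() is reached and Fail is raised here.
    return bool(category) and category.strip().lower() == "client"
```

With `{"roleParams": {"component_category": "MASTER"}}` the check simply returns `False`; with a command that lacks `roleParams` it raises `Fail` with the same message as the last line of the traceback, which is why the agent-side symptom points at the command Ambari sent rather than at YARN itself.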

Created 08-08-2018 12:13 PM


Hi @Paul Norris,

I checked what the value of 'roleParams' should be when restarting the App Timeline Server on my local cluster:

```
[root@anaik1 data]# cat command-961.json |grep -i -5 roleParams
]
},
"clusterName": "asnaik",
"commandType": "EXECUTION_COMMAND",
"taskId": 961,
"roleParams": {
"component_category": "MASTER"
},
"componentVersionMap": {
"HDFS": {
"NAMENODE": "3.0.0.0-1634",
```

It should look like the above. Can you please verify the value in yours using this command:

```
cat /var/lib/ambari-agent/data/command-82.json |grep -i -5 roleParams
```
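If you prefer to inspect the parsed value rather than grep's context lines, a small Python sketch like the following does the same check. `role_params_status` and `check_command_file` are hypothetical helper names; the only assumption about the file is that it is a JSON command document like the one above, with `roleParams` as a top-level key.

```python
import json


def role_params_status(command):
    """Describe the roleParams entry of a parsed Ambari agent command dict."""
    role_params = command.get("roleParams")
    if not role_params:
        # Covers both a missing key and an empty {} value.
        return "roleParams is missing or empty"
    return "component_category = %s" % role_params.get("component_category")


def check_command_file(path):
    """Load a command-<taskId>.json file and describe its roleParams."""
    with open(path) as fh:
        return role_params_status(json.load(fh))
```

For example, `print(check_command_file("/var/lib/ambari-agent/data/command-82.json"))` would print `component_category = MASTER` for a command shaped like the one from my cluster, or the missing/empty message otherwise.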

If it is empty, I would suggest restarting the Ambari server once and trying again.

If that doesn't work, check /var/log/ambari-server/ambari-server.log while restarting the App Timeline Server. Any exceptions logged there should give a clue to what is going wrong.
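To pull the relevant exceptions out of a busy ambari-server.log, a sketch along these lines can help. It assumes the line layout seen in the log excerpts in this thread (date, time, level, [thread], message) and that root causes appear on lines starting with "Caused by:"; `summarize_log` is a hypothetical helper, not an Ambari tool.

```python
import re

# Assumed layout, e.g.
# "2018-08-10 09:51:57,451 ERROR [clientInboundChannel-7] Handler:548 - message"
ERROR_LINE = re.compile(r"^\S+ \S+ ERROR \[([^\]]+)\] (.*)$")
# Java root-cause lines, e.g. "Caused by: java.lang.ClassCastException"
CAUSED_BY = re.compile(r"^Caused by: ([\w.$]+)")


def summarize_log(lines):
    """Return (error_messages, caused_by_class_names) from log lines."""
    errors, causes = [], []
    for line in lines:
        line = line.rstrip("\n")
        match = ERROR_LINE.match(line)
        if match:
            errors.append(match.group(2))  # text after the [thread] field
            continue
        cause = CAUSED_BY.match(line.strip())
        if cause:
            causes.append(cause.group(1))  # exception class name only
    return errors, causes
```

Run it over the file while reproducing the restart, e.g. `with open("/var/log/ambari-server/ambari-server.log") as fh: errors, causes = summarize_log(fh)`, and look at the last few entries of each list.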

Hope this helps with your troubleshooting.

Created on 08-10-2018 09:49 AM - edited 08-17-2019 07:59 PM


Hi @Akhil S Naik ,

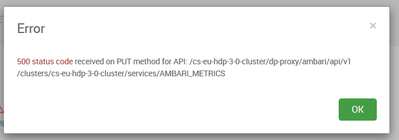

I started again with a fresh cluster, upgraded ambari server and agent to the latest version. After the upgrade when I go back into ambari and try and restart ambari metrics service I now get this error: (I've restarted the ambari-server etc.)

With this in the ambari-server.log:

```
2018-08-10 09:51:57,394 INFO [agent-register-processor-6] HeartBeatHandler:317 - agentOsType = centos7
2018-08-10 09:51:57,404 INFO [agent-register-processor-6] HostImpl:346 - Received host registration, host=[hostname=cs-eu-hdp-3-0-cluster-w1,fqdn=XXX$
, registrationTime=1533894717394, agentVersion=2.7.0.0
2018-08-10 09:51:57,404 INFO [agent-register-processor-6] TopologyManager:643 - TopologyManager.onHostRegistered: Entering
2018-08-10 09:51:57,404 INFO [agent-register-processor-6] TopologyManager:697 - Host XXX.ax.internal.cloudapp.net re-registered, will not b$
2018-08-10 09:51:57,451 ERROR [clientInboundChannel-7] WebSocketAnnotationMethodMessageHandler:548 - Error while processing handler method exception
org.springframework.messaging.converter.MessageConversionException: Could not write JSON: (was java.lang.ClassCastException) (through reference chain: org.springframework.messaging.support$
    at org.springframework.messaging.converter.MappingJackson2MessageConverter.convertToInternal(MappingJackson2MessageConverter.java:342)
    at org.springframework.messaging.converter.AbstractMessageConverter.toMessage(AbstractMessageConverter.java:194)
    at org.springframework.messaging.converter.CompositeMessageConverter.toMessage(CompositeMessageConverter.java:91)
    at org.springframework.messaging.core.AbstractMessageSendingTemplate.doConvert(AbstractMessageSendingTemplate.java:171)
    at org.springframework.messaging.core.AbstractMessageSendingTemplate.convertAndSend(AbstractMessageSendingTemplate.java:142)
    at org.springframework.messaging.simp.SimpMessagingTemplate.convertAndSendToUser(SimpMessagingTemplate.java:225)
    at org.springframework.messaging.simp.SimpMessagingTemplate.convertAndSendToUser(SimpMessagingTemplate.java:208)
    at org.apache.ambari.server.api.AmbariSendToMethodReturnValueHandler.handleReturnValue(AmbariSendToMethodReturnValueHandler.java:84)
    at org.springframework.messaging.handler.invocation.HandlerMethodReturnValueHandlerComposite.handleReturnValue(HandlerMethodReturnValueHandlerComposite.java:103)
    at org.springframework.messaging.handler.invocation.AbstractMethodMessageHandler.processHandlerMethodException(AbstractMethodMessageHandler.java:545)
    at org.springframework.messaging.handler.invocation.AbstractMethodMessageHandler.handleMatch(AbstractMethodMessageHandler.java:520)
    at org.springframework.messaging.simp.annotation.support.SimpAnnotationMethodMessageHandler.handleMatch(SimpAnnotationMethodMessageHandler.java:495)
    at org.springframework.messaging.simp.annotation.support.SimpAnnotationMethodMessageHandler.handleMatch(SimpAnnotationMethodMessageHandler.java:87)
    at org.springframework.messaging.handler.invocation.AbstractMethodMessageHandler.handleMessageInternal(AbstractMethodMessageHandler.java:463)
    at org.springframework.messaging.handler.invocation.AbstractMethodMessageHandler.handleMessage(AbstractMethodMessageHandler.java:401)
    at org.springframework.messaging.support.ExecutorSubscribableChannel$SendTask.run(ExecutorSubscribableChannel.java:135)
    at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)
    at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)
    at java.lang.Thread.run(Thread.java:748)
Caused by: com.fasterxml.jackson.databind.JsonMappingException: (was java.lang.ClassCastException) (through reference chain: org.springframework.messaging.support.ErrorMessage["payload"]->$
    at com.fasterxml.jackson.databind.JsonMappingException.wrapWithPath(JsonMappingException.java:391)
    at com.fasterxml.jackson.databind.JsonMappingException.wrapWithPath(JsonMappingException.java:351)
    at com.fasterxml.jackson.databind.ser.std.StdSerializer.wrapAndThrow(StdSerializer.java:316)
    at com.fasterxml.jackson.databind.ser.std.MapSerializer.serializeFieldsUsing(MapSerializer.java:818)
    at com.fasterxml.jackson.databind.ser.std.MapSerializer.serialize(MapSerializer.java:637)
    at com.fasterxml.jackson.databind.ser.std.MapSerializer.serialize(MapSerializer.java:33)
    at com.fasterxml.jackson.databind.ser.BeanPropertyWriter.serializeAsField(BeanPropertyWriter.java:727)
    at com.fasterxml.jackson.databind.ser.std.BeanSerializerBase.serializeFields(BeanSerializerBase.java:719)
    at com.fasterxml.jackson.databind.ser.BeanSerializer.serialize(BeanSerializer.java:155)
    at com.fasterxml.jackson.databind.ser.BeanPropertyWriter.serializeAsField(BeanPropertyWriter.java:727)
    at com.fasterxml.jackson.databind.ser.std.BeanSerializerBase.serializeFields(BeanSerializerBase.java:719)
    at com.fasterxml.jackson.databind.ser.BeanSerializer.serialize(BeanSerializer.java:155)
    at com.fasterxml.jackson.databind.ser.BeanPropertyWriter.serializeAsField(BeanPropertyWriter.java:727)
    at com.fasterxml.jackson.databind.ser.std.BeanSerializerBase.serializeFields(BeanSerializerBase.java:719)
    at com.fasterxml.jackson.databind.ser.BeanSerializer.serialize(BeanSerializer.java:155)
    at com.fasterxml.jackson.databind.ser.BeanPropertyWriter.serializeAsField(BeanPropertyWriter.java:727)
    at com.fasterxml.jackson.databind.ser.std.BeanSerializerBase.serializeFields(BeanSerializerBase.java:719)
    at com.fasterxml.jackson.databind.ser.BeanSerializer.serialize(BeanSerializer.java:155)
    at com.fasterxml.jackson.databind.ser.BeanPropertyWriter.serializeAsField(BeanPropertyWriter.java:727)
    at com.fasterxml.jackson.databind.ser.std.BeanSerializerBase.serializeFields(BeanSerializerBase.java:719)
    at com.fasterxml.jackson.databind.ser.BeanSerializer.serialize(BeanSerializer.java:155)
    at com.fasterxml.jackson.databind.ser.BeanPropertyWriter.serializeAsField(BeanPropertyWriter.java:727)
    at com.fasterxml.jackson.databind.ser.std.BeanSerializerBase.serializeFields(BeanSerializerBase.java:719)
    at com.fasterxml.jackson.databind.ser.BeanSerializer.serialize(BeanSerializer.java:155)
    at com.fasterxml.jackson.databind.ser.DefaultSerializerProvider._serialize(DefaultSerializerProvider.java:480)
    at com.fasterxml.jackson.databind.ser.DefaultSerializerProvider.serializeValue(DefaultSerializerProvider.java:319)
    at com.fasterxml.jackson.databind.ObjectMapper.writeValue(ObjectMapper.java:2643)
    at org.springframework.messaging.converter.MappingJackson2MessageConverter.convertToInternal(MappingJackson2MessageConverter.java:326)
    ... 18 more
Caused by: java.lang.ClassCastException
```

Created 08-10-2018 10:08 AM


Hi @Paul Norris,

This seems to be a new issue. I suggest you resolve this thread by marking the best answer and start a new thread.

There, post the exception in code format, like `i am code format`.

Additionally, my initial understanding is that this is because you have not done the post-upgrade tasks (which include upgrading AMS). Correct me if I am wrong.

Created 08-10-2018 10:11 AM


OK, I will close this and open a new thread. The code formatting is not working for me: it works while I edit, but not after posting.

I have run the post-upgrade ambari-server task.

Thanks again.

Paul
