Cloudera Community: Support Questions

Schedule a single NiFi process group for multiple tables in a database

Labels: Apache NiFi

Created on 05-25-2018 09:37 AM - edited 08-17-2019 09:00 PM


@Matt Clarke @Matt Burgess @Shu

I have created a process group in NiFi that does the following:

Fetch data from a Hive table >> encrypt the content >> upload to Azure Blob Storage.

Now I have 3000 tables for which this flow needs to be scheduled. Is there any way to use a single flow for all the tables instead of creating 3000 flows, one per table?

Also, I want to upload only some of the tables to Azure storage, not all of them. Is there any way to route tables in the flow based on a condition, so that, for example, Table 1 goes only to gcloud and not to Azure, while Table 2 goes to both Azure and gcloud?

Thanks in advance.

Created on 05-26-2018 07:14 PM - edited 08-17-2019 09:00 PM


Yes, it's possible.

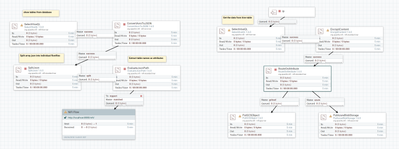

Take a look into the below sample flow

Flow overview:

1. SelectHiveQL //lists the tables of a specific database, output in Avro format

HiveQL Select Query

show tables from default //lists all tables in the default database

2. ConvertAvroToJSON //converts the list of tables from Avro to JSON

3. SplitJson //splits the list so that each table becomes an individual flowfile

4. EvaluateJsonPath //extracts the tab_name value and keeps it as a flowfile attribute (Destination set to flowfile-attribute)

5. Remote Process Group (RPG) //since you are doing this for 3k tables, it is better to use an RPG to distribute the work across the NiFi cluster.

If you don't want to use an RPG, skip processors 5 and 6 and feed the success relationship from 4 directly to 7.

6. Input Port //receives the flowfiles sent by the RPG

7. SelectHiveQL //pulls the data from each Hive table, e.g. with HiveQL Select Query set to select * from ${tab_name}

8. EncryptContent //encrypts the pulled table data

9. RouteOnAttribute //SelectHiveQL writes a query.input.tables attribute, so add two properties to this processor that evaluate that attribute with NiFi Expression Language.

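Example:

azure

${query.input.tables:startsWith("a")} //only table names starting with a

gcloud

${query.input.tables:startsWith("e"):or(${query.input.tables:startsWith("a")})} //table names starting with e or a

Feed the gcloud relationship to a PutGCSObject processor and the azure relationship to a PutAzureBlobStorage processor. Because RouteOnAttribute (with its default Route to Property name strategy) sends a copy of the flowfile to every relationship whose expression is true, tables starting with a go to both Azure and GCS, while tables starting with e go only to GCS.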
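The fan-out and routing logic of steps 2 to 9 can be checked outside NiFi before building the flow. The Python below is a minimal simulation, not NiFi code, and rests on a few assumptions: ConvertAvroToJSON emits a JSON array of SHOW TABLES rows with a tab_name field; the step 7 query is select * from ${tab_name} (the answer does not state it); the table name stands in for query.input.tables; and the table names are hypothetical. Encryption and the uploads are left out.

```python
import json
from typing import Callable, Dict, List

# Python stand-ins for the two example RouteOnAttribute properties:
#   azure  = ${query.input.tables:startsWith("a")}
#   gcloud = ${query.input.tables:startsWith("e"):or(${query.input.tables:startsWith("a")})}
RULES: Dict[str, Callable[[str], bool]] = {
    "azure": lambda t: t.startswith("a"),
    "gcloud": lambda t: t.startswith("e") or t.startswith("a"),
}


def split_table_list(json_text: str) -> List[Dict[str, str]]:
    """Steps 2-4: split a JSON array of SHOW TABLES rows into one
    attribute map per table, keeping only the tab_name value."""
    rows = json.loads(json_text)
    return [{"tab_name": row["tab_name"]} for row in rows]


def select_query(attributes: Dict[str, str]) -> str:
    """Step 7: resolve the assumed query select * from ${tab_name}."""
    return f"select * from {attributes['tab_name']}"


def route(table: str) -> List[str]:
    """Step 9: return every relationship whose rule matches the table name
    (each would receive a copy), or 'unmatched' if none do."""
    matched = [name for name, rule in RULES.items() if rule(table)]
    return matched or ["unmatched"]


if __name__ == "__main__":
    # Hypothetical ConvertAvroToJSON output listing three tables.
    listing = '[{"tab_name": "accounts"}, {"tab_name": "employees"}, {"tab_name": "orders"}]'
    for flowfile in split_table_list(listing):
        print(select_query(flowfile), "->", route(flowfile["tab_name"]))
```

Running it prints `select * from accounts -> ['azure', 'gcloud']`, `select * from employees -> ['gcloud']` and `select * from orders -> ['unmatched']`, which shows that a table matching no property ends up on RouteOnAttribute's unmatched relationship and is not uploaded anywhere.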

Refer to this link for NiFi Expression Language, and write expressions that route only the required tables to Azure and GCS.
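For an explicit list of tables, such as the question's Table 1 (gcloud only) and Table 2 (Azure and gcloud), prefix matching is not enough. One option, sketched below as a hypothetical helper, is to generate each RouteOnAttribute property value by chaining equals() with or(), in the same nested style as the example expressions. It assumes query.input.tables holds the bare table name; if your NiFi version writes qualified database.table names, pass those instead.

```python
from typing import Iterable


def build_route_expression(tables: Iterable[str], attribute: str = "query.input.tables") -> str:
    """Hypothetical helper: build a NiFi Expression Language string that is
    true only when the attribute exactly equals one of `tables`."""
    names = list(tables)
    if not names:
        raise ValueError("at least one table name is required")
    # The first comparison uses the attribute as subject; every further
    # table is OR-ed in as a nested ${...} expression.
    expr = f'{attribute}:equals("{names[0]}")'
    for name in names[1:]:
        expr += f':or(${{{attribute}:equals("{name}")}})'
    return "${" + expr + "}"


if __name__ == "__main__":
    # Hypothetical names matching the question: table1 -> gcloud only,
    # table2 -> both azure and gcloud.
    print("azure:", build_route_expression(["table2"]))
    print("gcloud:", build_route_expression(["table1", "table2"]))
```

The gcloud value comes out as `${query.input.tables:equals("table1"):or(${query.input.tables:equals("table2")})}`. With thousands of tables such chains get long, so grouping tables under a shared naming prefix, where one exists, keeps the properties readable.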

In addition, I have used only a single database to list all the tables. If your 3k tables come from different databases, use a GenerateFlowFile processor and add the list of all the databases, extract each database name as an attribute, and feed the success relationship to the first SelectHiveQL processor.
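The multi-database variant can be sketched the same way. This Python simulation assumes the GenerateFlowFile content lists one database per line, that the per-database split happens in a separate step (for example SplitText followed by ExtractText; the answer does not name these processors), and that the extracted attribute is called database, a hypothetical name. The first SelectHiveQL would then use a query such as show tables from ${database}.

```python
from typing import Dict, List


def split_databases(content: str) -> List[Dict[str, str]]:
    """Turn newline-separated database names into one attribute map per
    database, skipping blank lines (one flowfile per database in NiFi)."""
    flowfiles = []
    for line in content.splitlines():
        name = line.strip()
        if name:
            flowfiles.append({"database": name})
    return flowfiles


def list_tables_query(attributes: Dict[str, str]) -> str:
    """Resolve the assumed query show tables from ${database}."""
    return f"show tables from {attributes['database']}"


if __name__ == "__main__":
    content = "default\nsales\n\nhr\n"  # hypothetical GenerateFlowFile content
    for flowfile in split_databases(content):
        print(list_tables_query(flowfile))
```

Each resulting query then feeds the same per-table steps as the single-database flow.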

Refer to this link for how to pass the database attribute dynamically to the first SelectHiveQL processor.

Reference flow.xml: load-hivetables-to-azure-gcs195751.xml

