Services not starting for fresh installation.

Created 04-06-2018 10:34 PM

I installed HDP 2.6 on CentOS using the web repo.

I tried to start 4 services: Hadoop, SmartSense, Ambari Metrics and ZooKeeper.

During cluster deployment from the web UI, all the services were installed, but none of them started.

The ambari-agent log shows the following:

ERROR 2018-04-06 20:41:19,502 script_alert.py:123 - [Alert][datanode_unmounted_data_dir] Failed with result CRITICAL: ['The following data dir(s) were not found: /hadoop/hdfs/data\n']

WARNING 2018-04-06 20:41:19,508 base_alert.py:138 - [Alert][namenode_hdfs_blocks_health] Unable to execute alert. [Alert][namenode_hdfs_blocks_health] Unable to extract JSON from JMX response

I found no documentation in the Hortonworks community for this.

Any help?

Created 04-08-2018 03:32 PM

Geoffery, Jay.

Thanks a lot for your replies.

I went through the logs and found that the JVM could not start because of insufficient memory.

I bumped up the server's memory. Now it is working.

Thanks a lot
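
For anyone hitting the same symptom, a quick memory check before starting the services might look like the sketch below. It assumes a Linux host whose `/proc/meminfo` has a `MemAvailable` line. The function names and the 1024 MB threshold are illustrative only, not a documented HDP requirement.

```shell
#!/bin/sh
# mem_available_mb [FILE]: print MemAvailable from a meminfo-style file, in MiB.
mem_available_mb() {
    awk '/^MemAvailable:/ {print int($2 / 1024)}' "${1:-/proc/meminfo}"
}

# has_enough_memory REQUIRED_MB [FILE]: succeed if at least REQUIRED_MB is available.
has_enough_memory() {
    avail=$(mem_available_mb "${2:-/proc/meminfo}")
    [ -n "$avail" ] && [ "$avail" -ge "$1" ]
}

# 1024 is a made-up threshold for illustration; size it to your configured JVM heaps.
if has_enough_memory 1024; then
    echo "memory looks sufficient"
else
    echo "less than 1024 MB available; JVMs may fail to start"
fi
```

If the check fails, the NameNode and DataNode logs are the place to confirm whether the JVM actually ran out of memory.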

Created 04-06-2018 10:40 PM

Please tell me if you need the logs and I will attach them here.

There is a limit on file attachments, so I am not attaching anything for now.

Created 04-06-2018 11:06 PM

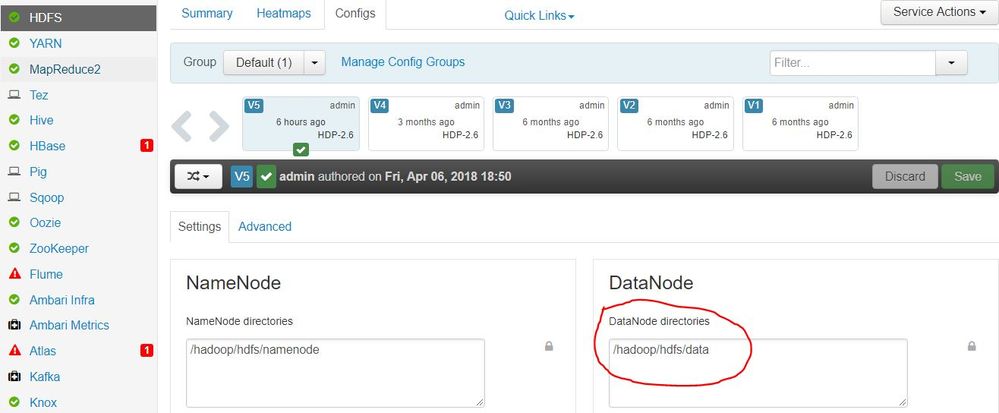

This typically indicates a missing filesystem.

[Alert][datanode_unmounted_data_dir] Failed with result CRITICAL: ['The following data dir(s) were not found: /hadoop/hdfs/data\n']

Can you check that the above mount point exists? See the attached screenshot FS.jpg.

Note: The screenshot shows the mount points for the DataNode and NameNode.

If the above file system is not mounted, update it, save, and retry.

Please report back.
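
A minimal sketch of that check from the shell, assuming a POSIX `df` and `awk`: the function below reports whether a directory exists and which mount point backs it. The name `check_data_dir` is hypothetical; only the path `/hadoop/hdfs/data` comes from the alert.

```shell
#!/bin/sh
# check_data_dir DIR: report whether DIR exists and which filesystem backs it.
check_data_dir() {
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "MISSING: $dir"
        return 1
    fi
    # df -P prints a header plus one line; the last field is the mount point.
    mount_point=$(df -P "$dir" | awk 'NR==2 {print $NF}')
    echo "OK: $dir (on filesystem mounted at $mount_point)"
}

# Default to the directory named in the alert; pass another path as $1 if needed.
check_data_dir "${1:-/hadoop/hdfs/data}" || true
```

If the reported mount point is `/` when a dedicated data disk was expected, the data file system is probably not mounted.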

Created 04-07-2018 07:03 AM

Hello Geoffery,

Thank you for getting back to me. Yes, the folder exists and the permissions look correct.

drwxr-xr-x. 2 hdfs hadoop 6 Apr 6 20:43 /hadoop/hdfs/data/

This is a single-node cluster, so the DataNode and NameNode are not on separate hosts.

Created 04-06-2018 11:31 PM

Can you please try the following and share the logs:

1. Try starting the HDFS components manually to see whether they start. If they do not, please share the "/var/log/hadoop/hdfs/hadoop-hdfs-datanode-xxxx.log" and "/var/log/hadoop/hdfs/hadoop-hdfs-namenode-xxxx.log" logs.

# su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-namenode/../hadoop/sbin/hadoop-daemon.sh start namenode"
# su -l hdfs -c "/usr/hdp/current/hadoop-hdfs-datanode/../hadoop/sbin/hadoop-daemon.sh start datanode"

2. Please share the output of the following command so we can check whether the permissions on the directory are set up as shown:

# ls -ld /hadoop/hdfs/data
drwxr-x---. 3 hdfs hadoop 38 Apr 6 09:42 /hadoop/hdfs/data

3. Are you running your Ambari Agents/Server under a non-root user account? If so, follow the doc below to make sure that the permissions and sudoer configs are set correctly so that the ambari user can read the required directories.

https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.1.5/bk_ambari-security/content/configuring_amba...
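
Before sharing full logs, it can help to pull out just the failure lines. The sketch below is one way to do that. The function name `scan_log` and the search patterns are illustrative guesses at what a failed start might log, not an official list.

```shell
#!/bin/sh
# scan_log FILE: print up to the last 20 lines that mention errors or memory failures.
scan_log() {
    grep -E 'ERROR|FATAL|OutOfMemoryError|Cannot allocate memory' "$1" | tail -n 20
}

# Scan whatever HDFS logs exist under the directory mentioned above.
for f in /var/log/hadoop/hdfs/*.log /var/log/hadoop/hdfs/*.out; do
    [ -f "$f" ] || continue   # skip unmatched globs
    echo "== $f"
    scan_log "$f"
done
```

The `.out` files are included on the assumption that the daemon scripts write JVM startup output there; adjust the globs to match what is actually on disk.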

Created 04-08-2018 03:59 PM

Good to know. It's always important to scrutinise the logs.