Single block created for file, now showing missing (possibly either NOT replicated or lost even after replication)

Labels: Apache Hadoop

Created 06-17-2016 12:42 PM

The detailed background is here.

Stack: HDP 2.4 using Ambari 2.2.2.0. This is a fresh cluster install done one day ago.

The cluster ran perfectly without a single alert or issue after installation and the smoke tests passed.

Then all the machines were rebooted while the cluster was running. Now the NN (l4327pp.sss.com/138.106.33.139) is stuck in safe mode.

The tail of the fsck output:

Total size: 87306607111 B (Total open files size: 210029 B)
Total dirs: 133
Total files: 748
Total symlinks: 0 (Files currently being written: 10)
Total blocks (validated): 1384 (avg. block size 63082808 B) (Total open file blocks (not validated): 10)
********************************
UNDER MIN REPL'D BLOCKS: 236 (17.052023 %)
dfs.namenode.replication.min: 1
CORRUPT FILES: 185
MISSING BLOCKS: 236
MISSING SIZE: 9649979551 B
CORRUPT BLOCKS: 236
********************************
Minimally replicated blocks: 1148 (82.947975 %)
Over-replicated blocks: 0 (0.0 %)
Under-replicated blocks: 0 (0.0 %)
Mis-replicated blocks: 0 (0.0 %)
Default replication factor: 3
Average block replication: 2.4884393
Corrupt blocks: 236
Missing replicas: 0 (0.0 %)
Number of data-nodes: 4
Number of racks: 1
FSCK ended at Thu Jun 16 15:23:05 CEST 2016 in 16 milliseconds
The filesystem under path '/' is CORRUPT
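As a sanity check on the summary above, here is a minimal Python sketch that reads three counters out of the report text and confirms they are consistent with each other. The helper name `read_counter` is made up for this illustration, and only the three lines needed for the check are copied from the report.

```python
import re

# Three counters copied from the fsck summary in this post.
FSCK_SUMMARY = """
Total blocks (validated): 1384 (avg. block size 63082808 B)
MISSING BLOCKS: 236
Minimally replicated blocks: 1148 (82.947975 %)
"""


def read_counter(report: str, label: str) -> int:
    """Return the first integer that follows 'label:' in the report text."""
    match = re.search(re.escape(label) + r":\s*(\d+)", report)
    if match is None:
        raise ValueError(f"counter not found: {label}")
    return int(match.group(1))


total = read_counter(FSCK_SUMMARY, "Total blocks (validated)")
missing = read_counter(FSCK_SUMMARY, "MISSING BLOCKS")
minimal = read_counter(FSCK_SUMMARY, "Minimally replicated blocks")

# Share of blocks that no DataNode has reported.
missing_pct = 100.0 * missing / total
print(f"missing {missing} of {total} blocks = {missing_pct:.3f} %")
print("missing + minimally replicated == total:", missing + minimal == total)
```

The two groups add up to the full block count, so every block in this report is either minimally replicated or missing entirely; none is merely under-replicated.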

I picked just one of the files reported above and ran fsck on it. It seems the block was either:

- Present only on the NN (which I can't explain) and is now lost. The fsck output doesn't show that the block was ever replicated to the DNs.

- Replicated, but lost after the reboot. In that case I am confused about the 'healthy' files mentioned later in this post.

[hdfs@l4327pp opt]$ hdfs fsck /dumphere/Assign_Slave_and_Clients_1.PNG -locations -blocks -files
Connecting to namenode via http://l4327pp.sss.com:50070/fsck?ugi=hdfs&locations=1&blocks=1&files=1&path=%2Fdumphere%2FAssign_Sl...
FSCK started by hdfs (auth:SIMPLE) from /138.106.33.139 for path /dumphere/Assign_Slave_and_Clients_1.PNG at Thu Jun 16 14:52:59 CEST 2016
/dumphere/Assign_Slave_and_Clients_1.PNG 53763 bytes, 1 block(s):
/dumphere/Assign_Slave_and_Clients_1.PNG: CORRUPT blockpool BP-1506929499-138.106.33.139-1465983488767 block blk_1073742904
MISSING 1 blocks of total size 53763 B
0. BP-1506929499-138.106.33.139-1465983488767:blk_1073742904_2080 len=53763 MISSING!
Status: CORRUPT
Total size: 53763 B
Total dirs: 0
Total files: 1
Total symlinks: 0
Total blocks (validated): 1 (avg. block size 53763 B)
********************************
UNDER MIN REPL'D BLOCKS: 1 (100.0 %)
dfs.namenode.replication.min: 1
CORRUPT FILES: 1
MISSING BLOCKS: 1
MISSING SIZE: 53763 B
CORRUPT BLOCKS: 1
********************************
Minimally replicated blocks: 0 (0.0 %)
Over-replicated blocks: 0 (0.0 %)
Under-replicated blocks: 0 (0.0 %)
Mis-replicated blocks: 0 (0.0 %)
Default replication factor: 3
Average block replication: 0.0
Corrupt blocks: 1
Missing replicas: 0
Number of data-nodes: 4
Number of racks: 1
FSCK ended at Thu Jun 16 14:52:59 CEST 2016 in 0 milliseconds
The filesystem under path '/dumphere/Assign_Slave_and_Clients_1.PNG' is CORRUPT

Surprisingly, I found several files that are 'healthy' (I could download and view them from the NN UI). These are small too, but they are replicated on 3 DNs:

[hdfs@l4327pp ~]$ hdfs fsck /dumphere/1_GetStarted_Name_Cluster.PNG -locations -blocks -files
Connecting to namenode via http://l4327pp.sss.com:50070/fsck?ugi=hdfs&locations=1&blocks=1&files=1&path=%2Fdumphere%2F1_GetStar...
FSCK started by hdfs (auth:SIMPLE) from /138.106.33.139 for path /dumphere/1_GetStarted_Name_Cluster.PNG at Thu Jun 16 16:51:54 CEST 2016
/dumphere/1_GetStarted_Name_Cluster.PNG 101346 bytes, 1 block(s): OK
0. BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 len=101346 repl=3 [DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK], DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK], DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]]
Status: HEALTHY
Total size: 101346 B
Total dirs: 0
Total files: 1
Total symlinks: 0
Total blocks (validated): 1 (avg. block size 101346 B)
Minimally replicated blocks: 1 (100.0 %)
Over-replicated blocks: 0 (0.0 %)
Under-replicated blocks: 0 (0.0 %)
Mis-replicated blocks: 0 (0.0 %)
Default replication factor: 3
Average block replication: 3.0
Corrupt blocks: 0
Missing replicas: 0 (0.0 %)
Number of data-nodes: 4
Number of racks: 1
FSCK ended at Thu Jun 16 16:51:54 CEST 2016 in 1 milliseconds
The filesystem under path '/dumphere/1_GetStarted_Name_Cluster.PNG' is HEALTHY

I am confused by the output below, which reports 0 corrupt files:

[hdfs@l4327pp ~]$ hdfs fsck -list-corruptfileblocks /
Connecting to namenode via http://l4327pp.sss.com:50070/fsck?ugi=hdfs&listcorruptfileblocks=1&path=%2F
The filesystem under path '/' has 0 CORRUPT files

The worrisome findings:

- Since this file (and many more like it) is small, it was probably stored in a single block.

- I suspect that this one block was stored on l4327pp.sss.com/138.106.33.139. I ran a 'find' command on the DNs, but the block blk_1073742904 was not found.
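For anyone who wants to repeat the block search on a DataNode, here is a rough Python equivalent of that 'find'. It assumes block files are stored under names like blk_<id> with a companion blk_<id>_<genstamp>.meta file. The directory /hadoop/hdfs/data is only a placeholder, so substitute whatever dfs.datanode.data.dir points to on your nodes. The function name `find_block_files` is made up for this sketch.

```python
import os
from typing import List


def find_block_files(data_dir: str, block_id: str) -> List[str]:
    """Walk data_dir and return files named block_id or block_id_<suffix>."""
    hits = []
    for root, _dirs, files in os.walk(data_dir):
        for name in files:
            # Exact match is the block file; the "_" form covers the .meta file.
            if name == block_id or name.startswith(block_id + "_"):
                hits.append(os.path.join(root, name))
    return sorted(hits)


if __name__ == "__main__":
    # Placeholder path (assumption): replace with the real DataNode data directory.
    for path in find_block_files("/hadoop/hdfs/data", "blk_1073742904"):
        print(path)
```

If this prints nothing on every DataNode, no replica of the block is present on disk under that directory.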

The NN is stuck in safe mode, and I have no idea why it tries to connect to some weird ports on the DNs:

The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:02,359 INFO namenode.FSEditLog (FSEditLog.java:printStatistics(699)) - Number of transactions: 1 Total time for transactions(ms): 0 Number of transactions batched in Syncs: 0 Number of syncs: 1841 SyncTimes(ms): 329
2016-06-16 15:30:02,359 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:30:02,359 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 995 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34450 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.6b0216e6-a166-4ad8-b345-c84f7186e3a8. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:12,361 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:30:12,361 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 902 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34451 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.3cd1c000-7dbd-4a02-a4ea-981973d0194f. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:22,363 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:30:22,364 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 902 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34452 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.1c3b2104-4cac-470c-9518-554550842603. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:32,365 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:30:32,365 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 902 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34453 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.a46aac41-6f61-4444-9b81-fdc0db0cc2e2. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:34,931 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 1395 on 8020, call org.apache.hadoop.hdfs.server.protocol.NamenodeProtocol.rollEditLog from 138.106.33.146:33387 Call#1441 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Log not rolled. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:42,368 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:30:42,368 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 955 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34454 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.e50303d5-46e0-4568-a7ce-994310ac9bfb. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:30:52,370 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:30:52,370 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 955 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34455 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.62244a5e-184a-4f48-9627-5904177ac5f4. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
2016-06-16 15:31:02,372 INFO namenode.FSEditLog (FSEditLog.java:printStatistics(699)) - Number of transactions: 1 Total time for transactions(ms): 0 Number of transactions batched in Syncs: 0 Number of syncs: 1847 SyncTimes(ms): 331
2016-06-16 15:31:02,372 INFO namenode.EditLogFileOutputStream (EditLogFileOutputStream.java:flushAndSync(200)) - Nothing to flush
2016-06-16 15:31:02,373 INFO ipc.Server (Server.java:logException(2287)) - IPC Server handler 955 on 8020, call org.apache.hadoop.hdfs.protocol.ClientProtocol.create from 138.106.33.132:54203 Call#34456 Retry#0: org.apache.hadoop.hdfs.server.namenode.SafeModeException: Cannot create file/spark-history/.91a78c90-1d97-4988-8e07-ffb0f7b94ddf. Name node is in safe mode. The reported blocks 1148 needs additional 237 blocks to reach the threshold 1.0000 of total blocks 1384. The number of live datanodes 4 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.
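The arithmetic behind the safe-mode message can be sketched in a few lines of Python. This is my own simplified reading of the numbers in the log, not the NameNode's actual code: the function name `blocks_needed` is made up, and rounding the requirement up with `ceil` is an assumption. The 1.0000 threshold in the message is, as far as I know, the value of dfs.namenode.safemode.threshold-pct.

```python
import math


def blocks_needed(reported: int, total: int, threshold: float) -> int:
    """Blocks still to be reported before threshold * total is reached."""
    required = math.ceil(threshold * total)  # rounding up is an assumption
    return max(required - reported, 0)


# Values taken from the safe-mode message in the NN log.
shortfall = blocks_needed(reported=1148, total=1384, threshold=1.0)
print("blocks still unreported:", shortfall)
```

The simple difference comes out as 236, which equals the MISSING BLOCKS count from fsck. The log itself says 237, one more than that, and this sketch does not try to reproduce the extra block. Either way, with a threshold of 1.0 the NN cannot leave safe mode on its own while any block remains unreported.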

The DNs were able to communicate with the NN, but later they logged some exceptions. I think this is a separate issue:

2016-06-16 12:55:53,249 INFO web.DatanodeHttpServer (SimpleHttpProxyHandler.java:exceptionCaught(147)) - Proxy for / failed. cause:
java.io.IOException: Connection reset by peer
at sun.nio.ch.FileDispatcherImpl.read0(Native Method)
at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)
at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)
at sun.nio.ch.IOUtil.read(IOUtil.java:192)
at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380)
at io.netty.buffer.UnpooledUnsafeDirectByteBuf.setBytes(UnpooledUnsafeDirectByteBuf.java:447)
at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:881)
at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:242)
at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:119)
at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:511)
at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:468)
at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:382)
at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:354)
at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)
at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:137)
at java.lang.Thread.run(Thread.java:745)
2016-06-16 14:17:53,241 INFO web.DatanodeHttpServer (SimpleHttpProxyHandler.java:exceptionCaught(147)) - Proxy for / failed. cause:
java.io.IOException: Connection reset by peer
at sun.nio.ch.FileDispatcherImpl.read0(Native Method)
at sun.nio.ch.SocketDispatcher.read(SocketDispatcher.java:39)
at sun.nio.ch.IOUtil.readIntoNativeBuffer(IOUtil.java:223)
at sun.nio.ch.IOUtil.read(IOUtil.java:192)
at sun.nio.ch.SocketChannelImpl.read(SocketChannelImpl.java:380)
at io.netty.buffer.UnpooledUnsafeDirectByteBuf.setBytes(UnpooledUnsafeDirectByteBuf.java:447)
at io.netty.buffer.AbstractByteBuf.writeBytes(AbstractByteBuf.java:881)
at io.netty.channel.socket.nio.NioSocketChannel.doReadBytes(NioSocketChannel.java:242)
at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:119)
at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:511)
at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:468)
at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:382)
at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:354)
at io.netty.util.concurrent.SingleThreadEventExecutor$2.run(SingleThreadEventExecutor.java:111)
at io.netty.util.concurrent.DefaultThreadFactory$DefaultRunnableDecorator.run(DefaultThreadFactory.java:137)
at java.lang.Thread.run(Thread.java:745)
2016-06-16 15:18:01,562 INFO datanode.DirectoryScanner (DirectoryScanner.java:scan(505)) - BlockPool BP-1506929499-138.106.33.139-1465983488767 Total blocks: 790, missing metadata files:0, missing block files:0, missing blocks in memory:0, mismatched blocks:0

**********EDIT-1**********

I tried cleaning up the corrupt files, but fsck failed (output same as above, plus the following):

FSCK ended at Thu Jun 16 17:16:16 CEST 2016 in 134 milliseconds
FSCK ended at Thu Jun 16 17:16:16 CEST 2016 in 134 milliseconds
fsck encountered internal errors!
Fsck on path '/' FAILED

The NN log says that the move failed (possibly because of the missing blocks themselves):

ERROR namenode.NameNode (NamenodeFsck.java:copyBlocksToLostFound(795)) - copyBlocksToLostFound: error processing /user/oozie/share/lib/lib_20160615114058/sqoop/hadoop-aws-2.7.1.2.4.2.0-258.jar
java.io.IOException: failed to initialize lost+found
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.copyBlocksToLostFound(NamenodeFsck.java:743)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.collectBlocksSummary(NamenodeFsck.java:689)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:441)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.checkDir(NamenodeFsck.java:468)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.check(NamenodeFsck.java:426)
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.fsck(NamenodeFsck.java:356)
at org.apache.hadoop.hdfs.server.namenode.FsckServlet$1.run(FsckServlet.java:67)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1709)
at org.apache.hadoop.hdfs.server.namenode.FsckServlet.doGet(FsckServlet.java:58)
at javax.servlet.http.HttpServlet.service(HttpServlet.java:707)
at javax.servlet.http.HttpServlet.service(HttpServlet.java:820)
at org.mortbay.jetty.servlet.ServletHolder.handle(ServletHolder.java:511)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1221)
at org.apache.hadoop.http.HttpServer2$QuotingInputFilter.doFilter(HttpServer2.java:1243)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
at org.mortbay.jetty.servlet.ServletHandler.handle(ServletHandler.java:399)
at org.mortbay.jetty.security.SecurityHandler.handle(SecurityHandler.java:216)
at org.mortbay.jetty.servlet.SessionHandler.handle(SessionHandler.java:182)
at org.mortbay.jetty.handler.ContextHandler.handle(ContextHandler.java:767)
at org.mortbay.jetty.webapp.WebAppContext.handle(WebAppContext.java:450)
at org.mortbay.jetty.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:230)
at org.mortbay.jetty.handler.HandlerWrapper.handle(HandlerWrapper.java:152)
at org.mortbay.jetty.Server.handle(Server.java:326)
at org.mortbay.jetty.HttpConnection.handleRequest(HttpConnection.java:542)
at org.mortbay.jetty.HttpConnection$RequestHandler.headerComplete(HttpConnection.java:928)
at org.mortbay.jetty.HttpParser.parseNext(HttpParser.java:549)
at org.mortbay.jetty.HttpParser.parseAvailable(HttpParser.java:212)
at org.mortbay.jetty.HttpConnection.handle(HttpConnection.java:404)
at org.mortbay.io.nio.SelectChannelEndPoint.run(SelectChannelEndPoint.java:410)
at org.mortbay.thread.QueuedThreadPool$PoolThread.run(QueuedThreadPool.java:582)
2016-06-16 17:16:16,801 WARN namenode.NameNode (NamenodeFsck.java:fsck(391)) - Fsck on path '/' FAILED
java.io.IOException: fsck encountered internal errors!
at org.apache.hadoop.hdfs.server.namenode.NamenodeFsck.fsck(NamenodeFsck.java:373)
at org.apache.hadoop.hdfs.server.namenode.FsckServlet$1.run(FsckServlet.java:67)
at java.security.AccessController.doPrivileged(Native Method)
at javax.security.auth.Subject.doAs(Subject.java:422)
at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1709)
at org.apache.hadoop.hdfs.server.namenode.FsckServlet.doGet(FsckServlet.java:58)
at javax.servlet.http.HttpServlet.service(HttpServlet.java:707)
at javax.servlet.http.HttpServlet.service(HttpServlet.java:820)
at org.mortbay.jetty.servlet.ServletHolder.handle(ServletHolder.java:511)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1221)
at org.apache.hadoop.http.HttpServer2$QuotingInputFilter.doFilter(HttpServer2.java:1243)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
at org.apache.hadoop.http.NoCacheFilter.doFilter(NoCacheFilter.java:45)
at org.mortbay.jetty.servlet.ServletHandler$CachedChain.doFilter(ServletHandler.java:1212)
at org.mortbay.jetty.servlet.ServletHandler.handle(ServletHandler.java:399)
at org.mortbay.jetty.security.SecurityHandler.handle(SecurityHandler.java:216)
at org.mortbay.jetty.servlet.SessionHandler.handle(SessionHandler.java:182)
at org.mortbay.jetty.handler.ContextHandler.handle(ContextHandler.java:767)
at org.mortbay.jetty.webapp.WebAppContext.handle(WebAppContext.java:450)
at org.mortbay.jetty.handler.ContextHandlerCollection.handle(ContextHandlerCollection.java:230)
at org.mortbay.jetty.handler.HandlerWrapper.handle(HandlerWrapper.java:152)
at org.mortbay.jetty.Server.handle(Server.java:326)
at org.mortbay.jetty.HttpConnection.handleRequest(HttpConnection.java:542)
at org.mortbay.jetty.HttpConnection$RequestHandler.headerComplete(HttpConnection.java:928)
at org.mortbay.jetty.HttpParser.parseNext(HttpParser.java:549)
at org.mortbay.jetty.HttpParser.parseAvailable(HttpParser.java:212)
at org.mortbay.jetty.HttpConnection.handle(HttpConnection.java:404)
at org.mortbay.io.nio.SelectChannelEndPoint.run(SelectChannelEndPoint.java:410)
at org.mortbay.thread.QueuedThreadPool$PoolThread.run(QueuedThreadPool.java:582)

**********EDIT-2**********

Until yesterday, I was able to download the 'healthy' file mentioned earlier in this post using the NN UI. Today the UI returns 'Page can't be displayed', and when I tried -copyToLocal I got the following errors:

[hdfs@l4327pp root]$ hdfs dfs -copyToLocal /dumphere/1_GetStarted_Name_Cluster.PNG /usr/share/ojoqcu/fromhdfs/

16/06/17 13:43:09 INFO hdfs.DFSClient: Access token was invalid when connecting to /138.106.33.145:50010 : org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52315, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

16/06/17 13:43:09 WARN hdfs.DFSClient: Failed to connect to /138.106.33.145:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52316, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52316, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:09 WARN hdfs.DFSClient: Failed to connect to /138.106.33.144:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53330, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53330, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:09 WARN hdfs.DFSClient: Failed to connect to /138.106.33.148:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32920, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32920, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:09 INFO hdfs.DFSClient: Could not obtain BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 from any node: java.io.IOException: No live nodes contain block BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 after checking nodes = [DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK], DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK], DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]], ignoredNodes = null No live nodes contain current block Block locations: DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK] Dead nodes: DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]. Will get new block locations from namenode and retry...

16/06/17 13:43:09 WARN hdfs.DFSClient: DFS chooseDataNode: got # 1 IOException, will wait for 1592.930873348516 msec.

16/06/17 13:43:11 WARN hdfs.DFSClient: Failed to connect to /138.106.33.148:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32922, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32922, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:11 WARN hdfs.DFSClient: Failed to connect to /138.106.33.145:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52321, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52321, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:11 WARN hdfs.DFSClient: Failed to connect to /138.106.33.144:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53335, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53335, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:11 INFO hdfs.DFSClient: Could not obtain BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 from any node: java.io.IOException: No live nodes contain block BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 after checking nodes = [DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK], DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK], DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK]], ignoredNodes = null No live nodes contain current block Block locations: DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] Dead nodes: DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]. Will get new block locations from namenode and retry...

16/06/17 13:43:11 WARN hdfs.DFSClient: DFS chooseDataNode: got # 2 IOException, will wait for 7474.389631544021 msec.

16/06/17 13:43:18 WARN hdfs.DFSClient: Failed to connect to /138.106.33.145:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52324, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52324, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:18 WARN hdfs.DFSClient: Failed to connect to /138.106.33.144:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53338, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53338, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:18 WARN hdfs.DFSClient: Failed to connect to /138.106.33.148:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32928, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32928, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:18 INFO hdfs.DFSClient: Could not obtain BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 from any node: java.io.IOException: No live nodes contain block BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 after checking nodes = [DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK], DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK], DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]], ignoredNodes = null No live nodes contain current block Block locations: DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK] Dead nodes: DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]. Will get new block locations from namenode and retry...

16/06/17 13:43:18 WARN hdfs.DFSClient: DFS chooseDataNode: got # 3 IOException, will wait for 14579.784044247666 msec.

16/06/17 13:43:33 WARN hdfs.DFSClient: Failed to connect to /138.106.33.148:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32931, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:32931, remote=/138.106.33.148:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:33 WARN hdfs.DFSClient: Failed to connect to /138.106.33.144:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53343, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:53343, remote=/138.106.33.144:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:33 WARN hdfs.DFSClient: Failed to connect to /138.106.33.145:50010 for block, add to deadNodes and continue. org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52331, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

org.apache.hadoop.hdfs.security.token.block.InvalidBlockTokenException: Got access token error, status message , for OP_READ_BLOCK, self=/138.106.33.139:52331, remote=/138.106.33.145:50010, for file /dumphere/1_GetStarted_Name_Cluster.PNG, for pool BP-1506929499-138.106.33.139-1465983488767 block 1073742883_2059

at org.apache.hadoop.hdfs.protocol.datatransfer.DataTransferProtoUtil.checkBlockOpStatus(DataTransferProtoUtil.java:134)

at org.apache.hadoop.hdfs.RemoteBlockReader2.checkSuccess(RemoteBlockReader2.java:456)

at org.apache.hadoop.hdfs.RemoteBlockReader2.newBlockReader(RemoteBlockReader2.java:424)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReader(BlockReaderFactory.java:818)

at org.apache.hadoop.hdfs.BlockReaderFactory.getRemoteBlockReaderFromTcp(BlockReaderFactory.java:697)

at org.apache.hadoop.hdfs.BlockReaderFactory.build(BlockReaderFactory.java:355)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:656)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

16/06/17 13:43:33 WARN hdfs.DFSClient: Could not obtain block: BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 file=/dumphere/1_GetStarted_Name_Cluster.PNG No live nodes contain current block Block locations: DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK] DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] Dead nodes: DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]. Throwing a BlockMissingException

16/06/17 13:43:33 WARN hdfs.DFSClient: Could not obtain block: BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 file=/dumphere/1_GetStarted_Name_Cluster.PNG No live nodes contain current block Block locations: DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK] DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] Dead nodes: DatanodeInfoWithStorage[138.106.33.144:50010,DS-715b0d95-c7a1-442a-a366-56712e8c792b,DISK] DatanodeInfoWithStorage[138.106.33.145:50010,DS-1f4e6b48-b2df-49c0-a53d-d49153aec4d0,DISK] DatanodeInfoWithStorage[138.106.33.148:50010,DS-faf159fb-7961-4ca2-8fe2-780fa008438c,DISK]. Throwing a BlockMissingException

16/06/17 13:43:33 WARN hdfs.DFSClient: DFS Read

org.apache.hadoop.hdfs.BlockMissingException: Could not obtain block: BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 file=/dumphere/1_GetStarted_Name_Cluster.PNG

at org.apache.hadoop.hdfs.DFSInputStream.chooseDataNode(DFSInputStream.java:983)

at org.apache.hadoop.hdfs.DFSInputStream.blockSeekTo(DFSInputStream.java:642)

at org.apache.hadoop.hdfs.DFSInputStream.readWithStrategy(DFSInputStream.java:882)

at org.apache.hadoop.hdfs.DFSInputStream.read(DFSInputStream.java:934)

at java.io.DataInputStream.read(DataInputStream.java:100)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:85)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:59)

at org.apache.hadoop.io.IOUtils.copyBytes(IOUtils.java:119)

at org.apache.hadoop.fs.shell.CommandWithDestination$TargetFileSystem.writeStreamToFile(CommandWithDestination.java:466)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyStreamToTarget(CommandWithDestination.java:391)

at org.apache.hadoop.fs.shell.CommandWithDestination.copyFileToTarget(CommandWithDestination.java:328)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:263)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPath(CommandWithDestination.java:248)

at org.apache.hadoop.fs.shell.Command.processPaths(Command.java:317)

at org.apache.hadoop.fs.shell.Command.processPathArgument(Command.java:289)

at org.apache.hadoop.fs.shell.CommandWithDestination.processPathArgument(CommandWithDestination.java:243)

at org.apache.hadoop.fs.shell.Command.processArgument(Command.java:271)

at org.apache.hadoop.fs.shell.Command.processArguments(Command.java:255)

at org.apache.hadoop.fs.shell.CommandWithDestination.processArguments(CommandWithDestination.java:220)

at org.apache.hadoop.fs.shell.Command.processRawArguments(Command.java:201)

at org.apache.hadoop.fs.shell.Command.run(Command.java:165)

at org.apache.hadoop.fs.FsShell.run(FsShell.java:287)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.hadoop.fs.FsShell.main(FsShell.java:340)

copyToLocal: Could not obtain block: BP-1506929499-138.106.33.139-1465983488767:blk_1073742883_2059 file=/dumphere/1_GetStarted_Name_Cluster.PNG

The problem is that the TCP connection from 138.106.33.139 to the datanodes (138.106.33.144, 138.106.33.145, 138.106.33.147, 138.106.33.148) seems to work fine, and the blocks are also present:

[root@l4327pp ~]# nc -v 138.106.33.145 50010

Ncat: Version 6.40 ( http://nmap.org/ncat )

Ncat: Connected to 138.106.33.145:50010.

^C

[root@l4327pp ~]#

[root@l4327pp ~]# nc -v 138.106.33.144 50010

Ncat: Version 6.40 ( http://nmap.org/ncat )

Ncat: Connected to 138.106.33.144:50010.

^C

[root@l4327pp ~]#

[root@l4327pp ~]# nc -v 138.106.33.148 50010

Ncat: Version 6.40 ( http://nmap.org/ncat )

Ncat: Connected to 138.106.33.148:50010.

^C

[root@l4327pp ~]#

But when I restarted the NN (which is again stuck in 'safe mode'), I was able to download the file both via the UI and via -copyToLocal.

Created 06-18-2016 12:26 AM

First of all, next time stop all services from Ambari before rebooting the machines. At this point you can try to force HDFS out of safe mode by setting dfs.namenode.safemode.threshold-pct either to 0.0f or to 0.8f (since 1148/1384 = 82.9%) and restarting HDFS. Once HDFS has started and is out of safe mode, locate the corrupted files with fsck and delete them, then restore the important ones using the HDP distribution files in /usr/hdp/current. There is no need (and in some cases no way) to restore files related to ambari-qa, files in /tmp, and some others.
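The arithmetic behind the 0.8f suggestion can be checked with a short sketch. The block counts are taken from the fsck summary in the question, and 0.999 is the stock default of dfs.namenode.safemode.threshold-pct. The function names are hypothetical, and the comparison is a simplification: the real NameNode check also takes other settings into account, such as the minimum number of live DataNodes and the safe mode extension period.

```python
def safe_block_ratio(minimally_replicated: int, total_blocks: int) -> float:
    """Fraction of blocks that have reached minimal replication."""
    if total_blocks == 0:
        return 1.0  # an empty filesystem has nothing to wait for
    return minimally_replicated / total_blocks


def can_leave_safemode(minimally_replicated: int, total_blocks: int,
                       threshold_pct: float) -> bool:
    """Simplified check: is the safe-block ratio at or above the threshold?

    A threshold of 0 or less means the NameNode does not wait for any
    particular percentage of blocks.
    """
    if threshold_pct <= 0:
        return True
    return safe_block_ratio(minimally_replicated, total_blocks) >= threshold_pct


# Values from the fsck output quoted in the question.
MIN_REPLICATED = 1148
TOTAL_BLOCKS = 1384

ratio = safe_block_ratio(MIN_REPLICATED, TOTAL_BLOCKS)
print(f"safe block ratio: {ratio:.3f}")

for threshold in (0.999, 0.8, 0.0):
    ok = can_leave_safemode(MIN_REPLICATED, TOTAL_BLOCKS, threshold)
    print(f"threshold {threshold}: leaves safe mode = {ok}")
```

With these numbers the default threshold is never reached, which matches the NameNode staying in safe mode, while 0.8 and 0.0 both let it exit.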

Created 06-17-2016 09:34 PM

Hi, as a start you can try the following:

- Check the DataNodes tab of the NameNode web UI to verify DataNodes are heartbeating. Also check that there are no failed disks.

- Check the DataNode logs to see whether block reports were successfully sent after restart (you should see a log statement like "Successfully sent block report").

- The access token error indicates a permissions problem. If you have enabled Kerberos, check that clocks are in sync on all the nodes.
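The log check in the second bullet can be sketched as a small filter. This is only an illustration: the function name and the sample log lines are made up, and only the phrase "Successfully sent block report" comes from the reply above.

```python
from typing import Iterable, List

# Phrase quoted in the reply above; the rest of a real log line may differ.
BLOCK_REPORT_MARKER = "Successfully sent block report"


def find_block_reports(log_lines: Iterable[str]) -> List[str]:
    """Return the log lines that mention a successfully sent block report."""
    return [line.rstrip("\n") for line in log_lines
            if BLOCK_REPORT_MARKER in line]


# Hypothetical sample lines, not copied from a real DataNode log.
sample_log = [
    "2016-06-17 13:40:01 INFO datanode.DataNode: starting up (made-up line)",
    "2016-06-17 13:40:05 INFO datanode.DataNode: Successfully sent block report (made-up line)",
]

matches = find_block_reports(sample_log)
print(f"{len(matches)} block report line(s) found")
for line in matches:
    print(line)
```

To run it against a real log, pass an open file object instead of `sample_log`; the log location depends on the installation. If no line matches after a restart, that DataNode probably has not reported its blocks to the NameNode yet.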

Created on 06-20-2016 07:12 AM - edited 08-19-2019 01:08 AM

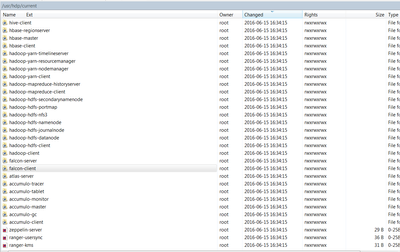

Can you elaborate on 'and restore important ones using HDP distribution files in /usr/hdp/current'? I found the following files there. What should be restored, why, and how? Is it safe to simply format the NN and start afresh (as there is no business data, only test files)?

Yeah, I will try these steps, but what worries me is that, theoretically, all the machines can reboot at any time while the services are running. If HDFS then runs into issues, it would be a disaster 😞

Created 06-20-2016 07:57 AM

No, I mean that after HDFS is back, you detect the corrupted files in HDFS and restore the important ones, like those in /hdp and /user/oozie/share/lib. You can also reformat and start afresh, but wait to see which files, and how many of them, are damaged. Regarding your worries about machines rebooting: theoretically yes, a thunderbolt can strike from a blue sky, but usually it doesn't 🙂 and when machines reboot they do so one at a time. That's why you set up NN HA, back up NN metadata, avoid cheap servers, use a UPS, etc.

Created 06-20-2016 09:27 AM

Can you validate my assumptions:

- Option 1: Reformatting will give me a clean HDFS, where I need not do a -copyFromLocal for files like /user/oozie/share/lib. I can then use the cluster as if it were a fresh installation.

- Option 2: Exit safe mode, find and delete the corrupt files and, if required (how do I determine this?), do a -copyFromLocal for files like /user/oozie/share/lib.

Created 06-20-2016 09:55 AM

Option 1, reformat: you will need not only to "copyFromLocal" but also to recreate the file system. See for example this for details. Option 2: exit safe mode and find out where you are. I'd recommend this one. You can also find out what caused the trouble; maybe all the corrupted blocks are on a bad disk or something like that. You can share the list of files you are unsure whether to restore.
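For Option 2, a rough way to sort the corrupt files reported by fsck into "restore", "skip" and "review" could look like the sketch below. The rules only encode what was said in this thread (restore /hdp and /user/oozie/share/lib, skip /tmp and anything related to ambari-qa). The function names and all sample paths except /dumphere/1_GetStarted_Name_Cluster.PNG are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List

# Locations named in the thread as worth restoring from the HDP distribution.
RESTORE_PREFIXES = ("/hdp/", "/user/oozie/share/lib/")
# Locations named in the thread as not worth restoring.
SKIP_PREFIXES = ("/tmp/",)


def classify(path: str) -> str:
    """Suggest an action for one corrupt HDFS path."""
    if path.startswith(SKIP_PREFIXES) or "ambari-qa" in path:
        return "skip"
    if path.startswith(RESTORE_PREFIXES):
        return "restore"
    return "review"  # anything else needs a human decision


def triage(paths: Iterable[str]) -> Dict[str, List[str]]:
    """Group corrupt paths by suggested action."""
    buckets: Dict[str, List[str]] = defaultdict(list)
    for path in paths:
        buckets[classify(path)].append(path)
    return dict(buckets)


# Hypothetical list of corrupt files; only the first path is from the thread.
sample_paths = [
    "/dumphere/1_GetStarted_Name_Cluster.PNG",
    "/tmp/example.tmp",
    "/user/ambari-qa/example.txt",
    "/user/oozie/share/lib/example.jar",
    "/hdp/apps/example.tar.gz",
]

result = triage(sample_paths)
for action in ("restore", "skip", "review"):
    print(action, result.get(action, []))
```

The "review" bucket is the list worth sharing here when it is unclear whether a file should be restored.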