Cloudera Community: Support Questions

Site-Site Load Balancing in the same cluster.

Labels: Apache NiFi

Created on 08-22-2016 12:46 PM - edited 08-19-2019 04:08 AM

Hi all,

This is in reference to the following post: https://community.hortonworks.com/questions/52015/fetch-file-from-the-ncm-of-a-nifi-cluster.html

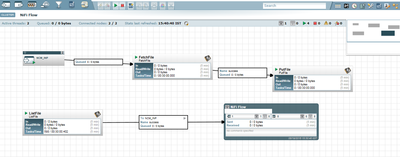

Following what @Simon Elliston Ball explained there, I have simulated the scenario on my own cluster. I have three nodes: Node 1 is configured as the NCM, and the other two are slave nodes (one primary, one secondary) added in a simple master-slave setup. Against a shared directory, I use a ListFile processor and then send the resulting flow files via site-to-site back into the same cluster, through an input port, so that the FetchFile processor and the rest of the flow are load-balanced across the nodes. I have attached a .png of the flow for reference.
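The List/Fetch pattern described above can be modelled in a few lines: one listing pass produces file paths, and site-to-site spreads those paths across the worker nodes, each of which then fetches only its share. This is a toy sketch, not NiFi code. `distribute`, the node names and the paths are all hypothetical, and plain round-robin is a simplification: as far as I know, NiFi's Site-to-Site client takes each node's reported load into account when choosing where to send data.

```python
from itertools import cycle


def distribute(paths: list[str], nodes: list[str]) -> dict[str, list[str]]:
    """Assign each listed path to a node in round-robin order.

    Simplification (assumption): the real Site-to-Site client is load-aware,
    not strictly round-robin.
    """
    assignment: dict[str, list[str]] = {node: [] for node in nodes}
    for path, node in zip(paths, cycle(nodes)):
        assignment[node].append(path)
    return assignment


# Hypothetical listing produced by a single ListFile instance.
listing = [f"/shared/in/file{i}.csv" for i in range(5)]

# The two non-NCM nodes from the question each receive a share of the paths to fetch.
for node, paths in distribute(listing, ["node2", "node3"]).items():
    print(node, paths)
```

Only the small path records travel between nodes here; each node then reads the file contents itself, which is why this works only if every node can reach the directory.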

Q. 1 Can anyone please confirm whether this model should work correctly, i.e. actually distribute the processing load across the nodes?

Q. 2 Would the above model still be preferable if the source directory cannot be shared because of a business restriction and exists only on the primary node? If not, what would be the right site-to-site model?

Created 08-22-2016 01:59 PM

This is the correct setup. You would likely want to schedule ListFile to run on the primary node only; this is an option on the Scheduling tab of the processor configuration. That would take care of the problem when the directory is not a shared location, but even when it is shared, you still probably want "Primary node only", because you don't want two instances of ListFile listing the same files.
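The duplication argument for "Primary node only" can be checked with a small toy model: if every node runs its own listing against the same shared directory, each file shows up once per node. This is a hypothetical sketch, not NiFi code; `list_directory`, `combined_listing` and the paths are made up for illustration, and treating the first node in the list as the primary is an assumption.

```python
def list_directory(files: set[str]) -> list[str]:
    """Toy stand-in for one ListFile instance: return every file it sees."""
    return sorted(files)


def combined_listing(files: set[str], nodes: list[str], primary_only: bool) -> list[str]:
    """Concatenate the listings produced by every node that runs the lister."""
    # Assumption: the first node in the list plays the primary node.
    runners = nodes[:1] if primary_only else nodes
    listing: list[str] = []
    for _node in runners:
        listing.extend(list_directory(files))
    return listing


shared_dir = {"/shared/in/a.csv", "/shared/in/b.csv"}
nodes = ["node2", "node3"]
print(len(combined_listing(shared_dir, nodes, primary_only=False)))  # 4: each file listed twice
print(len(combined_listing(shared_dir, nodes, primary_only=True)))   # 2: each file listed once
```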

Created 08-23-2016 01:06 PM

Thanks for the confirmation, @Bryan Bende. As you said, if the source directory is not meant to be a shared location, ListFile should always run on the primary node. So I tried exactly that: I changed the ListFile source directory to a path that is not a shared location, and in the Scheduling settings I set it to run on "Primary node only". The problem is that the flow now throws an error along the lines of "Can't find path", meaning the file on the primary node cannot be located. Surprisingly, if I take the downstream FetchFile processor out of the process group (and therefore out of load-balanced mode) and connect it directly after ListFile, the error goes away. That, however, means FetchFile no longer runs in distributed mode.

Is this the expected behavior, or am I missing something?

Created 08-23-2016 01:14 PM

Ah, right. I'm used to setting this up with ListHDFS + FetchHDFS, which is different because HDFS is a shared resource. You are right that it will not work correctly when the directory is not a shared location, because another node can't fetch a file that exists only on the primary node. Sorry about the confusion.
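The failure discussed in this thread can be reproduced with a toy model in which each node has its own local disk and the source directory exists only on the primary. This is a hypothetical sketch, not NiFi code: `local_disks`, `fetch`, the node names and the fixed path-to-node assignment are all invented for illustration. Listing on the primary succeeds, but any path that site-to-site hands to another node cannot be fetched there.

```python
# Hypothetical per-node local disks: the source directory exists only on node2 (the primary).
local_disks: dict[str, dict[str, bytes]] = {
    "node2": {"/local/in/a.csv": b"a", "/local/in/b.csv": b"b"},
    "node3": {},
}


def fetch(node: str, path: str) -> bytes:
    """Toy FetchFile: a node can only read files on its own local disk."""
    disk = local_disks[node]
    if path not in disk:
        raise FileNotFoundError(f"{node}: cannot find {path}")
    return disk[path]


# The listing runs on the primary only, so it sees node2's local files...
listing = sorted(local_disks["node2"])
# ...but site-to-site may deliver any of those paths to any node (assumed assignment).
assignments = {"/local/in/a.csv": "node2", "/local/in/b.csv": "node3"}

for path in listing:
    node = assignments[path]
    try:
        fetch(node, path)
        print(f"{node} fetched {path}")
    except FileNotFoundError as err:
        print(f"failed: {err}")
```

Keeping the fetch on the same node as the listing avoids the error, at the cost of losing the distribution, which matches what was observed above.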