
Slowness in NIFI UI

Labels: Apache NiFi

Created on 12-27-2019 03:17 AM, last edited on 12-27-2019 07:44 AM by cjervis


Hi Team,

I have been facing slowness in the NiFi Web UI for the last 2 months. As a workaround I restart the NiFi service, and it works normally for a while, but after some time it starts responding slowly again. Kindly provide a permanent fix for this issue.

Created 12-27-2019 06:19 AM


Hello @saivenkatg55, can you please provide more details about your NiFi environment? Without this information we cannot be very helpful:

- How many Nodes?

- How much RAM?

- How many Cores?

- What are the min:max values for Memory in the NiFi Configuration?

- How many Processors are running?

That said, restarting NiFi often on a heavily used system is a good practice. However, needing to do so is also an indication that the system needs additional attention for performance tuning. Items that could need attention are memory settings, garbage collection settings, increasing resources (nodes/RAM/cores), and optimization of the flow itself.
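For the min:max memory question, the JVM heap bounds are the -Xms and -Xmx java.arg entries in bootstrap.conf (a default install ships java.arg.2=-Xms512m and java.arg.3=-Xmx512m). Below is a minimal sketch that pulls those values out of the file's text, assuming simple key=value lines with # comments; the helper names parse_java_args and heap_settings are hypothetical, not part of NiFi.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical helper: matches lines like "java.arg.3=-Xmx512m".
JAVA_ARG_RE = re.compile(r"java\.arg\.(\d+)\s*=\s*(.*)$")


def parse_java_args(conf_text: str) -> Dict[int, str]:
    """Return {index: value} for every java.arg.<n>=value line in bootstrap.conf text."""
    args: Dict[int, str] = {}
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        match = JAVA_ARG_RE.match(line)
        if match:
            args[int(match.group(1))] = match.group(2).strip()
    return args


def heap_settings(conf_text: str) -> Tuple[Optional[str], Optional[str]]:
    """Return the (min heap, max heap) values from -Xms/-Xmx; None if a value is not set."""
    xms: Optional[str] = None
    xmx: Optional[str] = None
    for value in parse_java_args(conf_text).values():
        if value.startswith("-Xms"):
            xms = value[len("-Xms"):]
        elif value.startswith("-Xmx"):
            xmx = value[len("-Xmx"):]
    return xms, xmx


sample = """
# JVM memory settings
java.arg.2=-Xms512m
java.arg.3=-Xmx512m
java.arg.20=-XX:+PrintGCDetails
"""
print(heap_settings(sample))  # ('512m', '512m')
```

In practice you would pass the contents of your own conf/bootstrap.conf instead of the sample string.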

Created 12-27-2019 07:09 AM


A very common reason for UI slowness is JVM garbage collection (GC). All GC events are stop-the-world events, whether partial or full. Partial/young GC is normal and healthy, but if it is triggered back to back nonstop, or runs against a very large configured JVM heap, it can take time to complete, and NiFi (including the UI) is paused while it runs.

You can enable GC logging in your NiFi bootstrap.conf file so you can see how often GC is running to free space in your NiFi JVM. To do this, add some additional java.arg.<unique num>= entries to your NiFi bootstrap.conf as follows:

java.arg.20=-XX:+PrintGCDetails

java.arg.21=-XX:+PrintGCTimeStamps

java.arg.22=-XX:+PrintGCDateStamps

java.arg.23=-Xloggc:<file>

The last entry allows you to specify a separate log file for this output to be written to, rather than stdout.
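Once the log is being written, a quick way to judge GC pressure is to count pauses and add up their wall-clock time. The sketch below assumes a Java 8 HotSpot JVM (the -XX:+PrintGC... flags above are Java 8 options; newer JDKs replace them with -Xlog:gc unified logging), where each pause is followed by a "[Times: user=... sys=..., real=N secs]" entry. Other collectors and JDK versions format their output differently, so treat this as a rough, hypothetical helper rather than a complete GC log parser.

```python
import re
from typing import Dict, Iterable

# Assumed Java 8 HotSpot format: the wall-clock pause is reported as "real=N secs".
REAL_RE = re.compile(r"real=(\d+(?:\.\d+)?) secs")


def summarize_gc_log(lines: Iterable[str]) -> Dict[str, float]:
    """Count GC pauses, Full GCs, and total/max pause seconds in a PrintGCDetails log."""
    pauses = 0
    full_gcs = 0
    total = 0.0
    longest = 0.0
    for line in lines:
        if "Full GC" in line:
            full_gcs += 1
        for match in REAL_RE.finditer(line):
            secs = float(match.group(1))
            pauses += 1
            total += secs
            longest = max(longest, secs)
    return {
        "pauses": pauses,
        "full_gcs": full_gcs,
        "total_pause_secs": round(total, 3),
        "max_pause_secs": longest,
    }


# Two illustrative lines in the assumed format; for a real log use:
#   with open("<file>") as f: print(summarize_gc_log(f))
sample = [
    "2019-12-27T03:17:00.123+0000: 12.345: [GC (Allocation Failure) "
    "[PSYoungGen: 65536K->10720K(76288K)] 65536K->10736K(251392K), 0.0123456 secs] "
    "[Times: user=0.03 sys=0.01, real=0.01 secs]",
    "2019-12-27T03:18:00.456+0000: 72.678: [Full GC (Ergonomics) "
    "[PSYoungGen: 10720K->0K(76288K)] 10736K->10500K(251392K), 0.2012345 secs] "
    "[Times: user=0.50 sys=0.02, real=0.20 secs]",
]
print(summarize_gc_log(sample))
```

Many short pauses back to back, or a growing share of Full GC events, both point toward the heap pressure described here.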

NiFi stores information about component status in heap memory. This is the info you see on any component (processor, connection, process group, etc.) when you right-click on it and select "view status history" from the context menu. You'll notice that each component reports status for a number of data points. When you restart NiFi, everything in JVM heap memory is gone, so over the next 24 hours (the default data point retention) the JVM heap fills back up until it again holds a full set of status points.

If this status history is not that important to you, you can reduce its heap usage by adjusting the component status history buffer size and data point frequency via the following properties in the nifi.properties file:

nifi.components.status.repository.buffer.size=1440

nifi.components.status.snapshot.frequency=1 min

The above represents the defaults. For every status metric of every single component, NiFi retains 1440 status points (recording 1 point every 1 min). This totals 24 hours' worth of status history for every metric. Changing the buffer size to 288 and the frequency to 5 minutes reduces the number of points retained by 80% while still giving you 24 hours' worth of points.
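The arithmetic behind those two settings can be checked with a few lines of Python. The retention window is simply buffer size times snapshot frequency, and the heap cost scales with the number of components times the metrics each one reports. The flow size (500 components with 10 status metrics each) is a hypothetical example, not a NiFi figure.

```python
def retention_minutes(buffer_size: int, frequency_minutes: int) -> int:
    """Time window covered by the status history: points kept x minutes between points."""
    return buffer_size * frequency_minutes


def reduction_percent(old_buffer: int, new_buffer: int) -> int:
    """How many percent fewer points are retained per metric after shrinking the buffer."""
    return 100 * (old_buffer - new_buffer) // old_buffer


def points_in_heap(components: int, metrics_per_component: int, buffer_size: int) -> int:
    """Rough count of status points held in heap across all components (hypothetical inputs)."""
    return components * metrics_per_component * buffer_size


defaults = (1440, 1)  # buffer.size=1440, snapshot.frequency=1 min
tuned = (288, 5)      # buffer.size=288, snapshot.frequency=5 min

print(retention_minutes(*defaults) / 60, retention_minutes(*tuned) / 60)  # 24.0 24.0
print(reduction_percent(defaults[0], tuned[0]))  # 80
# Hypothetical flow: 500 components x 10 status metrics each.
print(points_in_heap(500, 10, defaults[0]), points_in_heap(500, 10, tuned[0]))  # 7200000 1440000
```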

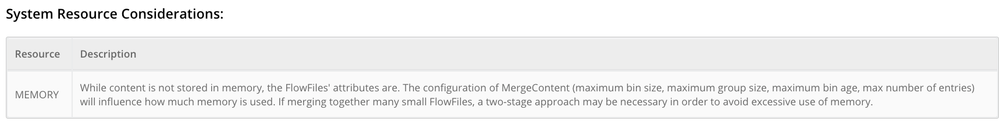

The dataflows you build may result in high heap usage, putting a lot of pressure on the heap. NiFi components that can cause high heap usage are documented as such. From the NiFi Global menu in the upper-right corner of the NiFi UI, select "Help". You will see the complete list of components on the left-hand side. When you select a component, details about that component are displayed. One of those details is "System Resource Considerations".

For example, here are the system resource considerations for the MergeContent processor:

You may need to make adjustments to your dataflow designs to reduce heap usage.

NiFi also holds FlowFile metadata for queued FlowFiles in heap memory. NiFi does have a configurable swap threshold (applied per connection) to help with heap usage here. When a queue grows too large, FlowFile metadata in excess of the configured swap threshold is written to disk. Swapping FlowFiles in and out from disk can affect dataflow performance. NiFi's default backpressure object threshold for connections is set low enough that swapping would typically never occur. However, if you have lots and lots of connections with queued FlowFiles, that heap usage can add up. This article I wrote may help you here:
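To see why many modest queues can still add up, here is a simplified model: each connection keeps at most the swap threshold's worth of FlowFile metadata in heap, and anything beyond that is treated as swapped to disk. It assumes the default nifi.queue.swap.threshold of 20000 and ignores the detail that NiFi swaps in batches, so the numbers are illustrative only. The flow below (200 connections sitting at a 10,000-object backpressure threshold plus one runaway queue) is hypothetical.

```python
from typing import List

DEFAULT_SWAP_THRESHOLD = 20_000  # assumed nifi.queue.swap.threshold default, applied per connection


def flowfiles_in_heap(queue_depths: List[int], swap_threshold: int = DEFAULT_SWAP_THRESHOLD) -> int:
    """Simplified estimate of FlowFiles whose metadata stays in heap across all connections."""
    return sum(min(depth, swap_threshold) for depth in queue_depths)


def connections_swapping(queue_depths: List[int], swap_threshold: int = DEFAULT_SWAP_THRESHOLD) -> int:
    """Number of connections whose queue exceeds the swap threshold."""
    return sum(1 for depth in queue_depths if depth > swap_threshold)


# Hypothetical flow: 200 connections at 10,000 queued FlowFiles each,
# plus one queue that has grown to 500,000 FlowFiles.
depths = [10_000] * 200 + [500_000]
print(flowfiles_in_heap(depths))     # 2020000
print(connections_swapping(depths))  # 1
```

Only one connection ever swaps here, yet roughly two million FlowFiles' metadata would still sit in heap, which is the "adds up" effect described above.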

https://community.cloudera.com/t5/Community-Articles/Dissecting-the-NiFi-quot-connection-quot-Heap-u...

-----

Other than heap usage, component validation can affect NiFi UI responsiveness. Here is an article I wrote about that:

https://community.cloudera.com/t5/Community-Articles/HDF-NiFi-Improving-the-performance-of-your-UI/t...

Here is another useful article you may want to read:

https://community.cloudera.com/t5/Community-Articles/HDF-NIFI-Best-practices-for-setting-up-a-high-p...

Hope this gives you some direction for improving your NiFi UI responsiveness/performance,

Matt