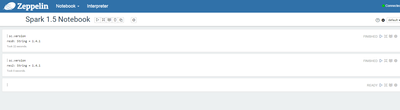

Spark 1.5 with Zeppelin - but sc.version prints 1.4.1

- Labels: Apache Ambari, Apache Spark, Apache Zeppelin

Created on 01-28-2016 07:06 PM - edited 08-19-2019 03:50 AM

Created 01-29-2016 07:56 PM

Zeppelin ships with its own embedded Spark. If you follow the Zeppelin tech preview link Neeraj pointed to, you can get it to work with Spark 1.5.x on HDP 2.3.4.

On the Apache side, Zeppelin released a version compatible with Spark 1.6 on Jan 22nd. You can download that Zeppelin binary from https://zeppelin.incubator.apache.org/download.html and follow the Zeppelin TP tutorial (http://hortonworks.com/hadoop-tutorial/apache-zeppelin/) to get it to work with the Spark 1.6 Tech Preview (http://hortonworks.com/hadoop-tutorial/apache-spark-1-6-technical-preview-with-hdp-2-3/).

Created 01-28-2016 08:33 PM

That's strange. Do you have something in your classpath referencing old Spark libs? @vbhoomireddy @vshukla

Created 01-28-2016 10:13 PM

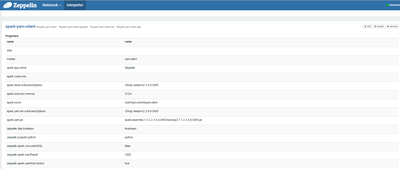

Can you check what the SPARK_HOME value is in zeppelin-env.sh?

Created 01-28-2016 10:17 PM

Interesting, SPARK_HOME is not defined in that file on my side. I only have this comment:

# export SPARK_HOME # (required) When it is defined, load it instead of Zeppelin embedded Spark libraries

Would that explain why we end up using the Spark libraries in the Zeppelin jar instead of the one defined in spark.yarn.jar?

Created 01-29-2016 03:09 PM

Have you tried setting SPARK_HOME? I think that will most likely solve your problem.

For more info, take a look at this link and scroll down to the Configure section: https://github.com/apache/incubator-zeppelin
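For reference, a minimal sketch of what that line in zeppelin-env.sh could look like once it is uncommented. The path below is an assumption (the usual Spark client location on an HDP node), so point it at wherever your Spark 1.5.x install actually lives:

```shell
# zeppelin-env.sh fragment (sketch).
# ASSUMPTION: Spark 1.5.x is installed under /usr/hdp/current/spark-client;
# replace the path with your own Spark directory.
export SPARK_HOME=/usr/hdp/current/spark-client
```

Zeppelin probably needs a restart to pick up the change, after which sc.version in a notebook should tell you which Spark is really in use.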

Created 01-28-2016 10:13 PM

I have the same behaviour on my Sandbox (HDP 2.3.4). This seems strange because the versions set in spark.yarn.jar and spark.home seem to be totally bypassed.

If you look at the jar zeppelin-spark-0.6.0-incubating-SNAPSHOT.jar inside <ZEPPELIN-HOME>/interpreter/spark and extract the file META-INF/maven/org.apache.zeppelin/zeppelin-spark/pom.xml, you'll see this:

<spark.version>1.4.1</spark.version>
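If you want to run the same check without unpacking the jar by hand, something like the sketch below should work. It assumes `unzip` and `sed` are available on the box. The two function names are made up for illustration, and the jar and pom paths are the ones quoted above:

```shell
# Print the first <spark.version> value found in a pom.xml read from stdin.
spark_version_from_pom() {
  sed -n 's:.*<spark\.version>\(.*\)</spark\.version>.*:\1:p' | head -n 1
}

# Print the Spark version a zeppelin-spark jar was built against.
# $1: path to the jar (hypothetical helper, not part of Zeppelin)
bundled_spark_version() {
  unzip -p "$1" META-INF/maven/org.apache.zeppelin/zeppelin-spark/pom.xml \
    | spark_version_from_pom
}

# Example call, with ZEPPELIN_HOME set to your Zeppelin install directory:
# bundled_spark_version "$ZEPPELIN_HOME/interpreter/spark/zeppelin-spark-0.6.0-incubating-SNAPSHOT.jar"
```

This only reports what the interpreter jar was compiled against. It does not by itself prove which Spark is loaded at runtime, so sc.version is still the final check.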

Created 01-28-2016 10:26 PM

@Sourygna Luangsay The sandbox came out before Spark 1.5.2, so that's expected. Just make sure the classpath is not pointing to old jars.

Created 01-28-2016 10:48 PM

Actually, I upgraded my Sandbox to the latest version of HDP.

When I do a "locate" on my Sandbox, I no longer find a reference to any spark-1.4.1 jar, only 1.5.2 jars.
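In case the locate database is stale, a `find`-based variant of the same search is sketched below. The function name and the `spark-*<version>*.jar` naming pattern are assumptions for illustration, so adjust the pattern if your jars are named differently:

```shell
# List jar files under a directory whose names mention a given Spark version.
# $1: directory to search, $2: version string such as 1.4.1
# (hypothetical helper; assumes jar names start with "spark-")
find_spark_jars() {
  find "$1" -type f -name "spark-*$2*.jar" 2>/dev/null
}

# Example call: look for leftover 1.4.1 jars under /usr/hdp
# find_spark_jars /usr/hdp 1.4.1
```

No output means no file matching that pattern exists under the directory you searched.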

Created 01-28-2016 10:50 PM

@Sourygna Luangsay And it still says sc.version is 1.4.1? Can you go into the admin page in Ambari and double-check that you completed the upgrade?

Created 01-29-2016 03:20 PM

Please follow this step-by-step tutorial: http://hortonworks.com/hadoop-tutorial/apache-zeppelin/