Cloudera Community: Support Questions

Spark 2 with Python3.6.5 on HDP-2.6.2

Created on 10-23-2018 06:28 AM - edited 08-17-2019 07:05 PM

Hi all,

I have a cluster running HDP-2.6.2 and I am currently having issues trying to run Spark2 with Python 3.6.5 (Anaconda).

The cluster has 10 nodes (1 edge node, 2 master nodes, 5 data nodes and 2 security nodes). The cluster uses Kerberos for security.

All the nodes in the cluster have Anaconda Python 3.6.5 installed in /root/opt/anaconda3.

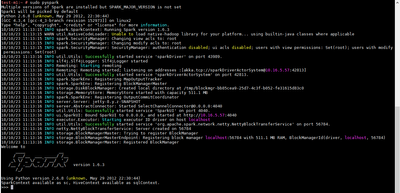

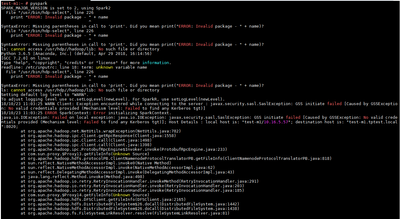

When I try to run pyspark while logged in as root, I get the following errors (spark-error.png attached).

However, if I run sudo pyspark, the error goes away, but the shell starts with Spark 1.6.3 and Python 2.6.8 (Sudo-Pyspark.png attached).

I have tried updating .bashrc with PYSPARK_PYTHON pointing to the Anaconda Python 3 path and SPARK_MAJOR_VERSION set to 2. After reloading .bashrc and running pyspark again, I get the same error as above. Running sudo pyspark does not pick up the updated variables, since they are set only for the root user, so it falls back to Spark 1.6.3 and Python 2.6.8.
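For reference, the .bashrc change described above would look roughly like the sketch below. It assumes the Anaconda interpreter sits at /root/opt/anaconda3/bin/python3 (the usual Anaconda layout, not confirmed in the post). The sudo comparison uses sudo's -n flag only so it fails fast instead of prompting for a password.

```shell
# ~/.bashrc additions as described in the question (sketch).
# Assumption: Anaconda's interpreter is at <install dir>/bin/python3.
export SPARK_MAJOR_VERSION=2
export PYSPARK_PYTHON=/root/opt/anaconda3/bin/python3

# After re-reading the file (source ~/.bashrc), confirm the current shell sees them.
echo "SPARK_MAJOR_VERSION=$SPARK_MAJOR_VERSION"
echo "PYSPARK_PYTHON=$PYSPARK_PYTHON"

# Compare with the environment a sudo'd command gets. With sudo's usual
# env_reset default these variables are normally not passed through,
# which is consistent with sudo pyspark falling back to Spark 1 / Python 2.
if sudo -n env 2>/dev/null | grep -Eq '^(SPARK_MAJOR_VERSION|PYSPARK_PYTHON)='; then
  echo "variables are visible under sudo"
else
  echo "variables not visible under sudo (or sudo needs a password)"
fi
```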

I have found other forum posts with similar error messages but have not yet found a resolution that works for me. I would really appreciate it if someone could help with this.

Created 10-23-2018 05:34 PM

Did you do the kinit before launching the pyspark shell?

Created 10-24-2018 05:15 AM

Thanks for the reply, and good question. For Kerberos I usually generate a ticket with the MIT Kerberos desktop application, but only for my production cluster. This is a new development cluster I recently got access to, so I will have to check with the system admin about running kinit. It does seem like a plausible reason for not having access to certain folders.

Created 11-08-2018 08:39 AM

You need a valid Kerberos ticket on the machine where you launch the pyspark shell (test-m1). The exception clearly says 'No valid credentials provided'.

Hope this helps.
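A minimal sketch of that check, assuming MIT Kerberos client tools are installed on the node: klist -s exits non-zero when there is no readable, unexpired ticket, and kinit obtains one. The principal name and the ensure_ticket helper are hypothetical placeholders, not taken from the cluster in the question.

```shell
# Hypothetical principal; replace with your own user and realm.
PRINCIPAL="your_user@EXAMPLE.COM"

# Hypothetical helper: make sure a Kerberos ticket exists on this machine
# (e.g. test-m1) before starting pyspark.
ensure_ticket() {
  # MIT klist -s prints nothing and exits 0 only when the credentials
  # cache can be read and the ticket has not expired.
  if klist -s 2>/dev/null; then
    echo "valid Kerberos ticket found"
  else
    echo "no valid ticket; running kinit for $PRINCIPAL"
    kinit "$PRINCIPAL"   # prompts for the principal's password
  fi
}

# Usage:
#   ensure_ticket && klist && pyspark
```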

Created 10-23-2018 08:16 PM

Make sure you are pointing to the right Spark directory.

It looks like your SPARK_HOME might be pointing to "/usr/hdp/2.6.0.11-1/spark" instead of "/usr/hdp/2.6.0.11-1/spark2".

For Spark 2, your .bash_profile should look like this:

    export SPARK_HOME="/usr/hdp/2.6.0.11-1/spark2"
    export SPARK_MAJOR_VERSION=2
    export PYSPARK_PYTHON=python3.5
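Before launching pyspark, a quick sanity check of those three variables can catch the spark vs. spark2 mix-up described above. The check_spark2_env function below is a hypothetical helper, not part of HDP; it only inspects the environment and does not start Spark.

```shell
# Hypothetical helper: verify the Spark 2 / Python 3 environment variables.
# Prints each problem found and returns non-zero if there were any.
check_spark2_env() {
  rc=0
  # SPARK_HOME should be the spark2 directory, not the Spark 1.x one.
  case "$SPARK_HOME" in
    */spark2) ;;
    *) echo "SPARK_HOME is '$SPARK_HOME', expected a path ending in /spark2"; rc=1 ;;
  esac
  # On HDP 2.6, SPARK_MAJOR_VERSION selects which installed Spark the
  # pyspark / spark-submit commands use.
  if [ "$SPARK_MAJOR_VERSION" != "2" ]; then
    echo "SPARK_MAJOR_VERSION is '$SPARK_MAJOR_VERSION', expected 2"; rc=1
  fi
  # PYSPARK_PYTHON must resolve to an executable for the current user.
  if ! command -v "$PYSPARK_PYTHON" >/dev/null 2>&1; then
    echo "PYSPARK_PYTHON '$PYSPARK_PYTHON' is not executable on this machine"; rc=1
  fi
  return "$rc"
}

# Usage (after sourcing ~/.bash_profile):
#   check_spark2_env && pyspark
```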