Cloudera Community: Support Questions


Spark Phoenix Kerberos write failure

Labels: Apache Phoenix, Apache Spark

Created on 06-29-2017 02:01 PM - edited 08-17-2019 06:15 PM


Hello,

Reading from Phoenix works (I get a DataFrame), but I am not able to write to it.

I'm using this command:

spark-submit --keytab /export/home/user/keytab --principal user --class testPhoenix.Main --master yarn --deploy-mode client --files /etc/spark/conf/hive-site.xml,/etc/hbase/2.5.3.0-37/0/hbase-site.xml --jars /usr/hdp/current/phoenix-client/phoenix-client.jar,/usr/hdp/current/hbase-client/lib/hbase-common.jar,/usr/hdp/current/hbase-client/lib/hbase-client.jar,/usr/hdp/current/hbase-client/lib/hbase-client.jar,/usr/hdp/current/hbase-client/lib/hbase-server.jar,/usr/hdp/current/hbase-client/lib/hbase-protocol.jar,/usr/hdp/current/hbase-client/lib/guava-12.0.1.jar,/usr/hdp/current/hbase-client/lib/htrace-core-3.1.0-incubating.jar --conf "spark.executor.extraJavaOptions=-Djava.security.auth.login.config=/export/home/user/jaas-client.conf" --conf "spark.driver.extraClassPath=/usr/hdp/current/phoenix-client/phoenix-client.jar,:/usr/hdp/current/hbase-client/lib/hbase-common.jar:/usr/hdp/current/hbase-client/lib/hbase-client.jar:/usr/hdp/current/hbase-client/lib/hbase-client.jar:/usr/hdp/current/hbase-client/lib/hbase-server.jar:/usr/hdp/current/hbase-client/lib/hbase-protocol.jar:/usr/hdp/current/hbase-client/lib/guava-12.0.1.jar:/usr/hdp/current/hbase-client/lib/htrace-core-3.1.0-incubating.jar" --driver-java-options "-Djava.security.auth.login.config=/export/home/user/jaas-client.conf" testphoenix_2.10-1.0.jar
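The `spark.driver.extraClassPath` value in this command contains `phoenix-client.jar,:` and lists `hbase-client.jar` twice. On Linux the JVM splits a classpath on `:` only, so the first entry would be read as a file name ending in a comma and would likely not resolve. The thread does not confirm whether that alone explains the `ClassNotFoundException`. Below is a small POSIX shell sketch that flags comma-containing, empty and duplicate entries in such a string. The function name `check_classpath` is hypothetical.

```shell
# Sketch: flag suspicious entries in a colon-separated JVM classpath string.
# Assumption: Linux paths, where ':' is the only classpath separator.
# The body runs in a subshell "( ... )" so the IFS and set -f changes do not leak.
check_classpath() (
  set -f        # do not glob-expand entries such as lib/*
  IFS=:         # split only on the classpath separator
  status=0
  seen=:
  for entry in $1; do
    if [ -z "$entry" ]; then
      printf '%s\n' "empty entry"
      status=1
      continue
    fi
    case "$entry" in
      *,*) printf '%s\n' "entry contains a comma: $entry"; status=1 ;;
    esac
    case "$seen" in
      *":$entry:"*) printf '%s\n' "duplicate entry: $entry"; status=1 ;;
    esac
    seen="$seen$entry:"
  done
  exit "$status"   # non-zero if anything was reported
)
```

Run against the `spark.driver.extraClassPath` value above, it would report the comma entry and the repeated `hbase-client.jar`. The `--jars` list, by contrast, is comma-separated by design, so the check only applies to the classpath settings.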

I get this error:

17/06/28 11:35:15 INFO deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

Exception in thread "main" java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.phoenix.mapreduce.PhoenixOutputFormat not found

If I replace the Phoenix client JAR in the command with phoenix-4.7.0-HBase-1.1-client-spark.jar, I get:

Exception in thread "main" org.codehaus.jackson.map.exc.UnrecognizedPropertyException: Unrecognized field "Token" (Class org.apache.hadoop.yarn.api.records.timeline.TimelineDelegationTokenResponse), not marked as ignorable
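Both errors are class-loading symptoms, so it helps to know which of the candidate JARs actually contain the class involved. Below is a minimal POSIX shell sketch that does this. It assumes Info-ZIP `unzip` is installed (`jar tf` from a JDK lists entries too). The function names `class_entry` and `find_class_in_jars` are hypothetical.

```shell
# Map a class name to its entry name inside a JAR, e.g.
# org.apache.phoenix.mapreduce.PhoenixOutputFormat
#   -> org/apache/phoenix/mapreduce/PhoenixOutputFormat.class
class_entry() {
  printf '%s.class\n' "$(printf '%s' "$1" | tr . /)"
}

# Usage: find_class_in_jars CLASS_NAME JAR...
# Prints every given JAR that contains the class; prints nothing if none does.
find_class_in_jars() (
  entry=$(class_entry "$1")
  shift
  for jar in "$@"; do
    [ -f "$jar" ] || continue   # skip missing files and unmatched globs
    # `unzip -Z1` (zipinfo mode) prints one archive member name per line.
    if unzip -Z1 "$jar" 2>/dev/null | grep -qxF "$entry"; then
      printf '%s\n' "$jar"
    fi
  done
)
```

For example, `find_class_in_jars org.apache.phoenix.mapreduce.PhoenixOutputFormat /usr/hdp/current/phoenix-client/*.jar` lists the Phoenix JARs that ship the class from the `ClassNotFoundException`. Running it with `org.codehaus.jackson.map.ObjectMapper` over the JARs on the classpath shows which of them bundle their own Jackson 1.x classes. That may be worth checking for the `TimelineDelegationTokenResponse` error, although the thread does not confirm a Jackson conflict.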

I'm using Spark 1.6, Scala 2.10.6 and Phoenix 4.7.

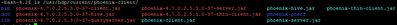

My sbt dependencies:

libraryDependencies ++= Seq( "org.apache.spark" % "spark-core_2.10" % "1.6.0" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"),"org.apache.spark" % "spark-sql_2.10" % "1.6.0" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"),"org.apache.hadoop" % "hadoop-common" % "2.7.0" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"),"org.apache.spark" % "spark-sql_2.10" % "1.6.0" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"),"org.apache.spark" % "spark-hive_2.10" % "1.6.0" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"),"org.apache.spark" % "spark-yarn_2.10" % "1.6.0" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"),"org.apache.phoenix" % "phoenix-spark" % "4.7.0-HBase-1.1" exclude ("org.apache.hadoop","hadoop-yarn-server-web-proxy"))

This is the content of the /usr/hdp/current/phoenix-client directory:

And of its lib subdirectory:

Best,

Daniel

Created 07-13-2017 02:02 PM


Hello, I have found the answer. I need to use this command line:

spark-submit --master yarn --deploy-mode client --class testPhoenix.Main --conf "spark.executor.extraClassPath=/usr/hdp/current/hbase-client/lib/hbase-common.jar:/usr/hdp/current/hbase-client/lib/hbase-client.jar:/usr/hdp/current/hbase-client/lib/hbase-server.jar:/usr/hdp/current/hbase-client/lib/hbase-protocol.jar:/usr/hdp/current/hbase-client/lib/guava-12.0.1.jar:/usr/hdp/current/hbase-client/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/current/spark-client/lib/spark-assembly-1.6.2.2.5.3.0-37-hadoop2.7.3.2.5.3.0-37.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar:/usr/hdp/current/phoenix-client/phoenix-spark-4.7.0.2.5.3.0-37.jar" --conf "spark.driver.extraClassPath=/usr/hdp/current/hbase-client/lib/hbase-common.jar:/usr/hdp/current/hbase-client/lib/hbase-client.jar:/usr/hdp/current/hbase-client/lib/hbase-server.jar:/usr/hdp/current/hbase-client/lib/hbase-protocol.jar:/usr/hdp/current/hbase-client/lib/guava-12.0.1.jar:/usr/hdp/current/hbase-client/lib/htrace-core-3.1.0-incubating.jar:/usr/hdp/current/spark-client/lib/spark-assembly-1.6.2.2.5.3.0-37-hadoop2.7.3.2.5.3.0-37.jar:/usr/hdp/current/phoenix-client/phoenix-client.jar:/usr/hdp/current/phoenix-client/phoenix-spark-4.7.0.2.5.3.0-37.jar" --keytab PATH_KEYTAB --principal ACCOUNT --conf "spark.executor.extraJavaOptions=-Djava.security.auth.login.config=/export/home/adq34proc/jaas-client.conf" --conf "spark.driver.extraJavaOptions=-Djava.security.auth.login.config=/export/home/adq34proc/jaas-client.conf" --files /usr/hdp/current/hbase-client/conf/hbase-site.xml testphoenix_2.10-1.0.jar

Also, the path to hbase-site.xml was wrong. I need to pass /usr/hdp/current/hbase-client/conf/hbase-site.xml to --files instead of /etc/spark/conf/hive-site.xml,/etc/hbase/2.5.3.0-37/0/hbase-site.xml.
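The working command repeats the same nine-entry list for `spark.driver.extraClassPath` and `spark.executor.extraClassPath`. Building that list once keeps the two settings identical and avoids the stray comma and duplicate entry from the first attempt. Below is a minimal POSIX shell sketch. The names `join_classpath`, `HDP` and `CP` are hypothetical, and the JAR versions are the HDP 2.5.3.0-37 ones from the answer.

```shell
# Sketch: join JAR paths into one colon-separated classpath,
# dropping empty arguments and duplicates while keeping the first occurrence.
join_classpath() (
  out=""
  for jar in "$@"; do
    [ -n "$jar" ] || continue
    case ":$out:" in
      *":$jar:"*) continue ;;   # already present
    esac
    out="${out:+$out:}$jar"
  done
  printf '%s\n' "$out"
)

HDP=/usr/hdp/current
CP=$(join_classpath \
  "$HDP/hbase-client/lib/hbase-common.jar" \
  "$HDP/hbase-client/lib/hbase-client.jar" \
  "$HDP/hbase-client/lib/hbase-server.jar" \
  "$HDP/hbase-client/lib/hbase-protocol.jar" \
  "$HDP/hbase-client/lib/guava-12.0.1.jar" \
  "$HDP/hbase-client/lib/htrace-core-3.1.0-incubating.jar" \
  "$HDP/spark-client/lib/spark-assembly-1.6.2.2.5.3.0-37-hadoop2.7.3.2.5.3.0-37.jar" \
  "$HDP/phoenix-client/phoenix-client.jar" \
  "$HDP/phoenix-client/phoenix-spark-4.7.0.2.5.3.0-37.jar")
```

The command line can then pass `--conf "spark.driver.extraClassPath=$CP" --conf "spark.executor.extraClassPath=$CP"`, which yields the same values as the answer above.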

Created 06-29-2017 02:01 PM


This is the output:

17/06/28 12:07:27 INFO ZooKeeper: Initiating client connection, connectString=sldifrdwbhn01.fr.intranet:2181,sldifrdwbhd01.fr.intranet:2181,sldifrdwbhd02.fr.intranet:2181,sldifrdwbhn02.fr.intranet:2181 sessionTimeout=90000 watcher=hconnection-0x6629643d0x0, quorum=sldifrdwbhn01.fr.intranet:2181,sldifrdwbhd01.fr.intranet:2181,sldifrdwbhd02.fr.intranet:2181,sldifrdwbhn02.fr.intranet:2181, baseZNode=/hbase-secure

Debug is true storeKey true useTicketCache false useKeyTab true doNotPrompt false ticketCache is null isInitiator true KeyTab is /export/home/user/keytab refreshKrb5Config is false principal is user@DOMAIN.NET tryFirstPass is false useFirstPass is false storePass is false clearPass is false

principal is user@DOMAIN.NET

Will use keytab

Commit Succeeded

17/06/28 12:07:27 INFO Login: successfully logged in.

17/06/28 12:07:27 INFO Login: TGT refresh thread started.

17/06/28 12:07:27 INFO ZooKeeperSaslClient: Client will use GSSAPI as SASL mechanism.

17/06/28 12:07:27 INFO ClientCnxn: Opening socket connection to server sldifrdwbhd02.fr.intranet/100.78.161.161:2181. Will attempt to SASL-authenticate using Login Context section 'Client'

17/06/28 12:07:27 INFO ClientCnxn: Socket connection established to sldifrdwbhd02.fr.intranet/100.78.161.161:2181, initiating session

17/06/28 12:07:27 INFO Login: TGT valid starting at: Wed Jun 28 12:07:27 CEST 2017

17/06/28 12:07:27 INFO Login: TGT expires: Wed Jun 28 22:07:27 CEST 2017

17/06/28 12:07:27 INFO Login: TGT refresh sleeping until: Wed Jun 28 20:34:47 CEST 2017

17/06/28 12:07:27 INFO ClientCnxn: Session establishment complete on server sldifrdwbhd02.fr.intranet/100.78.161.161:2181, sessionid = 0x25cca6e608d69ef, negotiated timeout = 40000

17/06/28 12:07:28 INFO ConnectionManager$HConnectionImplementation: Closing zookeeper sessionid=0x25cca6e608d69ef

17/06/28 12:07:28 INFO ZooKeeper: Session: 0x25cca6e608d69ef closed

17/06/28 12:07:28 INFO ClientCnxn: EventThread shut down

17/06/28 12:07:28 INFO YarnSparkHadoopUtil: Added HBase security token to credentials.

17/06/28 12:07:28 INFO Client: To enable the AM to login from keytab, credentials are being copied over to the AM via the YARN Secure Distributed Cache.

17/06/28 12:07:28 INFO Client: Uploading resource file:/export/home/user/keytab -> hdfs://hdfsdwb/user/user/.sparkStaging/application_1497275429905_26958/keytab

17/06/28 12:07:29 INFO Client: Using the spark assembly jar on HDFS because you are using HDP, defaultSparkAssembly:hdfs://hdfsdwb/hdp/apps/2.5.3.0-37/spark/spark-hdp-assembly.jar

17/06/28 12:07:29 INFO Client: Source and destination file systems are the same. Not copying hdfs://hdfsdwb/hdp/apps/2.5.3.0-37/spark/spark-hdp-assembly.jar

17/06/28 12:07:29 INFO Client: Uploading resource file:/etc/spark/conf/hive-site.xml -> hdfs://hdfsdwb/user/user/.sparkStaging/application_1497275429905_26958/hive-site.xml

17/06/28 12:07:29 INFO Client: Uploading resource file:/etc/hbase/2.5.3.0-37/0/hbase-site.xml -> hdfs://hdfsdwb/user/user/.sparkStaging/application_1497275429905_26958/hbase-site.xml

17/06/28 12:07:30 INFO Client: Uploading resource file:/tmp/spark-06881a01-219d-44aa-bdf8-2815c10a2b17/__spark_conf__1484350768415321037.zip -> hdfs://hdfsdwb/user/user/.sparkStaging/application_1497275429905_26958/__spark_conf__1484350768415321037.zip

17/06/28 12:07:30 INFO SecurityManager: Changing view acls to: user

17/06/28 12:07:30 INFO SecurityManager: Changing modify acls to: user

17/06/28 12:07:30 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(user); users with modify permissions: Set(user)

17/06/28 12:07:30 INFO Client: Submitting application 26958 to ResourceManager

17/06/28 12:07:30 INFO Client: Deleting staging directory .sparkStaging/application_1497275429905_26958

17/06/28 12:07:30 ERROR SparkContext: Error initializing SparkContext.

org.codehaus.jackson.map.exc.UnrecognizedPropertyException: Unrecognized field "Token" (Class org.apache.hadoop.yarn.api.records.timeline.TimelineDelegationTokenResponse), not marked as ignorable

at [Source: N/A; line: -1, column: -1] (through reference chain: org.apache.hadoop.yarn.api.records.timeline.TimelineDelegationTokenResponse["Token"])

at org.codehaus.jackson.map.exc.UnrecognizedPropertyException.from(UnrecognizedPropertyException.java:53)

at org.codehaus.jackson.map.deser.StdDeserializationContext.unknownFieldException(StdDeserializationContext.java:267)

at org.codehaus.jackson.map.deser.std.StdDeserializer.reportUnknownProperty(StdDeserializer.java:673)

at org.codehaus.jackson.map.deser.std.StdDeserializer.handleUnknownProperty(StdDeserializer.java:659)

at org.codehaus.jackson.map.deser.BeanDeserializer.handleUnknownProperty(BeanDeserializer.java:1365)

at org.codehaus.jackson.map.deser.BeanDeserializer._handleUnknown(BeanDeserializer.java:725)

at org.codehaus.jackson.map.deser.BeanDeserializer.deserializeFromObject(BeanDeserializ
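The `Krb5LoginModule` debug line in this output shows the options in effect: `useKeyTab true`, `storeKey true`, `useTicketCache false`, plus the keytab path and principal. The ZooKeeper client also logs that it uses the `Client` login context section. The sketch below writes an equivalent jaas-client.conf. It is reconstructed from the log, not taken from the thread. The output path and the `JAAS_CONF` variable are assumptions, and the keytab path and principal are the anonymized values from the log.

```shell
# Hypothetical output path; -Djava.security.auth.login.config must point at this file.
JAAS_CONF="${JAAS_CONF:-./jaas-client.conf}"

# "Client" is the login context section the ZooKeeper client reported using.
# Option values mirror the Krb5LoginModule debug line in the log above.
cat > "$JAAS_CONF" <<'EOF'
Client {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  keyTab="/export/home/user/keytab"
  storeKey=true
  useTicketCache=false
  principal="user@DOMAIN.NET"
  debug=true;
};
EOF
```

Both the driver and the executor options in the commands point at a local path, so the file has to be readable at that path on each host that starts one of those JVMs.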
