Spark job in YARN queue depends on jobs in another queue

Labels: Apache Spark, Apache YARN, Apache Zeppelin

Created on 03-21-2017 04:18 PM - edited 08-18-2019 04:01 AM


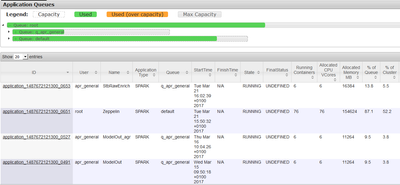

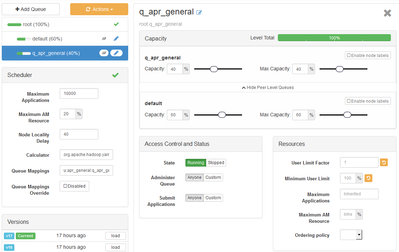

Hi. I have a problem with YARN and the Capacity Scheduler. I created two queues:

1. default - 60%

2. q_apr_general - 40%

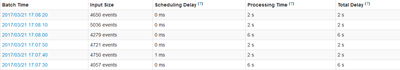

There is one Spark Streaming job in the 'q_apr_general' queue. The processing time for each batch is ~2-6 seconds.

In the default queue I started Zeppelin with preconfigured resources. I added one line to zeppelin-env.sh:

export ZEPPELIN_JAVA_OPTS="-Dhdp.version=2.4.2.0-258 -Dspark.executor.instances=75 -Dspark.executor.cores=6 -Dspark.executor.memory=13G"

The problem is that when I execute a Spark SQL query in Zeppelin, the processing time is ~20-30 seconds. This seems weird to me, because the Zeppelin process and the Spark Streaming job are in different queues.

The Spark Streaming job should not depend on the Zeppelin process in another queue. Does anyone know the reason for my problem?
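For a sense of scale, the executor settings in the ZEPPELIN_JAVA_OPTS line above can be totalled with plain arithmetic. This is only a sketch on the three values quoted in the post; it leaves out the driver and any per-container memory overhead YARN adds, and the thread does not state the cluster size, so it says nothing about whether the request fits inside the default queue.

```python
# Values copied from the ZEPPELIN_JAVA_OPTS line in the question.
executor_instances = 75   # -Dspark.executor.instances
executor_cores = 6        # -Dspark.executor.cores
executor_memory_gb = 13   # -Dspark.executor.memory=13G

# Totals across all requested executors (driver and overhead not included).
total_cores = executor_instances * executor_cores
total_heap_gb = executor_instances * executor_memory_gb

print(f"vcores requested for executors: {total_cores}")
print(f"executor heap requested: {total_heap_gb} GB")
```

Comparing these totals with the vcores and memory that the NodeManagers actually advertise shows how much of the cluster a single Zeppelin interpreter can ask for.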

Created 03-22-2017 05:33 AM


@Mateusz Grabowski, ideally, jobs running in a separate queue (for example, the streaming job) should not affect your Zeppelin processes. You can set a maximum limit on the q_apr_general queue to make sure that at least 60% of the resources remain reserved for the default queue (set yarn.scheduler.capacity.root.q_apr_general.maximum-capacity=40).

Reference for Capacity Scheduler configuration: https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.2/bk_performance_tuning/content/section_creat...
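As a rough illustration of the queue setup discussed here, the sketch below renders the relevant Capacity Scheduler properties as a `<configuration>` fragment in the style of capacity-scheduler.xml. The queue names and percentages come from the thread; the `render` helper is hypothetical and only exists to print the fragment, and a real file would contain other properties that are not shown in the thread.

```python
import xml.etree.ElementTree as ET
from xml.dom import minidom

# Queue names and percentages are taken from the thread.
properties = {
    "yarn.scheduler.capacity.root.queues": "default,q_apr_general",
    "yarn.scheduler.capacity.root.default.capacity": "60",
    "yarn.scheduler.capacity.root.q_apr_general.capacity": "40",
    "yarn.scheduler.capacity.root.q_apr_general.maximum-capacity": "40",
}

def render(props):
    """Hypothetical helper: build Hadoop-style <property> elements."""
    root = ET.Element("configuration")
    for name, value in props.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    # Pretty-print for readability.
    return minidom.parseString(ET.tostring(root)).toprettyxml(indent="  ")

xml_fragment = render(properties)
print(xml_fragment)
```

The same key pattern applies per queue, so an analogous cap on the default queue would be yarn.scheduler.capacity.root.default.maximum-capacity; that setting is not something this thread itself proposes.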

Regarding Spark SQL execution time, there have been a few reports of slow execution of Spark SQL via Zeppelin.

Apache JIRA issue tracking this: ZEPPELIN-323

https://community.hortonworks.com/questions/33484/spark-sql-query-execution-is-very-very-slow-when-c...

Created on 03-22-2017 08:55 AM - edited 08-18-2019 04:01 AM


But the problem is that, because of Zeppelin, the processing time in the q_apr_general queue is longer. This is weird because the processes are in different queues, and YARN should reserve only the resources available to each queue, not more.

I set the maximum limit, but it did not help. Do you have any other ideas?

Created 03-22-2017 05:57 PM


@Mateusz Grabowski, the above comment is not clear. Do you mean that the Zeppelin application is taking resources from the q_apr_general queue?

If the applications running in the default queue are not acquiring containers from the q_apr_general queue, they cannot affect the performance of any application in the q_apr_general queue. In that case, you should debug the streaming application to see where the longer delays occur.

Created 03-22-2017 11:40 PM


Mateusz Grabowski, the queue configuration guarantees how capacity is divided. However, containers from different queues can run on the same NodeManager host. In that case, the execution time of a container may be affected.

So isolating queues is not sufficient. You will also need to configure CGroups for CPU isolation.

Here is a good link on CGroups:

https://hortonworks.com/blog/managing-cpu-resources-in-your-hadoop-yarn-clusters/
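As a sketch of what the CGroups suggestion involves, the snippet below lists yarn-site.xml properties that are commonly part of enabling CPU isolation with the LinuxContainerExecutor. The property names are standard Hadoop ones, but every value here is an illustrative assumption rather than something given in the thread, and the exact set required should be checked against the documentation for the Hadoop/HDP version in use.

```python
# yarn-site.xml properties commonly involved in CGroups CPU isolation.
# All values are illustrative assumptions, not settings from the thread.
cgroup_properties = {
    "yarn.nodemanager.container-executor.class":
        "org.apache.hadoop.yarn.server.nodemanager.LinuxContainerExecutor",
    "yarn.nodemanager.linux-container-executor.resources-handler.class":
        "org.apache.hadoop.yarn.server.nodemanager.util.CgroupsLCEResourcesHandler",
    "yarn.nodemanager.linux-container-executor.cgroups.hierarchy": "/hadoop-yarn",
    "yarn.nodemanager.linux-container-executor.cgroups.mount": "false",
    # "true" makes each container's CPU share a hard cap instead of a soft one.
    "yarn.nodemanager.linux-container-executor.cgroups.strict-resource-usage": "true",
}

# Print in the same key=value form used earlier in the thread.
for name, value in cgroup_properties.items():
    print(f"{name}={value}")
```

With strict resource usage enabled, a container is limited to its own CPU allocation even when the host has idle cores, which trades some throughput for more predictable batch times.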