Spark jobs are stuck under YARN Fair Scheduler

Created on 12-30-2019 08:54 PM - last edited on 12-31-2019 01:09 AM by VidyaSargur

Hi,

I have set up the YARN Fair Scheduler in Ambari (HDP 3.1.0.0-78) on the "default" queue itself. So far, I haven't added any new queues.

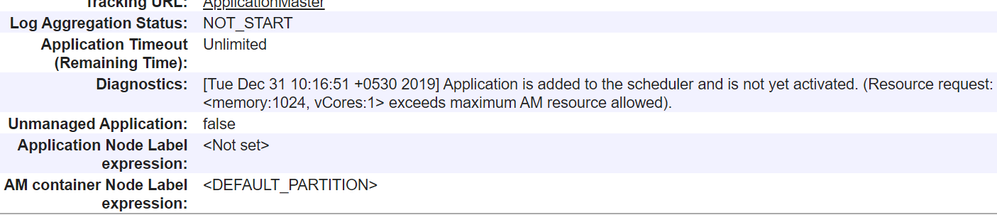

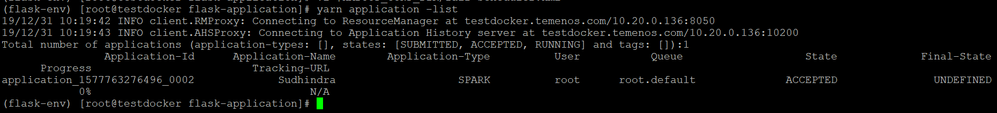

Now I want to run a simple job against the queue, but when I submit the job, the application stays in the "ACCEPTED" state indefinitely. I get the message below in the YARN logs:

Additional information is given below. Please help me fix this issue as soon as possible.

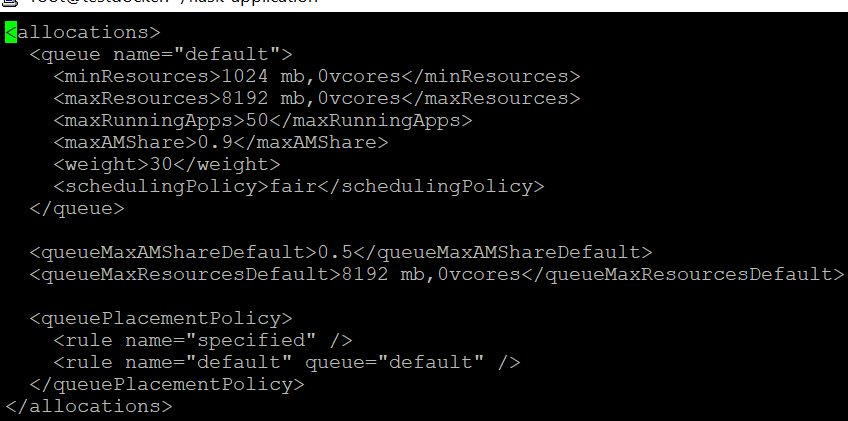

For the "default" queue, the parameters below are set through "fair-scheduler.xml".

Also, no jobs are currently running apart from the one that I have launched.

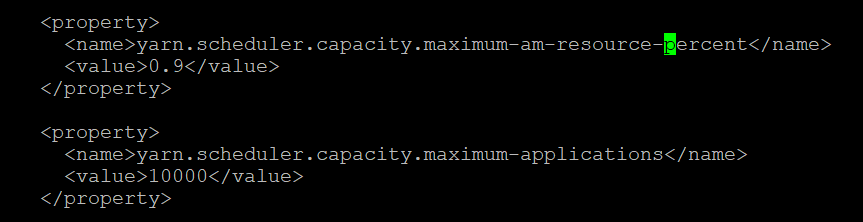

The screenshot below confirms that the maximum AM resource percent is greater than 0.1.

Created 01-17-2020 04:36 AM

Hi Sudhnidra,

Please take a look at:

https://blog.cloudera.com/yarn-fairscheduler-preemption-deep-dive/

https://blog.cloudera.com/untangling-apache-hadoop-yarn-part-3-scheduler-concepts/

https://clouderatemp.wpengine.com/blog/2016/06/untangling-apache-hadoop-yarn-part-4-fair-scheduler-q...

What type of FairScheduler are you using:

- Steady FairShare

- Instantaneous FairShare

What is the weight of the default queue you are submitting your apps to?

Best,

Lyubomir

Created 01-10-2020 03:54 AM

Hi @ssk26

From my perspective, you are limiting your default queue to a minimum of 1024 MB, 0 vCores and a maximum of 8192 MB, 0 vCores. In both cases no cores are set. When you try to run a job, it requires 1024 MB of memory and 1 vCore; it then fails to allocate the 1 vCore because of the 0 vCore min/max restriction and reports 'exceeds maximum AM resources allowed'.

That's why I think the issue is with the core utilization and not with memory.
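To make the reasoning concrete, here is a minimal sketch of a per-dimension fit check. It is a simplified model and not the actual YARN scheduler code: the `Resource` class, the `fits_in` and `max_am_resource` helpers, and the 0.5 AM share are all assumptions chosen for illustration. The point it shows is that a request must fit in every dimension, so a 0 vCore limit rejects the AM no matter how much memory is available.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    """Hypothetical two-dimensional resource (memory and vCores)."""
    memory_mb: int
    vcores: int


def fits_in(request: Resource, limit: Resource) -> bool:
    """True only if every dimension of the request fits within the limit."""
    return request.memory_mb <= limit.memory_mb and request.vcores <= limit.vcores


def max_am_resource(queue_max: Resource, max_am_share: float) -> Resource:
    """Simplified AM limit: a fraction of the queue maximum, per dimension."""
    return Resource(
        memory_mb=int(queue_max.memory_mb * max_am_share),
        vcores=int(queue_max.vcores * max_am_share),
    )


# 8192 MB, 0 vCores as quoted for <queueMaxResourcesDefault> in this thread.
queue_max = Resource(memory_mb=8192, vcores=0)
# The job's AM asks for 1024 MB and 1 vCore.
am_request = Resource(memory_mb=1024, vcores=1)
# 0.5 is an assumed AM share, used only for illustration.
am_limit = max_am_resource(queue_max, max_am_share=0.5)

print(am_limit)                       # memory is plentiful, vCores are zero
print(fits_in(am_request, am_limit))  # the vCore dimension fails the check
```

With any non-zero vCore maximum that leaves at least 1 vCore for the AM, the same check passes, which is consistent with the issue being core allocation and not memory.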

HTH

Best,

Lyubomir

Created 01-08-2020 06:43 AM

Hello,

In your screenshot, <queueMaxResourcesDefault> is set to 8192 mb, 0 vcores, while your job requires at least 1 vcore, as seen in the Diagnostics section.

Please try increasing the vcore value in <queueMaxResourcesDefault> and run the job again.
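As a quick way to spot this kind of setting, the sketch below parses an allocation file and lists maximum-resource entries whose vcore count is 0. The XML content is a hypothetical sample modelled on the values quoted in this thread, not the poster's actual fair-scheduler.xml, and `zero_vcore_settings` is an illustrative helper name. The replacement value of 4 vcores is also just an example; the right number depends on the cluster.

```python
import re
import xml.etree.ElementTree as ET
from typing import List

# Hypothetical allocation file modelled on the values quoted in this thread.
ALLOCATIONS = """
<allocations>
  <queue name="default">
    <minResources>1024 mb, 0 vcores</minResources>
    <maxResources>8192 mb, 0 vcores</maxResources>
  </queue>
  <queueMaxResourcesDefault>8192 mb, 0 vcores</queueMaxResourcesDefault>
</allocations>
"""

# Only maximum limits are checked; a minimum of 0 vcores does not block a job.
MAX_TAGS = {"maxResources", "queueMaxResourcesDefault"}
VCORES = re.compile(r"(\d+)\s*vcores?", re.IGNORECASE)


def zero_vcore_settings(xml_text: str) -> List[str]:
    """Return the tags of maximum-resource settings whose vcore count is 0."""
    root = ET.fromstring(xml_text)
    flagged = []
    for elem in root.iter():
        if elem.tag in MAX_TAGS and elem.text:
            match = VCORES.search(elem.text)
            if match and int(match.group(1)) == 0:
                flagged.append(elem.tag)
    return flagged


print(zero_vcore_settings(ALLOCATIONS))

# Example fix: raise the vcore maximum (4 is an arbitrary illustrative value).
fixed = ALLOCATIONS.replace("8192 mb, 0 vcores", "8192 mb, 4 vcores")
print(zero_vcore_settings(fixed))
```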

Best,

Lyubomir
