Spark not showing Kafka Data Properly

Created on 01-31-2022 05:41 AM - edited 01-31-2022 05:43 AM

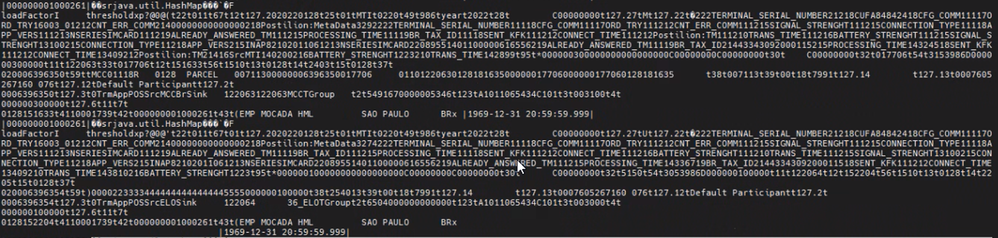

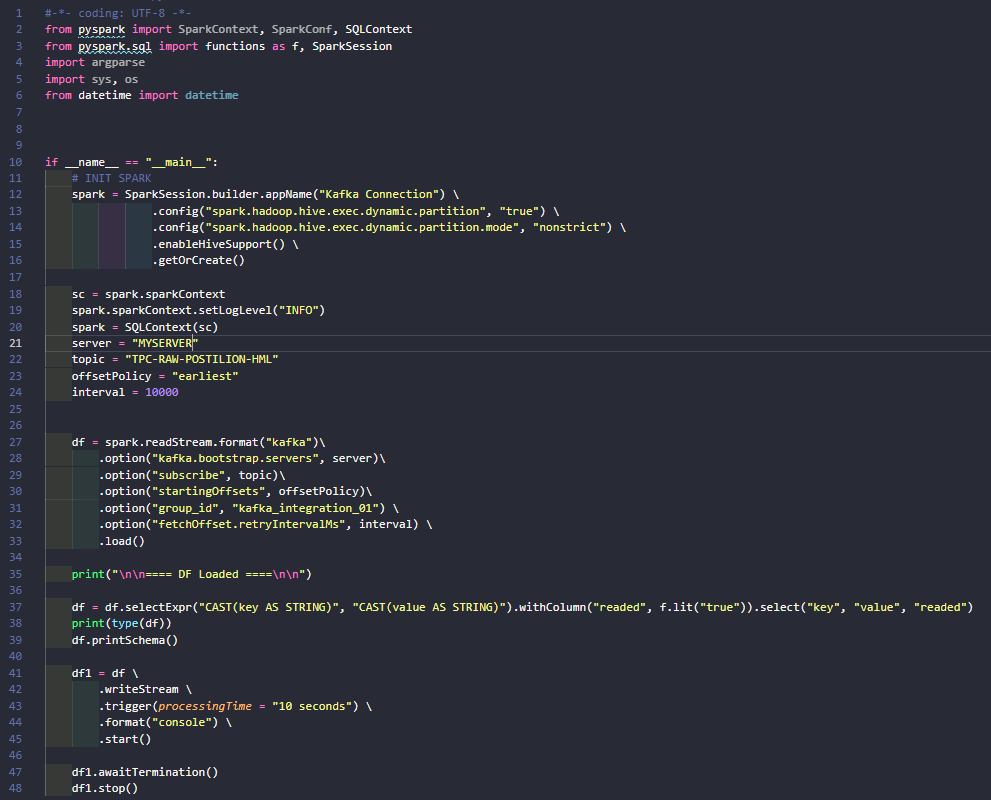

I'm trying to use kafka data using pyspark but I having difficult because it's in Hashmap type

The Question is, how can I convert this to a useful df to be treated in pyspark?

This is the output and my actual code:

Any suggestion and steps?

Created 02-08-2022 02:44 PM

The serialized data looks like Java's binary serialization format. It seems to me that the producer is simply writing the HashMap Java object directly to Kafka, rather than using a proper serializer (Avro, JSON, String, etc.).

You should look into modifying your producer to use one of those serializers, so that you can properly deserialize the data that you're reading from Kafka.
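
One way to confirm this diagnosis from the consumer side is to check the first bytes of a message value. Java's object serialization stream begins with the magic number `0xACED` followed by the stream version `0x0005`. The sketch below is only an illustration: the function name and the sample payloads are hypothetical, not taken from the original poster's code.

```python
# Java's ObjectOutputStream starts every stream with a 2-byte magic number
# (0xACED) followed by a 2-byte stream version (0x0005).
JAVA_SERIALIZATION_HEADER = bytes([0xAC, 0xED, 0x00, 0x05])


def looks_java_serialized(value):
    """Return True if a Kafka message value starts with the Java serialization header.

    Hypothetical helper for illustration; `value` is the raw message bytes (or None).
    """
    return value is not None and value[:4] == JAVA_SERIALIZATION_HEADER


# Illustrative payloads (assumptions, not real data from the thread):
# a prefix shaped like a serialized java.util.HashMap, and a JSON message.
java_like = JAVA_SERIALIZATION_HEADER + b"sr\x00\x11java.util.HashMap"
json_like = b'{"id": 1}'

print(looks_java_serialized(java_like))
print(looks_java_serialized(json_like))
```

If the check returns true for your messages, the payload is a Java object stream, and changing the producer's serializer is likely the more practical fix than trying to parse that format in PySpark.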

Created 02-06-2022 09:11 PM

You need to find out which serializer is being used to write data to Kafka and use the matching deserializer to read those messages.

Created 02-08-2022 04:07 AM

You need to check the producer code to see in which format the Kafka message is produced and which serializer class is used. The same format and serializer must be used when deserializing the data. For example, if the data was written with Avro, you need to deserialize it with Avro.

@araujo You are right. The customer needs to check their producer code and serializer class.
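
The point about matching formats on both sides can be sketched with plain JSON. This is a minimal illustration, assuming the producer can be changed to write UTF-8 JSON; the function names and the sample record are hypothetical.

```python
import json


def serialize_record(record):
    """Producer side: encode a record as UTF-8 JSON bytes."""
    return json.dumps(record).encode("utf-8")


def deserialize_record(payload):
    """Consumer side: decode UTF-8 JSON bytes back into a dict."""
    return json.loads(payload.decode("utf-8"))


# Hypothetical message, used only to show the round trip.
record = {"sensor": "a1", "reading": 21.5}
payload = serialize_record(record)      # what would be written to Kafka
restored = deserialize_record(payload)  # what the consumer recovers

print(restored)
```

With a producer that writes JSON like this, the PySpark side could likely cast the Kafka `value` column to a string and parse it with `pyspark.sql.functions.from_json` and a schema that matches the record; the exact schema depends on what the producer actually sends.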
