Spark on Yarn - How to run multiple tasks in a Spark Resource Pool

Created on 01-06-2020 10:05 PM - last edited on 01-06-2020 10:49 PM by VidyaSargur

Hi,

I am running Spark jobs on YARN, using HDP version 3.1.1.0-78.

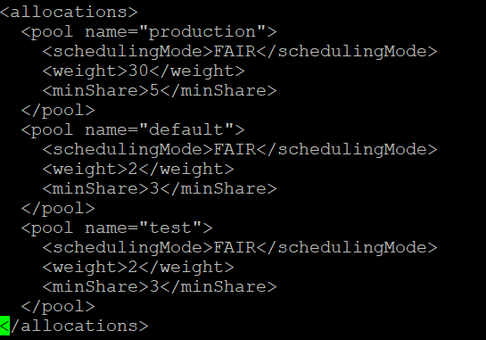

I have set the Spark scheduler mode to FAIR through the parameter "spark.scheduler.mode". The fairscheduler.xml is as follows:

I have also configured my program to use the "production" pool.
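For readers following along, Spark's fair scheduler reads its pool definitions from an allocation file with an `allocations` root and one `pool` element per pool. The sketch below generates such a file with the Python standard library. The helper name `build_allocations` and the `weight` and `minShare` values are assumptions chosen for illustration, not the settings used on this cluster.

```python
import xml.etree.ElementTree as ET


def build_allocations(pools):
    """Return fair scheduler allocation XML for the given pools.

    `pools` maps a pool name to a (schedulingMode, weight, minShare) tuple.
    """
    root = ET.Element("allocations")
    for name, (mode, weight, min_share) in pools.items():
        pool = ET.SubElement(root, "pool", name=name)
        ET.SubElement(pool, "schedulingMode").text = mode
        ET.SubElement(pool, "weight").text = str(weight)
        ET.SubElement(pool, "minShare").text = str(min_share)
    return ET.tostring(root, encoding="unicode")


# Hypothetical values: a FAIR "production" pool with weight 1 and minShare 2.
xml_text = build_allocations({"production": ("FAIR", 1, 2)})
print(xml_text)
```

Spark locates the file through `spark.scheduler.allocation.file`, and a job is assigned to a pool by calling `sc.setLocalProperty("spark.scheduler.pool", "production")` on the thread that submits it. Jobs submitted without that property go to the "default" pool.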

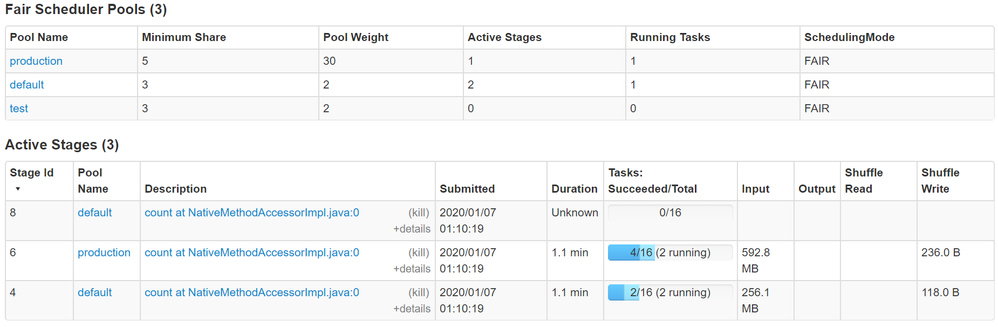

When the job runs, although 4 stages are running, only 1 stage runs under the "production" pool and the other 3 run under the "default" pool.

So, at any point in time, only 2 tasks run in parallel. For 3 or more tasks to run in parallel, 2 tasks should run under "production" and the remaining 2 under "default".

Is there a programmatic way to achieve that, for example by setting configuration parameters?

Any inputs will be really helpful.

Thanks and Regards,

Sudhindra

Created 01-06-2020 10:15 PM

Additional Information:

As we can see, even though 3 stages are active, only 1 task is running in each of the "production" and "default" pools.

My basic question is: how can we increase the parallelism within pools? In other words, how can I make sure that Stage ID "8" in the above screenshot also runs in parallel with the other 2 stages?

Thanks and Regards,

Sudhindra

Created 01-07-2020 01:29 AM

After increasing the number of executors and cores, along with the driver and executor memory, I was able to verify that around 6 tasks run in parallel at a time.
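A rough way to see why this helps: the number of tasks Spark can run at once is capped by the total task slots across executors, and the scheduler pools only decide how those slots are shared. The sketch below computes that cap. The function name `max_parallel_tasks` and the sizing numbers are hypothetical, and it assumes a fixed number of executors (no dynamic allocation); the arguments correspond to `spark.executor.instances`, `spark.executor.cores` and `spark.task.cpus`.

```python
def max_parallel_tasks(num_executors, executor_cores, task_cpus=1):
    """Upper bound on tasks Spark can run at once across all pools."""
    if min(num_executors, executor_cores, task_cpus) < 1:
        raise ValueError("all arguments must be >= 1")
    # Each executor offers executor_cores // task_cpus task slots.
    return num_executors * (executor_cores // task_cpus)


# Hypothetical sizing: 2 executors with 1 core each allow 2 concurrent tasks,
# while 3 executors with 2 cores each allow 6.
print(max_parallel_tasks(2, 1))
print(max_parallel_tasks(3, 2))
```

With `spark-submit`, these values are typically set through `--num-executors` and `--executor-cores`.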

Thanks and Regards,

Sudhindra