Support Questions

Cloudera Community

Sqoop Export Specific Columns Failure

Labels: Apache Hadoop, Apache Sqoop

Created 11-17-2020 03:51 PM

I'm trying to export specific columns from a Hive table to a Teradata table. Can we export only specific columns rather than the entire Hive table?

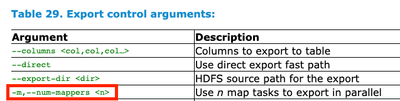

I'm getting "Unsupported parameter --columns" from the export command below:

sqoop export --connect jdbc:teradat://.... --username xxx --password bbb --table table_name --columns "col1, col2, col3" --m 6 --export-dir /table/location

Running Sqoop Version 1.4.7-cdh6.2.1

Created 11-18-2020 09:09 PM

The issue is actually not with the --columns parameter. The problem is that Sqoop can't parse the command because it expects "-m" instead of "--m". Remember: a single-letter short-form parameter takes a single dash; every other parameter takes a double dash.
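A sketch of the export command from the question with the mapper flag written the way this reply describes. The helper `build_export_args` and the array `EXPORT_ARGS` are hypothetical names used only for illustration; keeping the arguments in a Bash array guarantees that the `--columns` value reaches Sqoop as a single argument. The column list is written without spaces after the commas, as in the Sqoop user guide examples.

```shell
#!/usr/bin/env bash
# Hypothetical helper: assemble the sqoop export arguments from the question.
# "-m 6" is the short (single-dash) form; "--num-mappers 6" is the long form.
# The array keeps "col1,col2,col3" together as one argument.
build_export_args() {
  local url="$1" user="$2" pass="$3" table="$4"
  EXPORT_ARGS=(
    export
    --connect "$url"
    --username "$user"
    --password "$pass"
    --table "$table"
    --columns "col1,col2,col3"
    -m 6
    --export-dir /table/location
  )
}
```

Call it with the real values, for example `build_export_args 'jdbc:teradata://....' xxx bbb table_name`, then run `sqoop "${EXPORT_ARGS[@]}"`. Swapping `-m 6` for `--num-mappers 6` should be equivalent.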

Hope this helps!

Created 11-19-2020 07:33 AM

I tried both -m and --num-mappers and still get the same error.

Created 11-19-2020 09:52 AM

Your connection string says "teradat" instead of "teradata", which can also lead to a parsing error.

Otherwise, have you tried exporting the entire table, without giving specific columns?

Created 11-20-2020 08:28 AM

The export command itself is correct; that was just a typo when I typed it here.

I tried exporting the entire table from Hive to Teradata and got this error:

Error: com.teradata.connector.common.exception.connectorException: Index outof boundary

Both the source and destination tables have exactly the same columns: all VARCHAR in Teradata and STRING in Hive/Impala.

Created 11-20-2020 09:42 AM

OK, fair enough. There is also a CDH-specific connector for Teradata, available at https://www.cloudera.com/downloads/connectors/sqoop/teradata/1-7c6.html. Try that.

The installation and usage guide is here: https://docs.cloudera.com/documentation/other/connectors/teradata/1-x/PDF/Cloudera-Connector-for-Ter...

Created 11-20-2020 09:47 AM

You also want to check the structure of your raw data. Specifically, look for any extra delimiters, for example a string field that contains commas as part of its value.
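A minimal sketch of that check, assuming the Hive table is stored as delimited text files under the export directory. The helper name `check_field_counts` is hypothetical, and the default Ctrl-A (`\001`) delimiter is an assumption based on Hive's default for text tables; pass a different delimiter if the table was created with one. It prints every line whose field count differs from the expected column count and returns non-zero if any exist.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report lines whose field count differs from EXPECTED.
# Usage: check_field_counts FILE EXPECTED_FIELDS [DELIMITER]
#   FILE may be "-" to read from standard input.
#   DELIMITER defaults to Ctrl-A (\001), assumed here to be the table's
#   field delimiter (Hive's default for text tables).
check_field_counts() {
  local file="$1" expected="$2" delim="${3:-}"
  if [ -z "$delim" ]; then
    delim=$'\001'
  fi
  # A single-character field separator is used literally by awk.
  awk -F "$delim" -v n="$expected" '
    NF != n { printf "line %d: %d fields\n", NR, NF; bad++ }
    END { exit (bad > 0) }
  ' "$file"
}
```

For example, `hdfs dfs -cat /table/location/* | check_field_counts - 3` would list any row in the export directory that does not split into exactly three fields (3 matches the `col1,col2,col3` example; use the table's real column count). Rows reported here are candidates for the kind of extra delimiter described above.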

Created 11-22-2020 03:53 AM

I've done everything as per the installation guide you provided, but still no joy.

The weird thing is that importing from Teradata to CDH works seamlessly; I just can't get the export working.