Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Sqoop Export from Hive table - specifying Deli...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Sqoop Export from Hive table - specifying Delimiters

- Labels:

-

Apache Hive

-

Apache Sqoop

Created 03-11-2016 03:02 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

| 0favorite | I have a hive table thats Gzip compresed. Table Creation The xml column has xmls data which is pretty printed. Thats why different line delimiter is chosen. Data Load from another table Validating the load This gives one record, which is fine as the source table table_avro also has one record. Sqoop export I want to export this gzip compressed data from this hive table to mysql table. This exports 14000 odd rows to the mysql table. In fact the xml has 14000 odd lines. How do i specify the input data line and field separators so that the data is read correctly and exported correctly? Should the output delimiters also need to be specified? |

Created 03-13-2016 10:52 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

appears that to use -hcatalog option in sqoop, the table needs to be first created with hcat option... eg: hcat -e "create table....

in addition: below needs to provided as well as per docs: https://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.3.2/bk_dataintegration/content/sqoop-hcatalog-i...

hcatalog-database | Specifies the database name for the HCatalog table. If not specified, the default database name ‘default’ is used. Providing the --hcatalog-database option without --hcatalog-table is an error. This is not a required option. |

kindly let me know if this works.... else you can switch to "--export-dir <directory>.*" option.... ( " * " for exporting partitions recursively )

Created 03-14-2016 12:57 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Mayank Shekhar Its not about the target table not found. It exports but exports with no knowledge of the input field and the record delimiters.

Created 03-14-2016 02:42 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

redefining the table with hcat option and then sqooping using hcatalog feature didnt work as well ? How about switching back to '--export-dir <directory>.*' option ?

Created 03-14-2016 04:20 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Mayank Shekhar, hcat option did not make any different which i think is expected as creating an hive table is same as create a table in hcat. Even the --export-dir did not work. Below is the command used,

sqoop export --connect jdbc:mysql://localhost/test --driver com.mysql.jdbc.Driver --username hive --password hive --table mysql_table_export --export-dir /apps/hive/warehouse/table_text/**/* --input-fields-terminated-by '|' --input-lines-terminated-by '#' --fields-terminated-by '|' --lines-terminated-by '#'

I get an exception in this case,

Error: java.io.IOException: Can't export data, please check failed map task logs at org.apache.sqoop.mapreduce.TextExportMapper.map(TextExportMapper.java:112) at org.apache.sqoop.mapreduce.TextExportMapper.map(TextExportMapper.java:39) at org.apache.hadoop.mapreduce.Mapper.run(Mapper.java:146) at org.apache.sqoop.mapreduce.AutoProgressMapper.run(AutoProgressMapper.java:64) at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:787) at org.apache.hadoop.mapred.MapTask.run(MapTask.java:341) at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164) at java.security.AccessController.doPrivileged(Native Method) at javax.security.auth.Subject.doAs(Subject.java:415) at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1657) at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

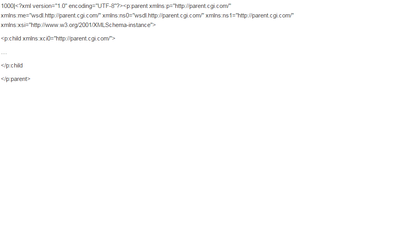

Caused by: java.lang.RuntimeException: Can't parse input data: ' </child></parent>' at noblob_table_avro_export_1.__loadFromFields(noblob_table_avro_export_1.java:249) at noblob_table_avro_export_1.parse(noblob_table_avro_export_1.java:192) at org.apache.sqoop.mapreduce.TextExportMapper.map(TextExportMapper.java:83) ... 10 more

Caused by: java.util.NoSuchElementException at java.util.ArrayList$Itr.next(ArrayList.java:834) at noblob_table_avro_export_1.__loadFromFields(noblob_table_avro_export_1.java:244) ... 12 more

The hive table has only one record as follows

<code>1000|<parent><child>sample </child></parent>

It definitely looks like that sqoop is not able to read with record delimiter as '#' although the sqoop generated java class has input delimiters as (char) 124 and (char) 35.

In the generated class,

private static final DelimiterSet __inputDelimiters = new DelimiterSet((char) 124, (char) 35, (char) 0, (char) 0, false);

Created on 03-14-2016 04:44 PM - edited 08-19-2019 03:25 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I just provided an XML sample which looks like similar to the one that i use as i cannot share. The difference is that the XML that i use has the namespaces defined for the xml tags.