Support Questions

- Cloudera Community

- Support

- Support Questions

- Sqoop import from Postgresql doesn't work

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Sqoop import from Postgresql doesn't work

- Labels:

-

Apache Accumulo

-

Apache Sqoop

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi colleagues!

I need help with Apache Sqoope omport task from PSQL!

I have MaсOS with Catalina 10.15.2

Installed Sqoop and Hadoop locally.

Trying to import table and getting error:

"ERROR tool.ImportTool: Error during import: Import job failed"

the command to Sqoop is:

% sqoop import --connect jdbc:postgresql://127.0.0.1:54322/postgres --username postgres -P -table dim_parameters -- --schema dwh

What I'm missing?

Full error log is:

Warning: /usr/local/Cellar/sqoop/1.4.6_1/libexec/../accumulo does not exist! Accumulo imports will fail.

Please set $ACCUMULO_HOME to the root of your Accumulo installation.

Warning: /usr/local/Cellar/sqoop/1.4.6_1/libexec/../zookeeper does not exist! Accumulo imports will fail.

Please set $ZOOKEEPER_HOME to the root of your Zookeeper installation.

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/Cellar/hadoop/3.2.1/libexec/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/Cellar/hbase/1.3.5/libexec/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2020-01-19 12:29:04,468 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6

Enter password:

2020-01-19 12:29:08,788 INFO manager.SqlManager: Using default fetchSize of 1000

2020-01-19 12:29:08,788 INFO manager.PostgresqlManager: We will use schema dwh

2020-01-19 12:29:08,788 INFO tool.CodeGenTool: Beginning code generation

2020-01-19 12:29:09,001 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM "dwh"."dim_vendor" AS t LIMIT 1

2020-01-19 12:29:09,060 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /usr/local/Cellar/hadoop/3.2.1/libexec

Note: /tmp/sqoop-kirilldemidov/compile/9114309c90c8eca54315622d42c5e09a/dim_vendor.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

2020-01-19 12:29:10,437 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-kirilldemidov/compile/9114309c90c8eca54315622d42c5e09a/dim_vendor.jar

2020-01-19 12:29:10,449 WARN manager.PostgresqlManager: It looks like you are importing from postgresql.

2020-01-19 12:29:10,449 WARN manager.PostgresqlManager: This transfer can be faster! Use the --direct

2020-01-19 12:29:10,449 WARN manager.PostgresqlManager: option to exercise a postgresql-specific fast path.

2020-01-19 12:29:10,477 INFO mapreduce.ImportJobBase: Beginning import of dim_vendor

2020-01-19 12:29:10,478 INFO Configuration.deprecation: mapred.job.tracker is deprecated. Instead, use mapreduce.jobtracker.address

2020-01-19 12:29:10,576 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-01-19 12:29:10,601 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

2020-01-19 12:29:10,751 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

2020-01-19 12:29:10,852 INFO impl.MetricsConfig: Loaded properties from hadoop-metrics2.properties

2020-01-19 12:29:11,024 INFO impl.MetricsSystemImpl: Scheduled Metric snapshot period at 10 second(s).

2020-01-19 12:29:11,024 INFO impl.MetricsSystemImpl: JobTracker metrics system started

2020-01-19 12:29:11,182 INFO db.DBInputFormat: Using read commited transaction isolation

2020-01-19 12:29:11,227 INFO mapreduce.JobSubmitter: number of splits:1

2020-01-19 12:29:11,347 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_local252881625_0001

2020-01-19 12:29:11,347 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-01-19 12:29:11,523 INFO mapred.LocalDistributedCacheManager: Creating symlink: /tmp/hadoop-kirilldemidov/mapred/local/job_local252881625_0001_9c2bb09f-a6ec-40ff-aa51-60f96d47c600/libjars <- /usr/local/Cellar/sqoop/1.4.6_1/libexec/conf/libjars/*

2020-01-19 12:29:11,528 WARN fs.FileUtil: Command 'ln -s /tmp/hadoop-kirilldemidov/mapred/local/job_local252881625_0001_9c2bb09f-a6ec-40ff-aa51-60f96d47c600/libjars /usr/local/Cellar/sqoop/1.4.6_1/libexec/conf/libjars/*' failed 1 with: ln: /usr/local/Cellar/sqoop/1.4.6_1/libexec/conf/libjars/*: No such file or directory

2020-01-19 12:29:11,528 WARN mapred.LocalDistributedCacheManager: Failed to create symlink: /tmp/hadoop-kirilldemidov/mapred/local/job_local252881625_0001_9c2bb09f-a6ec-40ff-aa51-60f96d47c600/libjars <- /usr/local/Cellar/sqoop/1.4.6_1/libexec/conf/libjars/*

2020-01-19 12:29:11,528 INFO mapred.LocalDistributedCacheManager: Localized file:/tmp/hadoop/mapred/staging/kirilldemidov252881625/.staging/job_local252881625_0001/libjars as file:/tmp/hadoop-kirilldemidov/mapred/local/job_local252881625_0001_9c2bb09f-a6ec-40ff-aa51-60f96d47c600/libjars

2020-01-19 12:29:11,581 INFO mapreduce.Job: The url to track the job: http://localhost:8080/

2020-01-19 12:29:11,582 INFO mapreduce.Job: Running job: job_local252881625_0001

2020-01-19 12:29:11,583 INFO mapred.LocalJobRunner: OutputCommitter set in config null

2020-01-19 12:29:11,595 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-01-19 12:29:11,595 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-01-19 12:29:11,596 INFO mapred.LocalJobRunner: OutputCommitter is org.apache.hadoop.mapreduce.lib.output.FileOutputCommitter

2020-01-19 12:29:11,645 INFO mapred.LocalJobRunner: Waiting for map tasks

2020-01-19 12:29:11,645 INFO mapred.LocalJobRunner: Starting task: attempt_local252881625_0001_m_000000_0

2020-01-19 12:29:11,670 INFO output.FileOutputCommitter: File Output Committer Algorithm version is 2

2020-01-19 12:29:11,670 INFO output.FileOutputCommitter: FileOutputCommitter skip cleanup _temporary folders under output directory:false, ignore cleanup failures: false

2020-01-19 12:29:11,679 INFO util.ProcfsBasedProcessTree: ProcfsBasedProcessTree currently is supported only on Linux.

2020-01-19 12:29:11,680 INFO mapred.Task: Using ResourceCalculatorProcessTree : null

2020-01-19 12:29:11,701 INFO db.DBInputFormat: Using read commited transaction isolation

2020-01-19 12:29:11,709 INFO mapred.MapTask: Processing split: 1=1 AND 1=1

2020-01-19 12:29:11,719 INFO mapred.LocalJobRunner: map task executor complete.

2020-01-19 12:29:11,720 WARN mapred.LocalJobRunner: job_local252881625_0001

java.lang.Exception: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class dim_vendor not found

at org.apache.hadoop.mapred.LocalJobRunner$Job.runTasks(LocalJobRunner.java:492)

at org.apache.hadoop.mapred.LocalJobRunner$Job.run(LocalJobRunner.java:552)

Caused by: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class dim_vendor not found

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2638)

at org.apache.sqoop.mapreduce.db.DBConfiguration.getInputClass(DBConfiguration.java:403)

at org.apache.sqoop.mapreduce.db.DataDrivenDBInputFormat.createDBRecordReader(DataDrivenDBInputFormat.java:237)

at org.apache.sqoop.mapreduce.db.DBInputFormat.createRecordReader(DBInputFormat.java:263)

at org.apache.hadoop.mapred.MapTask$NewTrackingRecordReader.<init>(MapTask.java:527)

at org.apache.hadoop.mapred.MapTask.runNewMapper(MapTask.java:770)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:347)

at org.apache.hadoop.mapred.LocalJobRunner$Job$MapTaskRunnable.run(LocalJobRunner.java:271)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Caused by: java.lang.ClassNotFoundException: Class dim_vendor not found

at org.apache.hadoop.conf.Configuration.getClassByName(Configuration.java:2542)

at org.apache.hadoop.conf.Configuration.getClass(Configuration.java:2636)

... 12 more

2020-01-19 12:29:12,590 INFO mapreduce.Job: Job job_local252881625_0001 running in uber mode : false

2020-01-19 12:29:12,591 INFO mapreduce.Job: map 0% reduce 0%

2020-01-19 12:29:12,593 INFO mapreduce.Job: Job job_local252881625_0001 failed with state FAILED due to: NA

2020-01-19 12:29:12,601 INFO mapreduce.Job: Counters: 0

2020-01-19 12:29:12,606 WARN mapreduce.Counters: Group FileSystemCounters is deprecated. Use org.apache.hadoop.mapreduce.FileSystemCounter instead

2020-01-19 12:29:12,607 INFO mapreduce.ImportJobBase: Transferred 0 bytes in 1.8433 seconds (0 bytes/sec)

2020-01-19 12:29:12,608 WARN mapreduce.Counters: Group org.apache.hadoop.mapred.Task$Counter is deprecated. Use org.apache.hadoop.mapreduce.TaskCounter instead

2020-01-19 12:29:12,608 INFO mapreduce.ImportJobBase: Retrieved 0 records.

2020-01-19 12:29:12,608 ERROR tool.ImportTool: Error during import: Import job failed!

Created on 01-19-2020 09:42 AM - edited 01-19-2020 09:44 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I can see 2 syntax errors one on -table dim_parameters should be double dash [ -- ]and between dim_parameters -- --schema dwh the [-- -- ]

sqoop import --connect jdbc:postgresql://127.0.0.1:54322/postgres --username postgres -P -table dim_parameters -- --schema dwh

Could you try the below syntax I intentionally added the --password

sqoop import \

--connect jdbc:postgresql://127.0.0.1:54322/postgres \

--username postgres \

--password xxxx \

--table dim_parameters \

--schema dwh

Please let me know if that helped.

Created 01-19-2020 11:31 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thanks for youк reply - it didn't help - the problem is not in connection to database but in process writing to HDFS

Created 01-20-2020 01:29 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

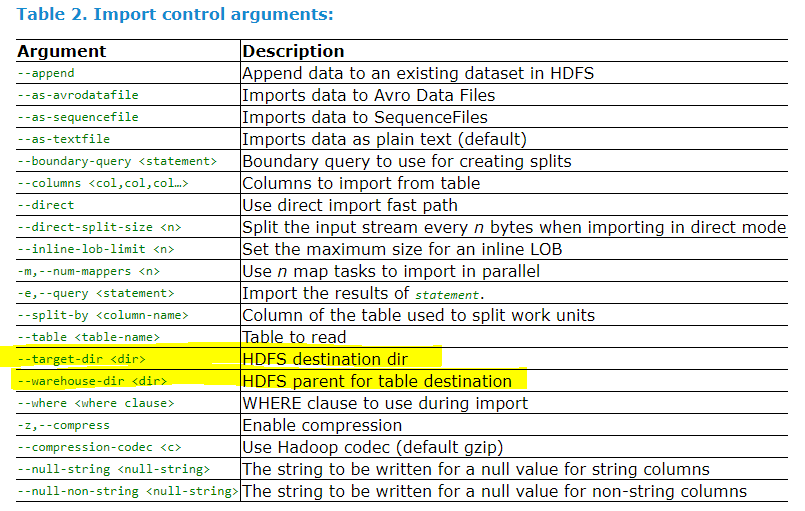

I thought you had the output directory and it was the connect command which was giving you issues! If you had time to read through the sqoop documentation you will see that you need to give sqoop the destination directory. See the high lighted options

This is how your command should have been , the below assumes you have the privileges to write to HDFS directory /user/kirkade

sqoop import \

--connect jdbc:postgresql://127.0.0.1:54322/postgres \

--username postgres \

--password xxxx \

--table dim_parameters \

--schema dwh \

--target-dir /user/kirkadeHope that helps

Created 01-23-2020 02:32 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I'm afraid it doesn't help as well 😞

I suppose what problem is in configuration in hadoop environment - I will try ones again on new linux server with clean installation

Thanks a lot!

Created 01-23-2020 03:34 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Unfortunately I have MySQL databases but if you could conserve that instalment I could try to reproduce your problem this weekend. I have been overloaded these past days ....To my experience reinstallation might not resolve the problem its usually a good learning curve to face a problem head one just imagine ypu were at a client site you wouldn't ask them to reinstall the environment. Just share the create and postgres load scripts and version so I can reproduce your problem.