Cloudera Community: Support Questions

Starting Namenode: Can't find HmacSHA1 algorithm

Labels: Apache Hadoop

Created on 02-26-2018 09:15 PM - edited 08-17-2019 05:36 PM

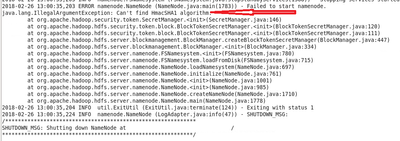

My namenode service suddenly stopped. I don't see any error messages in the log either.

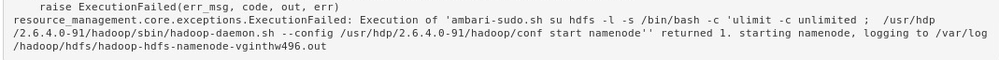

When I tried to start it from Ambari, it reported the following:

`ulimit -c unlimited ; /usr/hdp/2.6.4.0-91/hadoop/sbin/hadoop-daemon.sh --config /usr/hdp/2.6.4.0-91/hadoop/conf start namenode` returned 1

When I tried to start the namenode from the command line, I got the following error:

`Can't find HmacSHA1 algorithm`

Created 02-26-2018 11:07 PM

The error comes from the following piece of code: https://github.com/apache/hadoop/blob/release-2.7.3-RC1/hadoop-common-project/hadoop-common/src/main...

That code uses Java's "KeyGenerator" to generate "HmacSHA1" keys for tokens: https://docs.oracle.com/javase/7/docs/api/javax/crypto/KeyGenerator.html

So it looks like your JDK does not have the JCE packages installed. Can you please check whether your JDK was recently upgraded, or whether JAVA_HOME was changed to point to a JDK that does not have JCE installed?
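To check whether a particular JDK is affected, a small standalone program can attempt the same kind of call. This is a minimal sketch, not part of Hadoop or Ambari; the class name `HmacCheck` is hypothetical. Compile and run it with the binaries from the JDK that JAVA_HOME points to for the NameNode. The Java SE `KeyGenerator` documentation lists HmacSHA1 among the algorithms every Java platform implementation is required to support, so a "NOT available" result suggests a broken or incomplete JDK rather than a Hadoop configuration problem.

```java
import java.security.NoSuchAlgorithmException;
import java.security.Provider;
import java.security.Security;
import javax.crypto.KeyGenerator;

/**
 * Hypothetical diagnostic: can this JVM create an HmacSHA1 KeyGenerator,
 * the same kind of call the Hadoop code linked above relies on?
 */
public class HmacCheck {

    /** Returns true if a KeyGenerator for the given algorithm can be obtained. */
    static boolean isAvailable(String algorithm) {
        try {
            KeyGenerator.getInstance(algorithm);
            return true;
        } catch (NoSuchAlgorithmException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // java.home shows which JDK/JRE is actually running this check.
        System.out.println("java.home = " + System.getProperty("java.home"));

        if (isAvailable("HmacSHA1")) {
            System.out.println("HmacSHA1 KeyGenerator: available");
        } else {
            // List the registered security providers; on a typical
            // Oracle JDK or OpenJDK install this includes "SunJCE".
            System.out.println("HmacSHA1 KeyGenerator: NOT available. Providers:");
            for (Provider p : Security.getProviders()) {
                System.out.println("  " + p.getName());
            }
            System.exit(1);
        }
    }
}
```

For example: `"$JAVA_HOME/bin/javac" HmacCheck.java && "$JAVA_HOME/bin/java" HmacCheck`.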

You can find detailed information about JCE here: https://docs.hortonworks.com/HDPDocuments/Ambari-2.6.1.3/bk_ambari-security/content/distribute_and_i...

Created 02-26-2018 11:36 PM

@Jay Kumar SenSharma Thanks for the prompt response. You are right: somehow my JDK got corrupted. I've set JAVA_HOME again and the services are now starting.

Also, my JDK seems to get corrupted repeatedly. Do you have any suggestions on JDK permissions for the ambari user and the other services in the hadoop group?

Created 02-26-2018 11:39 PM

Good to know that your current issue is resolved. It would be great if you could mark this HCC thread as answered by clicking the "Accept" link on the correct answer; that way other HCC users can quickly find the solution when they encounter the same error.

Regarding your JDK issue, can you please clarify what you mean by "Somehow my jdk got corrupted"? How did you find that the JDK got corrupted? Do you see any JDK crash report (such as "hs_err_pid..."), or any other JDK-level errors?
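One way to look for such crash reports is a small directory scan. The sketch below is hypothetical (the class `FindCrashLogs` is not from Hadoop or the JDK) and assumes the default HotSpot crash-log naming `hs_err_pid<pid>.log`. By default HotSpot writes that file in the process's working directory, falling back to the temp directory, unless `-XX:ErrorFile` overrides it; which directories to pass (for example the daemon's working or log directory) is an assumption to adjust for your setup.

```java
import java.io.IOException;
import java.nio.file.DirectoryStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper: lists HotSpot crash logs in the given directories. */
public class FindCrashLogs {

    static List<Path> findCrashLogs(List<Path> dirs) throws IOException {
        List<Path> found = new ArrayList<>();
        for (Path dir : dirs) {
            if (!Files.isDirectory(dir)) {
                continue; // skip paths that don't exist or aren't directories
            }
            // Glob assumes the default crash-log name: hs_err_pid<pid>.log
            try (DirectoryStream<Path> stream =
                     Files.newDirectoryStream(dir, "hs_err_pid*.log")) {
                for (Path p : stream) {
                    found.add(p);
                }
            }
        }
        return found;
    }

    public static void main(String[] args) throws IOException {
        List<Path> dirs = new ArrayList<>();
        for (String a : args) {
            dirs.add(Paths.get(a));
        }
        // With no arguments, fall back to the JVM temp directory (e.g. /tmp).
        if (dirs.isEmpty()) {
            dirs.add(Paths.get(System.getProperty("java.io.tmpdir")));
        }
        List<Path> logs = findCrashLogs(dirs);
        if (logs.isEmpty()) {
            System.out.println("No hs_err_pid*.log files found.");
        } else {
            for (Path p : logs) {
                System.out.println(p);
            }
        }
    }
}
```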

Created 02-27-2018 09:12 PM

@Jay Kumar SenSharma The reason I think my JDK was corrupt is that replacing it with a fresh copy from my home directory (the same version, and the same copy I originally installed from) fixed my issue. Since it is the same copy I use each time, some pieces of the existing JDK must have been lost.
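A suspected loss of JDK files can be confirmed by comparing the installed tree against the known-good copy. The sketch below is one hypothetical way to do that (the class `JdkTreeDiff` and its output format are illustrative, not from the thread): it walks the reference JDK and reports files that are missing from, or differ in size in, the installed JDK. On JDK 8, for instance, the SunJCE provider that supplies HmacSHA1 ships as `jre/lib/ext/sunjce_provider.jar`, so that is one file worth looking for. Run it with the fresh copy's `java`, since the installed JDK may be the broken one.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/**
 * Hypothetical checker: reports regular files present under a reference
 * JDK tree that are missing (or differ in size) under the installed tree.
 */
public class JdkTreeDiff {

    static List<String> missingOrChanged(Path reference, Path installed) throws IOException {
        List<Path> refFiles;
        try (Stream<Path> walk = Files.walk(reference)) {
            refFiles = walk.filter(Files::isRegularFile).collect(Collectors.toList());
        }
        List<String> problems = new ArrayList<>();
        for (Path ref : refFiles) {
            Path rel = reference.relativize(ref);
            Path target = installed.resolve(rel);
            if (!Files.exists(target)) {
                problems.add("missing: " + rel);
            } else if (Files.size(target) != Files.size(ref)) {
                // Size check only: a cheap heuristic, not a content checksum.
                problems.add("size differs: " + rel);
            }
        }
        Collections.sort(problems);
        return problems;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 2) {
            System.err.println("usage: JdkTreeDiff <fresh-jdk-dir> <installed-jdk-dir>");
            System.exit(2);
        }
        List<String> problems = missingOrChanged(Paths.get(args[0]), Paths.get(args[1]));
        problems.forEach(System.out::println);
        System.out.println(problems.size() + " difference(s) found");
    }
}
```

Comparing sizes keeps the scan fast; hashing each file (for example with `java.security.MessageDigest`) would also catch same-size corruption, at the cost of reading every file.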

I didn't find any JDK crash report (such as "hs_err_pid...") or any JDK-level errors.

However, the initial issue isn't completely resolved yet. Once I started my namenode after replacing the JDK as described above and pointing the environment to the right location, the datanode service on all the data nodes went down. Starting the service doesn't even show an error this time: it starts fine but goes down immediately without any trace of an error.

Created 02-28-2018 12:31 AM

I think I found the answer. The logs showed that the dfs.datanode.data.dir directories were inconsistent. I added a healthy datanode and balanced the cluster; then, after taking a backup in /tmp, I deleted the data directories on the other, inconsistent nodes. Restarting after that works fine now.