Storm - missing messages in pipeline

Labels: Apache Storm

Created on 03-17-2017 11:43 AM - edited 08-18-2019 04:41 AM

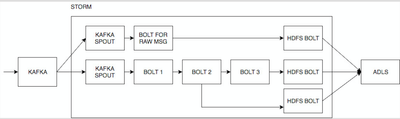

Hi all. We are noticing that some messages get lost during Storm processing. Below is a brief outline of our pipeline.

We have messages coming into Kafka, which are then consumed by two different Kafka spouts in Storm. One spout writes the message to the raw stream, and the other spout starts processing the message. We need to store the output of Bolt2 to HDFS and also send it downstream for further processing, which will eventually end up in ADLS as well.

All three HDFS bolts are configured to write to different folder structures in ADLS. In an ideal scenario I should see all three messages in ADLS (raw, output of Bolt2, and output of Bolt3). But we are noticing that raw always gets written, while sometimes only one of the outputs (Bolt2 or Bolt3) gets written to ADLS. It is inconsistent which one is missing. Sometimes both get written. There aren't any errors or exceptions in the logs.

Has anyone run into such issues? Any insight will be appreciated. Are there any good monitoring tools other than Storm UI that give insight into what is going on? We are using Storm 1.0.1 on HDInsight, hosted on Azure.

Thanks.

Created 03-17-2017 06:51 PM

@Laxmi Chary thanks for your question. Do you know if there's ever a case where the message from Bolt 2 doesn't get written but the one from Bolt 3 does? Are you anchoring tuples in your topology, e.g. collector.emit(tuple, new Values(...)), where the input tuple is the anchor?

Are you doing any microbatching in your topology?

Created 03-21-2017 03:21 PM

@Ambud Sharma Yes. There is a case where the message from Bolt 2 doesn't get written but the one from Bolt 3 should get written. But if Bolt 2 output is written, Bolt 3 output should always be there. The reverse is not true. Is that a problem?

We are not anchoring tuples. We are extending BaseBasicBolt, and from what I understand we need to anchor tuples only if we extend BaseRichBolt. Is that incorrect?

No, we are not doing any microbatching.

Created 03-22-2017 03:40 PM

@Ambud Sharma wondering if you have more insight. Let me know if you need more details. TIA

Created 03-22-2017 06:33 PM

You should be anchoring. Without anchoring, Storm doesn't guarantee at-least-once semantics, which means delivery is best effort.

Anchoring is a factor of your delivery semantics. You should be using BaseRichBolt; otherwise you don't have a collector.
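To make the effect of anchoring concrete, here is a minimal toy model in plain Java. It is only a sketch and does not use Storm's API: `ToyTuple`, `ToyAcker`, and `AnchoringDemo` are hypothetical names invented for this illustration, and a simple set of outstanding tuple ids stands in for whatever bookkeeping Storm does internally. It shows a bolt emitting one child tuple and acking its input, after which a downstream writer fails on the child.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

/** A tuple in the toy model; rootId is null when the tuple is unanchored. */
final class ToyTuple {
    final long id;
    final Long rootId;
    ToyTuple(long id, Long rootId) { this.id = id; this.rootId = rootId; }
}

/** Hypothetical tracker: one set of outstanding tuple ids per spout (root) tuple. */
final class ToyAcker {
    private final Map<Long, Set<Long>> pending = new HashMap<>();
    private final Set<Long> completed = new HashSet<>();
    private final Set<Long> replay = new HashSet<>();
    private long nextId = 1;

    /** The spout emits a root tuple and starts tracking its tree. */
    ToyTuple emitFromSpout() {
        long id = nextId++;
        Set<Long> tree = new HashSet<>();
        tree.add(id);
        pending.put(id, tree);
        return new ToyTuple(id, id);
    }

    /** Anchored emit: the new tuple joins the tree of its anchor. */
    ToyTuple emitAnchored(ToyTuple anchor) {
        long id = nextId++;
        Set<Long> tree = anchor.rootId == null ? null : pending.get(anchor.rootId);
        if (tree == null) return new ToyTuple(id, null); // anchor is not tracked
        tree.add(id);
        return new ToyTuple(id, anchor.rootId);
    }

    /** Unanchored emit: the new tuple belongs to no tree. */
    ToyTuple emitUnanchored() { return new ToyTuple(nextId++, null); }

    /** Ack removes the tuple from its tree; an empty tree means the root is done. */
    void ack(ToyTuple t) {
        if (t.rootId == null) return;
        Set<Long> tree = pending.get(t.rootId);
        if (tree == null) return;
        tree.remove(t.id);
        if (tree.isEmpty()) { pending.remove(t.rootId); completed.add(t.rootId); }
    }

    /** Fail marks the root for replay, but only if the tuple is still tracked. */
    void fail(ToyTuple t) {
        if (t.rootId == null) return;
        if (pending.remove(t.rootId) != null) replay.add(t.rootId);
    }

    boolean isCompleted(long rootId) { return completed.contains(rootId); }
    boolean needsReplay(long rootId) { return replay.contains(rootId); }
}

final class AnchoringDemo {
    /** Returns the fate of the root tuple after a downstream failure. */
    static String run(boolean anchored) {
        ToyAcker acker = new ToyAcker();
        ToyTuple root = acker.emitFromSpout();
        ToyTuple child = anchored ? acker.emitAnchored(root) : acker.emitUnanchored();
        acker.ack(root);   // the bolt acks its input after emitting
        acker.fail(child); // a downstream writer fails on the child
        if (acker.needsReplay(root.id)) return "replay";
        return acker.isCompleted(root.id) ? "completed" : "pending";
    }

    public static void main(String[] args) {
        System.out.println("anchored:   " + run(true));
        System.out.println("unanchored: " + run(false));
    }
}
```

In this model the anchored run ends with the root scheduled for replay, while the unanchored run reports the root as completed even though the child was lost. That matches the symptom of messages going missing with no errors in the logs.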

Created 03-22-2017 06:57 PM

@Ambud Sharma Doesn't BaseBasicBolt do that for you?

Created 03-22-2017 07:00 PM

This is what is mentioned in the book Storm Applied:

"The beauty of using BaseBasicBolt as our base class is that it automatically provides anchoring and acking for us." We are using BaseBasicBolt. Are you saying that this is incorrect?

Created 03-22-2017 07:06 PM

Yes, that is incorrect. This bolt class doesn't even have a collector to acknowledge messages: https://github.com/apache/storm/blob/master/storm-core/src/jvm/org/apache/storm/topology/base/BaseBa...

Created 03-22-2017 07:11 PM

OK. Do you know if there is any documentation on this?

Created 03-22-2017 07:12 PM

Here's some example code to show you how explicit anchoring and acking can be done: https://github.com/Symantec/hendrix/blob/current/hendrix-storm/src/main/java/io/symcpe/hendrix/storm...