Support Questions

- Cloudera Community

- Support

- Support Questions

- The best approach to the thousands of small partit...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

The best approach to the thousands of small partitions

- Labels:

-

Apache Hive

Created 10-17-2016 08:14 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

There is a lot of information how is necessary to avoid small files and a large number of partitions in Hive. But what if I can’t avoid them?

I have to store a Hive-table with 10 years of history data. It contains 3710 daily partitions at present day. Every partition is really small, from 80 to 15000 records. In csv format partitions vary from 25Kb to 10Mb. In ORC format partitions vary from 10Kb to 2Mb. Though I don’t think that ORC format would be effective for that small size. Queries to this table usually include date or period of dates, so daily partition is preferred.

What would be optimal approach (in terms of performance) for such a large amount of small data?

Created 10-17-2016 06:48 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Disclaimer: Without having any knowledge of the data

STEPS:

1. For such small sets of data I would partition by YEAR.

2. I would insert the data ordering by timestamp. (Use PIG if Hive is taking more time)

3. Table structure:

....

PARTITIONED BY (

year STRING COMMENT '')

STORED AS ORC tblproperties("orc.compress"="ZLIB",

"orc.bloom.filter.columns"="time_stamp",

"orc.create.index"="true",

"orc.stripe.size"="268435456"

,"orc.row.index.stride"="12000",

"orc.compress.size"="262144"

);

4. Collect statistics on table.

5. Set few config parameters in hive

set hive.optimize.index.filter=true;

set hive.exec.orc.skip.corrupt.data=true;

set hive.vectorized.execution.enabled=true;

set hive.exec.compress.output=true;

set hive.execution.engine=tez;

set tez.am.container.reuse.enabled=TRUE;

set hive.compute.query.using.stats=true;

set stats.reliable=true; set hive.cbo.enable=true;

set hive.optimize.sort.dynamic.partition=true;

set hive.optimize.ppd=true;

set hive.optimize.ppd.storage=true;

set hive.merge.tezfiles=true;

set hive.hadoop.supports.splittable.combineinputformat=true;

set mapreduce.map.speculative=true;

6. Query with YEAR extracted from timestamp (try regex_replace function in hive) and TIMESTAMP

DO not miss any of the steps above and post us about the awesome results you get:)

Thanks

Created 10-17-2016 10:21 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Alena Melnikova,

Following link would help.

http://www.slideshare.net/BenjaminLeonhardi/hive-loading-data

Hope this helps

Created 10-17-2016 11:36 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@jramakrishnan Thanks.

I have already read these links. There is no clear answer. What would be good file format for small partitions? csv, orc, smth else? HBase as an alternative metastore is fine, but my Hive 1.2.1 still uses MySQL. It was an idea about generate hash using the date. I would be glad if someone explains this idea in details.

Created 10-17-2016 11:54 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The whole goal of having partitions is to allow Hive to limit the files it will have to look at in order to fulfill the SQL request you send into it. On the other hand, you also clearly understand that having too many small files to look at is a performance/scalability drag. With so few number of records for each day, I'd suggest partitioning at the month level (as a single string such as @Joseph Niemiec and @bpreachuk suggest in their answers to https://community.hortonworks.com/questions/29031/best-pratices-for-hive-partitioning-especially-by.....

This will allow you to keep your "original" dates as a column and let the partition months be a new virtual column. Of course, you'll need to train/explain to your query writers the benefit of using this virtual column of the partition name in the queries, but will then get the value of partitioning all while having 1/30th of the files and each of them being 30x bigger.

Good luck!

Created 10-17-2016 01:45 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Lester Martin Thank you,

I keep in reserve option with monthly partition (YYYY-MM). This complicates queries. But if it's the only way I'll have to use it.

Created 10-17-2016 06:48 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Disclaimer: Without having any knowledge of the data

STEPS:

1. For such small sets of data I would partition by YEAR.

2. I would insert the data ordering by timestamp. (Use PIG if Hive is taking more time)

3. Table structure:

....

PARTITIONED BY (

year STRING COMMENT '')

STORED AS ORC tblproperties("orc.compress"="ZLIB",

"orc.bloom.filter.columns"="time_stamp",

"orc.create.index"="true",

"orc.stripe.size"="268435456"

,"orc.row.index.stride"="12000",

"orc.compress.size"="262144"

);

4. Collect statistics on table.

5. Set few config parameters in hive

set hive.optimize.index.filter=true;

set hive.exec.orc.skip.corrupt.data=true;

set hive.vectorized.execution.enabled=true;

set hive.exec.compress.output=true;

set hive.execution.engine=tez;

set tez.am.container.reuse.enabled=TRUE;

set hive.compute.query.using.stats=true;

set stats.reliable=true; set hive.cbo.enable=true;

set hive.optimize.sort.dynamic.partition=true;

set hive.optimize.ppd=true;

set hive.optimize.ppd.storage=true;

set hive.merge.tezfiles=true;

set hive.hadoop.supports.splittable.combineinputformat=true;

set mapreduce.map.speculative=true;

6. Query with YEAR extracted from timestamp (try regex_replace function in hive) and TIMESTAMP

DO not miss any of the steps above and post us about the awesome results you get:)

Thanks

Created 10-17-2016 06:54 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Alena Melnikova taking a second glance at my answer I feel that you would not need any partition at all, provided you can compact yearly data into 1 file. So 10 files in total. A bit more steps but would work as gracefully as the yearly partition.

So try out solution 1 and when it works, try out solution 2 and then pick one:)

Thanks

Created 10-18-2016 10:58 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@rbiswas Thank you! It's interesting idea. I'll test parititions by year (YYYY), by year and month as suggested @Lester Martin (YYYY-MM) and daily (YYYY-MM-DD). I'll share results here.

By the way, what would be difference between two of your approaches? Partitions by year and compact one year in one file gives the same 10 files.

Created 10-18-2016 02:04 PM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

@Alena Melnikova you got it, there is no difference apart from a very subtle one. On approach one we still kept a bit of dependency on partition pruning and on approach 2 it is entirely dependent on ordering of data via ORC index.

Created on 10-21-2016 11:11 AM - edited 08-19-2019 02:03 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

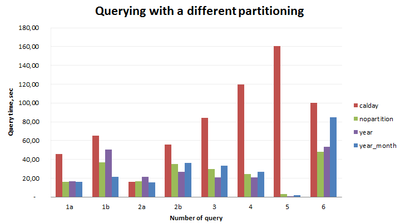

I tested 4 variants of partitioning on 6 queries:

Daily partitions (calday=2016-10-20) Year-month partitions (year_month=2016-10) Year partitions (year=2016) No partitions (but 10 files with yearly data)

It was created 4 tables following @rbiswas recommendations.

Here is yearly aggregate information about data. Just to give you idea about scale of data.

| partition | size | records |

| year=2006 | 539.4 K | 12 217 |

| year=2007 | 2.8 M | 75 584 |

| year=2008 | 6.4 M | 155 850 |

| year=2009 | 9.1 M | 228 247 |

| year=2010 | 9.3 M | 225 357 |

| year=2011 | 8.5 M | 196 280 |

| year=2012 | 19.5 M | 448 145 |

| year=2013 | 113.4 M | 2 494 787 |

| year=2014 | 196.7 M | 4 038 632 |

| year=2015 | 204.3 M | 4 047 002 |

| year=2016 | 227.2 M | 4 363 214 |

I run every query 5 times, cast the worst/best results and took the average of the remaining three.

The results are below:

Obviously, daily partitioning is the worst case. But it is not so clearly to the rest of the options. The results depend on the query. In the end I decided that the yearly partitioning in our case would be optimal. @rbiswas, thanks for the idea!

@rbiswas, I have couple of questions:

1. Given that I have less than 10,000 records per day would it be better to set orc.row.index.stride less than 12000?

2. In my table I have columns:

Order_date string (looks '2016-10-20'), Order_time timestamp (looks '2016-10-20 12:45:55')

The table is sorted by order_time as you recommended and has a bloom filter index. But filter

WHERE to_date(order_time) BETWEEN ... any period

works 15-20% slower than

WHERE order_date BETWEEN ... any period

Actually I expected that using column with bloom filter speeds up query execution. Why it did not happen?