Support Questions

- Cloudera Community

- Support

- Support Questions

- Re: Timestamp Format Issue (NiFi's PutDatabaseReco...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Float this Question for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

Timestamp Format Issue (NiFi's PutDatabaseRecord)

- Labels:

-

Apache NiFi

Created on

11-12-2019

10:03 AM

- last edited on

11-12-2019

10:07 PM

by

ask_bill_brooks

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

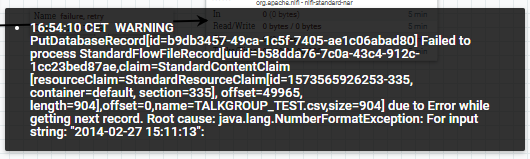

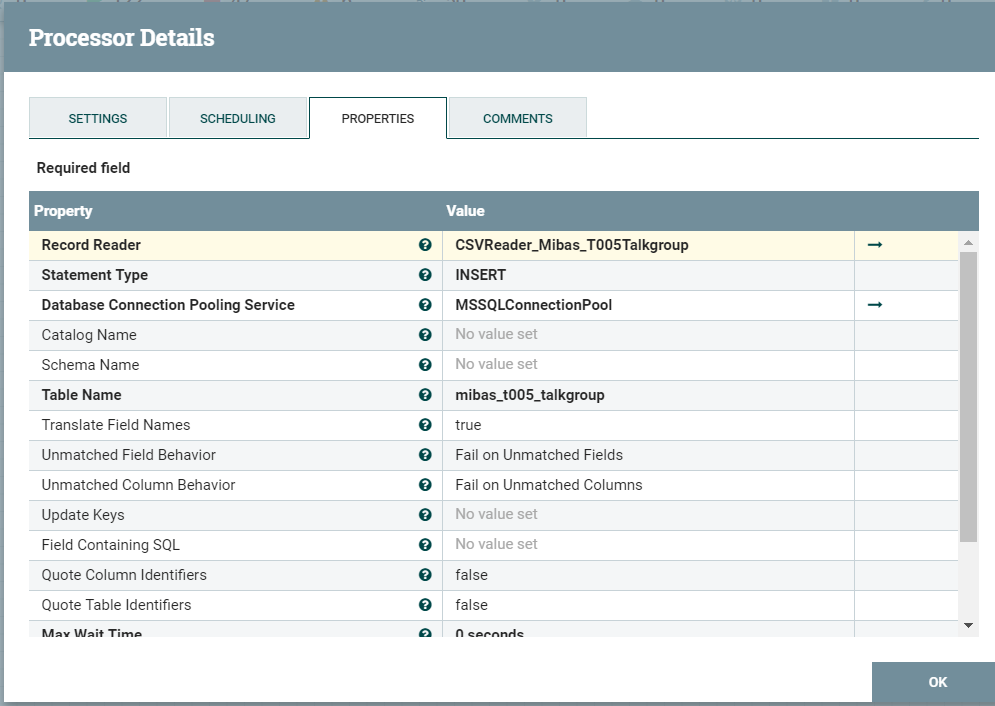

Getting error when inserting a csv-file into a MSSQL database using the PutDatabaseRecord (1.9.2) processor in Nifi.

ListFile → FetchFile → PutDatabaseRecord

The schema used:

{ "type" : "record", "name" : "mibas_t005_talkgroup", "fields" : [

{ "name" : "talkgroup_ref", "type" : ["null","long"] },

{ "name" : "fleet_ref", "type" : ["null","long"] },

{ "name" : "talkgroup_id", "type" : ["null","long"] },

{ "name" : "talkgroup_name", "type" : ["null","string"] },

{ "name" : "group_type", "type" : ["null","string"] },

{ "name" : "dispatcher_ref", "type" : ["null","string"] },

{ "name" : "multigroup_ref", "type" : ["null","string"] },

{ "name" : "owner_", "type" : ["null","string"] },

{ "name" : "fne_state", "type" : ["null","string"] },

{ "name" : "addl_attrvar", "type" : ["null","string"] },

{ "name" : "last_user_modified", "type" : ["null","string"] },

{ "name" : "time_stamp", "type" : ["null", { "type":"long", "logicaltype":"timestamp-millis"} ]},

{ "name" : "fne_oid", "type" : ["null","string"] }]}

Datatype in the table is DateTime

Created 12-04-2019 05:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The solution was changing the datatype definition in the schema from logicaltype to logicalType (Upper case T there for you). wtf!!!

Eg: *{ "name" : "time_stamp", "type" : ["null", { "type":"long", "logicalType":"timestamp-millis"}*

The irritating part is that the schema validates. No warning! But, if you mistakenly use String instead of the correct string (lower case S) or Type instead of type in you schema, your schema will not validate and you can correct it. But for logicalType no.

Created 11-14-2019 05:49 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It seems that the PutDatabaseRecord processor expects your timestamp to be numeric (probably milliseconds since the epoch).

I notice your screenshot contains a specification of the timestamp format, but I don't see this as an option in the documentation, so possibly that has no effect in this processor.

Here is the reference: https://nifi.apache.org/docs/nifi-docs/components/org.apache.nifi/nifi-standard-nar/1.9.2/org.apache...

----

Possible solution: Load or convert the timestamp to the right value.

A simple trick to check what the value should be, is by manually inserting a date into the table, loading it with Nifi and then inspecting the value.

(You can also test writing it back, that should confirm that the value works).

- Dennis Jaheruddin

If this answer helped, please mark it as 'solved' and/or if it is valuable for future readers please apply 'kudos'.

Created on 11-15-2019 02:42 AM - edited 11-15-2019 02:43 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for your reply!

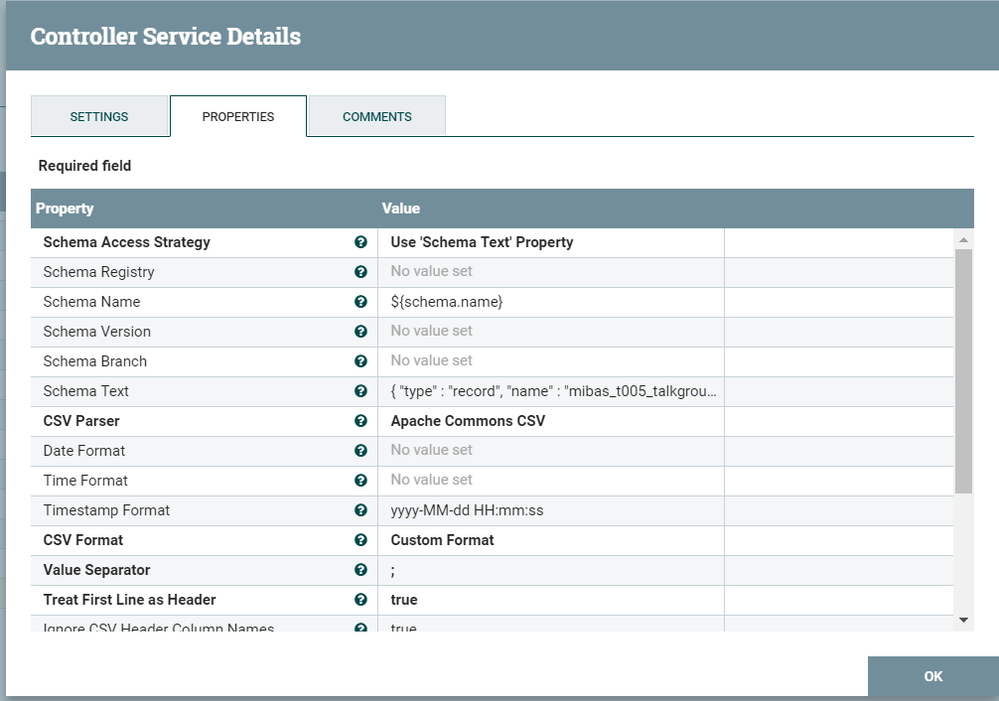

The timestamp format is an option in the CSVReader Controller Service, which is a valid service

record reader in the PutDatabaseRecord processor. The format used, is working fine in other workflows with the same setup.

Created 11-15-2019 05:00 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I see, as there are several steps here it is a bit hard to pinpoint the problem. Please check what the timestamp looks like just before you write it to the database. If it still looks like a string, presumably something went wrong with the reading.

- Dennis Jaheruddin

If this answer helped, please mark it as 'solved' and/or if it is valuable for future readers please apply 'kudos'.

Created 12-04-2019 05:45 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

The solution was changing the datatype definition in the schema from logicaltype to logicalType (Upper case T there for you). wtf!!!

Eg: *{ "name" : "time_stamp", "type" : ["null", { "type":"long", "logicalType":"timestamp-millis"}*

The irritating part is that the schema validates. No warning! But, if you mistakenly use String instead of the correct string (lower case S) or Type instead of type in you schema, your schema will not validate and you can correct it. But for logicalType no.

Created 12-04-2019 06:08 AM

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I understand this was hard to find.

One point that is not relevant now, but may help with the general understanding:

If you make a typo in the field names, avro will just ignore the actions of the right field and instead process the new field, which will be empty.

This is technically quite a different problem than when you actually make a typo in the Type, as those are possible to check against the list of possible types.

So detection of typos in field names is hard, and despite what the name suggests it seems LogicalType is actually a field name from Nifi perspective.

- Dennis Jaheruddin

If this answer helped, please mark it as 'solved' and/or if it is valuable for future readers please apply 'kudos'.