Trigger a Spark application from NiFi

- Labels: Apache NiFi, Apache Spark

Created 06-06-2018 09:01 AM

My requirement is that as soon as the source delivers the files, my Spark job should be triggered to process them.

I am currently thinking of doing the following:

1. The source pushes the files into the local directory /temp/abc.

2. NiFi's ListFile and FetchFile processors take care of ingesting those files into HDFS.

3. On the success relationship of PutHDFS, I am thinking of setting up ExecuteStreamCommand.

Could you please suggest whether there is a better approach? What would the configuration for ExecuteStreamCommand be?

Thanks in advance,

R

Created on 06-06-2018 10:14 AM - edited 08-17-2019 08:11 PM

If you only need to trigger the Spark application once the files have been ingested into HDFS, then using the ExecuteStreamCommand processor is the correct approach, because this processor accepts incoming connections and can trigger Spark applications.

If you are storing more than one file into HDFS, it would be better to use a MergeContent processor after the PutHDFS processor.

Configure the MergeContent processor to wait for a minimum number of entries, or use the Max Bin Age property (among others). Otherwise, if you connect the success relationship from PutHDFS directly to the ExecuteStreamCommand processor, the application is triggered from NiFi as soon as the first file is written to HDFS, without waiting for all the files to be stored in the HDFS directory.
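To make the batching idea concrete, here is a minimal Python sketch of the release rule described above. It is a simplified illustration and not NiFi's actual implementation: the `Bin` class and its field names are hypothetical, and the real MergeContent processor considers more thresholds (sizes, maximum entries, and so on).

```python
from dataclasses import dataclass, field


@dataclass
class Bin:
    """Hypothetical stand-in for a MergeContent bin (illustration only)."""
    min_entries: int              # mirrors the idea of a minimum number of entries
    max_bin_age_seconds: float    # mirrors the idea of Max Bin Age
    created_at: float             # timestamp when the bin was opened
    entries: list = field(default_factory=list)

    def add(self, filename):
        # Each file written by PutHDFS would land in the bin as one entry.
        self.entries.append(filename)

    def is_ready(self, now):
        # An empty bin never triggers anything.
        if not self.entries:
            return False
        reached_min = len(self.entries) >= self.min_entries
        too_old = (now - self.created_at) >= self.max_bin_age_seconds
        # Release when enough files have arrived, or when the bin has waited long enough.
        return reached_min or too_old
```

With `min_entries=3`, the first file alone does not release the bin, so the downstream trigger fires once per batch instead of once per file. The age limit ensures a partial batch is still processed eventually.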

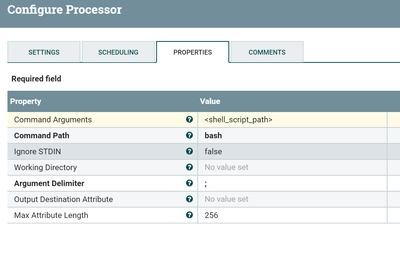

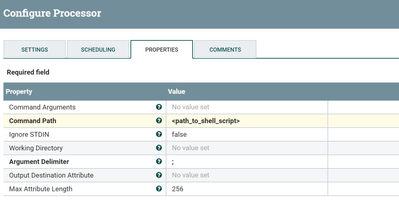

ExecuteStreamCommand processor configuration for triggering a shell script:
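The screenshot of the original configuration is not reproduced here. As a rough sketch of what the launched script might do, the following Python wrapper builds and runs a `spark-submit` command. The function names, the application path, and the input directory are assumptions for illustration; the thread does not specify them. `--master` and `--deploy-mode` are standard `spark-submit` options, but the values shown are only examples.

```python
import subprocess


def build_spark_submit_command(app_path, input_dir, master="yarn", deploy_mode="cluster"):
    """Assemble the argument list for spark-submit (paths are hypothetical)."""
    return [
        "spark-submit",
        "--master", master,
        "--deploy-mode", deploy_mode,
        app_path,      # the Spark application to run
        input_dir,     # passed through to the application as its first argument
    ]


def trigger_spark_job(app_path, input_dir):
    """Run spark-submit and return its exit code."""
    command = build_spark_submit_command(app_path, input_dir)
    # Passing a list (no shell=True) avoids shell quoting problems in paths.
    completed = subprocess.run(command, capture_output=True, text=True)
    return completed.returncode
```

A wrapper like this (or an equivalent shell script) would be what ExecuteStreamCommand launches through its Command Path and Command Arguments properties. A non-zero return code indicates that the submission failed.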

Created 06-06-2018 01:40 PM

@Shu thanks for your answer. I have one doubt about it: the Command Arguments property should not take the shell script as input. Instead, the shell script should go in the Command Path, which I have tried and tested.

Created on 06-06-2018 04:02 PM - edited 08-17-2019 08:11 PM

It depends on how your bashrc file is configured. If the PATH variable in your bashrc file includes the directory that your script is in, then you don't need to specify bash as the Command Path; the script itself can be the Command Path.
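The PATH point can be checked with a short Python sketch. It assumes the lookup that matters is the PATH seen by the process that launches the command; `choose_command` and its return convention are hypothetical and only mirror the two options discussed in this thread.

```python
import shutil


def choose_command(script_name, script_full_path, search_path=None):
    """Return a (command_path, command_arguments) pair for a hypothetical setup.

    If the script can be found on the search path and is executable, it can be
    used directly as the command; otherwise fall back to running it via bash.
    """
    # shutil.which uses the PATH environment variable when search_path is None.
    resolved = shutil.which(script_name, path=search_path)
    if resolved is not None:
        return resolved, ""
    return "bash", script_full_path
```

If the first element of the result is `bash`, the script was not found as an executable on the search path, so it has to be passed as an argument to the interpreter.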

Created 06-12-2018 11:25 AM

Did the answer help to resolve your issue?

Please take a moment to log in and click the Accept button below to accept the answer. That would be a great help to Community users in finding solutions quickly for these kinds of issues, and it closes this thread.

Created 06-06-2018 07:53 PM

@RAUI Another option is to build a Spark Streaming application that pulls those files directly from HDFS and processes them.

https://spark.apache.org/docs/latest/streaming-programming-guide.html#file-streams

HTH
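A minimal PySpark sketch of the file-stream approach from the linked guide might look like the following. The application name, batch interval, and the comma-separated record format are assumptions for illustration. The Spark imports are done inside the function so that the parsing helper can be exercised without a Spark installation.

```python
def parse_record(line):
    """Split one comma-separated line into trimmed fields (hypothetical format)."""
    return [field.strip() for field in line.split(",")]


def run_file_stream(input_dir, batch_seconds=30):
    """Watch input_dir for new files and print the parsed records per batch."""
    # Imported lazily: these require a Spark installation at run time.
    from pyspark import SparkContext
    from pyspark.streaming import StreamingContext

    sc = SparkContext(appName="hdfs-file-stream-sketch")
    ssc = StreamingContext(sc, batch_seconds)

    # textFileStream monitors the directory and reads files that appear in it.
    lines = ssc.textFileStream(input_dir)
    records = lines.map(parse_record)
    records.pprint()

    ssc.start()
    ssc.awaitTermination()
```

With this design, NiFi only needs to land the files in the HDFS directory. The long-running streaming job picks them up on its next batch, so no ExecuteStreamCommand trigger is required.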